Use Python in Notebook

Note

This feature is currently in preview.

The Python notebook is a new experience built on top of the Fabric notebook. It is a versatile, interactive tool for data analysis, visualization, and machine learning, and it provides a seamless development environment for writing and running Python code. This makes it an essential tool for data scientists, analysts, and BI developers, especially for exploration tasks that don't require big data or distributed computing.

A Python notebook offers the following:

Multiple built-in Python kernels: Python notebooks offer a pure Python coding environment without Spark. Two versions of the Python kernel, Python 3.10 and 3.11, are available by default, and native IPython features such as iPyWidget and magic commands are supported.

Cost effective: The new Python notebook saves cost by running on a single-node cluster with 2 vCores and 16 GB of memory by default. This setup ensures efficient resource utilization for data exploration projects with smaller data sizes.

Lakehouse and resources are natively available: The Fabric Lakehouse, together with the full functionality of the notebook's built-in resources, is available in the Python notebook. This lets users bring data into the Python notebook easily: drag and drop an item to get the code snippet.

Mix programming with T-SQL: The Python notebook offers an easy way to interact with Data Warehouse and SQL endpoints in the explorer. With the NotebookUtils data connector, you can run T-SQL scripts in the context of Python.

Support for popular data analytics libraries: Python notebooks come with preinstalled libraries such as DuckDB, Polars, and Scikit-learn, providing a comprehensive toolkit for data manipulation, analysis, and machine learning.

Advanced IntelliSense: The Python notebook uses Pylance as its IntelliSense engine, together with other Fabric-customized language services, to provide a state-of-the-art coding experience for notebook developers.

NotebookUtils and Semantic Link: Powerful API toolkits let you use Fabric and Power BI capabilities with a code-first experience.

Rich visualization capabilities: In addition to the popular rich DataFrame preview "Table" function and the "Chart" function, popular visualization libraries such as Matplotlib, Seaborn, and Plotly are supported. The PowerBIClient also supports these libraries to help users better understand data patterns and insights.

Common capabilities for Fabric Notebook: All notebook-level features apply to Python notebooks, such as editing features, AutoSave, collaboration, sharing and permission management, Git integration, import/export, and so on.

Full-stack data science capabilities: The advanced low-code toolkit Data Wrangler, the machine learning framework MLflow, and the powerful Copilot are all available in the Python notebook.

How to access Python notebooks

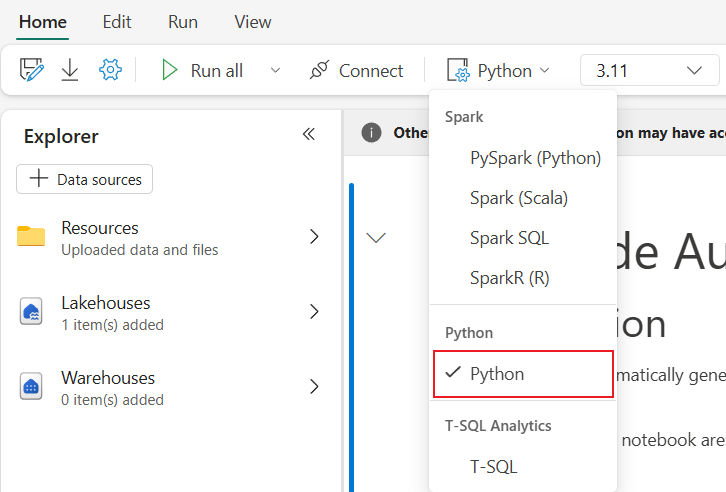

After opening a Fabric notebook, you can switch to Python in the language dropdown menu on the Home tab and convert the entire notebook setup to Python.

Most common features are supported at the notebook level. For detailed usage, see How to use Microsoft Fabric notebooks and Develop, execute, and manage Microsoft Fabric notebooks. Listed here are some key capabilities that are specific to Python scenarios.

Run Python notebooks

The Python notebook supports multiple job execution modes:

- Interactive run: You can run a Python notebook interactively like a native Jupyter notebook.

- Schedule run: You can use the lightweight scheduler on the notebook settings page to run Python notebooks as a batch job.

- Pipeline run: You can orchestrate Python notebooks as notebook activities in Data Pipeline. A snapshot is generated after the job execution.

- Reference run: You can use notebookutils.notebook.run() or notebookutils.notebook.runMultiple() to reference-run Python notebooks from another Python notebook as a batch job. A snapshot is generated after the reference run finishes.

- Public API run: You can schedule your Python notebook run with the notebook run public API. Make sure the language and kernel properties in the notebook metadata of the public API payload are set properly.
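As a rough illustration of the reference run listed above, the following sketch wraps notebookutils.notebook.run() in a small helper. The helper name `run_child_notebook`, the notebook name `ChildNotebook`, and the `runner` parameter are hypothetical. The positional arguments (notebook name, timeout in seconds, parameter map) are an assumption and should be confirmed with notebookutils.notebook.help(). The `runner` parameter exists only so that the helper can be tried outside Fabric, where notebookutils cannot be imported.

```python
from typing import Any, Callable, Optional


def run_child_notebook(
    name: str,
    timeout_seconds: int = 90,
    params: Optional[dict] = None,
    runner: Optional[Callable[..., Any]] = None,
) -> Any:
    """Run another notebook by reference and return whatever the runner returns."""
    if runner is None:
        # Only importable inside a Fabric notebook session.
        import notebookutils

        runner = notebookutils.notebook.run
    # Assumed argument order: notebook name, timeout in seconds, parameter map.
    return runner(name, timeout_seconds, params or {})


def fake_runner(name: str, timeout_seconds: int, params: dict) -> str:
    """Hypothetical stand-in used outside Fabric."""
    return f"{name} finished with {len(params)} parameter(s)"


result = run_child_notebook(
    "ChildNotebook", 120, {"run_date": "2024-01-01"}, runner=fake_runner
)
print(result)
```

Inside a Fabric session the `runner` argument would simply be omitted, so the call falls through to notebookutils.notebook.run().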

You can monitor the Python notebook job run details on the ribbon tab Run -> View all runs.

Data interaction

You can interact with Lakehouse, Warehouses, SQL endpoints, and built-in resource folders in a Python notebook.

Lakehouse interaction

You can set a Lakehouse as the default, or you can add multiple Lakehouses to explore and use in notebooks.

If you aren't familiar with reading data objects such as Delta tables, try dragging and dropping the file or Delta table onto the notebook canvas, or use Load data in the object's dropdown menu. The notebook automatically inserts a code snippet into the code cell that reads the target data object.

Note

If you encounter an out-of-memory (OOM) error when loading large volumes of data, try using a DuckDB, Polars, or PyArrow DataFrame instead of pandas.

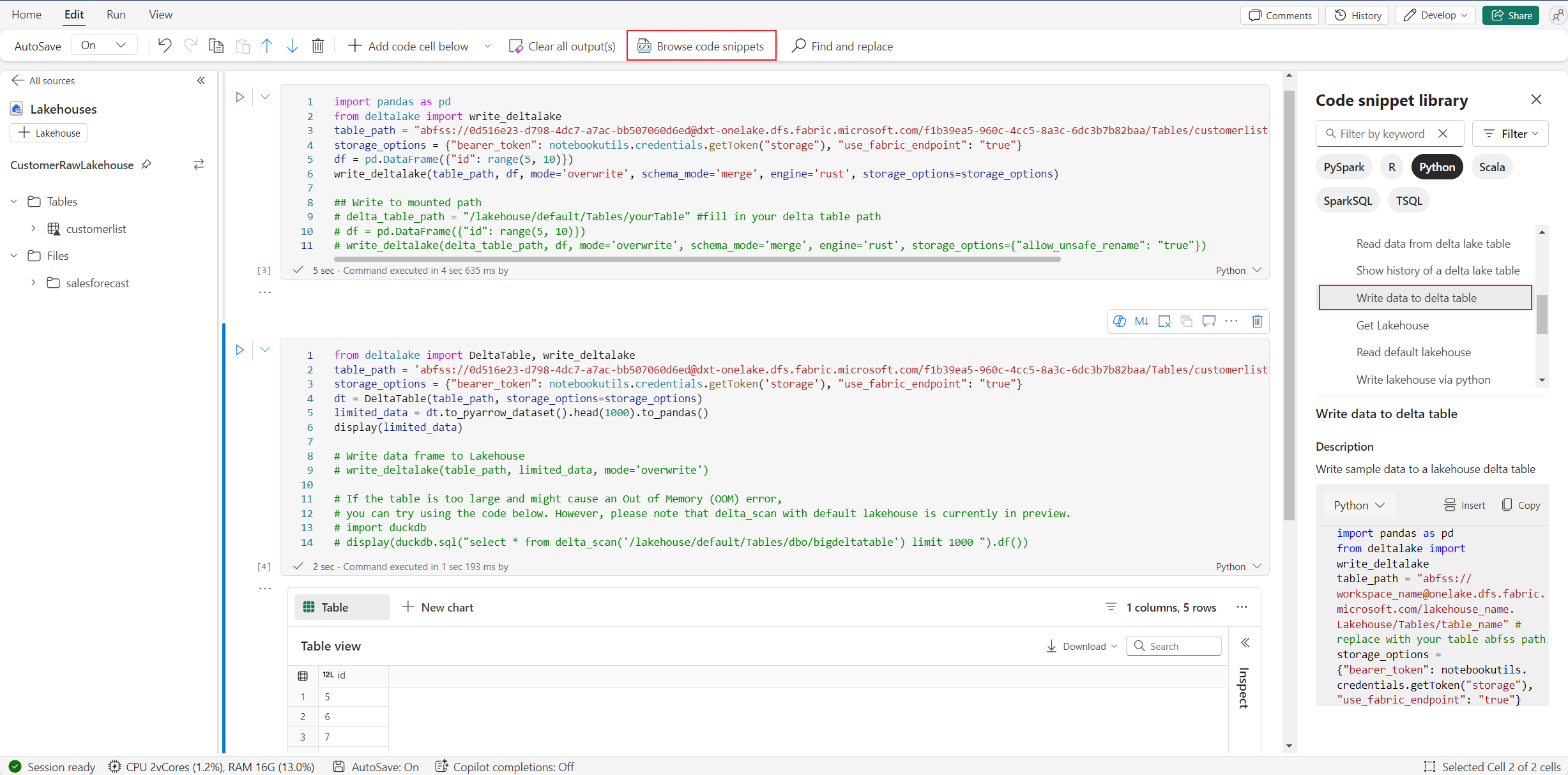

You can find the Lakehouse write operation under Browse code snippets -> Write data to delta table.

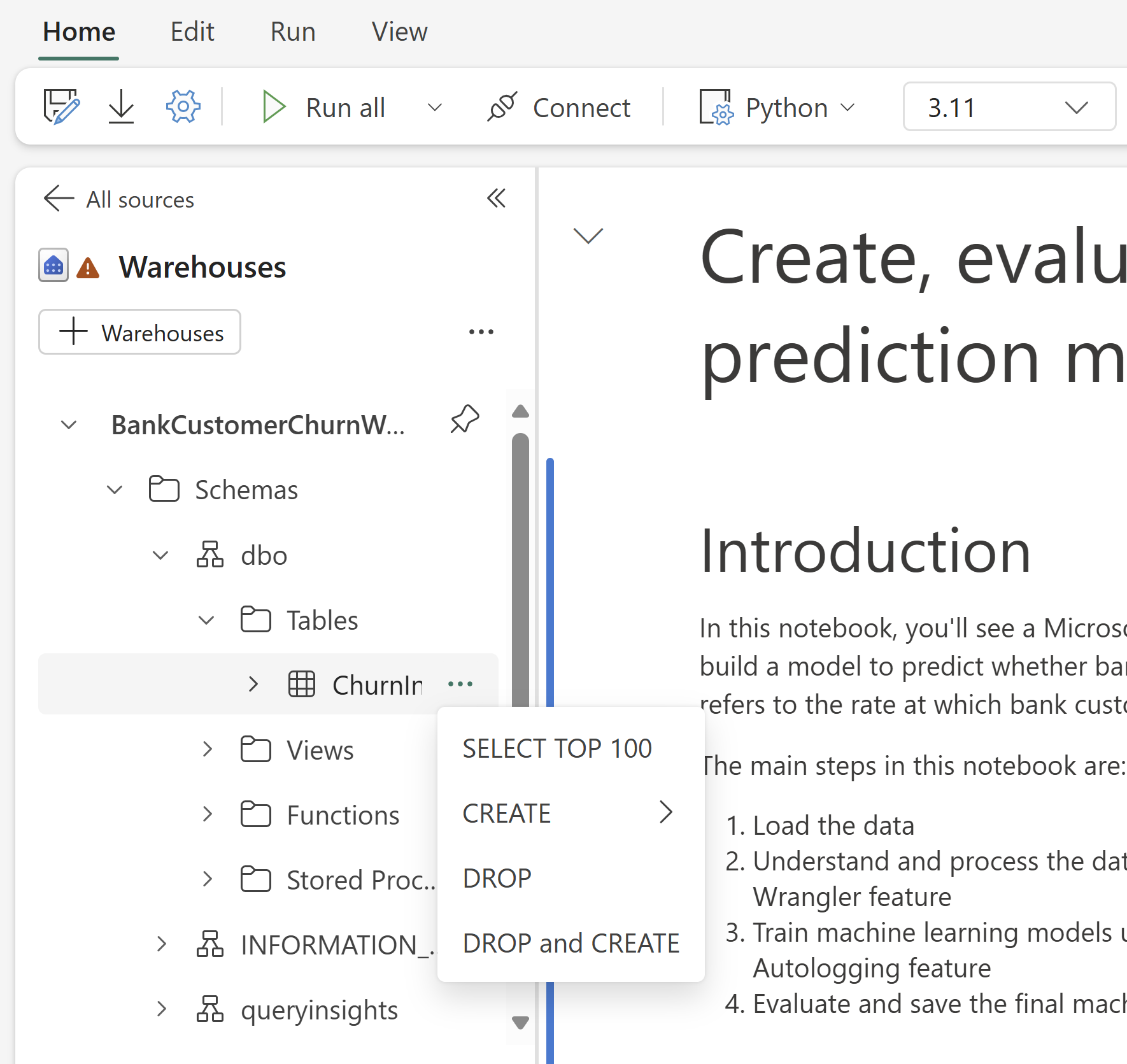

Warehouse interaction and mixed programming with T-SQL

You can add Data Warehouses or SQL endpoints from the Warehouse explorer of the notebook. Similarly, you can drag and drop tables onto the notebook canvas, or use the shortcut operations in the table's dropdown menu. The notebook automatically generates a code snippet for you. You can use the notebookutils.data utilities to connect to Warehouses and query the data with T-SQL statements in the Python context.

Note

SQL endpoints are read-only here.

Notebook resources folder

The built-in Notebook resources folder is natively available in the Python notebook. You can interact with the files in the built-in resources folder using Python code, as if you were working with your local file system. The Environment resource folder is currently not supported.
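Because the resources folder behaves like a local file system, ordinary file APIs should be enough to work with it. The sketch below uses pathlib for a write-and-read round trip. The relative path `builtin` for the built-in resources folder is an assumption, and the helper name `save_and_reload` and the file name `notes.txt` are hypothetical. Outside Fabric the example points at a temporary directory instead.

```python
import tempfile
from pathlib import Path


def save_and_reload(text: str, resource_dir: str = "builtin") -> str:
    """Write a small text file into the resource folder and read it back."""
    folder = Path(resource_dir)  # "builtin" is an assumed relative path
    folder.mkdir(parents=True, exist_ok=True)
    target = folder / "notes.txt"  # hypothetical file name
    target.write_text(text, encoding="utf-8")
    return target.read_text(encoding="utf-8")


# Outside Fabric, use a temporary directory in place of the resources folder.
with tempfile.TemporaryDirectory() as tmp:
    roundtrip = save_and_reload("hello resources", resource_dir=tmp)

print(roundtrip)
```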

Kernel operations

The Python notebook currently supports two built-in kernels, Python 3.10 and Python 3.11. The default kernel is Python 3.11, and you can easily switch between them.

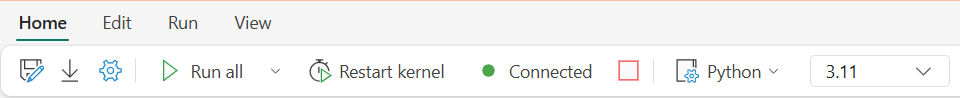

On the Home tab of the ribbon, you can interrupt, restart, or switch the kernel. Interrupting the kernel in Python notebooks is the same as canceling a cell in a Spark notebook.

An abnormal kernel exit interrupts code execution and loses variables, but it doesn't stop the notebook session.

Some commands can cause the kernel to die, for example quit() and exit().
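One way to avoid calling quit() or exit() is to put the cell logic in a function and return early, which ends the work without touching the kernel. This is only a sketch of that pattern; the function name `process` and its messages are hypothetical.

```python
def process(rows: list) -> str:
    """Stop early by returning, which leaves the kernel and its variables intact."""
    if not rows:
        # Calling quit() or exit() here would terminate the kernel instead.
        return "skipped: no input rows"
    return f"processed {len(rows)} row(s)"


status_empty = process([])
status_full = process([1, 2, 3])
print(status_empty)
print(status_full)
```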

Library management

You can use the %pip and %conda commands for inline installations. The commands support both public libraries and custom libraries.

For custom libraries, you can upload the library files to the built-in resources folder. Several library types are supported, such as .whl, .jar, .dll, .py, and so on. Drag and drop the file, and the code snippet is generated automatically.

You might need to restart the kernel to use the updated packages.
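After an inline install, it can help to check which version of a package the running kernel actually sees before deciding whether a restart is needed. The sketch below uses the standard library's importlib.metadata for that check. The helper name `installed_version` and the package name in the example are hypothetical.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# A package name that is not expected to exist, to show the "not installed" case.
missing = installed_version("surely-not-installed-package-xyz-123")
print(missing)
```

In a notebook, the same helper could be called in the cell after a %pip install to confirm that the expected version is reported.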

Session configuration magic command

Similar to personalizing a Spark session configuration in a notebook, you can also use %%configure in the Python notebook. The Python notebook supports customizing the compute node size, the mount points, and the default lakehouse of the notebook session. These settings can be used in both interactive notebook runs and pipeline notebook activities. We recommend using the %%configure command at the beginning of your notebook; otherwise, you must restart the notebook session for the settings to take effect.

Here are the supported properties in the Python notebook %%configure:

    %%configure
    {
        "vCores": 4, // Recommended values: [4, 8, 16, 32, 64], Fabric will allocate matched memory according to the specified vCores.
        "defaultLakehouse": {
            // Overwrites the default lakehouse for the current session
            "name": "<lakehouse-name>",
            "id": "<(optional) lakehouse-id>",
            "workspaceId": "<(optional) workspace-id-that-contains-the-lakehouse>" // Add workspace ID if it's from another workspace
        },
        "mountPoints": [
            {
                "mountPoint": "/myMountPoint",
                "source": "abfs[s]://<file_system>@<account_name>.dfs.core.windows.net/<path>"
            },
            {
                "mountPoint": "/myMountPoint1",
                "source": "abfs[s]://<file_system>@<account_name>.dfs.core.windows.net/<path1>"
            }
        ]
    }

You can see the compute resource update on the notebook status bar and monitor the CPU and memory usage of the compute node in real time.
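When the same settings are reused across notebooks, the %%configure body can be generated rather than typed by hand. The following sketch builds the JSON body with the properties shown above. The helper name `build_configure_body` and the lakehouse name `sales_lakehouse` are hypothetical, and treating the recommended vCores values as a strict allow-list is a choice made here for illustration, stricter than the documentation requires.

```python
import json
from typing import Optional

# Values listed as recommended in the %%configure comment above.
RECOMMENDED_VCORES = (4, 8, 16, 32, 64)


def build_configure_body(
    v_cores: int,
    lakehouse_name: Optional[str] = None,
    mounts: Optional[dict] = None,
) -> str:
    """Return a JSON string that can be pasted below a %%configure line."""
    if v_cores not in RECOMMENDED_VCORES:
        raise ValueError(f"vCores should be one of {RECOMMENDED_VCORES}, got {v_cores}")
    config: dict = {"vCores": v_cores}
    if lakehouse_name:
        config["defaultLakehouse"] = {"name": lakehouse_name}
    if mounts:
        # One entry per mount point, mapping the mount path to its source URI.
        config["mountPoints"] = [
            {"mountPoint": mount_point, "source": source}
            for mount_point, source in mounts.items()
        ]
    return json.dumps(config, indent=4)


body = build_configure_body(
    8,
    "sales_lakehouse",
    {"/myMountPoint": "abfss://<file_system>@<account_name>.dfs.core.windows.net/<path>"},
)
print("%%configure")
print(body)
```

The printed text is plain JSON without comments, so it can be copied into the first cell of a notebook as-is.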

NotebookUtils

Notebook Utilities (NotebookUtils) is a built-in package that helps you easily perform common tasks in Fabric Notebook. It is preinstalled on the Python runtime. You can use NotebookUtils to work with file systems, get environment variables, chain notebooks together, access external storage, and work with secrets.

You can use notebookutils.help() to list the available APIs and get help with methods, or refer to the NotebookUtils documentation.

Data utilities

Note

This feature is currently in preview.

You can use the notebookutils.data utilities to connect to the provided data source and then read and query data with T-SQL statements.

Run the following command to get an overview of the available methods:

notebookutils.data.help()

Output:

    Help on module notebookutils.data in notebookutils:

    NAME
        notebookutils.data - Utility for read/query data from connected data sources in Fabric

    FUNCTIONS
        connect_to_artifact(artifact: str, workspace: str = '', artifact_type: str = '', **kwargs)
            Establishes and returns an ODBC connection to a specified artifact within a workspace
            for subsequent data queries using T-SQL.

            :param artifact: The name or ID of the artifact to connect to.
            :param workspace: Optional; The workspace in which the provided artifact is located, if not provided,
                              use the workspace where the current notebook is located.
            :param artifactType: Optional; The type of the artifact, Currently supported type are Lakehouse, Warehouse and MirroredDatabase.
                                 If not provided, the method will try to determine the type automatically.
            :param **kwargs Optional: Additional optional configuration. Supported keys include:
                - tds_endpoint : Allow user to specify a custom TDS endpoint to use for connection.
            :return: A connection object to the specified artifact.

            :raises UnsupportedArtifactException: If the specified artifact type is not supported to connect.
            :raises ArtifactNotFoundException: If the specified artifact is not found within the workspace.

            Examples:
                sql_query = "SELECT DB_NAME()"
                with notebookutils.data.connect_to_artifact("ARTIFACT_NAME_OR_ID", "WORKSPACE_ID", "ARTIFACT_TYPE") as conn:
                    df = conn.query(sql_query)
                    display(df)

        help(method_name: str = '') -> None
            Provides help for the notebookutils.data module or the specified method.

            Examples:
                notebookutils.data.help()
                notebookutils.data.help("connect_to_artifact")
            :param method_name: The name of the method to get help with.

    DATA
        __all__ = ['help', 'connect_to_artifact']

    FILE
        /home/trusted-service-user/jupyter-env/python3.10/lib/python3.10/site-packages/notebookutils/data.py

Query data from a lakehouse

    conn = notebookutils.data.connect_to_artifact("lakehouse_name_or_id", "optional_workspace_id", "optional_lakehouse_type")
    df = conn.query("SELECT * FROM sys.schemas;")

Query data from a warehouse

    conn = notebookutils.data.connect_to_artifact("warehouse_name_or_id", "optional_workspace_id", "optional_warehouse_type")
    df = conn.query("SELECT * FROM sys.schemas;")
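The help output above shows the connection being used as a context manager. A small wrapper along those lines might look like the sketch below. The helper name `query_artifact`, the `connect` parameter, the artifact name `my_warehouse`, and the `FakeConnection` stand-in are all hypothetical; the stand-in exists only so that the pattern can be tried outside Fabric, where notebookutils cannot be imported.

```python
from typing import Any, Callable, Optional


def query_artifact(
    sql: str,
    artifact: str,
    workspace: str = "",
    connect: Optional[Callable[..., Any]] = None,
) -> Any:
    """Open a connection to an artifact, run one T-SQL query, and return the result."""
    if connect is None:
        # Only importable inside a Fabric Python notebook session.
        import notebookutils

        connect = notebookutils.data.connect_to_artifact
    # The connection is used as a context manager, as in the help output's example.
    with connect(artifact, workspace) as conn:
        return conn.query(sql)


class FakeConnection:
    """Hypothetical stand-in that echoes the query instead of running it."""

    def __enter__(self) -> "FakeConnection":
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        return False  # do not swallow exceptions

    def query(self, sql: str) -> list:
        return [{"sql": sql}]


def fake_connect(artifact: str, workspace: str = "") -> FakeConnection:
    return FakeConnection()


rows = query_artifact("SELECT * FROM sys.schemas;", "my_warehouse", connect=fake_connect)
print(rows)
```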

Note

The data utilities in NotebookUtils are currently available only in the Python notebook.

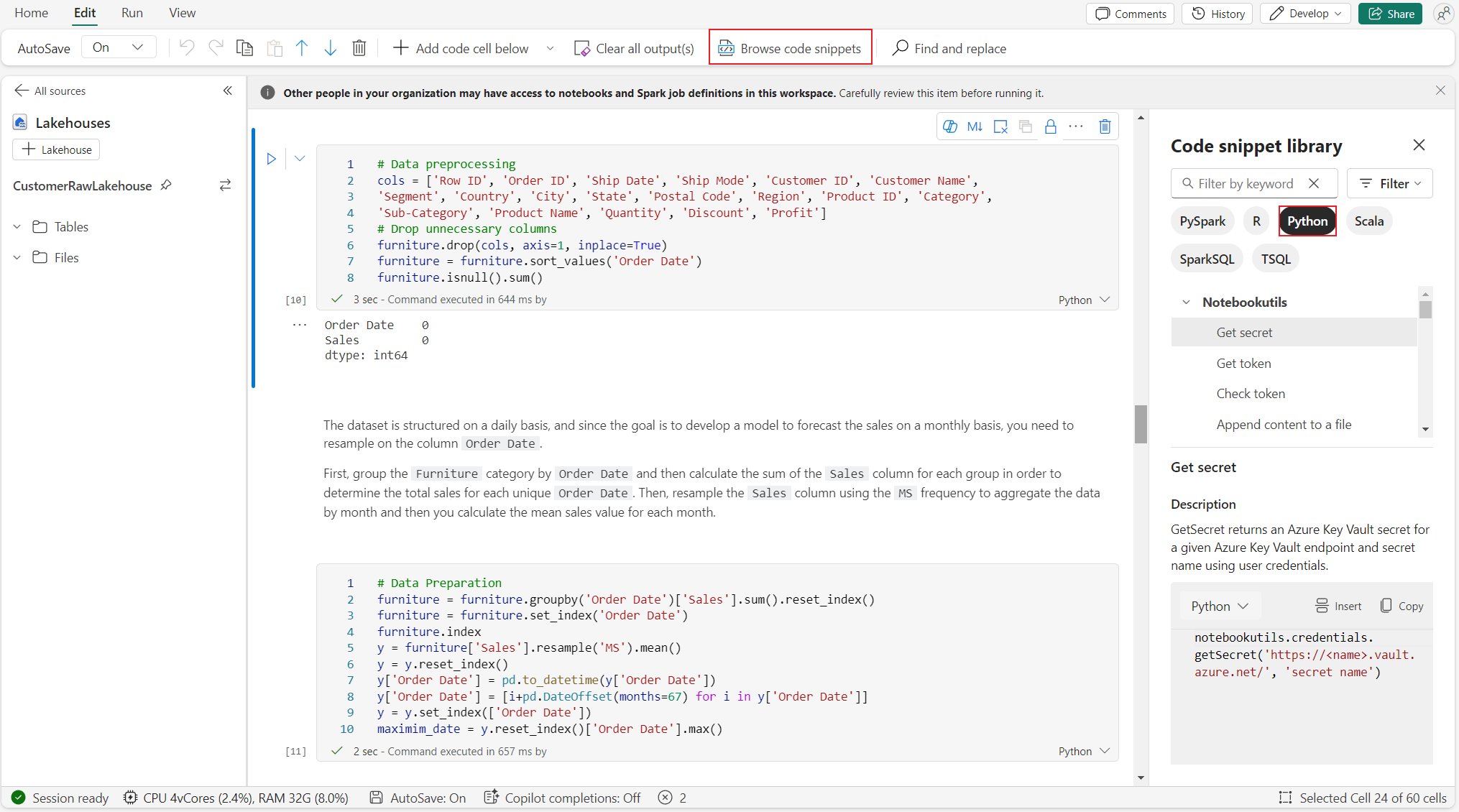

Browse code snippets

You can find useful Python code snippets on the Edit tab -> Browse code snippets; new Python samples are now available. You can learn from the Python code snippets to start exploring the notebook.

Semantic link

Semantic link is a feature that lets you establish a connection between semantic models and Synapse Data Science in Microsoft Fabric. It is natively supported in the Python notebook. BI engineers and Power BI developers can use Semantic link to connect to and manage semantic models easily. Read the public documentation to learn more about Semantic link.

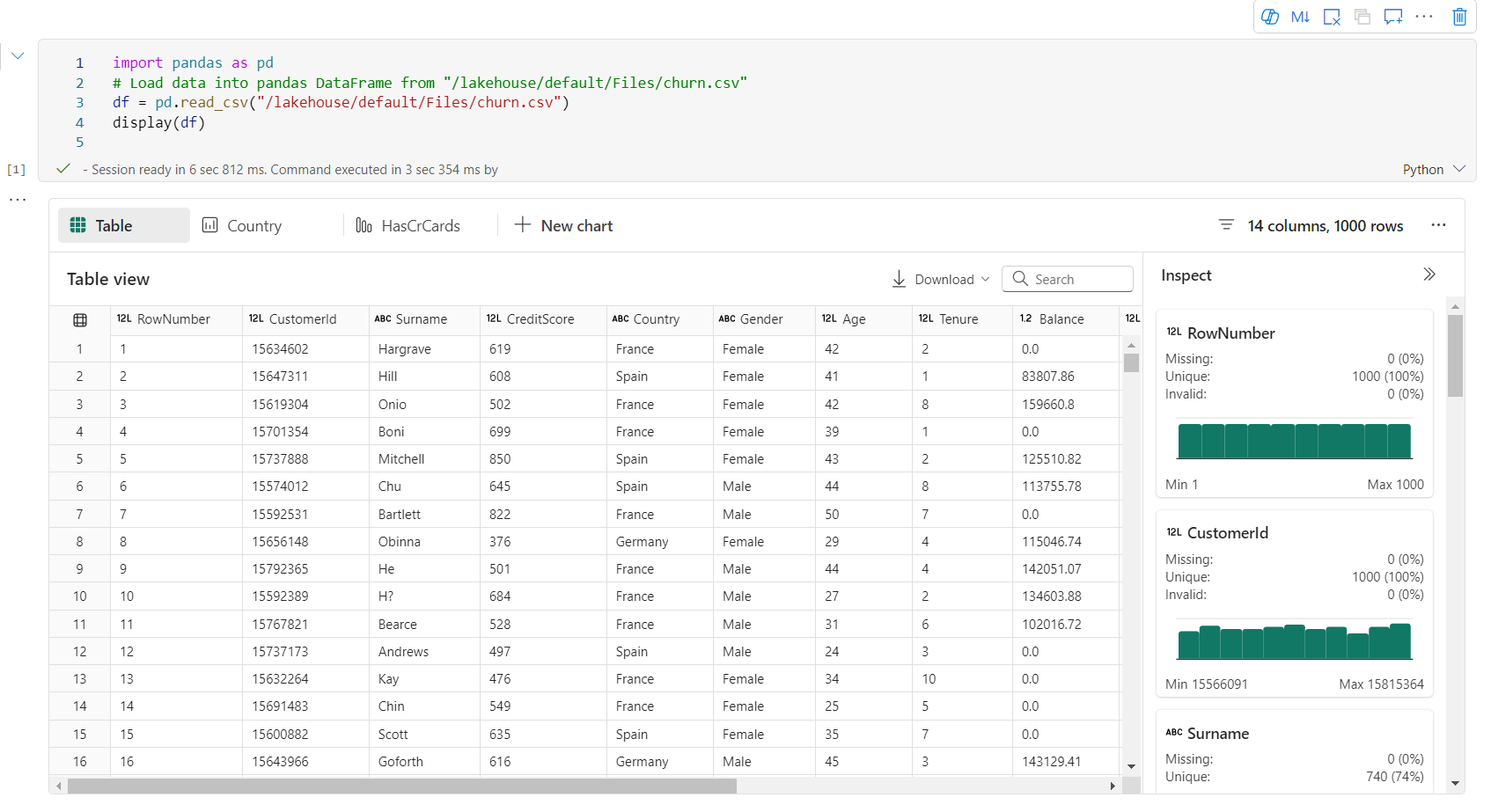

Visualization

Besides drawing charts with libraries, you can use the built-in visualization function to turn DataFrames into rich data visualizations. Apply the display() function to a DataFrame to produce the rich DataFrame table view and chart view.

Note

Chart configurations are persisted in the Python notebook: after you rerun the code cell, the saved charts are kept as long as the target DataFrame schema hasn't changed.

Code IntelliSense

The Python notebook integrates Pylance to enhance the Python coding experience. Pylance is the default language service support for Python in Visual Studio Code. It provides many easy-to-use features such as keyword highlighting, quick info, code completion, parameter info, and syntax error detection. In addition, Pylance performs better when the notebook is long.

Data Science capabilities

Visit the Data Science documentation in Microsoft Fabric to learn more about data science and AI in Fabric. Listed here are some key data science features that are natively supported in the Python notebook.

Data Wrangler: Data Wrangler is a notebook-based tool that provides an immersive interface for exploratory data analysis. It combines a grid-like data display with dynamic summary statistics, built-in visualizations, and a library of common data cleaning operations. It supports data cleaning, data transformation, and integration, which accelerates data preparation.

MLflow: A machine learning experiment is the primary unit of organization and control for all related machine learning runs. A run corresponds to a single execution of model code.

Fabric Auto Logging: Synapse Data Science in Microsoft Fabric includes autologging, which significantly reduces the amount of code required to automatically log the parameters, metrics, and items of a machine learning model during training.

Autologging extends the MLflow Tracking capabilities. It can capture various metrics, including accuracy, error, F1 score, and custom metrics that you define. With autologging, developers and data scientists can easily track and compare the performance of different models and experiments without manual tracking.

Copilot: Copilot for Data Science and Data Engineering notebooks is an AI assistant that helps you analyze and visualize data. It works with lakehouse tables, Power BI datasets, and pandas/Spark DataFrames, providing answers and code snippets directly in the notebook. You can use the Copilot chat panel and Chat-magics in the notebook, and the AI provides responses or code that you can copy into your notebook.

Known limitations of the public preview

A live pool isn't guaranteed for every Python notebook run. Session start can take up to 3 minutes if the notebook run doesn't hit the live pool. As Python notebook usage grows, our intelligent pooling methods gradually increase the live pool allocation to meet demand.

Environment integration isn't available in the Python notebook during the public preview.

Setting the session timeout isn't available for now.

Copilot might generate a Spark statement, which might not be executable in a Python notebook.

Currently, Copilot in the Python notebook isn't fully supported in several regions. The deployment process is still ongoing, and support will continue to roll out to more regions.