Azure SQL データベースのデータにインデックスを付ける

この記事では、インデクサーを構成する方法について学習します。これにより、Azure SQL Database または Azure SQL マネージド インスタンスからコンテンツをインポートし、Azure AI Search でその検索ができるようになります。

この記事では、インデクサーの作成に関する記事を補足するため、特に Azure SQL について説明します。 Azure portal と REST API を使用して、すべてのインデクサーに共通する 3 部構成のワークフロー (データ ソースの作成、インデックスの作成、インデクサーの作成) を示します。 データ抽出は、インデクサーの作成要求を送信したときに発生します。

この記事では、次の内容についても説明します。

増分インデックス作成を設定できるようにするための、Azure SQL インデクサーによってサポートされる変更検出ポリシーの説明。

機能の互換性に関する質問への回答に関するよく寄せられる質問 (FAQ) のセクション。

Note

インデクサーでは、リアルタイムのデータ同期は実行できません。 インデクサーがテーブルのインデックスを作成し直すのに、最大で 5 分かかります。 データの更新をより早くインデックスに反映する必要がある場合は、更新された行を直接プッシュすることをお勧めします。

前提条件

単一のテーブルまたはビューにデータを含む Azure SQL データベース、またはパブリック エンドポイントがされたSQL Managed Instance。

データが大きい場合、または SQL のネイティブな変更検出機能を使用した増分インデックス作成が必要な場合は、テーブルを使用します。

複数のテーブルのデータを統合する必要がある場合は、ビューを使用します。 大きなビューは、SQL インデクサーには適していません。 回避策として、Azure AI Search インデックスに取り込むために使用する、専用の新しいテーブルを作成します。 SQL 統合変更追跡を使用して、新規および変更された行を追跡できます。これは、高ウォーター マークよりも実装するのが容易です。

読み取りアクセス許可。 Azure AI Search では、接続文字列にユーザー名とパスワードを指定する SQL Server 認証がサポートされています。 または、マネージド ID を設定し、Azure ロールを使用することもできます。

この記事の中の例をやり遂げるには、Azure portal または REST クライアントが必要です。 Azure portalを使用している場合は、すべての公衆ネットワークへのアクセスが Azure SQL ファイアウォールで有効になっていること、およびクライアントが受信規則によってアクセス可能であることを確認します。 ローカルで実行される REST クライアントの場合は、デバイスの IP アドレスからの受信アクセスを許可するように SQL Server ファイアウォールを構成します。 Azure SQL インデクサーを作成するためのその他のアプローチには、Azure SDK があります。

サンプル データで試す

次の手順を使用して、テスト目的で Azure SQL Database 内にテーブルを作成して読み込みます。

GitHub から hotels-azure-sql.sql をダウンロードして、サンプル ホテル データ セットのサブセットを含むテーブルを Azure SQL Database 上に作成します。

Azure portal にサインインして、Azure SQL データベースおよびデータベース サーバーを作成します。 SQL Server 認証と Microsoft Entra ID 認証の両方を構成することを検討してください。 Azure 上でロールを構成するアクセス許可がない場合は、回避策として SQL 認証を使用できます。

ローカル デバイスからのすべての受信要求に対してサーバー ファイアウォールを構成します。

Azure SQL データベース上で、[クエリ エディター (プレビュー)] を選択してから、[新しいクエリ] を選択します。

貼り付けてから、hotels テーブルを作成する T-SQL スクリプトを実行します。

CREATE TABLE tbl_hotels ( Id TINYINT PRIMARY KEY, Modified DateTime NULL DEFAULT '0000-00-00 00:00:00', IsDeleted TINYINT, HotelName VARCHAR(40), Category VARCHAR(20), City VARCHAR(30), State VARCHAR(4), Description VARCHAR(500) );貼り付けてから、レコードを挿入する T-SQL スクリプトを実行します。

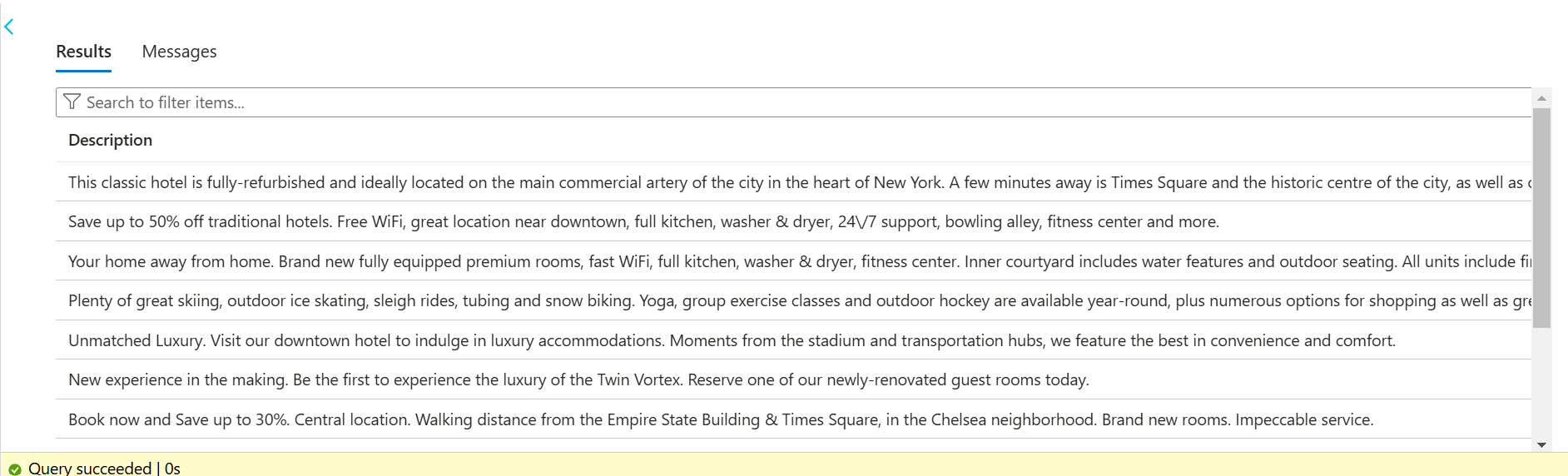

-- Insert rows INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (1, CURRENT_TIMESTAMP, 0, 'Stay-Kay City Hotel', 'Boutique', 'New York', 'NY', 'This classic hotel is fully-refurbished and ideally located on the main commercial artery of the city in the heart of New York. A few minutes away is Times Square and the historic centre of the city, as well as other places of interest that make New York one of Americas most attractive and cosmopolitan cities.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (10, CURRENT_TIMESTAMP, 0, 'Countryside Hotel', 'Extended-Stay', 'Durham', 'NC', 'Save up to 50% off traditional hotels. Free WiFi, great location near downtown, full kitchen, washer & dryer, 24\/7 support, bowling alley, fitness center and more.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (11, CURRENT_TIMESTAMP, 0, 'Royal Cottage Resort', 'Extended-Stay', 'Bothell', 'WA', 'Your home away from home. Brand new fully equipped premium rooms, fast WiFi, full kitchen, washer & dryer, fitness center. Inner courtyard includes water features and outdoor seating. All units include fireplaces and small outdoor balconies. Pets accepted.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (12, CURRENT_TIMESTAMP, 0, 'Winter Panorama Resort', 'Resort and Spa', 'Wilsonville', 'OR', 'Plenty of great skiing, outdoor ice skating, sleigh rides, tubing and snow biking. Yoga, group exercise classes and outdoor hockey are available year-round, plus numerous options for shopping as well as great spa services. Newly-renovated with large rooms, free 24-hr airport shuttle & a new restaurant. Rooms\/suites offer mini-fridges & 49-inch HDTVs.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (13, CURRENT_TIMESTAMP, 0, 'Luxury Lion Resort', 'Luxury', 'St. Louis', 'MO', 'Unmatched Luxury. Visit our downtown hotel to indulge in luxury accommodations. Moments from the stadium and transportation hubs, we feature the best in convenience and comfort.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (14, CURRENT_TIMESTAMP, 0, 'Twin Vortex Hotel', 'Luxury', 'Dallas', 'TX', 'New experience in the making. Be the first to experience the luxury of the Twin Vortex. Reserve one of our newly-renovated guest rooms today.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (15, CURRENT_TIMESTAMP, 0, 'By the Market Hotel', 'Budget', 'New York', 'NY', 'Book now and Save up to 30%. Central location. Walking distance from the Empire State Building & Times Square, in the Chelsea neighborhood. Brand new rooms. Impeccable service.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (16, CURRENT_TIMESTAMP, 0, 'Double Sanctuary Resort', 'Resort and Spa', 'Seattle', 'WA', '5 Star Luxury Hotel - Biggest Rooms in the city. #1 Hotel in the area listed by Traveler magazine. Free WiFi, Flexible check in\/out, Fitness Center & espresso in room.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (17, CURRENT_TIMESTAMP, 0, 'City Skyline Antiquity Hotel', 'Boutique', 'New York', 'NY', 'In vogue since 1888, the Antiquity Hotel takes you back to bygone era. From the crystal chandeliers that adorn the Green Room, to the arched ceilings of the Grand Hall, the elegance of old New York beckons. Elevate Your Experience. Upgrade to a premiere city skyline view for less, where old world charm combines with dramatic views of the city, local cathedral and midtown.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (18, CURRENT_TIMESTAMP, 0, 'Ocean Water Resort & Spa', 'Luxury', 'Tampa', 'FL', 'New Luxury Hotel for the vacation of a lifetime. Bay views from every room, location near the pier, rooftop pool, waterfront dining & more.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (19, CURRENT_TIMESTAMP, 0, 'Economy Universe Motel', 'Budget', 'Redmond', 'WA', 'Local, family-run hotel in bustling downtown Redmond. We are a pet-friendly establishment, near expansive Marymoor park, haven to pet owners, joggers, and sports enthusiasts. Close to the highway and just a short drive away from major cities.'); INSERT INTO tbl_hotels (Id, Modified, IsDeleted, HotelName, Category, City, State, Description) VALUES (20, CURRENT_TIMESTAMP, 0, 'Delete Me Hotel', 'Unknown', 'Nowhere', 'XX', 'Test-case row for change detection and delete detection . For change detection, modify any value, and then re-run the indexer. For soft-delete, change IsDelete from zero to a one, and then re-run the indexer.');クエリを実行して、アップロードを確認します。

SELECT Description FROM tbl_hotels;

次のスクリーンショットのような結果が表示されます。

"Description" フィールドには、最も詳細なコンテンツが表示されます。 フルテキスト検索とオプションのベクトル化のためには、このフィールドをターゲットにする必要があります。

これでデータベース テーブルが作成されたので、Azure portal、REST クライアント、または Azure SDK を使用して、データにインデックスを付けることができます。

ヒント

サンプルのコンテンツおよびコードが用意された別のリソースは、Azure-Samples/SQL-AI-samples にあります。

Azure portal を使用する

データ インポート ウィザードまたはデータのインポートとベクトル化ウィザードのいずれかを使用して、SQL データベース テーブルまたはビューのインデックス作成を自動化できます。 データ ソースの構成は、どちらのウィザードも似ています。

[データへの接続] 上で、データ ソースの種類が [Azure SQL Database] または [SQL データベース] であることを選択または確認します。

データ ソース名は、Azure AI 検索内のデータ ソース接続オブジェクトを参照します。 ベクトル ウィザードを使用する場合、そのデータ ソース名はウィザード ワークフローの最後に、指定されたカスタム プレフィックスを使用して自動生成されます。

サーバー名、データベース名、およびテーブルまたはビュー名を指定します。

Azure portal によってその接続が検証されます。 非アクティブなためにデータベースが一時停止している場合は、[データベース サーバー] ページに移動し、データベースの [状態] が [オンライン] であることを確認します。 任意のテーブルにクエリを実行して、データベースをアクティブ化できます。

![Azure portal 内の、データベースの [状態] ページのスクリーンショット。](media/search-how-to-index-sql-database/database-online.png)

認証方法を指定します (サーバーの構成時に定義した SQL Server ログイン、またはマネージド ID のいずれか)。

マネージド ID を使用するように Azure AI 検索を構成して、SQL Server 共同作成者または SQL DB 共同作成者のアクセス許可をその ID に付与するロールの割り当てをデータベース サーバー上に作成した場合、インデクサーは Microsoft Entra ID とロールを使用して Azure SQL に接続できます。

データのインポートとベクトル化ウィザードでは、変更と削除を追跡するオプションを指定できます。

削除の追跡は、カスタム メタデータを使用した論理的な削除に基づいています。

変更の追跡は、SQL Server 統合変更追跡または高ウォーター マーク変更追跡に基づいています。

残りの手順を続行してウィザードを完了します。

REST API を使用する

このセクションでは、データ ソース、インデックス、インデクサーを作成する REST API 呼び出しを示します。

データ ソースを定義する

データ ソース定義では、インデックスを付けるデータ、資格情報、データの変更を識別するためのポリシーを指定します。 データ ソースは、複数のインデクサーで使用できるように、独立したリソースとして定義します。

データ ソースを作成するかデータ ソースを作成または更新して、その定義を設定します。

POST https://myservice.search.windows.net/datasources?api-version=2024-07-01 Content-Type: application/json api-key: admin-key { "name" : "myazuresqldatasource", "description" : "A database for testing Azure AI Search indexes.", "type" : "azuresql", "credentials" : { "connectionString" : "Server=tcp:<your server>.database.windows.net,1433;Database=<your database>;User ID=<your user name>;Password=<your password>;Trusted_Connection=False;Encrypt=True;Connection Timeout=30;" }, "container" : { "name" : "name of the table or view that you want to index", "query" : null (not supported in the Azure SQL indexer) }, "dataChangeDetectionPolicy": null, "dataDeletionDetectionPolicy": null, "encryptionKey": null, "identity": null }Azure AI Search の名前付け規則に従うデータ ソースの一意の名前を指定します。

"type" を

"azuresql"に指定します (必須)。次のように、"credentials" を接続文字列に設定します。

フル アクセス接続文字列は、Azure portal から取得できます。

ADO.NET connection stringオプションを使用します。 ユーザー名とパスワードを設定します。または、データベース シークレットを含めないマネージド ID 接続文字列を、次の形式で指定できます:

Initial Catalog|Database=<your database name>;ResourceId=/subscriptions/<your subscription ID>/resourceGroups/<your resource group name>/providers/Microsoft.Sql/servers/<your SQL Server name>/;Connection Timeout=connection timeout length;

詳細については、マネージド ID を使用した Azure SQL Database インデクサーへの接続に関する記事を参照してください。

インデックスに検索フィールドを追加する

検索インデックスで、SQL データベースのフィールドに対応するフィールドを追加します。 検索インデックス スキーマが、同等のデータ型を使用してソース スキーマと互換性があることを確認します。

インデックスを作成または更新して、データを格納する検索フィールドを定義します。

POST https://[service name].search.windows.net/indexes?api-version=2024-07-01 Content-Type: application/json api-key: [Search service admin key] { "name": "mysearchindex", "fields": [{ "name": "id", "type": "Edm.String", "key": true, "searchable": false }, { "name": "description", "type": "Edm.String", "filterable": false, "searchable": true, "sortable": false, "facetable": false, "suggestions": true } ] }各検索ドキュメントを一意に識別するドキュメント キー フィールド ("key": true) を作成します。 これは、検索インデックスに必要な唯一のフィールドです。 通常、テーブルの主キーはインデックス キー フィールドにマップされます。 ドキュメント キーは一意で、null 値以外である必要があります。 値はソース データでは数値にできますが、検索インデックスでは、キーは常に文字列です。

さらにフィールドを作成して、検索可能なコンテンツを追加します。 ガイダンスについては、インデックスの作成に関するページを参照してください。

マッピング データ型

| SQL data type (SQL データ型) | Azure AI Search のフィールドの型 | メモ |

|---|---|---|

| bit | Edm.Boolean、Edm.String | |

| int、smallint、tinyint | Edm.Int32、Edm.Int64、Edm.String | |

| bigint | Edm.Int64、Edm.String | |

| real、float | Edm.Double、Edm.String | |

| smallmoney、money decimal numeric | Edm.String | Azure AI Search では、10 進数型を Edm.Double に変換できません。そうすると精度が失われるためです |

| char、nchar、varchar、nvarchar | Edm.String Collection(Edm.String) |

SQL 文字列が JSON 文字列配列 ["red", "white", "blue"] を表している場合、その SQL 文字列を使用して、Collection(Edm.String) フィールドを設定できます |

| smalldatetime、datetime、datetime2、date、datetimeoffset | Edm.DateTimeOffset、Edm.String | |

| uniqueidentifer | Edm.String | |

| geography | Edm.GeographyPoint | 型が POINT で SRID が 4326 (既定) の地理インスタンスのみがサポートされます。 |

| rowversion | 適用なし | 行バージョン列は検索インデックスに保存できませんが、変更追跡に利用できます。 |

| time、timespan、binary、varbinary、image、xml、geometry、CLR 型 | 適用なし | サポートされていません |

Azure SQL インデクサーを構成して実行する

インデックスとデータ ソースを作成したら、インデクサーを作成できます。 インデクサーの構成では、実行時の動作を制御する入力、パラメーター、プロパティを指定します。

名前を指定し、データ ソースとターゲット インデックスを参照することで、インデクサーを作成または更新します。

POST https://[service name].search.windows.net/indexers?api-version=2024-07-01 Content-Type: application/json api-key: [search service admin key] { "name" : "[my-sqldb-indexer]", "dataSourceName" : "[my-sqldb-ds]", "targetIndexName" : "[my-search-index]", "disabled": null, "schedule": null, "parameters": { "batchSize": null, "maxFailedItems": 0, "maxFailedItemsPerBatch": 0, "base64EncodeKeys": false, "configuration": { "queryTimeout": "00:04:00", "convertHighWaterMarkToRowVersion": false, "disableOrderByHighWaterMarkColumn": false } }, "fieldMappings": [], "encryptionKey": null }parameters の下の configuration セクションには、Azure SQL に固有のパラメーターが記載されています。

SQL クエリ実行の既定のクエリ タイムアウトは 5 分ですが、これはオーバーライドできます。

"convertHighWaterMarkToRowVersion" は、高基準値変更検出ポリシー向けに最適化されます。 変更検出ポリシーは、データ ソースに設定されます。 ネイティブの変更検出ポリシーを使用している場合、このパラメーターは無効です。

"disableOrderByHighWaterMarkColumn" により、高基準値ポリシーで使用される SQL クエリで ORDER BY 句が省略されます。 ネイティブの変更検出ポリシーを使用している場合、このパラメーターは無効です。

フィールドの名前または種類に違いがある、または検索インデックスで複数のソース フィールドのバージョンが必要な場合、フィールド マッピングを定義します。

その他のプロパティについては、「インデクサーの作成方法」を参照してください。

インデクサーは、作成されると自動的に実行されます。 これを防ぐには、"disabled" を true に設定します。 インデクサーの実行を制御するには、インデクサーをオンデマンドで実行するか、スケジュールを設定します。

インデクサーの状態を確認する

インデクサーの状態と実行履歴を監視するには、Azure portal 内でインデクサーの実行履歴を確認するか、Get Indexer Status REST API 要求を送信します

[Search Service] ページ上で、[検索管理]>[インデクサー] を開きます。

インデクサーを選択して、[構成] と [実行履歴] にアクセスします。

特定のインデクサー ジョブを選択して、詳細、警告、エラーを表示します。

実行履歴には、最近完了した実行 50 件が含まれます。時系列の逆の順に並べられるため、最後の実行が最初に表示されます。

新しい、変更された、削除された行のインデックス付け

SQL データベースで変更の追跡がサポートされている場合、検索インデクサーでは、後続のインデクサーの実行時に新しいおよび更新されたコンテンツのみを取得できます。

増分インデックス作成を有効にするには、データ ソース定義で "dataChangeDetectionPolicy" プロパティを設定します。 このプロパティで、テーブルまたはビューに対して使用する変更追跡メカニズムをインデクサーに指示します。

Azure SQL インデクサーの場合、次の 2 つの変更検出ポリシーがあります。

"SqlIntegratedChangeTrackingPolicy" (テーブルにのみ適用)

"HighWaterMarkChangeDetectionPolicy" (テーブルとビューに対して機能)

SQL 統合変更追跡ポリシー

効率性と、削除された行を識別する機能を備えているため、"SqlIntegratedChangeTrackingPolicy" を使用することをお勧めします。

データベース要件:

- Azure VM で SQL Server を使用している場合は、SQL Server 2012 SP3 以降

- Azure SQL Database または SQL Managed Instance

- テーブルのみ (ビューなし)

- データベースでテーブルの変更の追跡を有効にしている

- テーブルに複合主キー (複数の列を含む主キー) がない

- テーブルにクラスター化インデックスがない。 回避策として、すべてのクラスター化インデックスを削除して非クラスター化インデックスとして再作成する必要がありますが、クラスター化インデックスがある場合と比較して、ソースのパフォーマンスに影響が出る可能性があります

変更検出ポリシーは、データソース定義に追加されます。 このポリシーを使用するには、データ ソースを次のように作成または更新します。

POST https://myservice.search.windows.net/datasources?api-version=2024-07-01

Content-Type: application/json

api-key: admin-key

{

"name" : "myazuresqldatasource",

"type" : "azuresql",

"credentials" : { "connectionString" : "connection string" },

"container" : { "name" : "table name" },

"dataChangeDetectionPolicy" : {

"@odata.type" : "#Microsoft.Azure.Search.SqlIntegratedChangeTrackingPolicy"

}

}

SQL の統合された変更追跡ポリシーを使用する場合は、別個のデータ削除検出ポリシーを指定しないでください。 SQL の統合された変更追跡ポリシーには、削除された行を識別するためのサポートが組み込まれています。 ただし、削除された行が自動的に検出されるためには、検索インデックスのドキュメント キーが SQL テーブルの主キーと同じである必要があります。

Note

TRUNCATE TABLE を使用して SQL テーブルから多数の行を削除するときは、インデクサーをリセットして変更追跡状態をリセットし、行の削除を取得する必要があります。

高基準値変更検出ポリシー

この変更検出ポリシーは、行が最後に更新されたときのバージョンまたは時刻を取得する、テーブルまたはビューの "高基準" 列に依存します。 ビューを使う場合は、高基準ポリシーを使用する必要があります。

高基準列では、次の要件を満たす必要があります。

- すべての挿入は列の値を指定します。

- 項目を更新すると、列の値も変更されます。

- この列の値は挿入または更新のたびに増加します。

- 次の WHERE 句および ORDER BY 句のあるクエリを効率的に実行できます。

WHERE [High Water Mark Column] > [Current High Water Mark Value] ORDER BY [High Water Mark Column]

Note

高基準列には、rowversion データ型を使用することを強くお勧めします。 その他のデータ型を使用した場合、インデクサー クエリと同時に実行されるトランザクションが存在するところでは、変更の追跡でのすべての変更の捕捉は保証されません。 読み取り専用のレプリカのある構成で rowversion を使用する場合、インデクサーはプライマリ レプリカをポイントする必要があります。 プライマリ レプリカを使用できるのは、データ同期のシナリオのみです。

変更検出ポリシーは、データソース定義に追加されます。 このポリシーを使用するには、データ ソースを次のように作成または更新します。

POST https://myservice.search.windows.net/datasources?api-version=2024-07-01

Content-Type: application/json

api-key: admin-key

{

"name" : "myazuresqldatasource",

"type" : "azuresql",

"credentials" : { "connectionString" : "connection string" },

"container" : { "name" : "table or view name" },

"dataChangeDetectionPolicy" : {

"@odata.type" : "#Microsoft.Azure.Search.HighWaterMarkChangeDetectionPolicy",

"highWaterMarkColumnName" : "[a rowversion or last_updated column name]"

}

}

Note

ソース テーブルの高基準列にインデックスがない場合、SQL インデクサーが使用するクエリはタイムアウトになる可能性があります。具体的には、多くの行が含まれるテーブルに対してクエリを効率的に実行するには、ORDER BY [High Water Mark Column] 句にはインデックスが必要です。

convertHighWaterMarkToRowVersion

高基準値列に rowversion データ型を使用している場合は、インデクサー構成で convertHighWaterMarkToRowVersion を設定することを検討してください。 このプロパティを true に設定すると、次の動作が実行されます。

インデクサー SQL クエリ内の高基準値列に rowversion データ型を使用します。 正しいデータ型を使用することで、インデクサー クエリのパフォーマンスが向上します。

インデクサー クエリが実行される前に、rowversion 値から 1 を減算します。 1 対多の結合を含むビューには、重複する rowversion 値を持つ行が含まれる場合があります。 1 を減算すると、インデクサー クエリはこれらの行を見逃すことがなくなります。

このプロパティを有効にするには、次の構成を使用してインデクサーを作成または更新します。

{

... other indexer definition properties

"parameters" : {

"configuration" : { "convertHighWaterMarkToRowVersion" : true } }

}

queryTimeout

タイムアウト エラーを発生した場合は、queryTimeout インデクサー構成設定を既定の 5 分よりも大きい値に設定します。 たとえば、タイムアウトを 10 分に設定するには、次の構成でインデクサーを作成または更新します。

{

... other indexer definition properties

"parameters" : {

"configuration" : { "queryTimeout" : "00:10:00" } }

}

disableOrderByHighWaterMarkColumn

ORDER BY [High Water Mark Column] 句を無効にすることもできます。 ただし、これはお勧めしません。インデクサーの実行がエラーで中断された場合、その時点でほぼすべての行の処理が完了していても、そのインデクサーが後で実行されたときに、すべての行を再処理しなければならないためです。

ORDER BY 句を無効にするには、インデクサー定義で disableOrderByHighWaterMarkColumn 設定を使用します。

{

... other indexer definition properties

"parameters" : {

"configuration" : { "disableOrderByHighWaterMarkColumn" : true } }

}

論理削除列削除検出ポリシー

ソース テーブルから行が削除されると、おあそらく検索インデックスからも同様に行を削除する必要があります。 SQL 統合変更追跡ポリシーを使用する場合、これは自動的に行われます。 ただし、高基準変更追跡ポリシーでは行は削除されません。 どうすればよいでしょうか。

行がテーブルから物理的に削除されている場合、Azure AI Search では、存在しなくなったレコードの存在を推論することはできません。 ただし、"論理削除" テクニックを使用すると、行をテーブルから削除せずに論理的に削除できます。 このテクニックでは、列をテーブルまたはビューに追加し、その列を使用して行を削除済みとしてマークします。

論理削除手法を使用する場合は、データ ソースを作成または更新するときに、次のような論理削除ポリシーを指定できます。

{

…,

"dataDeletionDetectionPolicy" : {

"@odata.type" : "#Microsoft.Azure.Search.SoftDeleteColumnDeletionDetectionPolicy",

"softDeleteColumnName" : "[a column name]",

"softDeleteMarkerValue" : "[the value that indicates that a row is deleted]"

}

}

softDeleteMarkerValue は、データ ソースの JSON 表現の文字列である必要があります。 実際の値の文字列形式を使用します。 たとえば、削除された行が値 1 でマークされている整数列がある場合は、"1" を使用します。 削除された行が BIT 列でブール型の true 値でマークされている場合は、文字列リテラル "True" または "true" を使用します。大文字/小文字は区別されません。

Azure portal から論理的な削除のポリシーを設定する場合は、論理的な削除のマーカーの値の前後に引用符を追加しないでください。 フィールドの内容は文字列として既に認識されており、自動的に JSON 文字列に変換されます。 前の例では、Azure portal のフィールドに「1」、「True」または「true」と入力するだけです。

よく寄せられる質問

Q: Always Encrypted 列にインデックスを作成できますか?

いいえ、Always Encrypted 列は現在、Azure AI Search インデクサーではサポートされていません。

Q: Azure の IaaS VM で実行する SQL Database で Azure SQL インデクサーを使用できますか?

はい。 ただし、Search サービスに対してデータベースへの接続を許可する必要があります。 詳細については、Azure VM で Azure AI Search インデクサーから SQL Server への接続を構成する方法に関するページを参照してください。

Q: オンプレミスで実行する SQL Database で Azure SQL インデクサーを使用できますか?

直接無効にすることはできません。 直接接続は推奨もサポートもされません。これを行うには、インターネット トラフィックに対してデータベースを開く必要があります。 Azure Data Factory などのブリッジ テクノロジを使用してこのシナリオで成功した事例があります。 詳しくは、Azure Data Factory を使用して Azure Ai Search インデックスにデータをプッシュする方法に関するページを参照してください。

Q: フェールオーバー クラスターでデータ ソースとしてセカンダリ レプリカを使用できますか?

一概には言えません。 テーブルまたはビューのインデックスの完全作成の場合、セカンダリ レプリカを使用できます。

インデックスの増分作成の場合、Azure AI Search では 2 つの変更検出ポリシーをサポートしています。1 つは SQL 統合変更追跡ポリシーで、もう 1 つは高基準値ポリシーです。

読み取り専用のレプリカでは、SQL Database は統合変更追跡に対応しません。 そのため、高基準値ポリシーを使用する必要があります。

標準推奨事項として、高基準列には、rowversion データ型を使用することをお勧めします。 ただし、rowversion を使用するには MIN_ACTIVE_ROWVERSION 関数が必要ですが、この関数は読み取り専用のレプリカではサポートされていません。 そのため、rowversion を使用する場合は、インデクサーはプライマリ レプリカを指す必要があります。

読み取り専用レプリカ上で rowversion の使用を試みると、次のようなエラーが発生します。

"Using a rowversion column for change tracking is not supported on secondary (read-only) availability replicas. (変更追跡での rowversion 列の使用は、セカンダリ (読み取り専用) 可用性レプリカではサポートされていません。) Update the datasource and specify a connection to the primary availability replica. (データソースを更新して、プライマリ可用性レプリカへの接続を指定してください。) Current database 'Updateability' property is 'READ_ONLY' (現在のデータベースの "Updateability" プロパティは "READ_ONLY" です。)"

Q: 高基準変更追跡に代替の非 rowversion 列を使用することはできますか?

それはお勧めしません。 信頼性の高いデータ同期を実行できるのは、rowversion のみです。 ただし、アプリケーション ロジックによっては、次の条件を満たせば、その信頼性が高まる可能性があります。

インデクサーの実行時に、インデックスが作成されるテーブルに未処理のトランザクションがない (すべてのテーブルの更新がスケジュールに従ってバッチ操作として実行され、Azure AI Search インデクサーのスケジュールがテーブル更新スケジュールと重ならないように設定されているなど) ことを確認することができます。

列が実行されないことを防ぐために、インデックスの完全な再作成を定期的に実行している。