Define an agent’s input and output schema

AI agents must adhere to specific input and output schema requirements to be compatible with other features on Azure Databricks. This article explains how to make your AI agent meet those requirements and how to customize your agent’s input and output schema without breaking compatibility.

Mosaic AI uses MLflow Model Signatures to define an agent’s input and output schema requirements. The model signature tells internal and external components how to interact with your agent and validates that they adhere to the schema.

OpenAI chat completion schema (recommended)

Databricks recommends using the OpenAI chat completion schema to define agent input and output. This schema is widely adopted and compatible with many agent frameworks and applications, including those in Databricks.

See the OpenAI documentation for more information about the chat completion input (request) and output (response) schemas.
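For orientation, the sketch below shows the general shape of a chat completion request and response as plain Python dictionaries. It covers only the core fields (messages, choices, role, content). The helper functions is_chat_request and first_answer are hypothetical illustrations, not part of any Databricks or MLflow API; refer to the OpenAI documentation for the full field list.

```python
import json

# A chat completion request: the conversation so far, oldest message first.
request = {
    "messages": [
        {"role": "user", "content": "What is MLflow?"},
        {"role": "assistant", "content": "MLflow is an open source platform for the ML lifecycle."},
        {"role": "user", "content": "How does it describe model inputs and outputs?"},
    ]
}

# A chat completion response: a list of choices, each wrapping one assistant message.
response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "It uses model signatures."},
            "finish_reason": "stop",
        }
    ]
}


def is_chat_request(payload: dict) -> bool:
    """Hypothetical structural check: a non-empty list of messages with string role and content."""
    messages = payload.get("messages")
    return (
        isinstance(messages, list)
        and len(messages) > 0
        and all(
            isinstance(m, dict)
            and isinstance(m.get("role"), str)
            and isinstance(m.get("content"), str)
            for m in messages
        )
    )


def first_answer(payload: dict) -> str:
    """Hypothetical helper: return the assistant text from the first choice of a response."""
    return payload["choices"][0]["message"]["content"]


print(json.dumps(request, indent=2))
print(first_answer(response))
```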

Note

The OpenAI chat completion schema is simply a standard for structuring agent inputs and outputs. Implementing this schema does not involve using OpenAI’s resources or models.

MLflow provides convenient APIs for LangChain and PyFunc-flavored agents, helping you create agents compatible with the chat completion schema.

Implement chat completion with LangChain

If your agent uses LangChain, use MLflow’s ChatCompletionOutputParser() to format your agent’s final output to be compatible with the chat completion schema. If you use LangGraph, see LangGraph custom schemas.

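```python
from operator import itemgetter

from langchain_core.runnables import RunnableLambda
from mlflow.langchain.output_parsers import ChatCompletionOutputParser

# extract_user_query_string, extract_chat_history, and DatabricksChat are
# defined elsewhere in your agent code. The first two pull the latest user
# query and the prior turns out of the incoming chat completion "messages"
# list; DatabricksChat stands in for the component that generates the answer.
chain = (
    {
        "user_query": itemgetter("messages") | RunnableLambda(extract_user_query_string),
        "chat_history": itemgetter("messages") | RunnableLambda(extract_chat_history),
    }
    | RunnableLambda(DatabricksChat)
    # Format the final output as a chat completion-compatible response
    | ChatCompletionOutputParser()
)
```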
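The chain above references extract_user_query_string and extract_chat_history without defining them. One possible minimal implementation is sketched below, assuming the incoming messages are plain dictionaries in chat completion format and that the last message is always the current user query. These are hypothetical helpers, not MLflow or LangChain APIs.

```python
def extract_user_query_string(messages: list[dict]) -> str:
    """Return the content of the most recent message, assumed to be the user's query."""
    return messages[-1]["content"]


def extract_chat_history(messages: list[dict]) -> list[dict]:
    """Return every message before the most recent one as the chat history."""
    return messages[:-1]


messages = [
    {"role": "user", "content": "What is MLflow?"},
    {"role": "assistant", "content": "An open source ML lifecycle platform."},
    {"role": "user", "content": "Does it support LangChain?"},
]
print(extract_user_query_string(messages))
print(extract_chat_history(messages))
```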

Implement chat completion with PyFunc

If you use PyFunc, Databricks recommends writing your agent as a subclass of mlflow.pyfunc.ChatModel. This method provides the following benefits:

Allows you to write agent code compatible with the chat completion schema using typed Python classes.

When logging the agent, MLflow automatically infers a chat completion-compatible signature, even without an input_example. This simplifies registering and deploying the agent. See Infer Model Signature during logging.

```python
from typing import Generator

from mlflow.pyfunc import ChatModel
from mlflow.types.llm import (
    # Non-streaming helper classes
    ChatCompletionRequest,
    ChatCompletionResponse,
    ChatMessage,
    ChatChoice,
    ChatParams,
    # Helper classes for streaming agent output
    ChatCompletionChunk,
    ChatChoiceDelta,
    ChatChunkChoice,
)


class MyAgent(ChatModel):
    """
    Defines a custom agent that processes ChatCompletionRequests
    and returns ChatCompletionResponses.
    """

    def predict(self, context, messages: list[ChatMessage], params: ChatParams) -> ChatCompletionResponse:
        last_user_question_text = messages[-1].content
        response_message = ChatMessage(
            role="assistant",
            content=(
                f"I will always echo back your last question. Your last question was: {last_user_question_text}. "
            ),
        )
        return ChatCompletionResponse(choices=[ChatChoice(message=response_message)])

    def _create_chat_completion_chunk(self, content) -> ChatCompletionChunk:
        """Helper for constructing a ChatCompletionChunk instance for wrapping streaming agent output"""
        return ChatCompletionChunk(
            choices=[ChatChunkChoice(delta=ChatChoiceDelta(role="assistant", content=content))]
        )

    def predict_stream(
        self, context, messages: list[ChatMessage], params: ChatParams
    ) -> Generator[ChatCompletionChunk, None, None]:
        last_user_question_text = messages[-1].content
        yield self._create_chat_completion_chunk("Echoing back your last question, word by word. ")
        for word in last_user_question_text.split(" "):
            # Re-add the separator so the concatenated chunks keep their spacing
            yield self._create_chat_completion_chunk(word + " ")


agent = MyAgent()
model_input = ChatCompletionRequest(
    messages=[ChatMessage(role="user", content="What is Databricks?")]
)
# predict() takes the message list and the parameters separately, matching its signature above
response = agent.predict(context=None, messages=model_input.messages, params=ChatParams())
print(response)
```
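When an agent streams its output through predict_stream, each chunk carries a fragment of the answer in choices[0].delta.content, and a client rebuilds the full message by concatenating those fragments in order. The stdlib-only sketch below models chunks as plain dictionaries in the chat completion chunk shape and mirrors the echo behavior of MyAgent. The names fake_stream and assemble are hypothetical; this illustrates the client side under those assumptions and is not an MLflow or Databricks client API.

```python
from typing import Iterable, Iterator


def fake_stream(text: str) -> Iterator[dict]:
    """Yield chunk dictionaries shaped like chat completion chunks, one word at a time."""
    intro = "Echoing back your last question, word by word. "
    yield {"choices": [{"index": 0, "delta": {"role": "assistant", "content": intro}}]}
    for word in text.split(" "):
        yield {"choices": [{"index": 0, "delta": {"role": "assistant", "content": word + " "}}]}


def assemble(chunks: Iterable[dict]) -> str:
    """Concatenate the delta content of the first choice of every chunk, in arrival order."""
    parts = []
    for chunk in chunks:
        delta = chunk["choices"][0]["delta"]
        # Some chunks may carry no content (for example, a final chunk); treat those as empty
        parts.append(delta.get("content") or "")
    return "".join(parts)


full_text = assemble(fake_stream("What is Databricks?"))
print(full_text)
```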

Custom inputs and outputs

Databricks recommends adhering to the OpenAI chat completions schema for most agent use cases.

However, some scenarios may require additional inputs, such as client_type and session_id, or outputs like retrieval source links that should not be included in the chat history for future interactions.

For these scenarios, Mosaic AI Agent Framework supports augmenting OpenAI chat completion requests and responses with custom inputs and outputs.
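As a rough illustration, the sketch below shows a chat completion request augmented with a custom_inputs object and a response augmented with a custom_outputs object, using the client_type, session_id, and retrieval-source examples mentioned above. The keys inside both objects, the custom_outputs field name as used here, and the helper next_request are assumptions for illustration; your agent defines its own schema. The helper shows why extra data stays outside messages: only the assistant message is carried into the next turn's chat history.

```python
import copy

# Request: standard chat completion messages plus an extra custom_inputs object.
request = {
    "messages": [{"role": "user", "content": "How do I log an MLflow model?"}],
    "custom_inputs": {"client_type": "web", "session_id": "session-123"},
}

# Response: a standard choice plus extra custom_outputs that are not part of the chat.
response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "Use the log_model function of your model flavor."},
            "finish_reason": "stop",
        }
    ],
    "custom_outputs": {"sources": ["https://example.com/docs/logging"]},
}


def next_request(previous_request: dict, previous_response: dict, user_text: str) -> dict:
    """Hypothetical helper: carry over messages and custom_inputs, but not custom_outputs."""
    new_request = copy.deepcopy(previous_request)
    new_request["messages"].append(previous_response["choices"][0]["message"])
    new_request["messages"].append({"role": "user", "content": user_text})
    return new_request


follow_up = next_request(request, response, "And how do I load it back?")
print(follow_up)
```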

See the following examples to learn how to create custom inputs and outputs for PyFunc and LangGraph agents.

Warning

The Agent Evaluation review app does not currently support rendering traces for agents with additional input fields.

PyFunc custom schemas

The following notebooks show a custom schema example using PyFunc.

PyFunc custom schema agent notebook

PyFunc custom schema driver notebook

LangGraph custom schemas

The following notebooks show a custom schema example using LangGraph. You can modify the wrap_output function in the notebooks to parse and extract information from the message stream.

LangGraph custom schema agent notebook

LangGraph custom schema driver notebook
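The notebooks’ wrap_output function is not reproduced here. As a hypothetical sketch of the kind of parsing it can do, the function below walks the messages produced during a run, returns the last non-empty assistant message as the chat completion choice, and collects the content of tool messages into custom_outputs. The message shape (plain dictionaries with role and content, tool results under role "tool"), the function name wrap_messages, and the tool_results key are all assumptions, not the notebooks’ actual code.

```python
def wrap_messages(run_messages: list[dict]) -> dict:
    """Hypothetical post-processing: final assistant reply as the choice, tool outputs as custom_outputs."""
    final_reply = next(
        (m for m in reversed(run_messages) if m["role"] == "assistant" and m.get("content")),
        None,
    )
    if final_reply is None:
        raise ValueError("No assistant message with content found")
    tool_results = [m["content"] for m in run_messages if m["role"] == "tool"]
    return {
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": final_reply["content"]},
                "finish_reason": "stop",
            }
        ],
        "custom_outputs": {"tool_results": tool_results},
    }


run_messages = [
    {"role": "user", "content": "Where are the MLflow docs?"},
    {"role": "assistant", "content": ""},  # e.g. a message that only requested a tool call
    {"role": "tool", "content": "https://mlflow.org/docs/latest/"},
    {"role": "assistant", "content": "You can find them at mlflow.org."},
]
print(wrap_messages(run_messages))
```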

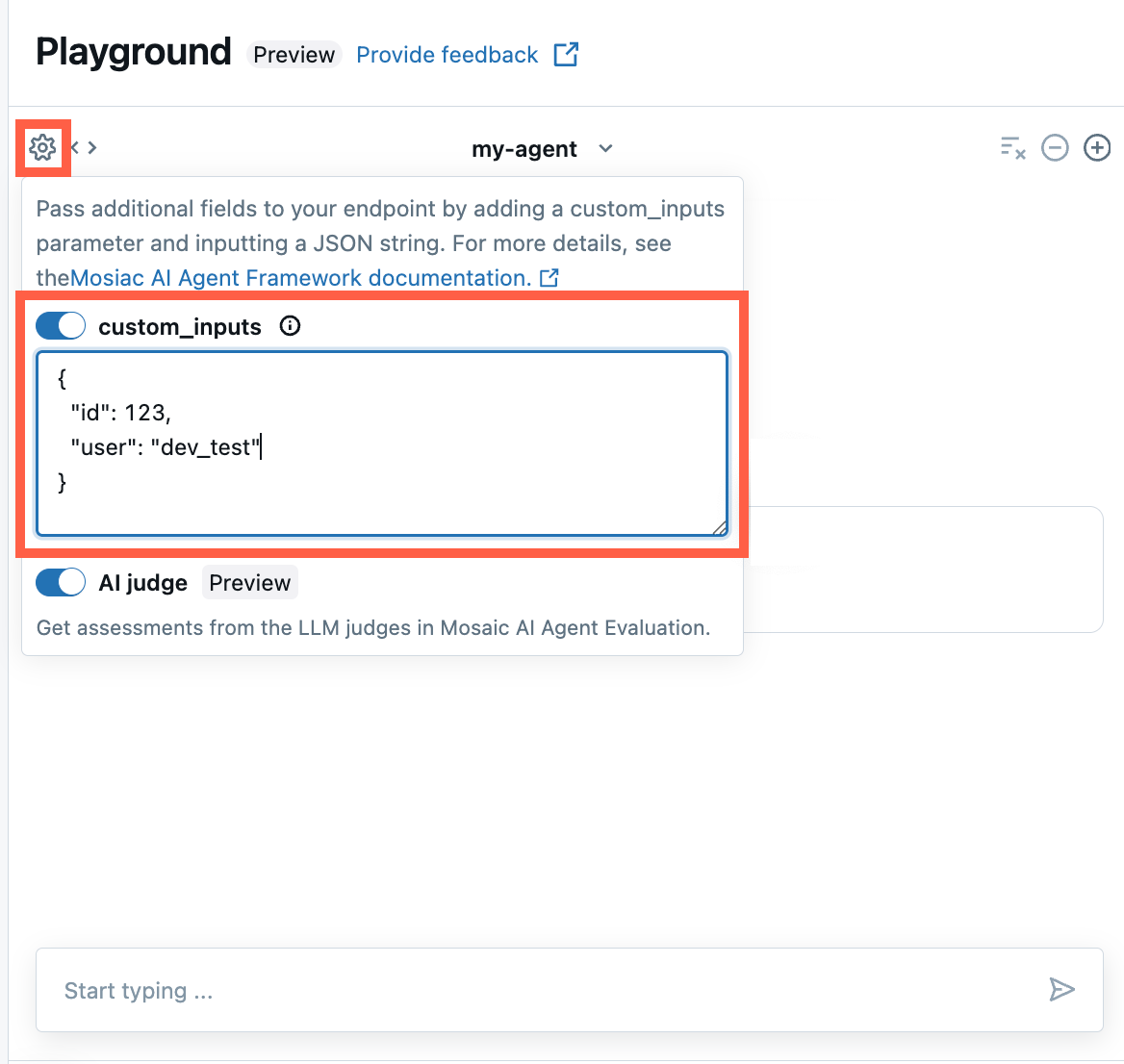

Provide custom_inputs in the AI Playground and agent review app

If your agent accepts additional inputs using the custom_inputs field, you can manually provide these inputs in both the AI Playground and the agent review app.

1. In either the AI Playground or the agent review app, select the gear icon.
2. Enable custom_inputs.
3. Provide a JSON object that matches your agent’s defined input schema.
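For example, if your agent reads the client_type and session_id values mentioned earlier from custom_inputs, the JSON object you enter might be produced and checked as in the sketch below. The key names and the required-key check are assumptions for illustration; use whatever keys your agent’s input schema defines.

```python
import json

# Hypothetical keys: replace these with the keys your agent's custom_inputs schema expects.
REQUIRED_KEYS = {"client_type", "session_id"}

custom_inputs = {"client_type": "playground", "session_id": "test-session-1"}

missing = REQUIRED_KEYS - set(custom_inputs)
if missing:
    raise ValueError(f"custom_inputs is missing keys: {sorted(missing)}")

# Paste this string into the custom_inputs field.
payload = json.dumps(custom_inputs)
print(payload)
```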

Legacy input and output schemas

The SplitChatMessagesRequest input schema and StringResponse output schema have been deprecated. If you are using either of these legacy schemas, Databricks recommends that you migrate to the chat completion schema.

SplitChatMessagesRequest input schema (deprecated)

SplitChatMessagesRequest allows you to pass the current query and history separately as agent input.

```python
question = {
    "query": "What is MLflow",
    "history": [
        {
            "role": "user",
            "content": "What is Retrieval-augmented Generation?"
        },
        {
            "role": "assistant",
            "content": "RAG is"
        }
    ]
}
```
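Because the chat completion schema represents the whole conversation as a single messages list, migrating a request in this legacy shape is mostly a matter of appending the query to the history as a final user message. The function below is a hypothetical helper sketching that conversion, assuming history may be absent; it is not an MLflow API.

```python
def split_request_to_chat_completion(legacy: dict) -> dict:
    """Convert a {"query", "history"} request into a chat completion {"messages"} request."""
    # Copy each history message so the legacy request is left unchanged
    messages = [dict(m) for m in legacy.get("history", [])]
    messages.append({"role": "user", "content": legacy["query"]})
    return {"messages": messages}


legacy_question = {
    "query": "What is MLflow",
    "history": [
        {"role": "user", "content": "What is Retrieval-augmented Generation?"},
        {"role": "assistant", "content": "RAG is"},
    ],
}
print(split_request_to_chat_completion(legacy_question))
```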

StringResponse output schema (deprecated)

StringResponse allows you to return the agent’s response as an object with a single string content field:

```json
{"content": "This is an example string response"}
```
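On the output side, a StringResponse-style object maps onto a chat completion response by wrapping its content in a single assistant choice. The sketch below is a hypothetical conversion helper, assuming the legacy object is a plain dictionary with a content key; it fills in only the core response fields.

```python
def string_response_to_chat_completion(legacy: dict) -> dict:
    """Wrap {"content": ...} as a chat completion response with one assistant choice."""
    return {
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": legacy["content"]},
                "finish_reason": "stop",
            }
        ]
    }


print(string_response_to_chat_completion({"content": "This is an example string response"}))
```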