(Legacy) Get feedback about the quality of an agentic application

Important

This feature is in Public Preview.

Important

Databricks recommends you use the current Review App version.

This article shows you how to use the Databricks review app to gather feedback from human reviewers about the quality of your agentic application. It covers the following:

- How to deploy the review app.

- How reviewers use the app to provide feedback on the agentic application’s responses.

- How experts can review logged chats to provide suggestions for improvement and other feedback using the app.

What happens in a human evaluation?

The Databricks review app stages the LLM in an environment where expert stakeholders can interact with it: they can have a conversation, ask questions, and provide feedback. The review app logs all questions, answers, and feedback in an inference table so you can further analyze the LLM’s performance. In this way, the review app helps to ensure the quality and safety of the answers your application provides.

Stakeholders can chat with the application bot and provide feedback on those conversations, or provide feedback on historical logs, curated traces, or agent outputs.

Requirements

Inference tables must be enabled on the endpoint that is serving the agent.

Each human reviewer must have access to the review app workspace or be synced to your Databricks account with SCIM. See the next section, Set up permissions to use the review app.

Developers must install the databricks-agents SDK to set up permissions and configure the review app.

%pip install databricks-agents
dbutils.library.restartPython()

Set up permissions to use the review app

Note

Human reviewers do not require access to the workspace to use the review app.

You can give access to the review app to any user in your Databricks account, even if they do not have access to the workspace that contains the review app.

- For users who do not have access to the workspace, an account admin uses account-level SCIM provisioning to sync users and groups automatically from your identity provider to your Azure Databricks account. You can also manually register these users and groups to give them access when you set up identities in Databricks. See Sync users and groups from Microsoft Entra ID using SCIM.

- For users who already have access to the workspace that contains the review app, no additional configuration is required.

The following code example shows how to give users permission to the review app for an agent. The users parameter takes a list of email addresses.

from databricks import agents

# Note that <user_list> can specify individual users or groups.

agents.set_permissions(model_name=<model_name>, users=[<user_list>], permission_level=agents.PermissionLevel.CAN_QUERY)

To review a chat log, a user must have the CAN_REVIEW permission.
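As a minimal sketch of granting that permission, the helper below wraps the set_permissions call shown above and passes CAN_REVIEW instead of CAN_QUERY. The function name grant_chat_log_review and the idea of passing the agents module in as an argument are illustrative assumptions, not part of the SDK; the argument is there only so the helper can be exercised without a workspace.

```python
from typing import Iterable, List


def grant_chat_log_review(agents_module, model_name: str, reviewers: Iterable[str]) -> List[str]:
    """Grant CAN_REVIEW on the agent to each reviewer and return the list that was sent.

    `agents_module` is expected to be `databricks.agents` (hypothetical parameter,
    used here so the function has no import-time dependency on the SDK).
    """
    # Drop blank entries and duplicates while keeping the original order.
    cleaned = list(dict.fromkeys(r.strip() for r in reviewers if r and r.strip()))
    if not cleaned:
        raise ValueError("at least one reviewer is required")

    # Same call as in the example above, with the permission level the text names.
    agents_module.set_permissions(
        model_name=model_name,
        users=cleaned,
        permission_level=agents_module.PermissionLevel.CAN_REVIEW,
    )
    return cleaned


# In a notebook this would be called roughly as:
#   from databricks import agents
#   grant_chat_log_review(agents, "<model_name>", ["expert@example.com"])
```

Whether a reviewer who should also chat with the bot needs a separate CAN_QUERY grant is not stated here, so check the behavior in your workspace before relying on a single grant.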

Deploy the review app

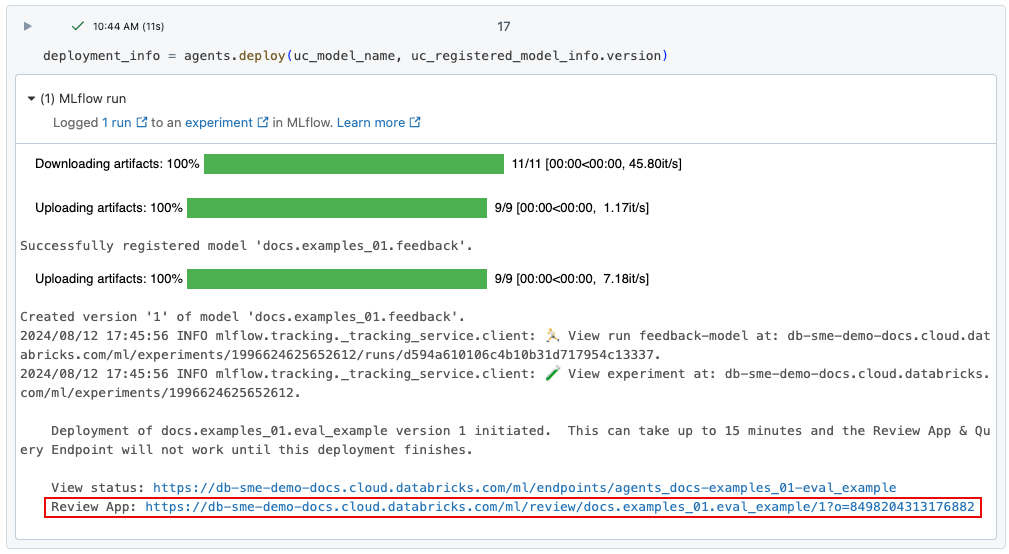

When you deploy an agent using agents.deploy(), the review app is automatically enabled and deployed. The output from the command shows the URL for the review app. For information about deploying an agent, see Deploy an agent for generative AI application.

If you lose the link to the deployment, you can find it using list_deployments().

from databricks import agents

deployments = agents.list_deployments()

deployments
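If several agents are deployed, it can help to filter that list down to one model. The sketch below assumes each deployment object exposes model_name and review_app_url attributes; those attribute names are assumptions, so inspect the objects returned by list_deployments() in your environment and adjust them if they differ. The helper name find_review_app_url is illustrative.

```python
from typing import Iterable, Optional


def find_review_app_url(deployments: Iterable[object], model_name: str) -> Optional[str]:
    """Return the review app URL of the first deployment matching model_name, else None."""
    for deployment in deployments:
        # Attribute names are assumptions; getattr avoids an AttributeError if they differ.
        if getattr(deployment, "model_name", None) == model_name:
            return getattr(deployment, "review_app_url", None)
    return None


# In a notebook this would be called roughly as:
#   from databricks import agents
#   find_review_app_url(agents.list_deployments(), "<model_name>")
```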

Review app UI

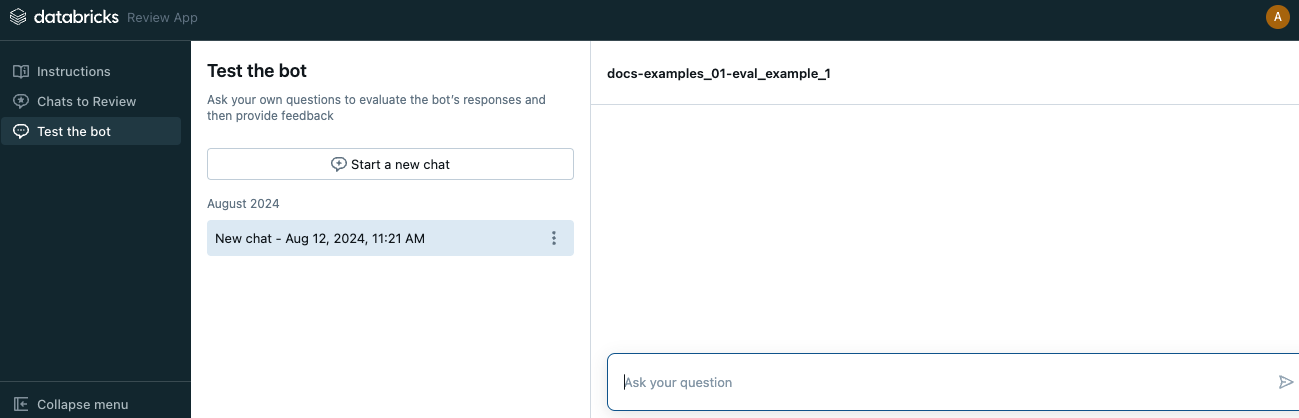

To open the review app, click the provided URL. The review app UI has three tabs in the left sidebar:

- Instructions Displays instructions to the reviewer. See Provide instructions to reviewers.

- Chats to review Displays logs from the interactions of reviewers with the app for experts to evaluate. See Expert review of logs from other user’s interactions with the app.

- Test the bot Lets reviewers chat with the app and submit reviews of its responses. See Chat with the app and submit reviews.

When you open the review app, the instructions page appears.

- To chat with the bot, click Start reviewing, or select Test the bot from the left sidebar. See Chat with the app and submit reviews for more details.

- To review chat logs that have been made available for your review, select Chats to review in the sidebar. See Expert review of logs from other user’s interactions with the app for details. To learn how to make chat logs available from the review app, see Make chat logs available for evaluation by expert reviewers.

Provide instructions to reviewers

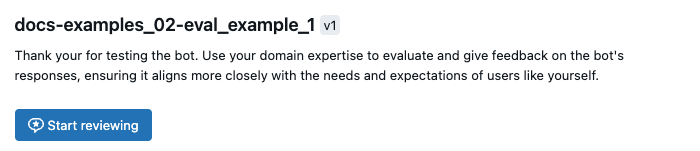

To provide custom text for the instructions displayed for reviewers, use the following code:

from databricks import agents

agents.set_review_instructions(uc_model_name, "Thank you for testing the bot. Use your domain expertise to evaluate and give feedback on the bot's responses, ensuring it aligns with the needs and expectations of users like yourself.")

agents.get_review_instructions(uc_model_name)

Chat with the app and submit reviews

To chat with the app and submit reviews:

Click Test the bot in the left sidebar.

Type your question in the box and press Return or Enter on your keyboard, or click the arrow in the box.

The app displays its answer to your question, and the sources that it used to find the answer.

Note

If the agent uses a retriever, data sources are identified by the

doc_urifield set by the retriever schema defined during agent creation. See Set retriever schema.Review the app’s answer, and select Yes, No, or I dont’ know.

The app asks for additional information. Check the appropriate boxes or type your comments into the field provided.

You can also edit the response directly to provide a better answer. To edit the response, click Edit response, make your changes in the dialog, and click Save, as shown in the following video.

Click Done to save your feedback.

Continue asking questions to provide additional feedback.

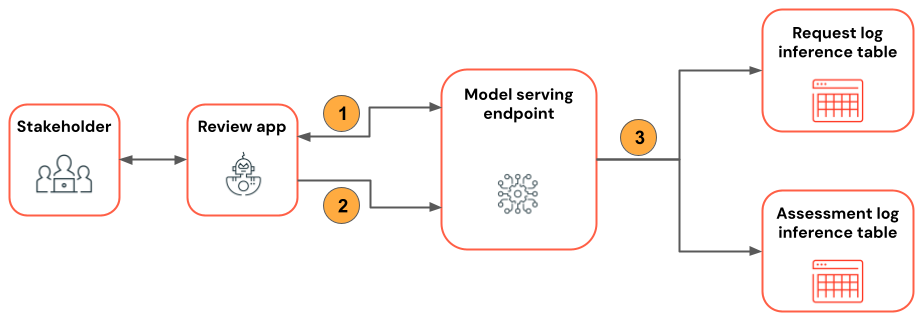

The following diagram illustrates this workflow.

- Using review app, reviewer chats with the agentic application.

- Using review app, reviewer provides feedback on application responses.

- All requests, responses, and feedback are logged to inference tables.

Make chat logs available for evaluation by expert reviewers

When a user interacts with the app using the REST API or the review app, all requests, responses, and additional feedback are saved to inference tables. The inference tables are located in the same Unity Catalog catalog and schema where the model was registered and are named <model_name>_payload, <model_name>_payload_assessment_logs, and <model_name>_payload_request_logs. For more information about these tables, including schemas, see Monitor deployed agents.
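The naming pattern can be written down as a small helper. This is a sketch that assumes the model name is the fully qualified three-part Unity Catalog name (catalog.schema.model); the function name inference_table_names is illustrative.

```python
from typing import Dict


def inference_table_names(model_name: str) -> Dict[str, str]:
    """Derive the three inference table names from a catalog.schema.model name."""
    parts = model_name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError("expected a name of the form catalog.schema.model")

    # The tables live in the same catalog and schema as the registered model.
    base = f"{model_name}_payload"
    return {
        "payload": base,
        "assessment_logs": f"{base}_assessment_logs",
        "request_logs": f"{base}_request_logs",
    }
```

For example, a model registered as main.rag.chatbot maps to main.rag.chatbot_payload, main.rag.chatbot_payload_assessment_logs, and main.rag.chatbot_payload_request_logs.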

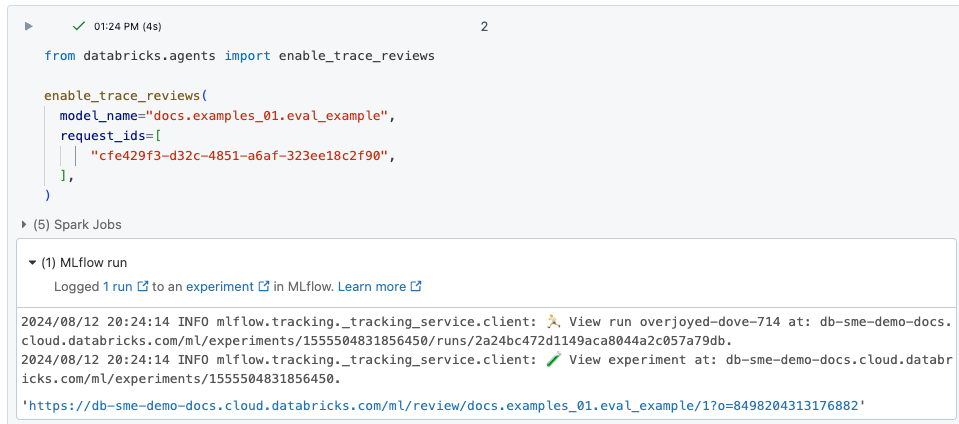

To load these logs into the review app for evaluation by expert reviewers, you must first find the request_id and enable reviews for that request_id as follows:

Locate the request_ids to be reviewed from the <model_name>_payload_request_logs inference table. The inference table is in the same Unity Catalog catalog and schema where the model was registered.

Use code similar to the following to load the review logs into the review app:

from databricks import agents

agents.enable_trace_reviews(
    model_name=model_fqn,
    request_ids=[
        "52ee973e-0689-4db1-bd05-90d60f94e79f",
        "1b203587-7333-4721-b0d5-bba161e4643a",
        "e68451f4-8e7b-4bfc-998e-4bda66992809",
    ],
)

The result cell includes a link to the review app with the selected logs loaded for review.
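One way to carry out the first step is to query the request logs table for recent requests. The sketch below only builds the SQL text; it assumes the table has databricks_request_id and timestamp columns, which you should confirm against the schema described in Monitor deployed agents. The function name recent_request_ids_query is illustrative.

```python
def recent_request_ids_query(model_name: str, limit: int = 10) -> str:
    """Build a query for the most recent request IDs in the request logs table."""
    if limit < 1:
        raise ValueError("limit must be at least 1")

    # Unpacking raises ValueError unless the name has exactly three parts.
    catalog, schema, model = model_name.split(".")
    table = f"`{catalog}`.`{schema}`.`{model}_payload_request_logs`"

    # Column names below are assumptions about the request logs schema.
    return (
        f"SELECT databricks_request_id FROM {table} "
        f"ORDER BY timestamp DESC LIMIT {int(limit)}"
    )


# In a Databricks notebook the result could feed enable_trace_reviews, roughly:
#   rows = spark.sql(recent_request_ids_query(model_fqn, 3)).collect()
#   agents.enable_trace_reviews(
#       model_name=model_fqn,
#       request_ids=[row["databricks_request_id"] for row in rows],
#   )
```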

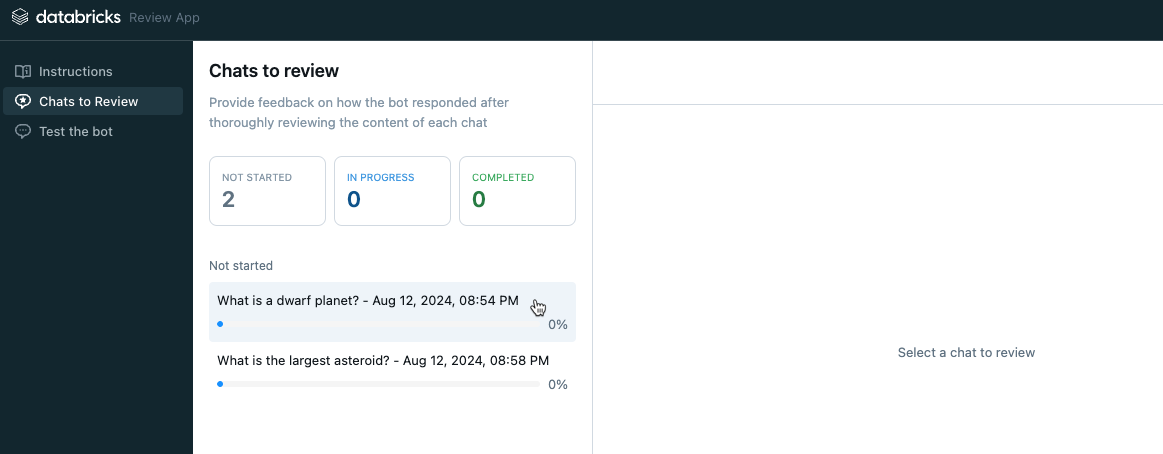

Expert review of logs from other user’s interactions with the app

To review logs from previous chats, the logs must have been enabled for review. See Make chat logs available for evaluation by expert reviewers.

In the left sidebar of the review app, select Chats to review. The enabled requests are displayed.

Click on a request to display it for review.

Review the request and response. The app also shows the sources it used for reference. You can click on these to review the reference, and provide feedback on the relevance of the source.

To provide feedback on the quality of the response, select Yes, No, or I don’t know.

The app asks for additional information. Check the appropriate boxes or type your comments into the field provided.

You can also edit the response directly to provide a better answer. To edit the response, click Edit response, make your changes in the dialog, and click Save. See Chat with the app and submit reviews for a video that shows the process.

Click Done to save your feedback.

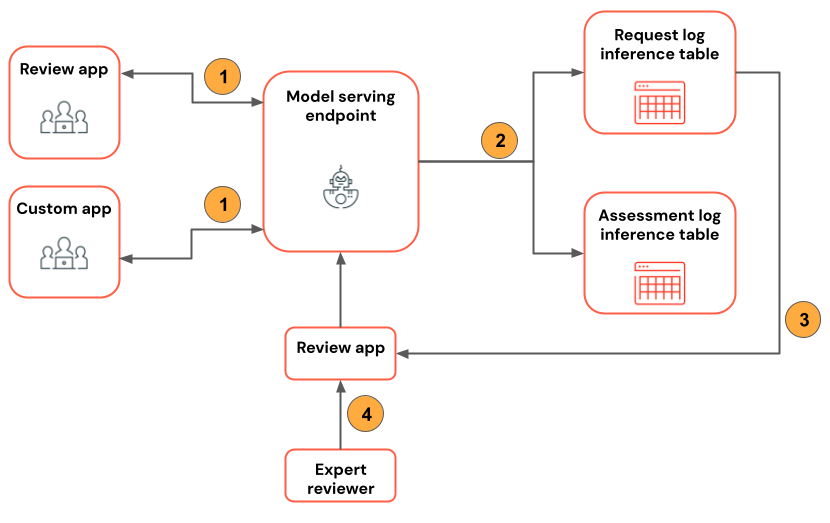

The following diagram illustrates this workflow.

- Using review app or custom app, reviewers chat with the agentic application.

- All requests and responses are logged to inference tables.

- Application developer uses enable_trace_reviews([request_id]) (where request_id is from the <model_name>_payload_request_logs inference table) to post chat logs to review app.

- Using review app, expert reviews logs and provides feedback. Expert feedback is logged to inference tables.

Note

If you have Azure Storage Firewall enabled, reach out to your Azure Databricks account team to enable inference tables for your endpoints.

Use mlflow.evaluate() on the request logs table

The following notebook illustrates how to use the logs from the review app as input to an evaluation run using mlflow.evaluate().
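Independently of the notebook, the general shape of such a run can be sketched as follows. The sketch assumes each request log row carries request, response, and databricks_request_id fields, and it passes the reshaped rows to mlflow.evaluate() with model_type="databricks-agent". The helper names to_eval_records and evaluate_request_logs are illustrative, and the column names are assumptions to verify against your table.

```python
from typing import Any, Dict, Iterable, List


def to_eval_records(rows: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Reshape request log rows into request/response records for evaluation."""
    records = []
    for row in rows:
        # Skip rows that lack either side of the exchange.
        if row.get("request") is None or row.get("response") is None:
            continue
        records.append(
            {
                "request_id": row.get("databricks_request_id"),  # assumed column name
                "request": row["request"],
                "response": row["response"],
            }
        )
    return records


def evaluate_request_logs(rows: Iterable[Dict[str, Any]]):
    """Run an evaluation over logged requests (requires mlflow and pandas)."""
    # Imported lazily so to_eval_records can be used without these packages.
    import mlflow
    import pandas as pd

    eval_df = pd.DataFrame(to_eval_records(rows))
    return mlflow.evaluate(data=eval_df, model_type="databricks-agent")
```

Because the responses are already logged, no model is passed to the evaluation call; only the recorded request and response pairs are scored.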