Mirroring Snowflake in Microsoft Fabric

Mirroring in Fabric provides an easy experience to avoid complex ETL (Extract Transform Load) and integrate your existing Snowflake warehouse data with the rest of your data in Microsoft Fabric. You can continuously replicate your existing Snowflake data directly into Fabric's OneLake. Inside Fabric, you can unlock powerful business intelligence, artificial intelligence, Data Engineering, Data Science, and data sharing scenarios.

For a tutorial on configuring your Snowflake database for Mirroring in Fabric, see Tutorial: Configure Microsoft Fabric mirrored databases from Snowflake.

Why use Mirroring in Fabric?

With Mirroring in Fabric, you don't need to piece together different services from multiple vendors. Instead, you get a highly integrated, end-to-end, and easy-to-use product that is designed to simplify your analytics needs and is built for openness and collaboration between Microsoft, Snowflake, and the thousands of technology solutions that can read the open-source Delta Lake table format.

What analytics experiences are built in?

Mirrored databases are an item in Fabric Data Warehousing distinct from the Warehouse and SQL analytics endpoint.

Mirroring creates three items in your Fabric workspace:

- The mirrored database item. Mirroring manages the replication of data into OneLake and conversion to Parquet, in an analytics-ready format. This enables downstream scenarios like data engineering, data science, and more.

- A SQL analytics endpoint

- A default semantic model

Each mirrored database has an autogenerated SQL analytics endpoint that provides a rich analytical experience on top of the Delta Tables created by the mirroring process. Users have access to familiar T-SQL commands that can define and query data objects but not manipulate the data from the SQL analytics endpoint, as it's a read-only copy. You can perform the following actions in the SQL analytics endpoint:

- Explore the tables that reference data in your Delta Lake tables from Snowflake.

- Create no-code queries and views, and explore data visually without writing a line of code.

- Develop SQL views, inline TVFs (Table-valued Functions), and stored procedures to encapsulate your semantics and business logic in T-SQL.

- Manage permissions on the objects.

- Query data in other Warehouses and Lakehouses in the same workspace.

In addition to the SQL query editor, there's a broad ecosystem of tooling that can query the SQL analytics endpoint, including SQL Server Management Studio (SSMS), the mssql extension with Visual Studio Code, and even GitHub Copilot.
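Because the SQL analytics endpoint accepts T-SQL connections, a script can query it much like the tools listed above. The following is a minimal sketch, assuming the Microsoft ODBC Driver 18 for SQL Server and the third-party pyodbc package are installed and that interactive Microsoft Entra sign-in is acceptable. The server name, database name, and table name are hypothetical placeholders; copy the real connection details from your own endpoint.

```python
def build_endpoint_connection_string(server, database):
    """Assemble an ODBC connection string for a SQL analytics endpoint.

    `server` and `database` are placeholders supplied by the caller;
    nothing here is specific to one workspace.
    """
    parts = {
        "Driver": "{ODBC Driver 18 for SQL Server}",
        "Server": server,
        "Database": database,
        # Assumption: interactive Microsoft Entra sign-in is used.
        "Authentication": "ActiveDirectoryInteractive",
        "Encrypt": "yes",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


def fetch_rows(connection_string, query):
    """Run a read-only query and return all rows.

    pyodbc is imported lazily so the string builder above can be used
    without the driver installed.
    """
    import pyodbc  # third-party package

    connection = pyodbc.connect(connection_string)
    try:
        cursor = connection.cursor()
        cursor.execute(query)
        return cursor.fetchall()
    finally:
        connection.close()


if __name__ == "__main__":
    # Hypothetical endpoint and database names, for illustration only.
    conn_str = build_endpoint_connection_string(
        "your-endpoint.example.com", "MirroredSnowflakeDb"
    )
    print(conn_str)
    # The endpoint is read-only, so only SELECT-style queries make sense:
    # rows = fetch_rows(conn_str, "SELECT TOP 5 * FROM dbo.SOME_TABLE")
```

Only queries that read data are useful here, because the endpoint is a read-only copy of the mirrored tables.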

Security considerations

To enable Fabric mirroring, the Snowflake user that Fabric connects as needs the following permissions on your Snowflake database:

- CREATE STREAM

- SELECT table

- SHOW tables

- DESCRIBE tables

For more information, see Snowflake documentation on Access Control Privileges for Streaming tables and Required Permissions for Streams.
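As a rough illustration of how the permissions above might be granted, the following sketch generates Snowflake GRANT statements for a dedicated role. The role, database, schema, and warehouse names are hypothetical. The USAGE grants are an assumption beyond the list above: in Snowflake, a role typically needs USAGE on the parent database and schema (and on a warehouse) before it can show, describe, or select from tables. Review the generated statements against the Snowflake documentation before running them.

```python
def build_mirroring_grants(role, database, schema, warehouse):
    """Return Snowflake GRANT statements for a mirroring role.

    All four arguments are caller-supplied identifiers; the names used
    in the example below are hypothetical.
    """
    qualified_schema = f"{database}.{schema}"
    return [
        # Assumption: USAGE grants are needed so the role can reach the objects.
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role};",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {qualified_schema} TO ROLE {role};",
        # Permissions named in the list above.
        f"GRANT CREATE STREAM ON SCHEMA {qualified_schema} TO ROLE {role};",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {qualified_schema} TO ROLE {role};",
    ]


if __name__ == "__main__":
    for statement in build_mirroring_grants(
        role="FABRIC_MIRROR_ROLE",
        database="SALES_DB",
        schema="PUBLIC",
        warehouse="MIRROR_WH",
    ):
        print(statement)
```

Granting to a dedicated role rather than to an individual user keeps the mirroring permissions easy to audit and revoke.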

Important

Any granular security established in the source Snowflake warehouse must be re-configured in the mirrored database in Microsoft Fabric. For more information, see SQL granular permissions in Microsoft Fabric.

Mirrored Snowflake cost considerations

Fabric doesn't charge network data ingress fees into OneLake for Mirroring. There are no mirroring costs when your Snowflake data is being replicated into OneLake.

There are Snowflake compute and cloud query costs when data is being mirrored: virtual warehouse compute and cloud services compute.

- Snowflake virtual warehouse compute charges:

  - Compute charges are incurred on the Snowflake side when data changes are read in Snowflake and, in turn, mirrored into Fabric.

  - Metadata queries that run behind the scenes to check for data changes don't incur Snowflake compute charges; however, queries that produce data, such as a SELECT *, wake up the Snowflake warehouse, and compute is charged.

- Snowflake services compute charges:

  - Although there are no compute charges for behind-the-scenes tasks such as authoring, metadata queries, access control, showing data changes, and even DDL queries, there are cloud costs associated with these queries.

  - Depending on your Snowflake edition, you are charged the corresponding credits for any cloud services costs.
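To make the two cost components concrete, here is a small worked sketch of a daily estimate. It assumes Snowflake's documented daily adjustment, under which cloud services credits are billed only to the extent that they exceed 10% of that day's virtual warehouse credits; confirm this rule and your edition's price per credit against current Snowflake billing documentation. The credit amounts and the price below are made-up illustration values, not measurements.

```python
def billed_cloud_services_credits(warehouse_credits, cloud_services_credits,
                                  free_fraction=0.10):
    """Cloud services credits billed after the daily allowance.

    Assumption: the allowance is `free_fraction` of the day's warehouse
    credits (10% per Snowflake's billing documentation).
    """
    allowance = warehouse_credits * free_fraction
    return max(0.0, cloud_services_credits - allowance)


def daily_snowflake_cost(warehouse_credits, cloud_services_credits,
                         price_per_credit):
    """Estimated daily charge for warehouse plus billed cloud services credits."""
    billed = billed_cloud_services_credits(warehouse_credits,
                                           cloud_services_credits)
    return (warehouse_credits + billed) * price_per_credit


if __name__ == "__main__":
    # Hypothetical day: 10 warehouse credits, 3 cloud services credits,
    # and an illustrative price of 3.00 per credit.
    print(billed_cloud_services_credits(10.0, 3.0))
    print(daily_snowflake_cost(10.0, 3.0, price_per_credit=3.0))
```

On a day with little warehouse activity, the allowance is correspondingly small, so the metadata and data change queries described above can account for most of the billed credits.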

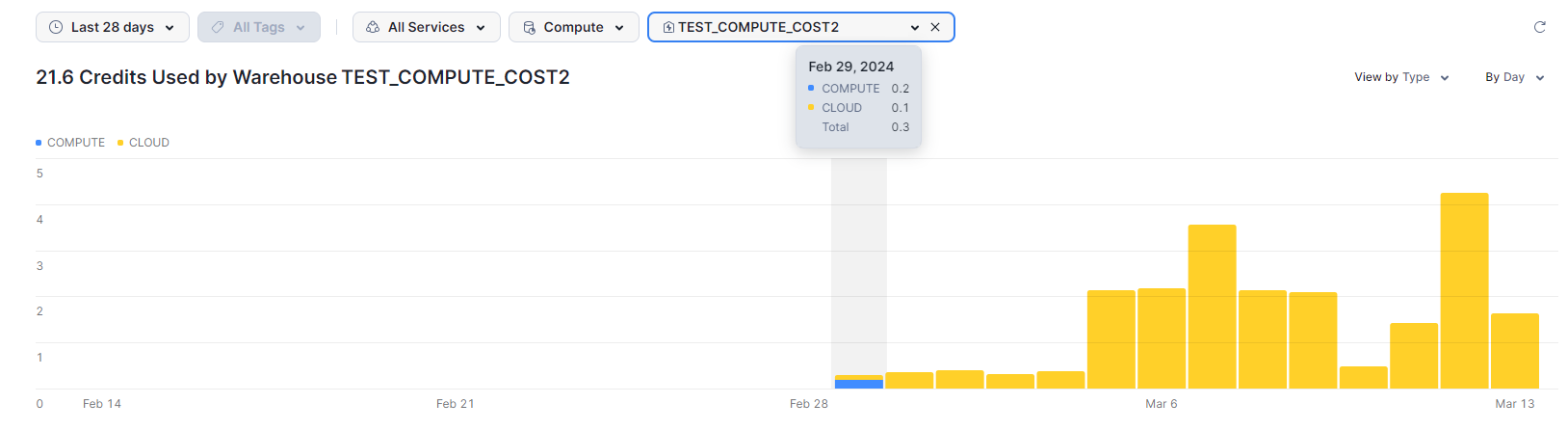

In the following screenshot, you can see the virtual warehouse compute and cloud services compute costs for the associated Snowflake database that is being mirrored into Fabric. In this scenario, majority of the cloud services compute costs (in yellow) are coming from data change queries based on the points mentioned previously. The virtual warehouse compute charges (in blue) are coming strictly from the data changes are being read from Snowflake and mirrored into Fabric.

For more information on Snowflake-specific cloud query costs, see Snowflake docs: Understanding overall cost.