Considerations for DSPM for AI & data security and compliance protections for Copilot

After you understand how you can use Microsoft Purview Data Security Posture Management (DSPM) for AI and other capabilities to manage data security and compliance protections for Microsoft 365 Copilot and Microsoft 365 Copilot Chat, use the following detailed information for any prerequisites, considerations, and exemptions that might apply to your organization. For Microsoft 365 Copilot and Microsoft 365 Copilot Chat, be sure to read this information together with Microsoft 365 Copilot requirements and Enterprise data protection in Microsoft 365 Copilot and Microsoft 365 Copilot Chat.

For licensing information to use these capabilities for Copilot, see the licensing and service description links at the top of the page. For licensing information for Copilot, see the service description for Microsoft 365 Copilot.

Data Security Posture Management for AI prerequisites and considerations

For the most part, Data Security Posture Management for AI is easy to use and self-explanatory, guiding you through prerequisites and preconfigured reports and policies. Use this section for additional details that complement that guidance.

Prerequisites for Data Security Posture Management for AI

To use Data Security Posture Management for AI from the Microsoft Purview portal or the Microsoft Purview compliance portal, you must have the following prerequisites:

- You have the right permissions.

- Required for monitoring interactions with Copilot:

  - Users are assigned a license for Microsoft 365 Copilot.

  - Microsoft Purview auditing is enabled for your organization. Auditing is on by default, but you might want to confirm this by using the instructions in Turn auditing on or off.

- Required for monitoring interactions with third-party generative AI sites:

  - Devices are onboarded to Microsoft Purview, which is required for:

    - Gaining visibility into sensitive information that's shared with third-party generative AI sites. For example, a user pastes credit card numbers into ChatGPT.

    - Applying endpoint DLP policies to warn or block users from sharing sensitive information with third-party generative AI sites. For example, a user identified as elevated risk in Adaptive Protection is blocked with the option to override when they paste credit card numbers into ChatGPT.

  - The Microsoft Purview browser extension is deployed to users. The extension is required to discover visits to third-party generative AI sites.
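The prerequisites above can be summarized as a checklist. The following Python sketch is a hypothetical model, not a Microsoft Purview API. `TenantState`, `missing_prerequisites`, and the scenario names `"copilot"` and `"third_party"` are assumptions made for illustration. The sketch also simplifies by treating device onboarding and the browser extension as both required for third-party monitoring.

```python
from dataclasses import dataclass


@dataclass
class TenantState:
    """Hypothetical snapshot of the settings DSPM for AI depends on (not a real API)."""
    has_permissions: bool
    copilot_licensed: bool
    auditing_enabled: bool
    devices_onboarded: bool
    browser_extension_deployed: bool


def missing_prerequisites(state: TenantState, scenario: str) -> list[str]:
    """Return the unmet prerequisites for a hypothetical monitoring scenario.

    scenario is "copilot" (interactions with Copilot) or
    "third_party" (interactions with third-party generative AI sites).
    """
    missing = []
    # Permissions are needed for every scenario.
    if not state.has_permissions:
        missing.append("permissions")
    if scenario == "copilot":
        if not state.copilot_licensed:
            missing.append("Microsoft 365 Copilot license")
        if not state.auditing_enabled:
            missing.append("Microsoft Purview auditing")
    elif scenario == "third_party":
        # Simplification: onboarding covers visibility and endpoint DLP,
        # and the browser extension covers site-visit discovery.
        if not state.devices_onboarded:
            missing.append("device onboarding")
        if not state.browser_extension_deployed:
            missing.append("Microsoft Purview browser extension")
    else:
        raise ValueError(f"unknown scenario: {scenario!r}")
    return missing


# Example: users are licensed, but auditing was turned off.
state = TenantState(True, True, False, False, True)
print(missing_prerequisites(state, "copilot"))  # ['Microsoft Purview auditing']
```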

You'll find more information about the prerequisites for auditing, device onboarding, and the browser extension in Data Security Posture Management for AI: Navigate to Overview > Get started section.

For a list of currently supported third-party AI apps, see Supported AI sites by Microsoft Purview for data security and compliance protections.

Note

Although administrative units are supported for reports and activity explorer in Microsoft Purview Data Security Posture Management for AI, they're not supported for the one-click policies. This means that an administrator who is assigned to a specific administrative unit will see results only for users in that assigned administrative unit, but can create policies for all users.

One-click policies from Data Security Posture Management for AI

After the default policies are created, you can view and edit them at any time from their respective solution areas in the portal. For example, you might want to scope the policies to specific users during testing or for business requirements, or add or remove the classifiers that are used to detect sensitive information. Use the Policies page to quickly navigate to the right place in the portal.

If you delete any of these policies, the policy's status on the Policies page displays PendingDeletion, and the policy continues to show as created in its recommendation card until the deletion process is complete.

View and edit sensitivity labels and their policies independently from Data Security Posture Management for AI by navigating to Information Protection in the portal. For more information, use the configuration links in Default labels and policies to protect your data.

For more information about the supported DLP actions and which platforms support them, see the first two rows in the table from Endpoint activities you can monitor and take action on.

For the default policies that use Adaptive Protection, this capability is turned on if it isn't already on, and it uses default risk levels for all users and groups to dynamically enforce protection actions. For more information, see Quick setup.

Note

Default policies that were created while Data Security Posture Management for AI was in preview under the name Microsoft Purview AI Hub won't be changed. For example, these policy names keep their Microsoft AI Hub - prefix.

Default policies for data discovery using Data Security Posture Management for AI

DLP policy: DSPM for AI: Detect sensitive info added to AI sites

This policy discovers sensitive content that's pasted or uploaded to AI sites in Microsoft Edge, Chrome, and Firefox. It covers all users and groups in your org in audit mode only.

Insider risk management policy: DSPM for AI - Detect when users visit AI sites

This policy detects when users use a browser to visit AI sites.

Insider risk management policy: DSPM for AI - Detect risky AI usage

This policy helps calculate user risk by detecting risky prompts and responses in Microsoft 365 Copilot and other generative AI apps.

Insider risk management policy: DSPM for AI - Unethical behavior in Copilot

This policy detects sensitive information in prompts and responses in Microsoft 365 Copilot. This policy covers all users and groups in your organization.

Default policies from data security to help you protect sensitive data used in generative AI

DLP policy: DSPM for AI - Block sensitive info from AI sites

This policy uses Adaptive Protection to apply block with override for users at elevated risk who attempt to paste or upload sensitive information to other AI apps in Edge, Chrome, and Firefox. This policy covers all users and groups in your org in test mode.

Information Protection

This option creates default sensitivity labels and sensitivity label policies.

If you've already configured sensitivity labels and their policies, this configuration is skipped.

Activity explorer events

Use the following information to help you understand the events you might see in the activity explorer from Data Security Posture Management for AI. References to a generative AI site can include Microsoft 365 Copilot, Microsoft 365 Copilot Chat and other Microsoft copilots, and third-party AI sites.

| Event | Description |
|---|---|
| AI interaction | User interacted with a generative AI site. Details include the prompts and responses. For Microsoft 365 Copilot and Microsoft 365 Copilot Chat, this event requires auditing to be turned on. |
| AI website visit | User browsed to a generative AI site. |
| DLP rule match | A data loss prevention rule was matched when a user interacted with a generative AI site. Includes DLP for Microsoft 365 Copilot. |
| Sensitive info types | Sensitive information types were found while a user interacted with a generative AI site. For Microsoft 365 Copilot and Microsoft 365 Copilot Chat, this event requires auditing to be turned on. |

The AI interaction event doesn't always display text for the Copilot prompt and response. Sometimes, the prompt and response span consecutive entries. Other scenarios where the text might not be displayed include:

- Copilot for Word, when a user selects Inspire me for an existing document, no prompt is displayed

- Copilot for Word, when a document doesn't have content or has content but isn't saved, no prompt or response is displayed

- Copilot for Excel, when a user asks to generate data insights, no prompt or response is displayed

- Copilot for Excel, when a user asks to highlight cells or format, no prompt or response is displayed

- Copilot for PowerPoint, when a presentation isn't saved, no prompt or response is displayed

- Copilot for Teams, no prompt is displayed

- Copilot for Whiteboard, no prompt or response is displayed

- Copilot in Forms, no prompt or response is displayed

The Sensitive info types detected event doesn't display the user risk level.

Information protection considerations for Copilot

Microsoft 365 Copilot and Microsoft 365 Copilot Chat can access data stored within your Microsoft 365 tenant, including mailboxes in Exchange Online and documents in SharePoint or OneDrive.

In addition to accessing Microsoft 365 content, Copilot can also use content from the specific file you're working on in the context of an Office app session, regardless of where that file is stored, such as local storage, network shares, cloud storage, or a USB stick. When a user has files open in an app, this access is often referred to as data in use.

Before you deploy licenses for Copilot, make sure you're familiar with the following details that help you strengthen your data protection solutions:

If content grants a user VIEW usage rights but not EXTRACT:

- When a user has this content open in an app, they won't be able to use Copilot.

- Copilot won't summarize this content but can reference it with a link so the user can then open and view the content outside Copilot.

You can further prevent Copilot from summarizing labeled files (whether encrypted or not) that are identified by a Microsoft Purview Data Loss Prevention policy. Copilot won't summarize this content but can reference it with a link so the user can then open and view the content outside Copilot.

Just like your Office apps, Copilot can access sensitivity labels from your organization, but not other organizations. For more information about labeling support across organizations, see Support for external users and labeled content.

An advanced PowerShell setting for sensitivity labels can prevent Office apps from sending content to some connected experiences, including Microsoft 365 Copilot.

Copilot can't access unopened documents in SharePoint and OneDrive when they're labeled and encrypted with user-defined permissions. Copilot can access these documents for a user when they're open in the app (data in use).

Copilot can't access unopened documents in SharePoint that have been configured with a default sensitivity label that extends SharePoint permissions to downloaded documents.

Sensitivity labels that are applied to groups and sites (also known as "container labels") aren't inherited by items in those containers. As a result, the items won't display their container label in Copilot and can't support sensitivity label inheritance. For example, Teams channel chat messages that are summarized from a team that's labeled as Confidential won't display that label for sensitivity context in Microsoft 365 Copilot Chat. Similarly, content from SharePoint site pages and lists won't display the sensitivity label of their container.

If you're using SharePoint information rights management (IRM) library settings that restrict users from copying text, be aware that usage rights are applied when files are downloaded, and not when they're created or uploaded to SharePoint. If you don't want Copilot to summarize these files when they're at rest, use sensitivity labels that apply encryption without the EXTRACT usage right.

Unlike other automatic labeling scenarios, when you create new content, an inherited label replaces a lower-priority label that was manually applied.

When an inherited sensitivity label can't be applied, the text won't be added to the destination item. For example:

- The destination item is read-only

- The destination item is already encrypted and the user doesn't have permissions to change the label (requires EXPORT or FULL CONTROL usage rights)

- The inherited sensitivity label isn't published to the user
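The conditions above can be read as a single check that runs before Copilot adds text to the destination item. This Python sketch is a hypothetical model of that documented behavior, not Copilot's implementation. `DestinationItem` and `can_apply_inherited_label` are illustrative names, and usage rights are represented by their Rights Management names (EXPORT, and OWNER for Full control).

```python
from dataclasses import dataclass, field


@dataclass
class DestinationItem:
    """Hypothetical model of the item that Copilot would add text to."""
    read_only: bool = False
    encrypted: bool = False
    # Usage rights the current user has on the item, such as {"VIEW", "EDIT"}.
    user_rights: set[str] = field(default_factory=set)


def can_apply_inherited_label(dest: DestinationItem, label: str,
                              published_labels: set[str]) -> bool:
    """Return False for any documented case where the inherited label can't be applied.

    When this returns False, the text isn't added to the destination item.
    """
    if dest.read_only:
        return False
    # Changing the label on encrypted content requires EXPORT or Full control (OWNER).
    if dest.encrypted and not (dest.user_rights & {"EXPORT", "OWNER"}):
        return False
    # The inherited label must be published to the user.
    if label not in published_labels:
        return False
    return True


published = {"General", "Confidential"}
print(can_apply_inherited_label(DestinationItem(), "Confidential", published))  # True
print(can_apply_inherited_label(
    DestinationItem(encrypted=True, user_rights={"VIEW", "EDIT"}),
    "Confidential", published))  # False
```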

If a user asks Copilot to create new content from labeled and encrypted items, label inheritance isn't supported when the encryption is configured for user-defined permissions or if the encryption was applied independently from the label. The user won't be able to send this data to the destination item.

Because Double Key Encryption (DKE) is intended for your most sensitive data that is subject to the strictest protection requirements, Copilot can't access this data. As a result, items protected by DKE won't be returned by Copilot, and if a DKE item is open (data in use), you won't be able to use Copilot in the app.

Sensitivity labels that protect Teams meetings and chat aren't currently recognized by Copilot. For example, data returned from a meeting chat or channel chat won't display an associated sensitivity label, copying chat data can't be prevented for a destination item, and the sensitivity label can't be inherited. This limitation doesn't apply to meeting invites, responses, and calendar events that are protected by sensitivity labels.

For Microsoft 365 Copilot Chat (formerly known as Business Chat, Graph-grounded chat, and Microsoft 365 Chat):

- When meeting invites have a sensitivity label applied, the label is applied to the body of the meeting invite but not to the metadata, such as date and time, or recipients. As a result, questions based just on the metadata return data without the label. For example, "What meetings do I have on Monday?" Questions that include the meeting body, such as the agenda, return the data as labeled.

- If content is encrypted independently from its applied sensitivity label, and that encryption doesn't grant the user EXTRACT usage rights (but includes the VIEW usage right), the content can be returned by Copilot and therefore sent to a source item. For example, this configuration can occur if a user applies Office restrictions from Information Rights Management to a document that's labeled "General" when that label doesn't apply encryption.

- When the returned content has a sensitivity label applied, users won't see the Edit in Outlook option because this feature isn't currently supported for labeled data.

- If you're using the extension capabilities that include plugins and the Microsoft Graph Connector, sensitivity labels and encryption that are applied to this data from external sources aren't recognized by Microsoft 365 Copilot Chat. Most of the time this limitation won't apply because the data is unlikely to support sensitivity labels and encryption, although one exception is Power BI data. You can always disconnect the external data sources by using the Microsoft 365 admin center to turn off those plugins for users, and disconnect connections that use a Graph API connector.

App-specific exceptions:

Microsoft 365 Copilot in Outlook: To use Microsoft 365 Copilot with encrypted items in Outlook, you need the following minimum versions of Outlook:

- Outlook (Classic) for Windows: Starting with version 2408 in Current Channel and Monthly Enterprise Channel

- Outlook for Mac: Version 16.86.609+

- Outlook for iOS: Version 4.2420.0+

- Outlook for Android: Version 4.2420.0+

- Outlook on the web: Yes

- New Outlook for Windows: Yes
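If you inventory Outlook clients before rollout, you can check them against the minimum versions above in code. This Python sketch rests on several assumptions. The client keys are hypothetical, and versions are compared as tuples of integers. Classic Outlook for Windows is reduced to its version number (such as 2408) in Current Channel or Monthly Enterprise Channel, so other channels and build numbers are ignored.

```python
from typing import Optional

# Minimum versions from the list above; None means no minimum is listed ("Yes").
MIN_VERSIONS: dict[str, Optional[tuple[int, ...]]] = {
    "outlook_classic_windows": (2408,),  # Current Channel / Monthly Enterprise Channel
    "outlook_mac": (16, 86, 609),
    "outlook_ios": (4, 2420, 0),
    "outlook_android": (4, 2420, 0),
    "outlook_web": None,
    "new_outlook_windows": None,
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as "16.86.609" into (16, 86, 609)."""
    return tuple(int(part) for part in text.split("."))


def supports_encrypted_items(client: str, version: Optional[str] = None) -> bool:
    """Return True if the client meets the minimum version for encrypted items."""
    minimum = MIN_VERSIONS[client]
    if minimum is None:
        return True
    if version is None:
        return False  # a version is needed to compare against the minimum
    # Tuple comparison is element by element, so (16, 87, 0) >= (16, 86, 609).
    return parse_version(version) >= minimum


print(supports_encrypted_items("outlook_mac", "16.86.609"))  # True
print(supports_encrypted_items("outlook_ios", "4.2419.3"))   # False
```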

Microsoft 365 Copilot in Edge, Microsoft 365 Copilot in Windows: Unless data loss prevention (DLP) is used in Edge, Copilot can reference encrypted content from the active browser tab in Edge when that content doesn't grant the user EXTRACT usage rights. For example, the encrypted content is from Office for the web or Outlook for the web.

Will an existing label be overridden for sensitivity label inheritance?

Summary of outcomes when Copilot automatically applies protection with sensitivity label inheritance:

| Existing label | Overridden by sensitivity label inheritance? |
|---|---|
| Manually applied, lower priority | Yes |
| Manually applied, higher priority | No |
| Automatically applied, lower priority | Yes |
| Automatically applied, higher priority | No |
| Default label from policy, lower priority | Yes |
| Default label from policy, higher priority | No |
| Default sensitivity label for a document library, lower priority | Yes |
| Default sensitivity label for a document library, higher priority | No |
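The table reduces to one rule: how the existing label was applied doesn't matter, only its priority relative to the inherited label. The following Python sketch expresses that rule. `HowApplied` and `inheritance_overrides` are hypothetical names, and priorities are plain integers where a larger number is assumed to mean higher priority. The table doesn't cover equal priority (the same label), so the sketch treats that case as no override.

```python
from enum import Enum


class HowApplied(Enum):
    """How the existing label got onto the item (the rows of the table above)."""
    MANUAL = "Manually applied"
    AUTOMATIC = "Automatically applied"
    POLICY_DEFAULT = "Default label from policy"
    LIBRARY_DEFAULT = "Default sensitivity label for a document library"


def inheritance_overrides(existing_priority: int, how_applied: HowApplied,
                          inherited_priority: int) -> bool:
    """Return True if the inherited label replaces the existing label.

    how_applied is accepted only to mirror the table; it doesn't change the result.
    Assumption: a larger number means a higher-priority label.
    """
    return existing_priority < inherited_priority


for how in HowApplied:
    lower = inheritance_overrides(1, how, 2)
    higher = inheritance_overrides(3, how, 2)
    print(f"{how.value}: lower -> {lower}, higher -> {higher}")
```

Keeping `how_applied` in the signature is deliberate. It shows that every row of the table was considered, even though only priority affects the outcome.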

Copilot honors existing protection with the EXTRACT usage right

Although you might not be familiar with the individual usage rights for encrypted content, they've been around a long time: from Windows Server Rights Management, to Active Directory Rights Management, to the cloud version that became Azure Information Protection with the Azure Rights Management service.

If you've ever received a "Do Not Forward" email, it's using usage rights to prevent you from forwarding the email after you've been authenticated. As with other bundled usage rights that map to common business scenarios, a Do Not Forward email grants the recipient usage rights that control what they can do with the content, and it doesn't include the FORWARD usage right. In addition to not forwarding, you can't print this Do Not Forward email, or copy text from it.

The usage right that grants permission to copy text is EXTRACT, with the more user-friendly, common name of Copy. It's this usage right that determines whether Copilot can display text to the user from encrypted content.

Note

Because the Full control (OWNER) usage right includes all the usage rights, EXTRACT is automatically included with Full control.

When you use the Microsoft Purview portal or the Microsoft Purview compliance portal to configure a sensitivity label to apply encryption, the first choice is whether to assign the permissions now or let users assign the permissions. If you assign them now, you either select a predefined permission level with a preset group of usage rights, such as Co-Author or Reviewer, or select custom permissions, where you choose individual usage rights from those available.

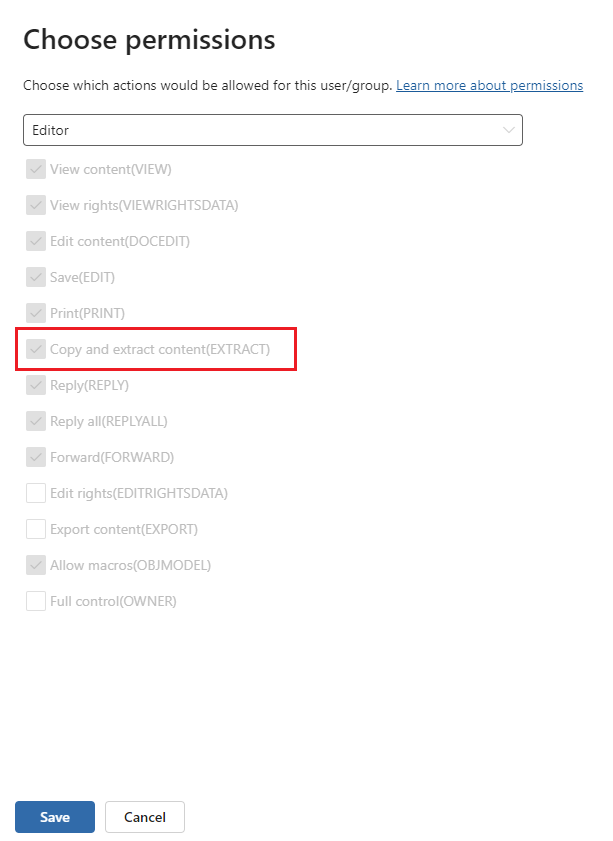

In the Microsoft Purview portal, the EXTRACT usage right is displayed as Copy and extract content (EXTRACT). For example, the default permission level selected is Editor, where you see Copy and extract content (EXTRACT) is included. As a result, content protected with this encryption configuration can be returned by Copilot.

If you select Custom from the dropdown box, and then Full control (OWNER) from the list, this configuration will also grant the EXTRACT usage right.

Note

The person applying the encryption always has the EXTRACT usage right, because they are the Rights Management owner. This special role automatically includes all usage rights and some other actions, which means that content a user has encrypted themselves is always eligible to be returned to them by Microsoft 365 Copilot and Microsoft 365 Copilot Chat. The configured usage restrictions apply to other people who are authorized to access the content.

Alternatively, if you select the encryption configuration to let users assign permissions, for Outlook, this configuration includes predefined permissions for Do Not Forward and Encrypt-Only. The Encrypt-Only option, unlike Do Not Forward, does include the EXTRACT usage right.

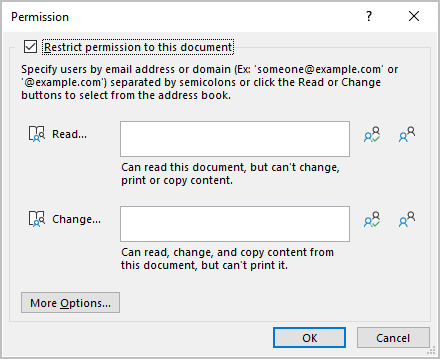
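The rules in this section reduce to a small decision. Copilot can display text from encrypted content only if the user's effective usage rights include EXTRACT. Full control (OWNER) implies EXTRACT, and the Rights Management owner always qualifies. This Python sketch models that decision under several assumptions:

- The function names are hypothetical.
- `ALL_RIGHTS` is a simplified subset of the Rights Management usage rights.
- The Do Not Forward and Encrypt-Only sets are illustrative partial lists. They reflect only what this article says about them: Do Not Forward lacks FORWARD, PRINT, and EXTRACT, and Encrypt-Only includes EXTRACT.

```python
# Simplified subset of Rights Management usage rights, for illustration only.
ALL_RIGHTS = frozenset({
    "VIEW", "EDIT", "PRINT", "EXTRACT", "FORWARD",
    "REPLY", "REPLYALL", "EXPORT", "OWNER",
})

# Illustrative partial sets, not the complete official definitions.
DO_NOT_FORWARD = {"VIEW", "REPLY", "REPLYALL"}  # no FORWARD, PRINT, or EXTRACT
ENCRYPT_ONLY = {"VIEW", "EDIT", "PRINT", "EXTRACT", "FORWARD", "REPLY", "REPLYALL"}


def effective_rights(granted: set[str]) -> frozenset[str]:
    """Full control (OWNER) includes every usage right."""
    if "OWNER" in granted:
        return ALL_RIGHTS
    return frozenset(granted)


def copilot_can_return_text(granted: set[str], is_rms_owner: bool = False) -> bool:
    """Model of whether Copilot can display text from encrypted content to this user."""
    # The person who applied the encryption is the Rights Management owner,
    # which always includes EXTRACT.
    if is_rms_owner:
        return True
    return "EXTRACT" in effective_rights(granted)


print(copilot_can_return_text(DO_NOT_FORWARD))               # False
print(copilot_can_return_text(ENCRYPT_ONLY))                 # True
print(copilot_can_return_text({"OWNER"}))                    # True
print(copilot_can_return_text({"VIEW"}, is_rms_owner=True))  # True
```

The `granted` argument should hold the rights actually granted to the user on the content, not the rights in the label configuration. This matches the point that Copilot honors the EXTRACT usage right however it was applied.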

When you select custom permissions for Word, Excel, and PowerPoint, users select their own permissions in the Office app when they apply the sensitivity label. There are currently two versions of the dialog box. In the older version, users are told that of the two selections, Read doesn't include permission to copy content, but Change does. These references to copying refer to the EXTRACT usage right. If the user selects More Options, they can add the EXTRACT usage right to Read by selecting Allow users with read access to copy content.

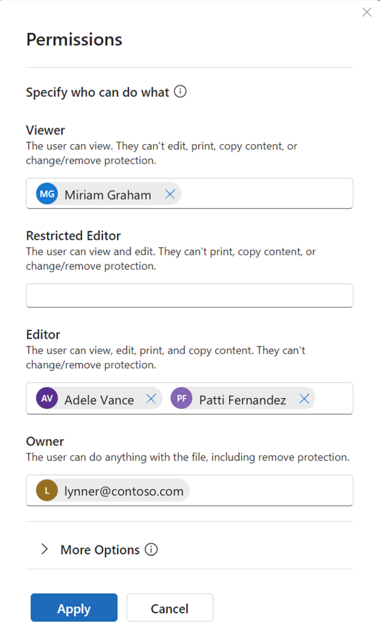

In the latest version of the dialog box, Read and Change are replaced with permission levels. Permission levels whose descriptions state that copying isn't allowed don't include the EXTRACT usage right. The permission levels that do include EXTRACT are Editor and Owner.

Tip

If you need to check whether a document you're authorized to view includes the EXTRACT usage right, open it in the Windows Office app and customize the status bar to show Permissions. Select the icon next to the sensitivity label name to display My Permission. View the value for Copy, which maps to the EXTRACT usage right, and confirm whether it displays Yes or No.

For emails, if the permissions aren't displayed at the top of the message in Outlook for Windows, select the information banner with the label name, and then select View Permission.

Copilot honors the EXTRACT usage right for a user, however it was applied to the content. Most times, when the content is labeled, the usage rights granted to the user match those from the sensitivity label configuration. However, there are some situations that can result in the content's usage rights being different from the applied label configuration:

- The sensitivity label is applied after usage rights are already applied

- Usage rights are applied after a sensitivity label has been applied

For more information about configuring a sensitivity label for encryption, see Restrict access to content by using sensitivity labels to apply encryption.

For technical details about the usage rights, see Configure usage rights for Azure Information Protection.

Compliance management considerations for Copilot

Compliance management for interactions with Copilot extends across Word, Excel, PowerPoint, Outlook, Teams, Loop and Copilot Pages, Whiteboard, OneNote, and Microsoft 365 Copilot Chat (formerly Business Chat, Graph-grounded chat, and Microsoft 365 Chat).

Note

Compliance management for Microsoft 365 Copilot Chat includes prompts and responses to and from the public web when users are signed in and select the Work option rather than Web.

Compliance tools identify the source of Copilot interactions by the name of the app. For example, Copilot in Word, Copilot in Teams, and Microsoft 365 Chat (for Microsoft 365 Copilot Chat).

Before your organization uses Microsoft 365 Copilot and Microsoft 365 Copilot Chat, make sure you're familiar with the following details to support your compliance management solutions:

The audit data is designed for data security and compliance purposes, and provides comprehensive visibility into Copilot interactions for these use cases. For example, to discover data oversharing risks or to collect interactions for regulatory compliance or legal purposes. It's not intended to be used as the basis for Copilot usage reporting.

Note

Any aggregated metrics that you build on top of this audit data, such as "prompt count" or "active user count", might not be consistent with the corresponding data points in the official Copilot usage reports provided by Microsoft. Microsoft can't provide guidance on how to use audit log data as the basis for usage reporting, nor can Microsoft guarantee that aggregated usage metrics built on top of audit log data will match similar usage metrics reported in other tools.

To access accurate information about Copilot usage, use one of the following reports: the Microsoft 365 Copilot usage report in the Microsoft 365 admin center or the Copilot Dashboard in Viva Insights.

Auditing captures the search activity of Copilot, but not the actual user prompt or response. For this information, use eDiscovery, or use AI interaction from the Activity explorer page in Microsoft Purview Data Security Posture Management for AI.

Admin-related changes for auditing Copilot aren't yet supported.

Device identity information isn't currently included in the audit details.

Retention policies for Copilot interactions don't inform users when messages are deleted as a result of a retention policy.

App-specific exceptions:

- Microsoft 365 Copilot in Teams:

  - If transcripts are turned off, the capabilities for auditing, eDiscovery, and retention aren't supported.

  - If transcripts are referenced, this action isn't captured for auditing.

- For Microsoft 365 Copilot Chat, Copilot currently can't retain referenced files that it returns to users as cloud attachments. Files that users reference can be retained as cloud attachments.