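Metrics in Application Insights

Application Insights supports three different types of metrics: standard (pre-aggregated), log-based, and custom metrics. Each type brings unique value for monitoring application health, diagnostics, and analytics. Developers who are instrumenting applications can decide which type of metric best suits a particular scenario, based on the size of the application, the expected volume of telemetry, and the business requirements for metric precision and alerting. This article explains the differences between all supported metric types.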

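Standard metrics

Standard metrics in Application Insights are predefined metrics that the service automatically collects and monitors. They cover a wide range of performance and usage indicators, such as CPU usage, memory consumption, request rates, and response times, and they provide a comprehensive overview of your application's health and performance without any extra configuration. Standard metrics are pre-aggregated during collection and stored as time series in a specialized repository with only key dimensions, which gives them better performance at query time. This makes standard metrics the best choice for near real-time alerting on metric dimensions and for more responsive dashboards.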

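Log-based metrics

Log-based metrics in Application Insights are a query-time concept, represented as a time series on top of your application's log data. The underlying logs aren't pre-aggregated at collection or storage time, and they retain all properties of each log entry. Because of this retention, you can use log properties as dimensions on log-based metrics at query time for metric chart filtering and metric splitting, which gives log-based metrics superior analytical and diagnostic value. However, telemetry volume reduction techniques such as sampling and telemetry filtering, which are commonly used to monitor applications that generate large volumes of telemetry, affect the number of log entries collected and therefore reduce the accuracy of log-based metrics.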
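To make the accuracy trade-off concrete, here is a minimal, self-contained Python sketch. It assumes a deliberately simplified sampler that keeps every Nth record and scales the count back up; Application Insights' real sampling works differently, and the record layout and helper names (`sample_every_nth`, `log_based_metrics`) are hypothetical. The point is only that a count can still be estimated from the retained records, while an aggregate such as the maximum can silently lose a rare outlier.

```python
# Hypothetical sketch: how sampling can distort a log-based metric.
# Assumption: a simplified "keep every Nth record" sampler, NOT the
# algorithm Application Insights actually uses.


def sample_every_nth(records, n):
    """Keep every nth record, starting with the first one."""
    return records[::n]


def log_based_metrics(records, sampling_factor=1):
    """Compute metrics at query time from the records that reached the log store.

    The count is multiplied by the sampling factor to estimate the original
    volume; the maximum can only be taken over the records that were kept.
    """
    durations = [r["duration_ms"] for r in records]
    return {
        "count": len(durations) * sampling_factor,
        "max_duration_ms": max(durations),
    }


# Eight request records; the slow outlier (950 ms) is the third record.
requests = [{"duration_ms": d} for d in [100, 120, 950, 110, 105, 130, 115, 140]]

exact = log_based_metrics(requests)
sampled = log_based_metrics(sample_every_nth(requests, 4), sampling_factor=4)

print(exact)    # {'count': 8, 'max_duration_ms': 950}
print(sampled)  # {'count': 8, 'max_duration_ms': 105}
```

The estimated count happens to match here, but the 950 ms request never reaches the log store, so the sampled maximum (105 ms) hides it entirely.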

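Custom metrics (preview)

Custom metrics in Application Insights let you define and track measurements that are specific to your application. You create these metrics by instrumenting your code to send custom telemetry data to Application Insights. Custom metrics give you the flexibility to monitor any aspect of your application that standard metrics don't cover, providing deeper insight into your application's behavior and performance.

For more information, see Custom metrics in Azure Monitor (preview).

Note

Application Insights also provides a feature called the Live Metrics stream, which allows near real-time monitoring of your web applications and doesn't store any telemetry data.

Metrics comparison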

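| Feature | Standard metrics | Log-based metrics | Custom metrics |
|---|---|---|---|
| Data source | Pre-aggregated time series data collected during runtime. | Derived from log data by using Kusto queries. | User-defined metrics collected via the Application Insights SDK or API. |
| Granularity | Fixed intervals (1 minute). | Depends on the granularity of the log data itself. | Flexible granularity based on user-defined metrics. |
| Accuracy | High, not affected by log sampling. | Can be affected by sampling and filtering. | High accuracy, especially when using pre-aggregated methods like GetMetric. |
| Cost | Included in Application Insights pricing. | Based on log data ingestion and query costs. | See Pricing model and retention. |
| Configuration | Automatically available with minimal configuration. | Requires configuring log queries to extract the desired metrics from log data. | Requires custom implementation and configuration in code. |
| Query performance | Fast, because of pre-aggregation. | Slower, because it involves querying log data. | Depends on data volume and query complexity. |
| Storage | Stored as time series data in the Azure Monitor metrics store. | Stored as logs in a Log Analytics workspace. | Stored in both Log Analytics and the Azure Monitor metrics store. |
| Alerting | Supports real-time alerting. | Allows complex alerting scenarios based on detailed log data. | Flexible alerting based on user-defined metrics. |
| Service limits | Subject to Application Insights limits. | Subject to Log Analytics workspace limits. | Limited by the quota for free metrics and the cost of extra dimensions. |
| Use cases | Real-time monitoring, performance dashboards, and quick insights. | Detailed diagnostics, troubleshooting, and in-depth analysis. | Tailored performance indicators and business-specific metrics. |
| Examples | CPU usage, memory usage, request duration. | Request counts, exception tracking, dependency calls. | Custom application-specific metrics, such as user engagement and feature usage. |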

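Metrics pre-aggregation

OpenTelemetry SDKs and newer Application Insights SDKs (Classic API) pre-aggregate metrics during collection to reduce the volume of data sent from the SDK to the telemetry channel endpoint. This process applies to the standard metrics sent by default, so their accuracy isn't affected by sampling or filtering. It also applies to custom metrics sent by using the OpenTelemetry API or GetMetric and TrackValue, which results in less data ingestion and lower cost. If your version of the Application Insights SDK supports GetMetric and TrackValue, it's the preferred method of sending custom metrics.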
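The following minimal Python sketch shows the idea behind pre-aggregation: instead of sending every measurement, the client keeps a count, sum, minimum, and maximum for each combination of dimension values in each interval. The 60-second interval mirrors the 1-minute granularity listed in the comparison table; the `Aggregate` class and `pre_aggregate` function are hypothetical names, and this is not how the OpenTelemetry SDK or GetMetric are implemented internally.

```python
# Hypothetical sketch of client-side pre-aggregation; not the actual
# OpenTelemetry SDK or GetMetric implementation.
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Aggregate:
    """Summary of all values recorded for one time series in one interval."""
    count: int = 0
    total: float = 0.0
    minimum: float = float("inf")
    maximum: float = float("-inf")

    def add(self, value):
        self.count += 1
        self.total += value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)


def pre_aggregate(measurements, interval_s=60):
    """Fold raw (timestamp_s, value, dimensions) tuples into one Aggregate
    per (interval start, dimension values) key."""
    buckets = defaultdict(Aggregate)
    for timestamp_s, value, dims in measurements:
        interval_start = timestamp_s - timestamp_s % interval_s
        key = (interval_start, tuple(sorted(dims.items())))
        buckets[key].add(value)
    return dict(buckets)


raw = [
    (0, 120.0, {"route": "/home"}),
    (15, 80.0, {"route": "/home"}),
    (45, 300.0, {"route": "/cart"}),
    (61, 100.0, {"route": "/home"}),
]
aggregated = pre_aggregate(raw)
print(len(raw), "raw values ->", len(aggregated), "aggregates")  # 4 raw values -> 3 aggregates
```

With real traffic, many measurements per minute collapse into a handful of aggregates, which is where the reduction in transmitted and ingested data comes from.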

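For SDKs that don't implement pre-aggregation (that is, older versions of the Application Insights SDKs or browser instrumentation), the Application Insights backend still populates the new metrics by aggregating the events received by the Application Insights telemetry channel endpoint. For custom metrics, you can use the trackMetric method. Although you don't benefit from a reduced volume of data transmitted over the wire, you can still use the pre-aggregated metrics and get better performance and near real-time dimensional alerting, even with SDKs that don't pre-aggregate metrics during collection.

The telemetry channel endpoint pre-aggregates events before ingestion sampling. For this reason, ingestion sampling never affects the accuracy of pre-aggregated metrics, regardless of the SDK version you use with your application.

The following tables list where pre-aggregation happens for each SDK.

Metrics pre-aggregation with the Azure Monitor OpenTelemetry Distro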

| Current production SDKs | Standard metrics pre-aggregation | Custom metrics pre-aggregation |
|---|---|---|
| ASP.NET Core | SDK | SDK via OpenTelemetry API |
| .NET (via Exporter) | SDK | SDK via OpenTelemetry API |
| Java (3.x) | SDK | SDK via OpenTelemetry API |
| Java native | SDK | SDK via OpenTelemetry API |
| Node.js | SDK | SDK via OpenTelemetry API |
| Python | SDK | SDK via OpenTelemetry API |

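Metrics pre-aggregation with the Application Insights SDK (Classic API)

| Current production SDKs | Standard metrics pre-aggregation | Custom metrics pre-aggregation |
|---|---|---|
| .NET Core and .NET Framework | SDK (V2.13.1+) | SDK (V2.7.2+) via GetMetric; telemetry channel endpoint via TrackMetric |
| Java (2.x) | Telemetry channel endpoint | Telemetry channel endpoint via TrackMetric |
| JavaScript (Browser) | Telemetry channel endpoint | Telemetry channel endpoint via TrackMetric |
| Node.js | Telemetry channel endpoint | Telemetry channel endpoint via TrackMetric |
| Python | Telemetry channel endpoint | SDK via OpenCensus.stats (retired); telemetry channel endpoint via TrackMetric |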

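Warning

The Application Insights Java 2.x SDK is no longer recommended. Use the OpenTelemetry-based Java offering instead.

The OpenCensus Python SDK is retired. We recommend the OpenTelemetry-based Python offering and provide migration guidance.

Metrics pre-aggregation with autoinstrumentation

With autoinstrumentation, the SDK is automatically added to your application code and can't be customized. Custom metrics require manual instrumentation.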

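| Current production SDKs | Standard metrics pre-aggregation | Custom metrics pre-aggregation |
|---|---|---|
| ASP.NET Core | SDK ¹ | Not supported |
| ASP.NET | SDK ² | Not supported |
| Java | SDK | Supported ³ |
| Node.js | SDK | Not supported |
| Python | SDK | Not supported |

Footnotes

- ¹ ASP.NET Core autoinstrumentation on App Service emits standard metrics without dimensions. Manual instrumentation is required for all dimensions.
- ² ASP.NET autoinstrumentation on virtual machines/virtual machine scale sets and on-premises emits standard metrics without dimensions. The same is true for Azure App Service, but the collection level must be set to recommended. Manual instrumentation is required for all dimensions.
- ³ The Java agent used with autoinstrumentation captures metrics emitted by popular libraries and sends them to Application Insights as custom metrics.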

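Custom metrics dimensions and pre-aggregation

All metrics that you send by using OpenTelemetry, trackMetric, or GetMetric and TrackValue API calls are automatically stored in both the metrics store and logs. You can find these metrics in the customMetrics table in Application Insights and in metrics explorer under the custom metric namespace called azure.applicationinsights. Although the log-based version of your custom metric always retains all dimensions, the pre-aggregated version of the metric is stored by default with no dimensions. Retaining custom metric dimensions is a preview feature that you can turn on from the [Usage and estimated costs] tab by selecting [With dimensions] under [Send custom metrics to Azure Metric Store].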

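Quotas

Pre-aggregated metrics are stored as time series in Azure Monitor, and Azure Monitor quotas on custom metrics apply.

Note

Going over the quota might have unintended consequences: Azure Monitor might become unreliable in your subscription or region. To learn how to avoid exceeding the quota, see Design limitations and considerations.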
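Because pre-aggregated metrics are stored as time series, each distinct combination of dimension values can become a separate time series, so the potential number of series grows multiplicatively with every dimension you add. The Python sketch below estimates that upper bound. The dimension names and value counts are hypothetical, and the sketch doesn't encode any actual quota limit; check the Azure Monitor documentation for those values.

```python
# Hypothetical sketch: worst-case number of time series for one metric.
from math import prod


def max_time_series(dimension_cardinalities):
    """Return the product of the number of distinct values per dimension.

    Every combination of dimension values can produce its own time series.
    A metric with no dimensions (an empty mapping) is a single time series.
    """
    return prod(dimension_cardinalities.values())


dims = {"route": 50, "result_code": 8, "cloud_role_instance": 20}
print(max_time_series(dims))  # 8000
print(max_time_series({}))    # 1
```

Dropping only the `route` dimension from this example would shrink the bound from 8,000 to 160 series.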

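Why is collection of custom metrics dimensions turned off by default?

Collection of custom metrics dimensions is turned off by default because, in the future, storing custom metrics with dimensions will be billed separately from Application Insights. Storing nondimensional custom metrics remains free (up to a quota). You can learn about the upcoming pricing model changes on our official pricing page.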

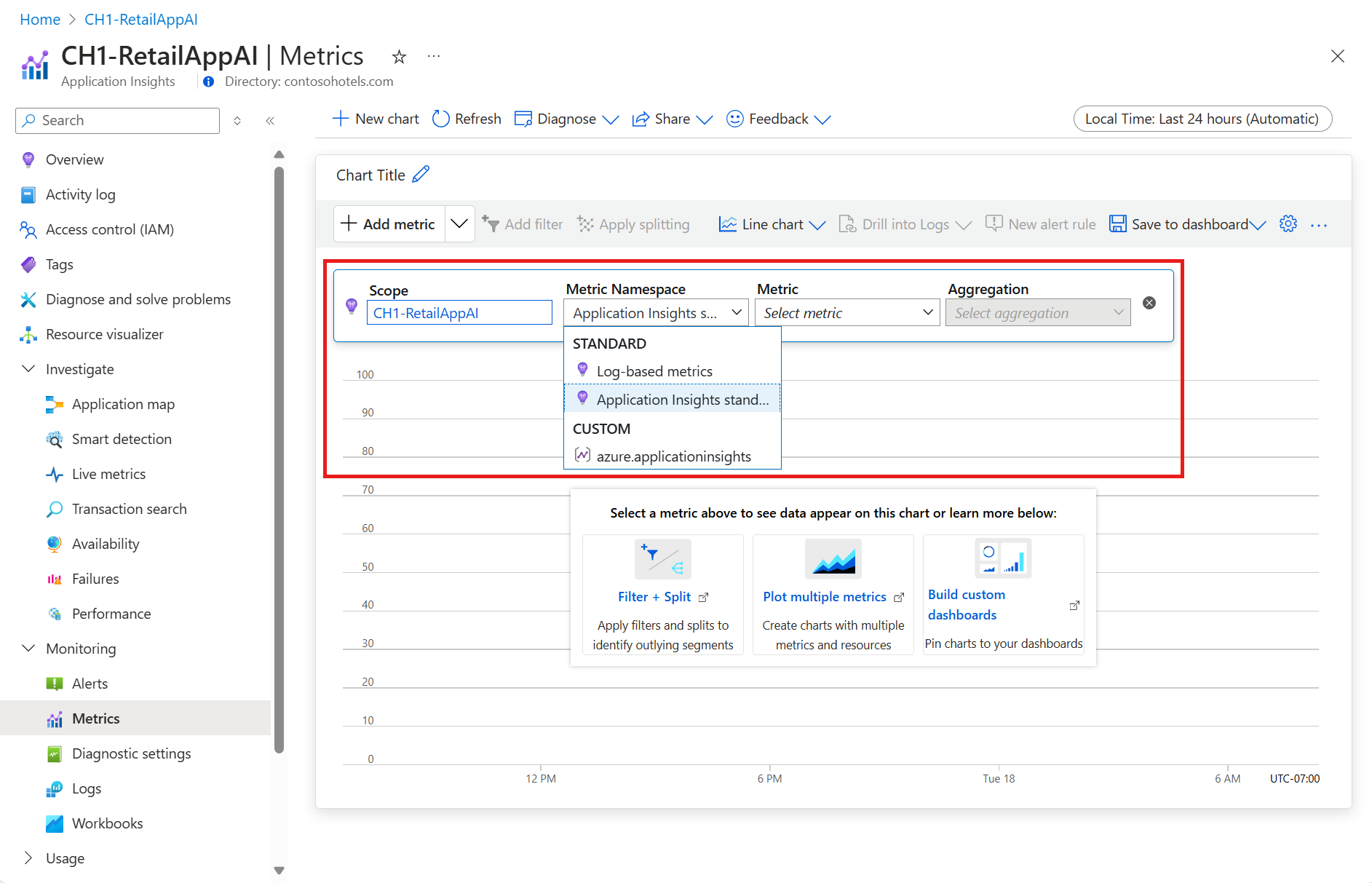

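Create charts and explore metrics

Use Azure Monitor metrics explorer to plot charts from pre-aggregated, log-based, and custom metrics, and to author dashboards with charts. After you select the Application Insights resource you want, use the namespace picker to switch between metrics.

Pricing models for Application Insights metrics

Ingesting metrics into Application Insights, whether log-based or pre-aggregated, generates costs based on the size of the ingested data. For more information, see Azure Monitor Logs pricing details. Your custom metrics, including all their dimensions, are always stored in the Application Insights log store. In addition, a pre-aggregated version of your custom metrics with no dimensions is forwarded to the metrics store by default.

Selecting the [Enable alerting on custom metric dimensions] option to store all dimensions of the pre-aggregated metrics in the metrics store can generate extra costs based on custom metrics pricing.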

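Available metrics

Availability metrics

Metrics in the Availability category let you see the health of your web application as observed from points around the world. Configure availability tests to start using any metrics from this category.

Availability (availabilityResults/availabilityPercentage)

The Availability metric shows the percentage of web test runs that didn't detect any issues. The lowest possible value is 0, which indicates that all web test runs failed. A value of 100 means that all web test runs passed the validation criteria.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Percentage | Avg | Run location, Test name |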
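The Availability metric is a simple pass ratio. As a sketch, assuming each web test run is reduced to a passed/failed flag (a hypothetical simplification of real availability results), the percentage can be computed like this:

```python
def availability_percentage(run_passed):
    """Percentage of web test runs that passed: 0 (all failed) to 100 (all passed)."""
    if not run_passed:
        raise ValueError("at least one test run is required")
    passed = sum(1 for ok in run_passed if ok)
    return 100.0 * passed / len(run_passed)


# Four hypothetical test runs, one of which failed.
print(availability_percentage([True, True, False, True]))  # 75.0
```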

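Availability test duration (availabilityResults/duration)

The Availability test duration metric shows how much time the web test took to run. For multi-step web tests, the metric reflects the total execution time of all steps.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | Run location, Test name, Test result |

Availability tests (availabilityResults/count)

The Availability tests metric reflects the count of web tests run by Azure Monitor.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Run location, Test name, Test result |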

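Browser metrics

Browser metrics are collected by the Application Insights JavaScript SDK from real end-user browsers. They provide great insight into your users' experience with your web app. Browser metrics are typically not sampled, which means that they provide higher precision of usage numbers than server-side metrics, which might be skewed by sampling.

Note

To collect browser metrics, your application must be instrumented with the Application Insights JavaScript SDK.

Browser page load time (browserTimings/totalDuration)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | None |

Client processing time (browserTimings/processingDuration)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | None |

Page load network connect time (browserTimings/networkDuration)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | None |

Receiving response time (browserTimings/receiveDuration)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | None |

Send request time (browserTimings/sendDuration)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | None |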

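Failure metrics

The metrics in Failures show problems with processing requests, dependency calls, and thrown exceptions.

Browser exceptions (exceptions/browser)

This metric reflects the number of exceptions thrown by your application code running in the browser. Only exceptions that are tracked with a trackException() Application Insights API call are included in the metric.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role name |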

Dependency call failures (dependencies/failed)

The number of failed dependency calls.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role instance, Cloud role name, Dependency type, Dependency performance, Is traffic synthetic, Result code, Target of dependency call |

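Exceptions (exceptions/count)

Each time you log an exception to Application Insights, there's a call to the trackException() method of the SDK. The Exceptions metric shows the number of logged exceptions.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role instance, Cloud role name, Device type |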

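Failed requests (requests/failed)

The count of tracked server requests that were marked as failed. By default, the Application Insights SDK automatically marks each server request that returned an HTTP response code of 5xx or 4xx as a failed request. You can customize this logic by modifying the success property of the request telemetry item in a custom telemetry initializer.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role instance, Cloud role name, Is synthetic traffic, Request performance, Result code |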
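The default failure rule, plus an override of the kind you might put in a custom telemetry initializer, can be sketched in Python as follows. This only mirrors the logic described above; the real customization happens in the SDK's own language by setting the success property on the request telemetry item, and `is_failed_request` and its `treat_as_success` parameter are hypothetical.

```python
def is_failed_request(status_code, treat_as_success=frozenset()):
    """Return True if a request with this HTTP status would count as failed.

    Default rule: any 4xx or 5xx response is a failure. `treat_as_success`
    stands in for a custom initializer that flips the success flag, for
    example to stop counting 404 responses as failures.
    """
    if status_code in treat_as_success:
        return False
    return 400 <= status_code <= 599


codes = [200, 404, 500, 302, 404]
print(sum(is_failed_request(c) for c in codes))                          # 3
print(sum(is_failed_request(c, treat_as_success={404}) for c in codes))  # 1
```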

Server exceptions (exceptions/server)

This metric shows the number of server exceptions.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role instance, Cloud role name |

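Performance counters

Use metrics in the Performance counters category to access system performance counters collected by Application Insights.

Available memory (performanceCounters/availableMemory)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| MB/GB (data dependent) | Avg, Max, Min | Cloud role instance |

Exception rate (performanceCounters/exceptionRate)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Avg, Max, Min | Cloud role instance |

HTTP request execution time (performanceCounters/requestExecutionTime)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | Cloud role instance |

HTTP request rate (performanceCounters/requestsPerSecond)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Requests per second | Avg, Max, Min | Cloud role instance |

HTTP requests in application queue (performanceCounters/requestsInQueue)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Avg, Max, Min | Cloud role instance |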

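Process CPU (performanceCounters/processCpuPercentage)

The metric shows how much of the total processor capacity is consumed by the process that's hosting your monitored app.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Percentage | Avg, Max, Min | Cloud role instance |

Note

The metric's range is from 0 to 100 * n, where n is the number of available CPU cores. For example, a metric value of 200% could represent full utilization of two CPU cores or half utilization of four CPU cores, and so on. Process CPU Normalized is an alternative metric, collected by many SDKs, that represents the same value divided by the number of available CPU cores. Its range is therefore 0 to 100.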
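The relationship between Process CPU and Process CPU Normalized is a single division by the core count. A minimal sketch, with a hypothetical function name:

```python
def normalize_process_cpu(process_cpu_percent, cpu_cores):
    """Convert Process CPU (0 to 100 * n) to the Process CPU Normalized scale (0 to 100)."""
    if cpu_cores <= 0:
        raise ValueError("cpu_cores must be a positive number")
    return process_cpu_percent / cpu_cores


print(normalize_process_cpu(200.0, 2))  # 100.0: two cores fully used
print(normalize_process_cpu(200.0, 4))  # 50.0: four cores half used
```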

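Process IO rate (performanceCounters/processIOBytesPerSecond)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Bytes per second | Average, Min, Max | Cloud role instance |

Process private bytes (performanceCounters/processPrivateBytes)

The amount of nonshared memory that the monitored process allocated for its data.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Bytes | Average, Min, Max | Cloud role instance |

Processor time (performanceCounters/processorCpuPercentage)

CPU consumption by all processes running on the monitored server instance.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Percentage | Average, Min, Max | Cloud role instance |

Note

The Processor time metric isn't available for applications hosted in Azure App Service. Use the Process CPU metric to track CPU utilization of web applications hosted in App Service.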

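Server metrics

Dependency calls (dependencies/count)

This metric reflects the number of dependency calls.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role instance, Cloud role name, Dependency performance, Dependency type, Is traffic synthetic, Result code, Successful call, Target of a dependency call |

Dependency duration (dependencies/duration)

This metric reflects the duration of dependency calls.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | Cloud role instance, Cloud role name, Dependency performance, Dependency type, Is traffic synthetic, Result code, Successful call, Target of a dependency call |

Server request rate (requests/rate)

This metric reflects the number of incoming server requests received by your web application.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count per second | Avg | Cloud role instance, Cloud role name, Is traffic synthetic, Request performance, Result code, Successful request |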
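Server request rate is expressed in count per second. Assuming, as a simplification rather than a documented formula, that the rate for an interval is the number of requests received divided by the interval length in seconds, the relationship to the Server requests count looks like this hypothetical helper:

```python
def requests_per_second(request_count, interval_seconds):
    """Average incoming request rate over one aggregation interval (simplified)."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return request_count / interval_seconds


# 1,200 requests received during a 1-minute interval.
print(requests_per_second(1200, 60))  # 20.0
```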

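Server requests (requests/count)

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role instance, Cloud role name, Is traffic synthetic, Request performance, Result code, Successful request |

Server response time (requests/duration)

This metric reflects the time it took for the servers to process incoming requests.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | Cloud role instance, Cloud role name, Is traffic synthetic, Request performance, Result code, Successful request |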

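Usage metrics

Page view load time (pageViews/duration)

This metric reflects the amount of time it took for PageView events to load.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Milliseconds | Avg, Max, Min | Cloud role name, Is traffic synthetic |

Page views (pageViews/count)

The count of PageView events logged with the TrackPageView() Application Insights API.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role name, Is traffic synthetic |

Traces (traces/count)

The count of trace statements logged with the TrackTrace() Application Insights API call.

| Unit of measure | Supported aggregations | Supported dimensions |
|---|---|---|
| Count | Count | Cloud role instance, Cloud role name, Is traffic synthetic, Severity level |

Custom metrics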

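Access log-based metrics directly with the Application Insights REST API

The Application Insights REST API enables programmatic retrieval of log-based metrics. It also supports an optional parameter, ai.include-query-payload, that, when added to the query string as prefer=ai.include-query-payload, prompts the API to return not only the time series data but also the Kusto Query Language (KQL) statement used to fetch it. This parameter is particularly useful for users who want to understand the connection between raw events in Log Analytics and the resulting log-based metric.

To see the KQL behind a metric, pass the ai.include-query-payload parameter in your Application Insights API request.

Note

You don't need to replace DEMO_APP and DEMO_KEY to retrieve the underlying logs query. If you only want the KQL statement, and not the time series data of your own application, you can copy and paste the following URL directly into your browser's address bar.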

api.applicationinsights.io/v1/apps/DEMO_APP/metrics/users/authenticated?api_key=DEMO_KEY&prefer=ai.include-query-payload
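If you'd rather build the request in code, the following Python sketch assembles the same URL with the standard library and defines a helper to fetch it. The `https://` scheme and the helper names (`metric_url`, `fetch_metric`) are assumptions for illustration; `DEMO_APP`, `DEMO_KEY`, and the `users/authenticated` metric ID come from the URL above.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen

API_ROOT = "https://api.applicationinsights.io/v1/apps"


def metric_url(app_id, api_key, metric_id, include_query=True):
    """Build a metrics API URL; optionally ask for the underlying KQL statement."""
    params = {"api_key": api_key}
    if include_query:
        params["prefer"] = "ai.include-query-payload"
    # quote() leaves "/" unescaped, so metric IDs such as "users/authenticated" stay intact.
    return f"{API_ROOT}/{quote(app_id)}/metrics/{quote(metric_id)}?{urlencode(params)}"


def fetch_metric(url, timeout=30):
    """GET the URL and decode the JSON response body (performs a network call)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


url = metric_url("DEMO_APP", "DEMO_KEY", "users/authenticated")
print(url)
# https://api.applicationinsights.io/v1/apps/DEMO_APP/metrics/users/authenticated?api_key=DEMO_KEY&prefer=ai.include-query-payload
```

Calling `fetch_metric(url)` sends the request; the decoded result has the same shape as the output example that follows.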

The following is an example of the KQL statement returned for the metric "Authenticated Users." (In this example, "users/authenticated" is the metric ID.)

```json
{
  "value": {
    "start": "2024-06-21T09:14:25.450Z",
    "end": "2024-06-21T21:14:25.450Z",
    "users/authenticated": {
      "unique": 0
    }
  },
  "@ai.query": "union (traces | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (requests | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (pageViews | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (dependencies | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (customEvents | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (availabilityResults | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (exceptions | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (customMetrics | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (browserTimings | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)) | where notempty(user_AuthenticatedId) | summarize ['users/authenticated_unique'] = dcount(user_AuthenticatedId)"
}
```
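To separate the metric value from the returned KQL statement programmatically, a minimal Python sketch like the following works on a response of the shape shown above. The function name `split_metric_response` is hypothetical, and the sample body abbreviates the `@ai.query` string with `...` for readability.

```python
import json


def split_metric_response(body, metric_id):
    """Return (metric values, KQL statement or None) from a metrics API response body."""
    payload = json.loads(body)
    values = payload["value"][metric_id]
    return values, payload.get("@ai.query")


# Abbreviated copy of the example response; the real "@ai.query" string is much longer.
sample_body = """
{
  "value": {
    "start": "2024-06-21T09:14:25.450Z",
    "end": "2024-06-21T21:14:25.450Z",
    "users/authenticated": {"unique": 0}
  },
  "@ai.query": "union (traces | where ...) | where notempty(user_AuthenticatedId) | summarize ['users/authenticated_unique'] = dcount(user_AuthenticatedId)"
}
"""
values, kql = split_metric_response(sample_body, "users/authenticated")
print(values)                   # {'unique': 0}
print(kql.startswith("union"))  # True
```

When the request omits prefer=ai.include-query-payload, the `@ai.query` key is absent, so the helper returns `None` for the statement instead of raising.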

![Screenshot that shows the Usage and estimated costs tab.](media/metrics-overview/usage-and-costs.png)