Extended eye tracking in Unity

A sample for extended eye tracking is available in a GitHub repository.

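Extended eye tracking is a new capability in HoloLens 2. It is a superset of standard eye tracking, which provides only combined eye gaze data. Extended eye tracking also provides individual eye gaze data and lets applications set different frame rates for the gaze data, such as 30, 60, and 90fps. Other features, such as eye openness and eye vergence, are not supported by HoloLens 2 at this time.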

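The Extended Eye Tracking SDK gives applications access to the data and features of extended eye tracking. It can be used together with the OpenXR APIs or the legacy WinRT APIs.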

This article describes how to use the Extended Eye Tracking SDK in Unity together with the Mixed Reality OpenXR Plugin.

Project setup

- 设置用于 HoloLens 开发的 Unity 项目。

- 选择凝视输入功能

- 从 MRTK 功能工具导入混合现实 OpenXR 插件。

- 将眼动跟踪 SDK NuGet 包导入 Unity 项目。

- 下载并安装 NuGetForUnity 包。

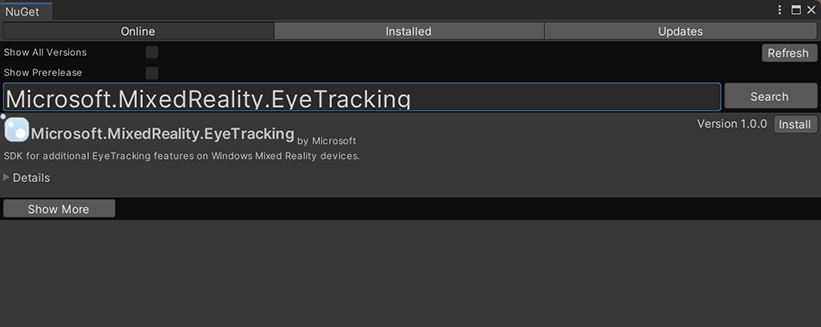

- 在 Unity 编辑器中,转到

NuGet->Manage NuGet Packages,然后搜索Microsoft.MixedReality.EyeTracking - 单击“安装”按钮导入最新版本的 NuGet 包。

- 添加 Unity 帮助程序脚本。

- 将

ExtendedEyeGazeDataProvider.cs脚本从此处添加到 Unity 项目。 - 创建场景,然后将

ExtendedEyeGazeDataProvider.cs脚本附加到任何 GameObject。

- 将

- 使用

ExtendedEyeGazeDataProvider.cs函数并实现逻辑。 - 生成并部署到 HoloLens。

Consume functions of ExtendedEyeGazeDataProvider

Note

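The ExtendedEyeGazeDataProvider script depends on some APIs from the Mixed Reality OpenXR Plugin to convert the coordinates of the gaze data. It does not work if your Unity project uses the deprecated Windows XR plugin or the legacy built-in XR in older Unity versions. To make extended eye tracking work in those scenarios as well: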

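- If you only need access to the frame rate configuration, the Mixed Reality OpenXR Plugin is not required, and you can modify ExtendedEyeGazeDataProvider to keep only the frame rate related logic.

- If you still need access to individual eye gaze data, you need to use the WinRT APIs in Unity. To see how to use the Extended Eye Tracking SDK with the WinRT APIs, refer to the "See also" section.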

The ExtendedEyeGazeDataProvider class wraps the Extended Eye Tracking SDK APIs. It provides functions to get gaze readings either in Unity world space or relative to the main camera.

The following code example consumes ExtendedEyeGazeDataProvider to get the gaze data.

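```csharp
// Fragment: place inside a MonoBehaviour. Requires "using System;" for DateTime.

// Assign in the Inspector to the GameObject that has the provider script attached.
[SerializeField] private ExtendedEyeGazeDataProvider extendedEyeGazeDataProvider;

void Update()
{
    // Use one timestamp so that all readings refer to the same moment.
    DateTime timestamp = DateTime.Now;

    // GazeType is a member type, so it is accessed through the class name, not the instance.
    var leftGazeReadingInWorldSpace = extendedEyeGazeDataProvider.GetWorldSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Left, timestamp);
    var rightGazeReadingInWorldSpace = extendedEyeGazeDataProvider.GetWorldSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Right, timestamp);
    var combinedGazeReadingInWorldSpace = extendedEyeGazeDataProvider.GetWorldSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Combined, timestamp);

    // The same combined gaze, expressed relative to the main camera.
    var combinedGazeReadingInCameraSpace = extendedEyeGazeDataProvider.GetCameraSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Combined, timestamp);
}
```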
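One common use of a world-space gaze reading is hit-testing against colliders in the scene. The sketch below is only an illustration: GazeRaycaster, TryGetGazeTarget, and maxDistance are hypothetical names that are not part of the SDK or of ExtendedEyeGazeDataProvider. Because this article does not list the members of the reading type returned by GetWorldSpaceGazeReading, the helper takes the gaze origin and direction directly as Unity vectors and assumes you have already extracted them from a world-space reading.

```csharp
using UnityEngine;

// Hypothetical helper, not part of the SDK or of ExtendedEyeGazeDataProvider.
public static class GazeRaycaster
{
    // Casts a ray along the gaze and reports the first collider it hits.
    // origin and direction are expected in Unity world space.
    public static bool TryGetGazeTarget(Vector3 origin, Vector3 direction, float maxDistance, out RaycastHit hit)
    {
        hit = default;

        // A zero-length direction cannot define a ray.
        if (direction.sqrMagnitude < 1e-8f)
        {
            return false;
        }

        return Physics.Raycast(origin, direction.normalized, out hit, maxDistance);
    }
}
```

A caller would typically invoke this once per frame with the combined gaze and react only when it returns true.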

When the ExtendedEyeGazeDataProvider script executes, it sets the gaze data frame rate to the highest option, which is currently 90fps.
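If you write your own script instead, similar behavior could be reproduced with the SDK members listed in the API reference in this article. The following is a minimal sketch, not the script's actual implementation: FrameRateHelper and TrySetHighestFrameRate are hypothetical names, the tracker is assumed to have been opened with OpenAsync already, and "highest" is determined by comparing FramesPerSecond rather than by relying on the order of SupportedTargetFrameRates.

```csharp
using Microsoft.MixedReality.EyeTracking;

// Hypothetical helper; only the EyeGazeTracker members it calls come from the SDK.
public static class FrameRateHelper
{
    // Applies the highest supported frame rate to an already opened tracker.
    // Returns false if the tracker reports no supported frame rates.
    public static bool TrySetHighestFrameRate(EyeGazeTracker tracker)
    {
        var supported = tracker.SupportedTargetFrameRates;
        if (supported == null)
        {
            return false;
        }

        EyeGazeTrackerFrameRate highest = null;

        // Compare by value instead of assuming the list is sorted.
        foreach (EyeGazeTrackerFrameRate candidate in supported)
        {
            if (highest == null || candidate.FramesPerSecond > highest.FramesPerSecond)
            {
                highest = candidate;
            }
        }

        if (highest == null)
        {
            return false;
        }

        tracker.SetTargetFrameRate(highest);
        return true;
    }
}
```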

API reference of the Extended Eye Tracking SDK

Besides using the ExtendedEyeGazeDataProvider script, you can also create your own script to consume the SDK APIs directly.
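As a rough illustration of what such a script might look like, the sketch below discovers a tracker, opens it, and reads the combined gaze every frame. It uses only SDK members from the API listing in this section. DirectEyeGazeReader and its properties are hypothetical names, and the code assumes a single tracker, as on HoloLens 2. The gaze vectors it stores are System.Numerics vectors in tracker space, so they still need to be converted before use in a Unity scene.

```csharp
using System;
using Microsoft.MixedReality.EyeTracking;
using UnityEngine;

// Hypothetical component name; attach it to any GameObject in the scene.
public class DirectEyeGazeReader : MonoBehaviour
{
    private EyeGazeTrackerWatcher watcher;
    private EyeGazeTracker tracker; // stays null until a tracker has been opened

    // Latest combined gaze in tracker space (not Unity world space).
    public bool HasGaze { get; private set; }
    public System.Numerics.Vector3 GazeOrigin { get; private set; }
    public System.Numerics.Vector3 GazeDirection { get; private set; }

    private async void Start()
    {
        watcher = new EyeGazeTrackerWatcher();
        watcher.EyeGazeTrackerAdded += OnTrackerAdded;
        watcher.EyeGazeTrackerRemoved += OnTrackerRemoved;

        try
        {
            // Completes once the initial tracker enumeration is done.
            await watcher.StartAsync();
        }
        catch (Exception ex)
        {
            Debug.LogError("Could not start the eye gaze tracker watcher: " + ex.Message);
        }
    }

    private async void OnTrackerAdded(object sender, EyeGazeTracker added)
    {
        try
        {
            // Request restricted mode only when the driver supports it.
            await added.OpenAsync(added.IsRestrictedModeSupported);
            tracker = added;
        }
        catch (Exception ex)
        {
            Debug.LogError("Could not open the eye gaze tracker: " + ex.Message);
        }
    }

    private void OnTrackerRemoved(object sender, EyeGazeTracker removed)
    {
        // Assumption: only one tracker is present, so any removal ends tracking.
        tracker = null;
        HasGaze = false;
    }

    private void Update()
    {
        HasGaze = false;

        EyeGazeTracker current = tracker;
        if (current == null)
        {
            return;
        }

        // Returns null when no reading is available for this timestamp.
        EyeGazeTrackerReading reading = current.TryGetReadingAtTimestamp(DateTime.Now);
        if (reading == null)
        {
            return;
        }

        if (reading.TryGetCombinedEyeGazeInTrackerSpace(out var origin, out var direction))
        {
            GazeOrigin = origin;
            GazeDirection = direction;
            HasGaze = true;
        }
    }

    private void OnDestroy()
    {
        if (watcher != null)
        {
            watcher.EyeGazeTrackerAdded -= OnTrackerAdded;
            watcher.EyeGazeTrackerRemoved -= OnTrackerRemoved;
            watcher.Stop();
        }

        tracker?.Close();
    }
}
```

Other components can poll HasGaze and the two vectors; keeping the SDK calls in one component makes it easier to swap in ExtendedEyeGazeDataProvider later.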

```csharp
namespace Microsoft.MixedReality.EyeTracking
{
    /// <summary>
    /// Allow discovery of Eye Gaze Trackers connected to the system
    /// This is the only class from the Extended Eye Tracking SDK that the application will instantiate,
    /// other classes' instances will be returned by method calls or properties.
    /// </summary>
    public class EyeGazeTrackerWatcher
    {
        /// <summary>
        /// Constructs an instance of the watcher
        /// </summary>
        public EyeGazeTrackerWatcher();

        /// <summary>
        /// Starts trackers enumeration.
        /// </summary>
        /// <returns>Task representing async action; completes when the initial enumeration is completed</returns>
        public System.Threading.Tasks.Task StartAsync();

        /// <summary>
        /// Stop listening to trackers additions and removal
        /// </summary>
        public void Stop();

        /// <summary>
        /// Raised when an Eye Gaze tracker is connected
        /// </summary>
        public event System.EventHandler<EyeGazeTracker> EyeGazeTrackerAdded;

        /// <summary>
        /// Raised when an Eye Gaze tracker is disconnected
        /// </summary>
        public event System.EventHandler<EyeGazeTracker> EyeGazeTrackerRemoved;
    }

    /// <summary>
    /// Represents an Eye Tracker device
    /// </summary>
    public class EyeGazeTracker
    {
        /// <summary>
        /// True if Restricted mode is supported, which means the driver supports providing individual
        /// eye gaze vector and frame rate
        /// </summary>
        public bool IsRestrictedModeSupported;

        /// <summary>
        /// True if Vergence Distance is supported by tracker
        /// </summary>
        public bool IsVergenceDistanceSupported;

        /// <summary>
        /// True if Eye Openness is supported by the driver
        /// </summary>
        public bool IsEyeOpennessSupported;

        /// <summary>
        /// True if individual gazes are supported
        /// </summary>
        public bool AreLeftAndRightGazesSupported;

        /// <summary>
        /// Get the supported target frame rates of the tracker
        /// </summary>
        public System.Collections.Generic.IReadOnlyList<EyeGazeTrackerFrameRate> SupportedTargetFrameRates;

        /// <summary>
        /// NodeId of the tracker, used to retrieve a SpatialLocator or SpatialGraphNode to locate the tracker in the scene
        /// for the Perception API, use SpatialGraphInteropPreview.CreateLocatorForNode
        /// for the Mixed Reality OpenXR API, use SpatialGraphNode.FromDynamicNodeId
        /// </summary>
        public Guid TrackerSpaceLocatorNodeId;

        /// <summary>
        /// Opens the tracker
        /// </summary>
        /// <param name="restrictedMode">True if restricted mode active</param>
        /// <returns>Task representing async action; completes when the tracker has been opened</returns>
        public System.Threading.Tasks.Task OpenAsync(bool restrictedMode);

        /// <summary>
        /// Closes the tracker
        /// </summary>
        public void Close();

        /// <summary>
        /// Changes the target frame rate of the tracker
        /// </summary>
        /// <param name="newFrameRate">Target frame rate</param>
        public void SetTargetFrameRate(EyeGazeTrackerFrameRate newFrameRate);

        /// <summary>
        /// Try to get tracker state at a given timestamp
        /// </summary>
        /// <param name="timestamp">timestamp</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAtTimestamp(DateTime timestamp);

        /// <summary>
        /// Try to get tracker state at a system relative time
        /// </summary>
        /// <param name="time">time</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAtSystemRelativeTime(TimeSpan time);

        /// <summary>
        /// Try to get the first tracker state after a given timestamp
        /// </summary>
        /// <param name="timestamp">timestamp</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAfterTimestamp(DateTime timestamp);

        /// <summary>
        /// Try to get the first tracker state after a system relative time
        /// </summary>
        /// <param name="time">time</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAfterSystemRelativeTime(TimeSpan time);
    }

    /// <summary>
    /// Represents a frame rate supported by an Eye Tracker
    /// </summary>
    public class EyeGazeTrackerFrameRate
    {
        /// <summary>
        /// Frames per second of the frame rate
        /// </summary>
        public UInt32 FramesPerSecond;
    }

    /// <summary>
    /// Snapshot of Gaze Tracker state
    /// </summary>
    public class EyeGazeTrackerReading
    {
        /// <summary>
        /// Timestamp of state
        /// </summary>
        public DateTime Timestamp;

        /// <summary>
        /// Timestamp of state as system relative time
        /// Its SystemRelativeTime.Ticks could provide the QPC time to locate tracker pose
        /// </summary>
        public TimeSpan SystemRelativeTime;

        /// <summary>
        /// Indicates if user calibration is valid
        /// </summary>
        public bool IsCalibrationValid;

        /// <summary>
        /// Tries to get a vector representing the combined gaze related to the tracker's node
        /// </summary>
        /// <param name="origin">Origin of the gaze vector</param>
        /// <param name="direction">Direction of the gaze vector</param>
        /// <returns>True if the gaze vector is available, false otherwise</returns>
        public bool TryGetCombinedEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

        /// <summary>
        /// Tries to get a vector representing the left eye gaze related to the tracker's node
        /// </summary>
        /// <param name="origin">Origin of the gaze vector</param>
        /// <param name="direction">Direction of the gaze vector</param>
        /// <returns>True if the gaze vector is available, false otherwise</returns>
        public bool TryGetLeftEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

        /// <summary>
        /// Tries to get a vector representing the right eye gaze related to the tracker's node position
        /// </summary>
        /// <param name="origin">Origin of the gaze vector</param>
        /// <param name="direction">Direction of the gaze vector</param>
        /// <returns>True if the gaze vector is available, false otherwise</returns>
        public bool TryGetRightEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

        /// <summary>
        /// Tries to read vergence distance
        /// </summary>
        /// <param name="value">Vergence distance if available</param>
        /// <returns>True if value is valid</returns>
        public bool TryGetVergenceDistance(out float value);

        /// <summary>
        /// Tries to get left Eye openness information
        /// </summary>
        /// <param name="value">Eye Openness if valid</param>
        /// <returns>True if value is valid</returns>
        public bool TryGetLeftEyeOpenness(out float value);

        /// <summary>
        /// Tries to get right Eye openness information
        /// </summary>
        /// <param name="value">Eye openness if valid</param>
        /// <returns>True if value is valid</returns>
        public bool TryGetRightEyeOpenness(out float value);
    }
}
```
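Because the capability flags and the Try methods report what a given device actually supports, one possible pattern is to check them before relying on optional values such as vergence distance or eye openness. The sketch below is an illustration under that assumption: GazeDiagnostics and Describe are hypothetical names, and the code uses only members from the listing above.

```csharp
using System.Text;
using Microsoft.MixedReality.EyeTracking;

// Hypothetical helper that summarizes what a tracker and one of its readings provide.
public static class GazeDiagnostics
{
    public static string Describe(EyeGazeTracker tracker, EyeGazeTrackerReading reading)
    {
        var text = new StringBuilder();

        // Device-level capabilities.
        text.AppendLine($"Individual gazes supported: {tracker.AreLeftAndRightGazesSupported}");
        text.AppendLine($"Vergence distance supported: {tracker.IsVergenceDistanceSupported}");
        text.AppendLine($"Eye openness supported: {tracker.IsEyeOpennessSupported}");

        // The TryGetReading* methods return null when no state is available.
        if (reading == null)
        {
            text.AppendLine("No reading available.");
            return text.ToString();
        }

        text.AppendLine($"Calibration valid: {reading.IsCalibrationValid}");

        // Each Try method can still return false even when the feature is supported.
        if (tracker.AreLeftAndRightGazesSupported &&
            reading.TryGetLeftEyeGazeInTrackerSpace(out var leftOrigin, out var leftDirection))
        {
            text.AppendLine($"Left gaze: origin {leftOrigin}, direction {leftDirection}");
        }

        if (tracker.IsVergenceDistanceSupported &&
            reading.TryGetVergenceDistance(out float vergenceDistance))
        {
            text.AppendLine($"Vergence distance: {vergenceDistance}");
        }

        if (tracker.IsEyeOpennessSupported &&
            reading.TryGetLeftEyeOpenness(out float leftOpenness))
        {
            text.AppendLine($"Left eye openness: {leftOpenness}");
        }

        return text.ToString();
    }
}
```

On HoloLens 2, which does not currently support eye openness or vergence, the last two branches would be expected to be skipped.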