Prototype tool-calling agents in AI Playground

Important

This feature is in Public Preview.

This article shows how to prototype a tool-calling AI agent with the AI Playground.

Use the AI Playground to quickly create a tool-calling agent and chat with it live to see how it behaves. Then, export the agent for deployment or further development in Python code.

To author agents using a code-first approach, see Author AI agents in code.

Requirements

Your workspace must have the following features enabled to prototype agents using AI Playground:

- Either pay-per-token foundation models or external models. See Model serving regional availability.

Prototype tool-calling agents in AI Playground

To prototype a tool-calling agent:

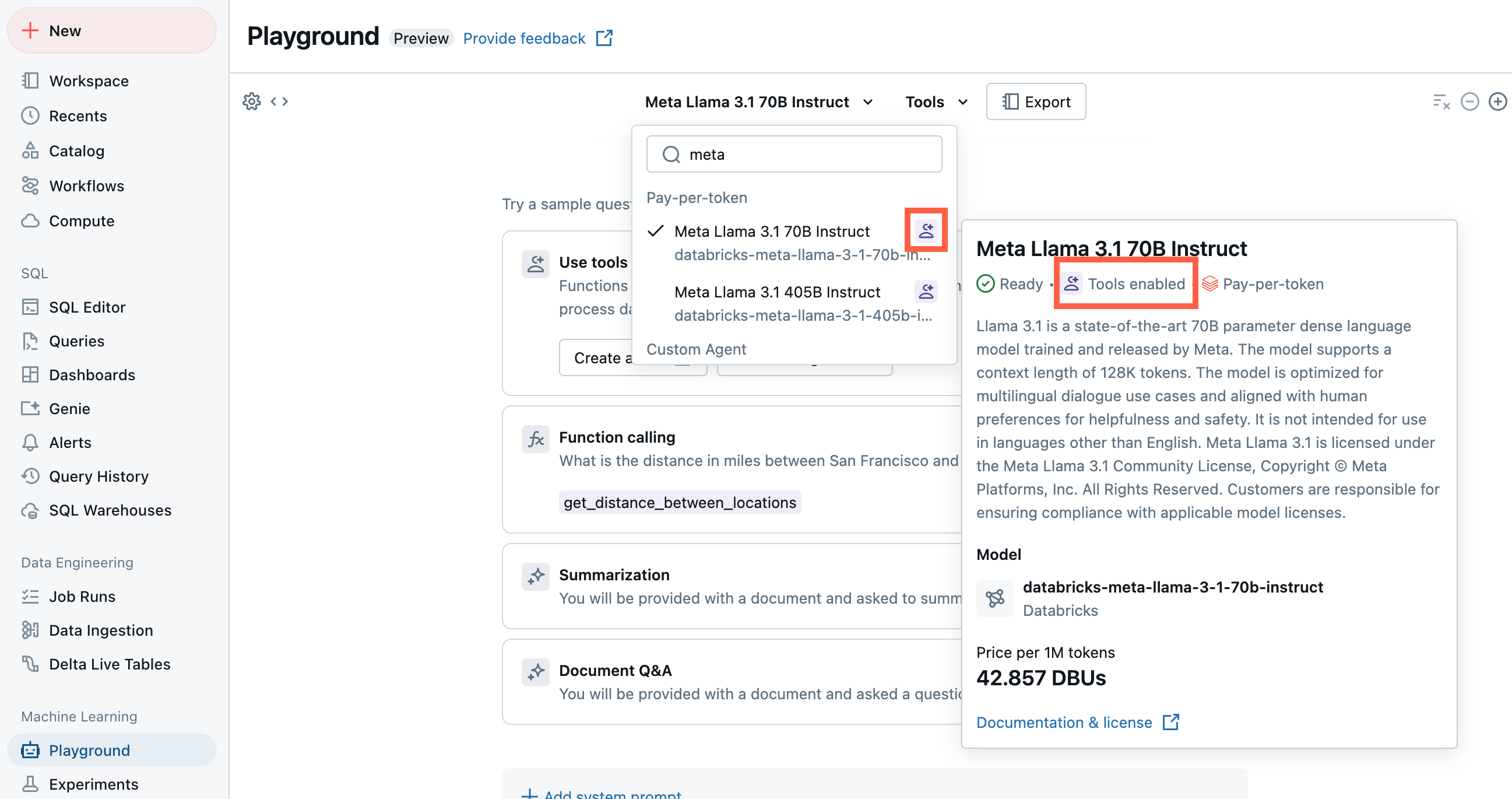

From Playground, select a model with the Tools enabled label.

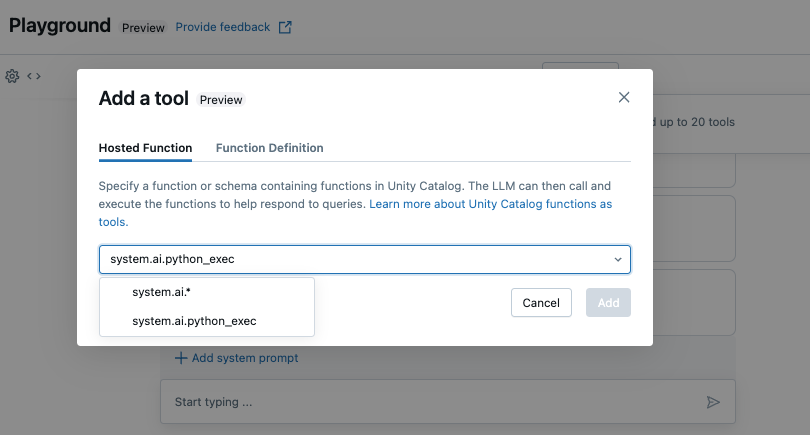

Select Tools and select a tool to give to the agent. For this guide, select the built-in Unity Catalog function, system.ai.python_exec. This function gives your agent the ability to run arbitrary Python code. To learn how to create agent tools, see AI agent tools.

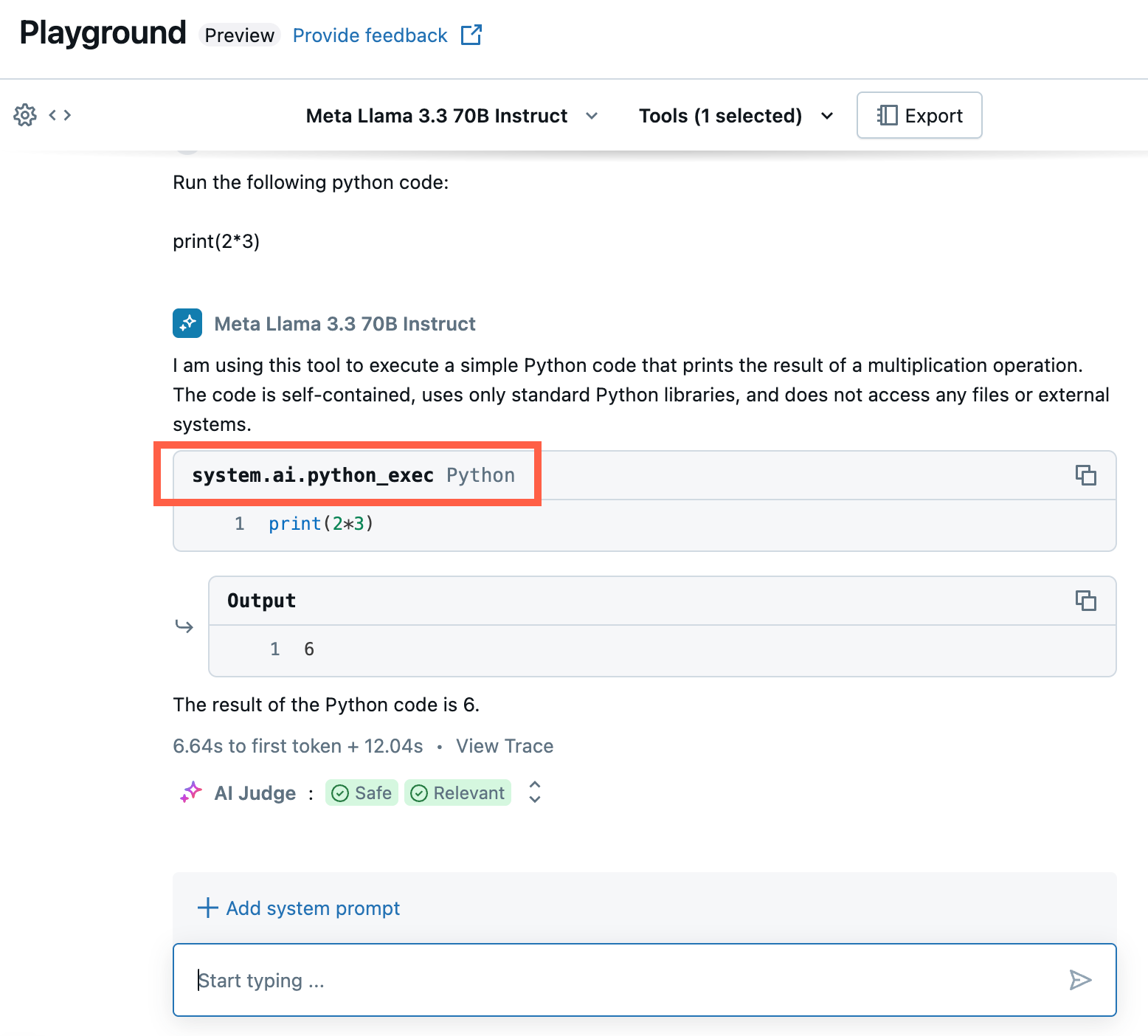

Chat with the agent to test the current combination of LLM, tools, and system prompt, and try variations.
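To make the chat behavior more concrete, the following is a minimal sketch of the loop a tool-calling agent typically runs: the model either answers or requests a tool, the runtime executes the tool, and the result is fed back to the model. It is illustrative only and does not show how AI Playground is implemented. The names `fake_model`, `python_exec`, `TOOLS`, and `run_agent` are hypothetical. The scripted `fake_model` stands in for a real LLM, and the local `python_exec` only mimics the idea behind system.ai.python_exec rather than calling it.

```python
import contextlib
import io


def python_exec(code: str) -> str:
    """Hypothetical local stand-in for a code-execution tool: run code, return stdout."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # Unsafe outside a sandbox; for illustration only.
    return buffer.getvalue().strip()


# Registry mapping tool names to callables (assumed structure, not an export format).
TOOLS = {"python_exec": python_exec}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: request a tool once, then answer with its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The result is {last['content']}."}
    return {
        "role": "assistant",
        "tool_call": {"name": "python_exec", "arguments": {"code": "print(2 ** 10)"}},
    }


def run_agent(user_message: str, max_steps: int = 5) -> str:
    """Loop until the model returns a final answer instead of a tool request."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]
        # Execute the requested tool and feed its output back to the model.
        result = TOOLS[call["name"]](**call["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("Agent did not finish within max_steps")


answer = run_agent("What is 2 to the power of 10?")
print(answer)
```

In a real agent, the choice of tool and its arguments comes from the LLM, which is why changing the model, the tools, or the system prompt can change the behavior you observe in the chat.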

Export and deploy AI Playground agents

After prototyping the AI agent in AI Playground, export it to Python notebooks to deploy it to a model serving endpoint.

Click Export to generate Python notebooks that define and deploy the AI agent.

After you export the agent code, three files are saved to your workspace. These files follow MLflow’s Models from Code methodology, which defines agents directly in code rather than relying on serialized artifacts. To learn more, see MLflow’s Model from Code Guide. The three files are:

- agent notebook: Contains Python code defining your agent using LangChain.
- driver notebook: Contains Python code to log, trace, register, and deploy the AI agent using Mosaic AI Agent Framework.
- config.yml: Contains configuration information about your agent, including tool definitions.

Open the agent notebook to see the LangChain code defining your agent.

Run the driver notebook to log and deploy your agent to a Model Serving endpoint.

Note

The exported code might behave differently from your AI Playground session. Databricks recommends running the exported notebooks to iterate and debug further, evaluate agent quality, and then deploy the agent to share with others.

Develop agents in code

Use the exported notebooks to test and iterate programmatically. For example, use the notebooks to add tools or adjust the agent’s parameters.
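As a rough illustration of what adding a tool involves, the sketch below derives a simple tool description from a plain Python function: a name, a description the model can read, and the parameters it accepts. This is an assumption-laden example and does not reproduce the format of the exported config.yml or the LangChain code in the agent notebook. `word_count` and `describe_tool` are hypothetical names introduced only for this example.

```python
import inspect


def word_count(text: str, min_length: int = 1) -> int:
    """Count the words in text that have at least min_length characters."""
    return sum(1 for word in text.split() if len(word) >= min_length)


def describe_tool(func) -> dict:
    """Build a simple tool description from a function's name, docstring, and signature."""
    params = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Record the annotated type name, for example "str" or "int".
        params[name] = getattr(param.annotation, "__name__", str(param.annotation))
        # Parameters without a default value must be supplied by the caller.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": params,
        "required": required,
    }


spec = describe_tool(word_count)
print(spec)
```

A clear docstring and typed parameters matter here because the description is usually all the model has to decide when to call a tool and which arguments to pass.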

When developing programmatically, agents must meet specific requirements to be compatible with other Databricks agent features. To learn how to author agents using a code-first approach, see Author AI agents in code.