Configure your AI project to use Azure AI model inference

If you already have an AI project in an existing AI Hub, models via "Models as a Service" are by default deployed inside of your project as stand-alone endpoints. Each model deployment has its own set of URI and credentials to access it. Azure OpenAI models are deployed to Azure AI Services resource or to the Azure OpenAI Service resource.

You can configure the AI project to connect with the Azure AI model inference in Azure AI services. Once configured, deployments of Models as a Service models happen to the connected Azure AI Services resource instead to the project itself, giving you a single set of endpoint and credential to access all the models deployed in Azure AI Foundry.

Additionally, deploying models to Azure AI model inference brings the extra benefits of:

- Routing capability

- Custom content filters

- Global capacity deployment

- Entra ID support and role-based access control

In this article, you learn how to configure your project to use models deployed in Azure AI model inference in Azure AI services.

Prerequisites

To complete this tutorial, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if it's your case.

An Azure AI services resource. For more information, see Create an Azure AI Services resource.

An Azure AI project and Azure AI Hub.

Tip

When your AI hub is provisioned, an Azure AI services resource is created with it and the two resources connected. To see which Azure AI services resource is connected to your project, go to the Azure AI Foundry portal > Management center > Connected resources, and find the connections of type AI Services.

Configure the project to use Azure AI model inference

To configure the project to use the Azure AI model inference capability in Azure AI Services, follow these steps:

Go to Azure AI Foundry portal.

At the top navigation bar, over the right corner, select the Preview features icon. A contextual blade shows up at the right of the screen.

Turn the feature Deploy models to Azure AI model inference service on.

Close the panel.

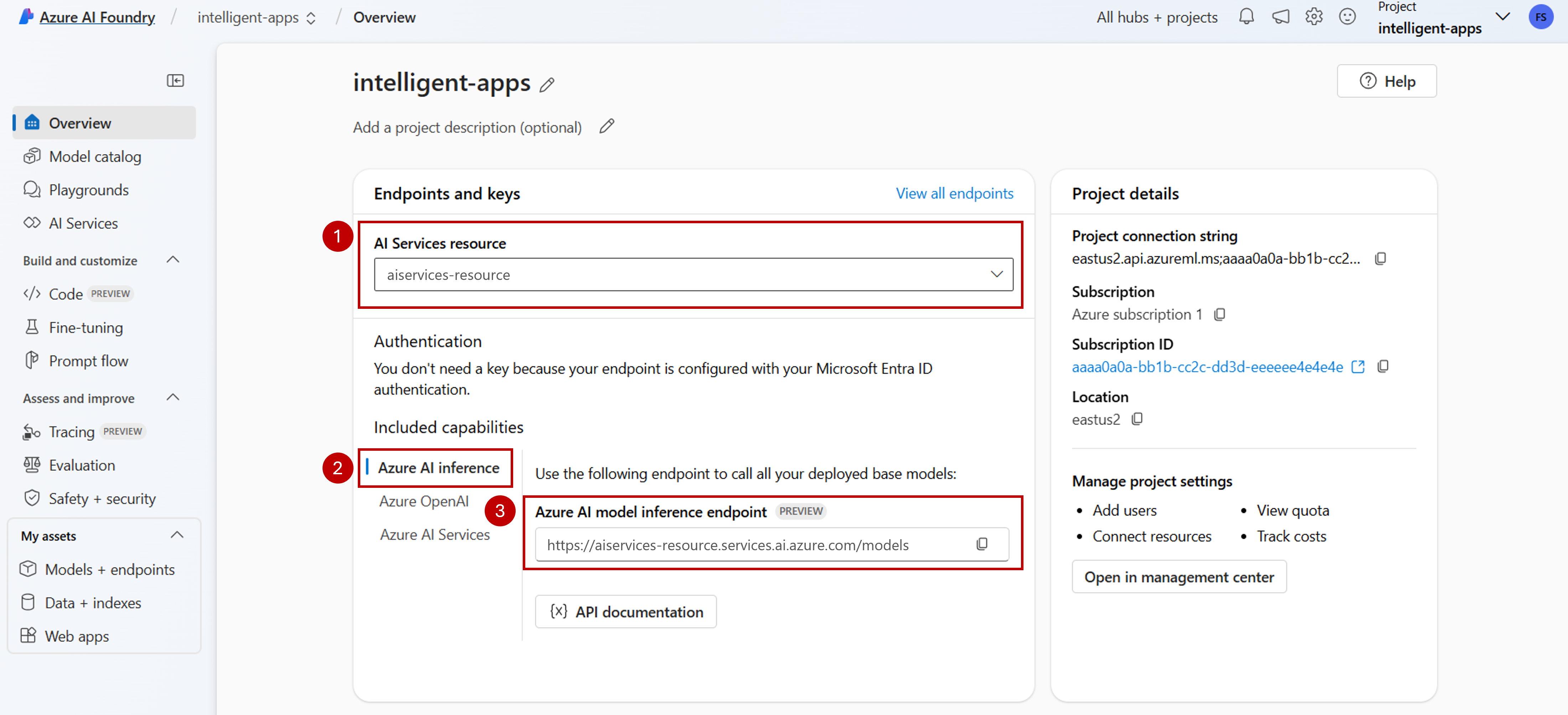

In the landing page of your project, identify the Azure AI Services resource connected to your project. Use the drop-down to change the resource you're connected if you need to.

If no resource is listed in the drop-down, your AI Hub doesn't have an Azure AI Services resource connected to it. Create a new connection by:

In the lower left corner of the screen, select Management center.

In the section Connections select New connection.

Select Azure AI services.

In the browser, look for an existing Azure AI Services resource in your subscription.

Select Add connection.

The new connection is added to your Hub.

Return to the project's landing page to continue and now select the new created connection. Refresh the page if it doesn't show up immediately.

Under Included capabilities, ensure you select Azure AI Inference. The Azure AI model inference endpoint URI is displayed along with the credentials to get access to it.

Tip

Each Azure AI services resource has a single Azure AI model inference endpoint which can be used to access any model deployment on it. The same endpoint serves multiple models depending on which ones are configured. Learn about how the endpoint works.

Take note of the endpoint URL and credentials.

Create the model deployment in Azure AI model inference

For each model you want to deploy under Azure AI model inference, follow these steps:

Go to Model catalog section in Azure AI Foundry portal.

Scroll to the model you're interested in and select it.

You can review the details of the model in the model card.

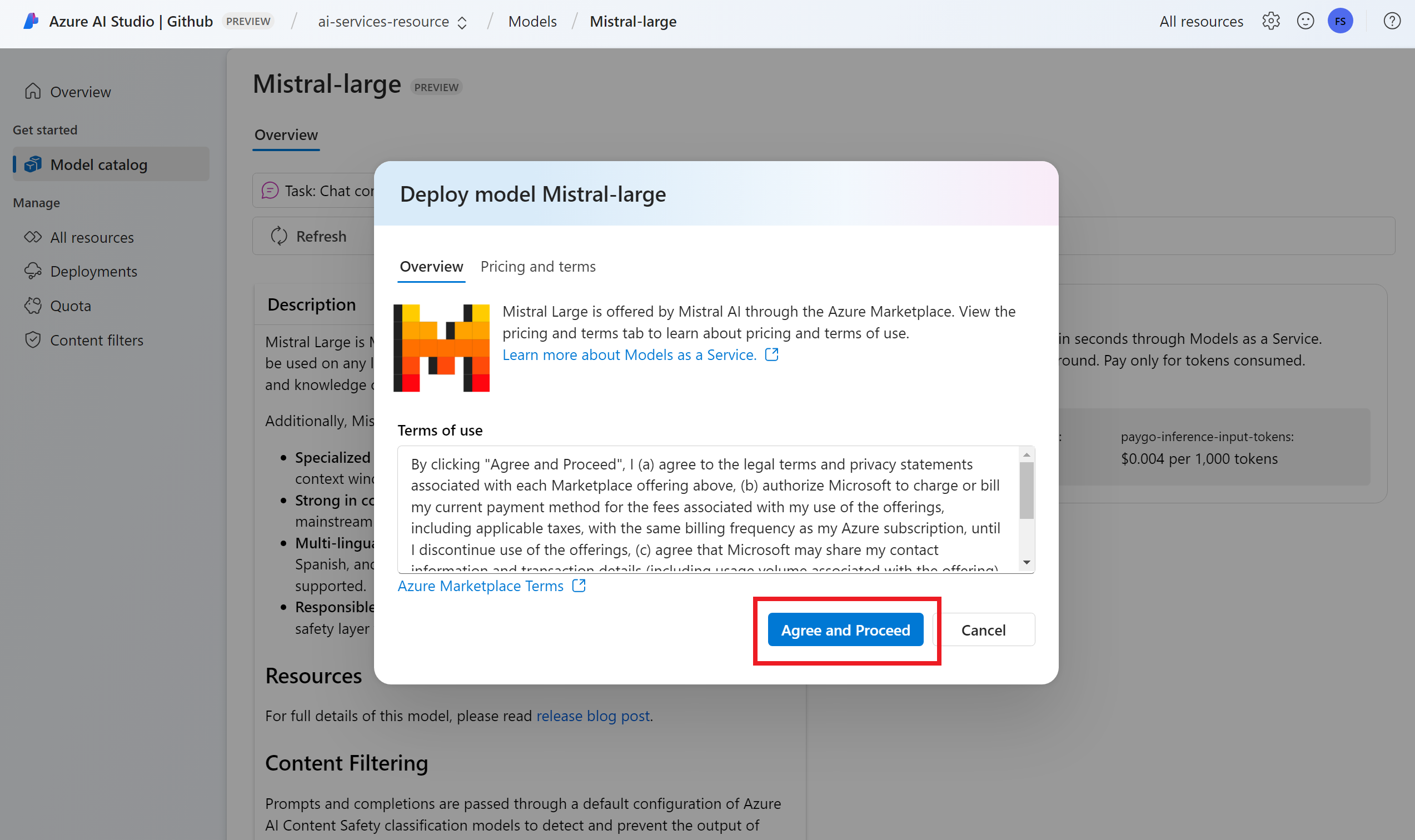

Select Deploy.

For models providers that require more terms of contract, you're asked to accept those terms. Accept the terms on those cases by selecting Subscribe and deploy.

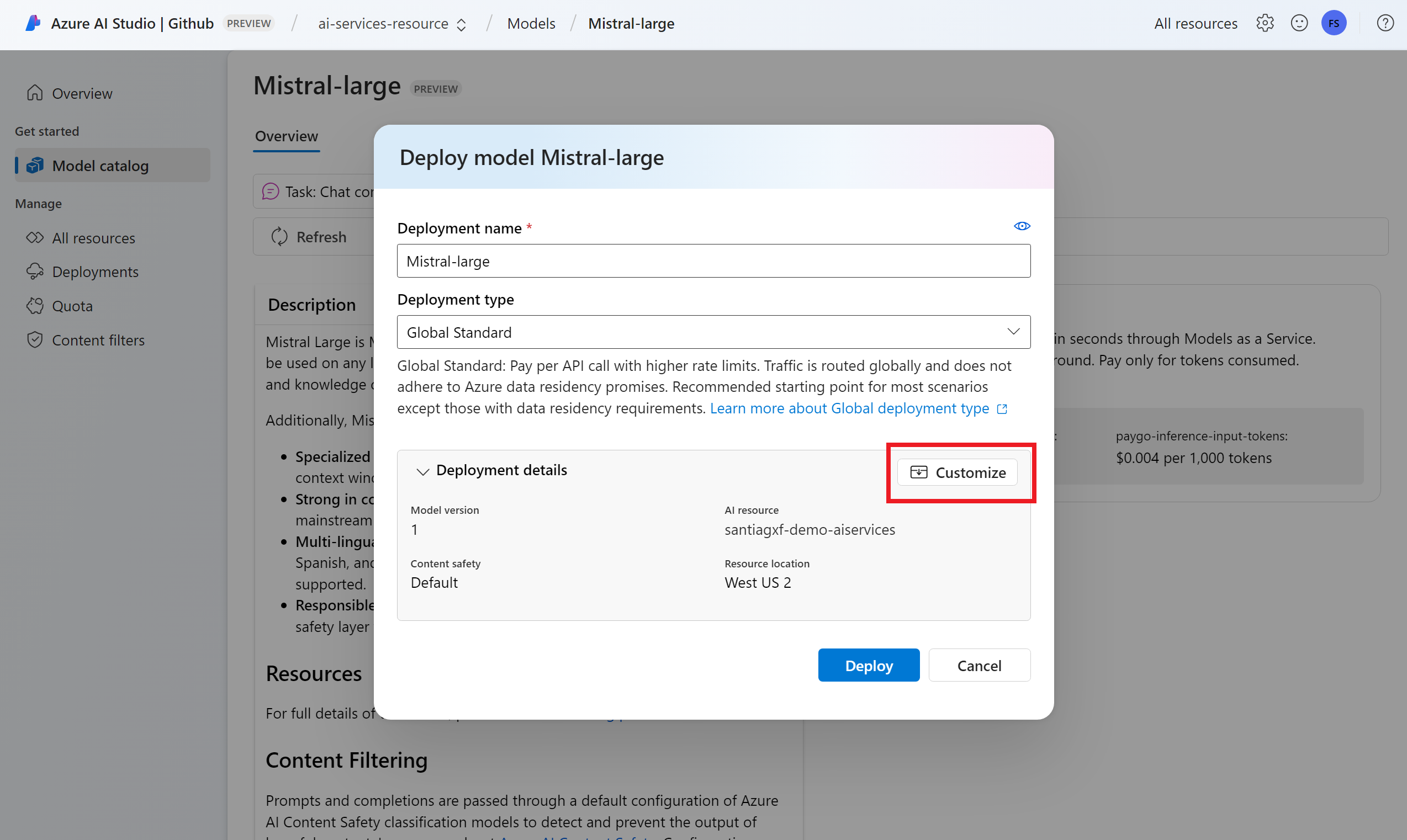

You can configure the deployment settings at this time. By default, the deployment receives the name of the model you're deploying. The deployment name is used in the

modelparameter for request to route to this particular model deployment. It allows you to configure specific names for your models when you attach specific configurations. For instance,o1-preview-safefor a model with a strict content safety content filter.We automatically select an Azure AI Services connection depending on your project because you have turned on the feature Deploy models to Azure AI model inference service. Use the Customize option to change the connection based on your needs. If you're deploying under the Standard deployment type, the models need to be available in the region of the Azure AI Services resource.

Select Deploy.

Once the deployment finishes, you see the endpoint URL and credentials to get access to the model. Notice that now the provided URL and credentials are the same as displayed in the landing page of the project for the Azure AI model inference endpoint.

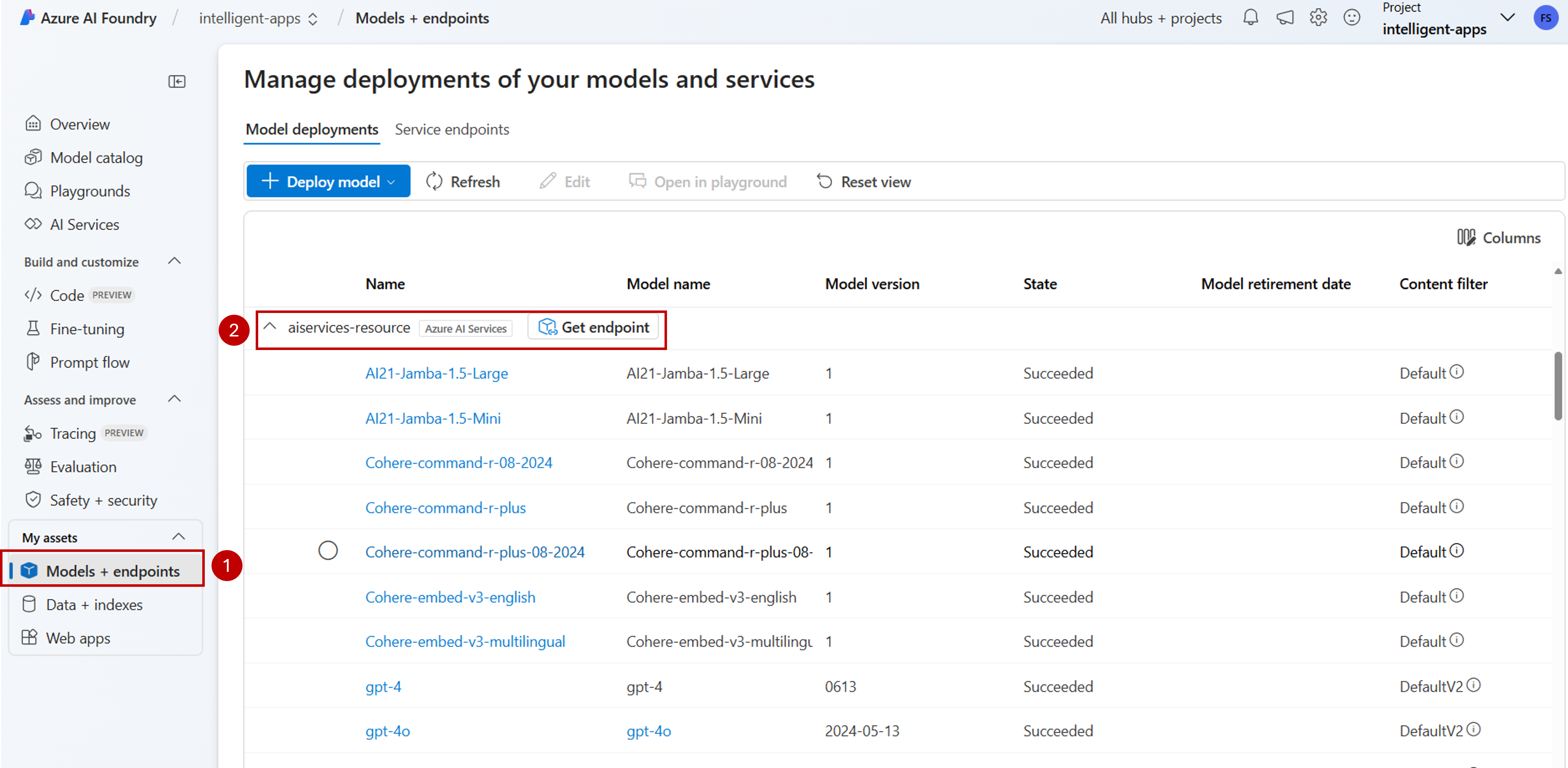

You can view all the models available under the resource by going to Models + endpoints section and locating the group for the connection to your AI Services resource:

Upgrade your code with the new endpoint

Once your Azure AI Services resource is configured, you can start consuming it from your code. You need the endpoint URL and key for it, which can be found in the Overview section:

You can use any of the supported SDKs to get predictions out from the endpoint. The following SDKs are officially supported:

- OpenAI SDK

- Azure OpenAI SDK

- Azure AI Inference SDK

- Azure AI Foundry SDK

See the supported languages and SDKs section for more details and examples. The following example shows how to use the Azure AI model inference SDK with the newly deployed model:

Install the package azure-ai-inference using your package manager, like pip:

pip install azure-ai-inference>=1.0.0b5

Warning

Azure AI Services resource requires the version azure-ai-inference>=1.0.0b5 for Python.

Then, you can use the package to consume the model. The following example shows how to create a client to consume chat completions:

import os

from azure.ai.inference import ChatCompletionsClient

from azure.core.credentials import AzureKeyCredential

model = ChatCompletionsClient(

endpoint=os.environ["AZUREAI_ENDPOINT_URL"],

credential=AzureKeyCredential(os.environ["AZUREAI_ENDPOINT_KEY"]),

)

Explore our samples and read the API reference documentation to get yourself started.

Generate your first chat completion:

from azure.ai.inference.models import SystemMessage, UserMessage

response = client.complete(

messages=[

SystemMessage(content="You are a helpful assistant."),

UserMessage(content="Explain Riemann's conjecture in 1 paragraph"),

],

model="mistral-large"

)

print(response.choices[0].message.content)

Use the parameter model="<deployment-name> to route your request to this deployment. Deployments work as an alias of a given model under certain configurations. See Routing concept page to learn how Azure AI Services route deployments.

Move from Serverless API Endpoints to Azure AI model inference

Although you configured the project to use the Azure AI model inference, existing model deployments continue to exit within the project as Serverless API Endpoints. Those deployments aren't moved for you. Hence, you can progressively upgrade any existing code that reference previous model deployments. To start moving the model deployments, we recommend the following workflow:

Recreate the model deployment in Azure AI model inference. This model deployment is accessible under the Azure AI model inference endpoint.

Upgrade your code to use the new endpoint.

Clean up the project by removing the Serverless API Endpoint.

Upgrade your code with the new endpoint

Once the models are deployed under Azure AI Services, you can upgrade your code to use the Azure AI model inference endpoint. The main difference between how Serverless API endpoints and Azure AI model inference works reside in the endpoint URL and model parameter. While Serverless API Endpoints have set of URI and key per each model deployment, Azure AI model inference has only one for all of them.

The following table summarizes the changes you have to introduce:

| Property | Serverless API Endpoints | Azure AI Model Inference |

|---|---|---|

| Endpoint | https://<endpoint-name>.<region>.inference.ai.azure.com |

https://<ai-resource>.services.ai.azure.com/models |

| Credentials | One per model/endpoint. | One per Azure AI Services resource. You can use Microsoft Entra ID too. |

| Model parameter | None. | Required. Use the name of the model deployment. |

Clean-up existing Serverless API endpoints from your project

After you refactored your code, you might want to delete the existing Serverless API endpoints inside of the project (if any).

For each model deployed as Serverless API Endpoints, follow these steps:

Go to Azure AI Foundry portal.

Select Models + endpoints.

Identify the endpoints of type Serverless and select the one you want to delete.

Select the option Delete.

Warning

This operation can't be reverted. Ensure that the endpoint isn't currently used by any other user or piece of code.

Confirm the operation by selecting Delete.

If you created a Serverless API connection to this endpoint from other projects, such connections aren't removed and continue to point to the inexistent endpoint. Delete any of those connections for avoiding errors.

Limitations

Azure AI model inference in Azure AI Services gives users access to flagship models in the Azure AI model catalog. However, only models supporting pay-as-you-go billing (Models as a Service) are available for deployment.

Models requiring compute quota from your subscription (Managed Compute), including custom models, can only be deployed within a given project as Managed Online Endpoints and continue to be accessible using their own set of endpoint URI and credentials.

Next steps

- Add more models to your endpoint.