Choose drives for Azure Stack HCI and Windows Server clusters

Applies to: Azure Stack HCI, versions 22H2 and 21H2; Windows Server 2022, Windows Server 2019

This article provides guidance on how to choose drives to meet your performance and capacity requirements.

Drive types

Storage Spaces Direct, the underlying storage virtualization technology behind Azure Stack HCI and Windows Server, currently works with four types of drives:

| Drive type | Description |
|---|---|
| PMem | PMem refers to persistent memory, a new type of low-latency, high-performance storage. |
| NVMe | NVMe (Non-Volatile Memory Express) refers to solid-state drives that sit directly on the PCIe bus. Common form factors are 2.5" U.2, PCIe Add-In-Card (AIC), and M.2. NVMe offers higher IOPS and I/O throughput with lower latency than any other type of drive we support today, except PMem. |
| SSD | SSD refers to solid-state drives that connect via conventional SATA or SAS. |
| HDD | HDD refers to rotational, magnetic hard disk drives, which offer vast storage capacity. |

Note

This article covers choosing drive configurations with NVMe, SSD, and HDD. For more information on PMem, see Understand and deploy persistent memory.

Note

The Storage Bus Layer (SBL) cache is not supported in a single-server configuration. Flat configurations with a single storage type (for example, all-NVMe or all-SSD) are the only configurations supported for a single server.

Built-in cache

Storage Spaces Direct features a built-in server-side cache. It is a large, persistent, real-time read and write cache. In deployments with multiple types of drives, it is configured automatically to use all drives of the "fastest" type. The remaining drives are used for capacity.

For more information, see Understanding the storage pool cache.
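The selection rule described above can be sketched in a few lines of Python. This is only an illustration of the rule as stated in this article, not how Storage Spaces Direct is implemented; the function name `split_cache_and_capacity` and the `SPEED_ORDER` list are assumptions made for the sketch, and it does not check whether a given combination is actually supported.

```python
# Drive types covered by this article, fastest first (assumed ordering for the sketch).
SPEED_ORDER = ["NVMe", "SSD", "HDD"]


def split_cache_and_capacity(drive_types):
    """Return (cache_type, capacity_types) for the drive types present in a server.

    Hypothetical helper: mirrors the rule "the fastest type present becomes
    the cache, the remaining types provide capacity".
    """
    unknown = set(drive_types) - set(SPEED_ORDER)
    if unknown:
        raise ValueError(f"drive types not covered by this sketch: {sorted(unknown)}")
    present = [t for t in SPEED_ORDER if t in drive_types]
    if not present:
        raise ValueError("no drive types given")
    if len(present) == 1:
        # Only one type present: nothing is set aside as cache automatically.
        return None, present
    # Fastest type caches; everything slower is capacity.
    return present[0], present[1:]


print(split_cache_and_capacity(["NVMe", "HDD", "HDD"]))
print(split_cache_and_capacity(["SSD", "SSD"]))
```

With drives of a single type the helper returns no cache type, which matches the all-NVMe and all-SSD cases where every drive contributes capacity.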

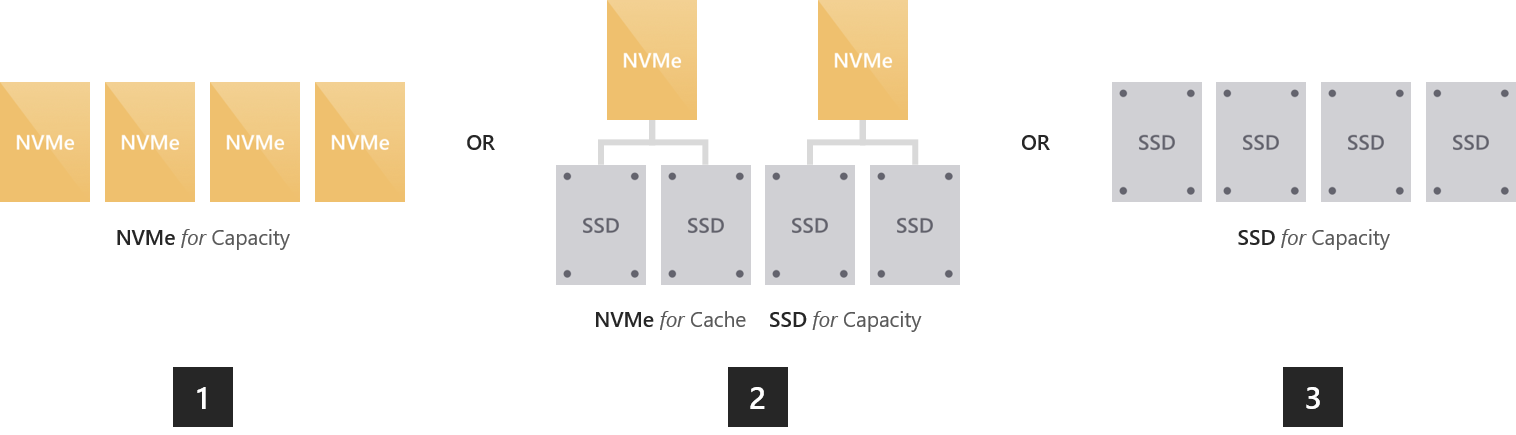

Option 1 – Maximizing performance

To achieve predictable and uniform submillisecond latency across random reads and writes to any data, or to achieve extremely high IOPS (we've done over 13 million!) or I/O throughput (we've done over 500 GB/sec reads), you should go all-flash.

There are multiple ways to do so:

All NVMe. Using all NVMe provides unmatched performance, including the most predictable low latency. If all your drives are the same model, there is no cache. You can also mix higher-endurance and lower-endurance NVMe models and configure the former to cache writes for the latter (requires set-up).

NVMe + SSD. When NVMe is used together with SSDs, the NVMe automatically caches writes to the SSDs. This allows writes to coalesce in the cache and be de-staged only as needed, to reduce wear on the SSDs. This provides NVMe-like write characteristics, while reads are served directly from the SSDs, which are also fast.

All SSD. As with all-NVMe, there is no cache if all your drives are the same model. If you mix higher-endurance and lower-endurance models, you can configure the former to cache writes for the latter (requires set-up).

Note

An advantage of using all-NVMe or all-SSD with no cache is that you get usable storage capacity from every drive. There is no capacity "spent" on caching, which may be appealing at smaller scale.

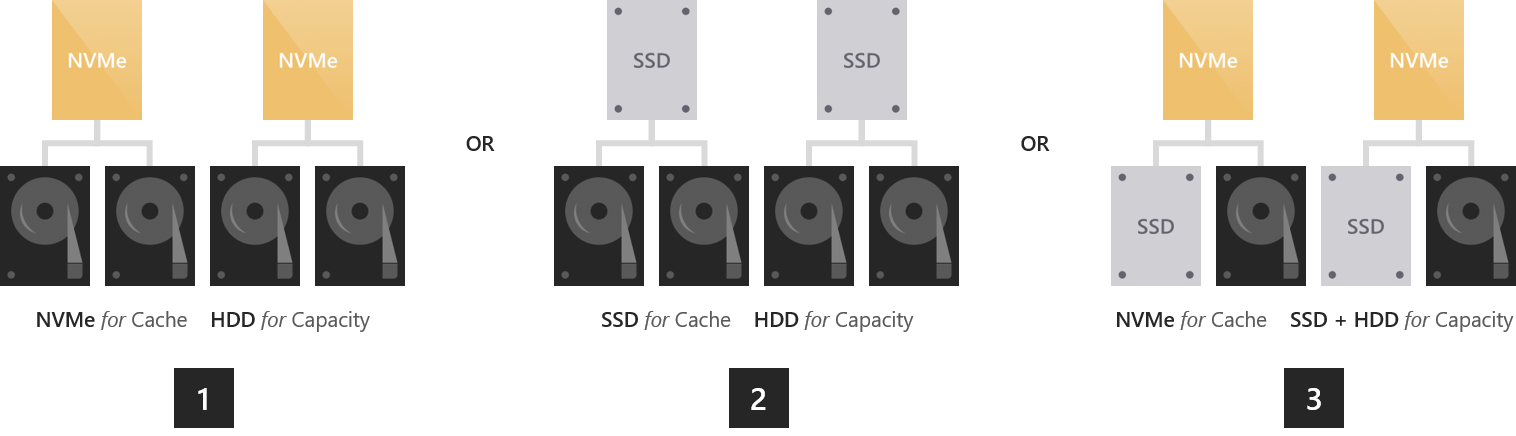

Option 2 – Balancing performance and capacity

For environments with a variety of applications and workloads, some with stringent performance requirements and others requiring considerable storage capacity, you should go "hybrid", with either NVMe or SSDs caching for larger HDDs.

NVMe + HDD. The NVMe drives accelerate reads and writes by caching both. Caching reads allows the HDDs to focus on writes. Caching writes absorbs bursts and allows writes to coalesce and be de-staged only as needed, in an artificially serialized manner that maximizes HDD IOPS and I/O throughput. This provides NVMe-like write characteristics and, for frequently or recently read data, NVMe-like read characteristics too.

SSD + HDD. Similar to the above, the SSDs accelerate reads and writes by caching both. This provides SSD-like write characteristics, and SSD-like read characteristics for frequently or recently read data.

There is one additional, rather exotic option: to use drives of all three types.

NVMe + SSD + HDD. With drives of all three types, the NVMe drives cache for both the SSDs and the HDDs. The appeal is that you can create volumes on the SSDs and volumes on the HDDs, side by side in the same cluster, all accelerated by NVMe. The former are exactly as in an "all-flash" deployment, and the latter are exactly as in the "hybrid" deployments described above. This is conceptually like having two pools, with largely independent capacity management, failure and repair cycles, and so on.

Important

We recommend using the SSD tier to place your most performance-sensitive workloads on all-flash.

Option 3 – Maximizing capacity

For workloads that require vast capacity and write infrequently, such as archival, backup targets, data warehouses, or "cold" storage, you should combine a few SSDs for caching with many larger HDDs for capacity.

- SSD + HDD. The SSDs cache reads and writes to absorb bursts and provide SSD-like write performance, with optimized de-staging later to the HDDs.

Important

Configurations with HDDs only are not supported. Using high-endurance SSDs as a cache for low-endurance SSDs is not advised.

Sizing considerations

Cache

Every server must have at least two cache drives (the minimum required for redundancy). We recommend making the number of capacity drives a multiple of the number of cache drives. For example, if you have 4 cache drives, you will experience more consistent performance with 8 capacity drives (1:2 ratio) than with 7 or 9.
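As a quick illustration of that recommendation, the following minimal Python sketch checks whether a planned number of capacity drives is a whole multiple of the number of cache drives. The function name `capacity_is_multiple_of_cache` is hypothetical, and the check only encodes the two rules stated in this section.

```python
def capacity_is_multiple_of_cache(cache_drives, capacity_drives):
    """Return True when capacity drives divide evenly across cache drives.

    Hypothetical helper for planning; assumes a configuration that uses a cache.
    """
    if cache_drives < 2:
        # The text requires at least two cache drives per server for redundancy.
        raise ValueError("each server needs at least two cache drives")
    return capacity_drives % cache_drives == 0


# The example from the text: 4 cache drives with 7, 8, or 9 capacity drives.
for capacity_drives in (7, 8, 9):
    print(capacity_drives, capacity_is_multiple_of_cache(4, capacity_drives))
```

Only the 8-drive layout passes, because each of the 4 cache drives then serves exactly 2 capacity drives.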

The cache should be sized to accommodate the working set of your applications and workloads, that is, all the data they are actively reading and writing at any given time. There is no cache size requirement beyond that. For deployments with HDDs, a fair starting point is 10 percent of capacity. For example, if each server has 4 x 4 TB HDD = 16 TB of capacity, then 2 x 800 GB SSD = 1.6 TB of cache per server. For all-flash deployments, especially with very high-endurance SSDs, it may be fair to start closer to 5 percent of capacity. For example, if each server has 24 x 1.2 TB SSD = 28.8 TB of capacity, then 2 x 750 GB NVMe = 1.5 TB of cache per server. You can always add or remove cache drives later to adjust.
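The arithmetic behind the two examples can be reproduced with a short Python sketch. The helper `cache_ratio` is a hypothetical name introduced here; it simply totals capacity and cache per server and reports cache as a fraction of capacity, so the 10 percent and 5 percent starting points can be compared against a planned configuration.

```python
def cache_ratio(capacity_count, capacity_tb_each, cache_count, cache_tb_each):
    """Return (capacity_tb, cache_tb, cache / capacity) for one server."""
    capacity_tb = capacity_count * capacity_tb_each
    cache_tb = cache_count * cache_tb_each
    return capacity_tb, cache_tb, cache_tb / capacity_tb


# Hybrid example from the text: 4 x 4 TB HDD with 2 x 800 GB SSD (roughly 10%).
# All-flash example from the text: 24 x 1.2 TB SSD with 2 x 750 GB NVMe (roughly 5%).
for label, args in (
    ("hybrid", (4, 4.0, 2, 0.8)),
    ("all-flash", (24, 1.2, 2, 0.75)),
):
    capacity_tb, cache_tb, ratio = cache_ratio(*args)
    print(f"{label}: {capacity_tb:.1f} TB capacity, {cache_tb:.1f} TB cache, {ratio:.1%}")
```

These percentages are starting points only; the working set of the actual workloads is what the cache ultimately needs to hold.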

General

We recommend limiting the total storage capacity per server to approximately 400 terabytes (TB). The more storage capacity per server, the longer it takes to resync data after downtime or a restart, such as when applying software updates. The current maximum size per storage pool is 4 petabytes (PB), or 4,000 TB (1 PB for Windows Server 2016).
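A planned cluster can be checked against these two figures with a small Python sketch. The function `check_capacity` and its parameter names are hypothetical, and the sketch assumes the pool size is simply the number of servers multiplied by the raw capacity per server.

```python
MAX_TB_PER_SERVER = 400   # recommended per-server limit from the text
MAX_TB_PER_POOL = 4000    # 4 PB; pass 1000 for Windows Server 2016


def check_capacity(servers, raw_tb_per_server, pool_limit_tb=MAX_TB_PER_POOL):
    """Return a list of warnings for a planned cluster (empty if none apply).

    Assumption: every server holds the same raw capacity and the pool
    spans all servers, so pool size = servers * raw_tb_per_server.
    """
    warnings = []
    if raw_tb_per_server > MAX_TB_PER_SERVER:
        warnings.append(
            f"{raw_tb_per_server} TB per server exceeds the recommended {MAX_TB_PER_SERVER} TB"
        )
    pool_tb = servers * raw_tb_per_server
    if pool_tb > pool_limit_tb:
        warnings.append(f"{pool_tb} TB pool exceeds the {pool_limit_tb} TB maximum")
    return warnings


print(check_capacity(4, 100))    # within both limits
print(check_capacity(16, 300))   # 4,800 TB pool is over the pool maximum
```

The per-server figure is a recommendation rather than a hard limit, so the sketch reports warnings instead of rejecting the plan.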

Next steps

For more information, see also: