Plan volumes on Azure Stack HCI and Windows Server clusters

Applies to: Azure Stack HCI, versions 22H2 and 21H2; Windows Server 2022, Windows Server 2019

Important

Azure Stack HCI is now part of Azure Local. Renaming of the product documentation is in progress. However, older versions of Azure Stack HCI, for example 22H2, will continue to reference Azure Stack HCI and won't reflect the name change.

This article provides guidance on planning cluster volumes to meet the performance and capacity needs of your workloads, including choosing their file system, resiliency type, and size.

Note

Storage Spaces Direct doesn't support a file server for general use. If you need to run a file server or other generic services on Storage Spaces Direct, configure them in virtual machines.

Review: What are volumes?

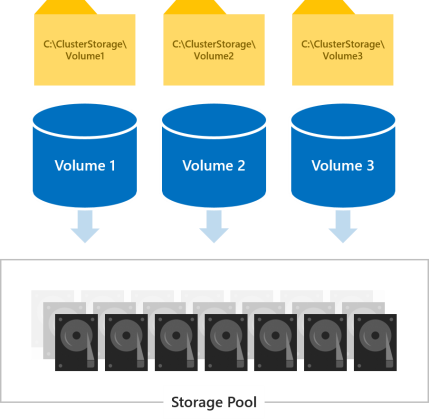

Volumes are where you put the files your workloads need, such as VHD or VHDX files for Hyper-V virtual machines. Volumes combine the drives in the storage pool to introduce the fault tolerance, scalability, and performance benefits of Storage Spaces Direct, the software-defined storage technology behind Azure Stack HCI and Windows Server.

Note

We use the term "volume" to refer jointly to the volume and the virtual disk under it, including functionality provided by other built-in Windows features such as Cluster Shared Volumes (CSV) and ReFS. You don't need to understand these implementation-level distinctions to plan and deploy Storage Spaces Direct successfully.

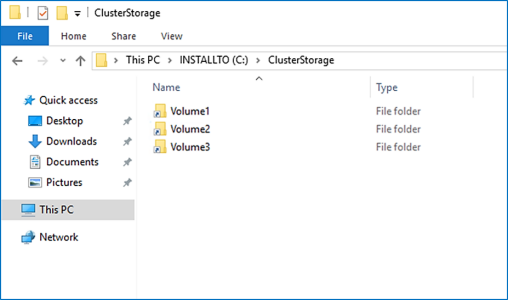

All volumes are accessible by all servers in the cluster at the same time. Once created, they appear at C:\ClusterStorage\ on all servers.

Choosing how many volumes to create

We recommend making the number of volumes a multiple of the number of servers in your cluster. For example, if you have 4 servers, you will get more consistent performance with 4 total volumes than with 3 or 5. This allows the cluster to distribute volume "ownership" (one server handles metadata orchestration for each volume) evenly among the servers.

We recommend limiting the total number of volumes to 64 per cluster.
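To illustrate why a multiple of the server count balances ownership, here is a minimal Python sketch. It assumes, purely for illustration, that ownership is dealt out round-robin; the cluster's actual placement logic may differ. The helper names `suggested_volume_counts` and `owners_per_server` are hypothetical.

```python
def suggested_volume_counts(servers: int, max_volumes: int = 64) -> list[int]:
    """Volume counts that are multiples of the server count, capped at max_volumes."""
    if servers < 1:
        raise ValueError("servers must be at least 1")
    return list(range(servers, max_volumes + 1, servers))


def owners_per_server(volumes: int, servers: int) -> list[int]:
    """Volumes owned by each server, assuming round-robin ownership (illustrative only)."""
    counts = [0] * servers
    for volume_index in range(volumes):
        counts[volume_index % servers] += 1
    return counts


print(suggested_volume_counts(4)[:4])  # [4, 8, 12, 16]
print(owners_per_server(4, 4))         # [1, 1, 1, 1]: even
print(owners_per_server(5, 4))         # [2, 1, 1, 1]: one server does double duty
print(owners_per_server(3, 4))         # [1, 1, 1, 0]: one server owns nothing
```

With 3 or 5 volumes on 4 servers, at least one server ends up with a different ownership load than the others, which is the imbalance the recommendation avoids.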

Choosing the file system

We recommend using the new Resilient File System (ReFS) for Storage Spaces Direct. ReFS is the premier file system purpose-built for virtualization and offers many advantages, including dramatic performance accelerations and built-in protection against data corruption. It supports nearly all key NTFS features, including Data Deduplication in Windows Server version 1709 and later. See the ReFS feature comparison table for details.

If your workload requires a feature that ReFS doesn't support yet, you can use NTFS instead.

Tip

Volumes with different file systems can coexist in the same cluster.

Choosing the resiliency type

Volumes in Storage Spaces Direct provide resiliency to protect against hardware problems, such as drive or server failures, and to enable continuous availability during server maintenance, such as software updates.

Note

The resiliency types you can choose are independent of the types of drives you have.

With two servers

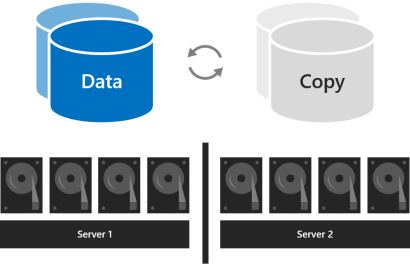

With two servers in the cluster, you can use two-way mirroring or nested resiliency.

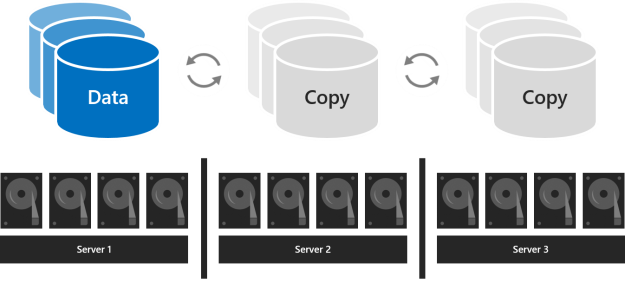

Two-way mirroring keeps two copies of all data, one copy on the drives in each server. Its storage efficiency is 50 percent; to write 1 TB of data, you need at least 2 TB of physical storage capacity in the storage pool. Two-way mirroring can safely tolerate one hardware failure at a time (one server or drive).
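The mirror arithmetic fits in a few lines of Python: every copy consumes a full copy's worth of pool capacity, so efficiency is one over the number of copies. The function names below are illustrative and not part of any Windows API.

```python
from fractions import Fraction


def mirror_efficiency(copies: int) -> Fraction:
    """Storage efficiency of an n-way mirror: one usable byte per `copies` bytes stored."""
    return Fraction(1, copies)


def mirror_footprint_tb(data_tb: float, copies: int) -> float:
    """Physical pool capacity needed to hold data_tb of data as `copies` full copies."""
    return data_tb * copies


print(mirror_efficiency(2))       # 1/2
print(mirror_footprint_tb(1, 2))  # 2 (TB of pool capacity for 1 TB of data)
```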

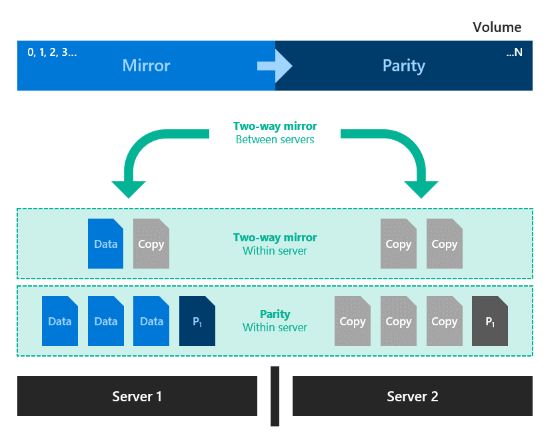

Nested resiliency provides data resiliency between servers with two-way mirroring, then adds resiliency within a server with two-way mirroring or mirror-accelerated parity. Nesting provides data resilience even when one server is restarting or unavailable. Its storage efficiency is 25 percent with nested two-way mirroring and around 35-40 percent for nested mirror-accelerated parity. Nested resiliency can safely tolerate two hardware failures at a time (two drives, or a server and a drive on the remaining server). Because of this added data resilience, we recommend using nested resiliency in production deployments of two-server clusters. For more information, see Nested resiliency.
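The failure combinations listed above can be checked with a small Python model. It is a simplified sketch under a stated assumption: nested two-way mirroring is modeled as four copies, two per server, each on a different drive, whereas real data is striped across many drives. The names `COPIES` and `data_survives` are hypothetical.

```python
from itertools import combinations

# Hypothetical, simplified layout for nested two-way mirroring on two servers:
# two copies per server, each on a different drive.
COPIES = [("A", "drive1"), ("A", "drive2"), ("B", "drive1"), ("B", "drive2")]


def data_survives(failed_servers: set, failed_drives: set) -> bool:
    """True if at least one copy sits on a healthy drive in a healthy server."""
    return any(
        server not in failed_servers and (server, drive) not in failed_drives
        for server, drive in COPIES
    )


efficiency = 1 / len(COPIES)  # 0.25, that is, 25 percent

# Any two drive failures at once.
two_drives_ok = all(data_survives(set(), set(pair)) for pair in combinations(COPIES, 2))

# One whole server down plus one drive on the remaining server.
server_and_drive_ok = all(
    data_survives({down}, {(up, drive)})
    for down, up in (("A", "B"), ("B", "A"))
    for drive in ("drive1", "drive2")
)

print(efficiency, two_drives_ok, server_and_drive_ok)  # 0.25 True True
```

Losing both servers at once, by contrast, leaves no surviving copy in this model.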

With three servers

With three servers, you should use three-way mirroring for better fault tolerance and performance. Three-way mirroring keeps three copies of all data, one copy on the drives in each server. Its storage efficiency is 33.3 percent; to write 1 TB of data, you need at least 3 TB of physical storage capacity in the storage pool. Three-way mirroring can safely tolerate at least two hardware problems (drive or server) at a time, provided no more than one of them is an entire server. If 2 nodes become unavailable, the storage pool loses quorum, since 2/3 of the disks aren't available, and the virtual disks become inaccessible. However, one node can be down and one or more disks on another node can fail, and the virtual disks remain online. For example, if you're rebooting one server when suddenly another drive or server fails, all data remains safe and continuously accessible.
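The quorum reasoning above can be sketched in Python with a deliberately simplified rule: the pool stays online only while a strict majority of its drives is up. The real pool-quorum rules also handle an exact 50 percent split specially, which this sketch ignores, and the count of four drives per server is a hypothetical value chosen for illustration.

```python
SERVERS = 3
DRIVES_PER_SERVER = 4  # hypothetical drive count, chosen for illustration
TOTAL_DRIVES = SERVERS * DRIVES_PER_SERVER


def pool_has_quorum(failed_drives: int, total_drives: int = TOTAL_DRIVES) -> bool:
    """Simplified model: the pool stays online only while a strict majority of drives is up.

    The exact 50 percent split, which real pool quorum treats specially, is not modeled.
    """
    online = total_drives - failed_drives
    return online * 2 > total_drives


scenarios = {
    "one server down": DRIVES_PER_SERVER,
    "two servers down": 2 * DRIVES_PER_SERVER,
    "one server down + one drive on another": DRIVES_PER_SERVER + 1,
}
for name, failed in scenarios.items():
    print(f"{name}: quorum={pool_has_quorum(failed)}")
# one server down: quorum=True
# two servers down: quorum=False
# one server down + one drive on another: quorum=True
```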

With four or more servers

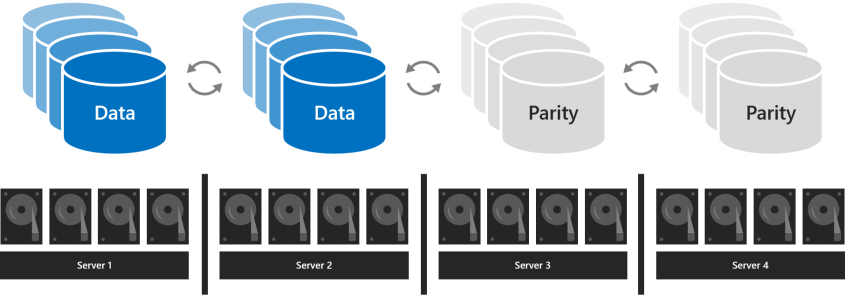

With four or more servers, you can choose for each volume whether to use three-way mirroring, dual parity (often called "erasure coding"), or a mix of the two with mirror-accelerated parity.

Dual parity provides the same fault tolerance as three-way mirroring but with better storage efficiency. With four servers, its storage efficiency is 50.0 percent; to store 2 TB of data, you need 4 TB of physical storage capacity in the storage pool. This increases to 66.7 percent storage efficiency with seven servers, and continues up to 80.0 percent storage efficiency. The tradeoff is that parity encoding is more compute-intensive, which can limit its performance.
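A parity layout stores some symbols of user data plus some parity symbols, so its efficiency is data / (data + parity). The Python sketch below uses layouts of 2 data + 2 parity and 4 data + 2 parity; these are assumptions picked because they reproduce the 50.0 and 66.7 percent figures above, not layouts stated in this article.

```python
from fractions import Fraction


def parity_efficiency(data_symbols: int, parity_symbols: int) -> Fraction:
    """Fraction of stored symbols that carry user data in a data+parity layout."""
    return Fraction(data_symbols, data_symbols + parity_symbols)


# Assumed layouts, chosen only because they match the percentages in the text.
four_servers = parity_efficiency(2, 2)   # 2 data + 2 parity
seven_servers = parity_efficiency(4, 2)  # 4 data + 2 parity

print(f"{float(four_servers):.1%}")   # 50.0%
print(f"{float(seven_servers):.1%}")  # 66.7%
print(2 / four_servers)               # 4 (TB of footprint for 2 TB of data)
```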

Which resiliency type to use depends on the performance and capacity requirements of your environment. The following table summarizes the performance and storage efficiency of each resiliency type.

| Resiliency type | Capacity efficiency | Speed |
|---|---|---|
| Mirror | Three-way mirror: 33%; two-way mirror: 50% | Highest performance |
| Mirror-accelerated parity | Depends on the proportion of mirror and parity | Much slower than mirror, but up to twice as fast as dual parity; best for large sequential writes and reads |
| Dual parity | 4 servers: 50%; 16 servers: up to 80% | Highest I/O latency and CPU usage on writes; best for large sequential writes and reads |

When performance matters most

Workloads that have strict latency requirements or that need lots of mixed random IOPS, such as SQL Server databases or performance-sensitive Hyper-V virtual machines, should run on volumes that use mirroring to maximize performance.

Tip

Mirroring is faster than any other resiliency type. We use mirroring for nearly all our performance examples.

When capacity matters most

Workloads that write infrequently, such as data warehouses or "cold" storage, should run on volumes that use dual parity to maximize storage efficiency. Certain other workloads, such as Scale-Out File Server (SoFS), virtual desktop infrastructure (VDI), or others that don't create lots of fast-drifting random I/O traffic and/or don't require the best performance, may also use dual parity, at your discretion. Compared to mirroring, parity inevitably increases CPU utilization and I/O latency, particularly on writes.

When data is written in bulk

Workloads that write in large, sequential passes, such as archival or backup targets, have another option: one volume can mix mirroring and dual parity. Writes land first in the mirrored portion and are gradually moved into the parity portion later. This accelerates ingestion and reduces resource utilization when large writes arrive, by allowing the compute-intensive parity encoding to happen over a longer time. When sizing the portions, consider that the quantity of writes that happen at once (such as one daily backup) should comfortably fit in the mirror portion. For example, if you ingest 100 GB once daily, consider using mirroring for 150 GB to 200 GB, and dual parity for the rest.

The resulting storage efficiency depends on the proportions you choose.
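Here is a rough Python sketch of both points. The 1.5x to 2x multiplier is a hypothetical rule of thumb read off the 100 GB example above, and the efficiency calculation assumes the mirror portion uses three-way mirroring and the parity portion is 50 percent efficient (four servers); other configurations change the numbers.

```python
def mirror_portion_range_gb(daily_ingest_gb: float) -> tuple[float, float]:
    """1.5x to 2x the daily burst, following the 100 GB -> 150-200 GB example."""
    return 1.5 * daily_ingest_gb, 2.0 * daily_ingest_gb


def map_efficiency(mirror_gb: float, parity_gb: float,
                   mirror_copies: int = 3, parity_efficiency: float = 0.5) -> float:
    """Overall storage efficiency of a volume split into mirror and parity portions."""
    footprint_gb = mirror_gb * mirror_copies + parity_gb / parity_efficiency
    return (mirror_gb + parity_gb) / footprint_gb


print(mirror_portion_range_gb(100))          # (150.0, 200.0)
print(round(map_efficiency(200, 800), 3))    # 0.455
print(round(map_efficiency(200, 9_800), 3))  # 0.495
```

The larger the parity portion relative to the mirror portion, the closer the overall efficiency gets to that of dual parity alone.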

Tip

If you observe an abrupt decrease in write performance partway through data ingestion, it may indicate that the mirror portion isn't large enough or that mirror-accelerated parity isn't well suited for your use case. For example, if write performance decreases from 400 MB/s to 40 MB/s, consider expanding the mirror portion or switching to three-way mirroring.

About deployments with NVMe, SSD, and HDD

In deployments with two types of drives, the faster drives provide caching while the slower drives provide capacity. This happens automatically; for more information, see Understanding the cache in Storage Spaces Direct. In such deployments, all volumes ultimately reside on the same type of drives: the capacity drives.

In deployments with all three types of drives, only the fastest drives (NVMe) provide caching, leaving two types of drives (SSD and HDD) to provide capacity. For each volume, you can choose whether it resides entirely on the SSD tier, entirely on the HDD tier, or spans the two.

Important

We recommend using the SSD tier to place your most performance-sensitive workloads on all-flash.

Choosing the size of volumes

We recommend limiting the size of each volume to 64 TB in Azure Stack HCI.

Tip

If you use a backup solution that relies on the Volume Shadow Copy Service (VSS) and the Volsnap software provider, as is common with file server workloads, limiting the volume size to 10 TB will improve performance and reliability. Backup solutions that use the newer Hyper-V RCT API, ReFS block cloning, or the native SQL backup APIs perform well up to 32 TB and beyond.

Footprint

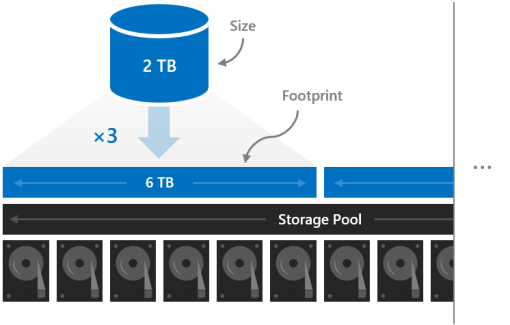

The size of a volume refers to its usable capacity, the amount of data it can store. This is provided by the -Size parameter of the New-Volume cmdlet and then appears in the Size property when you run the Get-Volume cmdlet.

Size is distinct from the volume's footprint, the total physical storage capacity it occupies in the storage pool. The footprint depends on the volume's resiliency type. For example, volumes that use three-way mirroring have a footprint three times their size.

The footprints of your volumes need to fit in the storage pool.
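Footprint is size divided by storage efficiency, and, read the other way, the largest volume you can still create is the free pool capacity multiplied by the efficiency. This Python sketch uses the efficiencies quoted earlier in this article; the function names are hypothetical.

```python
from fractions import Fraction

# Efficiencies quoted earlier in this article (the dual parity value assumes four servers).
EFFICIENCY = {
    "two-way mirror": Fraction(1, 2),
    "three-way mirror": Fraction(1, 3),
    "dual parity, 4 servers": Fraction(1, 2),
}


def footprint_tb(size_tb: float, resiliency: str) -> Fraction:
    """Physical pool capacity consumed by a volume of size_tb."""
    return Fraction(size_tb) / EFFICIENCY[resiliency]


def largest_volume_tb(free_pool_tb: float, resiliency: str) -> Fraction:
    """Largest volume size whose footprint still fits in free_pool_tb."""
    return Fraction(free_pool_tb) * EFFICIENCY[resiliency]


print(footprint_tb(10, "three-way mirror"))       # 30
print(largest_volume_tb(30, "three-way mirror"))  # 10
print(largest_volume_tb(30, "two-way mirror"))    # 15
```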

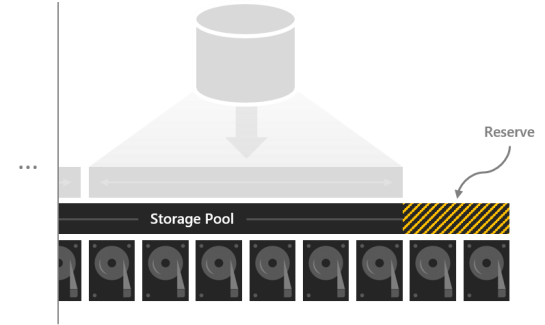

Reserve capacity

Leaving some capacity in the storage pool unallocated gives volumes space to repair "in-place" after drives fail, improving data safety and performance. If there's sufficient capacity, an immediate, in-place, parallel repair can restore volumes to full resiliency even before the failed drives are replaced. This happens automatically.

We recommend reserving the equivalent of one capacity drive per server, up to 4 drives. You may reserve more at your discretion, but this minimum recommendation guarantees that an immediate, in-place, parallel repair can succeed after the failure of any drive.

For example, if you have 2 servers and you're using 1 TB capacity drives, set aside 2 x 1 = 2 TB of the pool as reserve. If you have 3 servers and 1 TB capacity drives, set aside 3 x 1 = 3 TB as reserve. If you have 4 or more servers and 1 TB capacity drives, set aside 4 x 1 = 4 TB as reserve.

Note

In clusters with drives of all three types (NVMe + SSD + HDD), we recommend reserving the equivalent of one SSD plus one HDD per server, up to 4 drives of each.
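The reserve rule reduces to "one drive per server, capped at four". Here is a minimal Python sketch of it, including the three-tier variant; the function names and the 2 TB SSD and 8 TB HDD sizes in the checks are hypothetical.

```python
def reserve_drive_count(servers: int, cap: int = 4) -> int:
    """One capacity drive's worth per server, up to `cap` drives."""
    return min(servers, cap)


def reserve_tb(servers: int, drive_tb: float) -> float:
    """Pool capacity to leave unallocated for in-place repairs."""
    return reserve_drive_count(servers) * drive_tb


def reserve_three_tier_tb(servers: int, ssd_tb: float, hdd_tb: float) -> tuple[float, float]:
    """NVMe + SSD + HDD clusters: one SSD plus one HDD per server, up to 4 of each."""
    count = reserve_drive_count(servers)
    return count * ssd_tb, count * hdd_tb


for servers in (2, 3, 4, 8):
    print(servers, reserve_tb(servers, drive_tb=1))
# 2 2
# 3 3
# 4 4
# 8 4
```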

Example: Capacity planning

Consider a four-server cluster. Each server has some cache drives plus sixteen 2 TB drives for capacity.

4 servers x 16 drives each x 2 TB each = 128 TB

From these 128 TB in the storage pool, we set aside four drives, or 8 TB, so that in-place repairs can happen without any rush to replace drives after they fail. This leaves 120 TB of physical storage capacity in the pool with which we can create volumes.

128 TB – (4 x 2 TB) = 120 TB

Suppose we need our deployment to host some highly active Hyper-V virtual machines, but we also have lots of cold storage: old files and backups we need to retain. Because we have four servers, let's create four volumes.

Let's put the virtual machines on the first two volumes, Volume1 and Volume2. We choose ReFS as the file system (for faster creation and checkpoints) and three-way mirroring for resiliency to maximize performance. Let's put the cold storage on the other two volumes, Volume3 and Volume4. We choose NTFS as the file system (for Data Deduplication) and dual parity for resiliency to maximize capacity.

We aren't required to make all volumes the same size, but for simplicity we will: let's make each of them 12 TB.

Volume1 and Volume2 each occupy 12 TB ÷ 33.3 percent efficiency = 36 TB of physical storage capacity.

Volume3 and Volume4 each occupy 12 TB ÷ 50.0 percent efficiency = 24 TB of physical storage capacity.

36 TB + 36 TB + 24 TB + 24 TB = 120 TB

The four volumes fit exactly into the physical storage capacity available in our pool. Perfect!
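The whole worked example can be replayed in Python to confirm the arithmetic. Exact fractions are used so that 12 TB at one-third efficiency comes out as exactly 36 TB; the variable names are illustrative.

```python
from fractions import Fraction

servers, drives_per_server, drive_tb = 4, 16, 2
pool_tb = servers * drives_per_server * drive_tb  # 128
reserve_tb = min(servers, 4) * drive_tb           # 8: one drive per server, up to 4
available_tb = pool_tb - reserve_tb               # 120

THREE_WAY_MIRROR = Fraction(1, 3)
DUAL_PARITY_4_SERVERS = Fraction(1, 2)

volumes = {
    "Volume1": (12, THREE_WAY_MIRROR),
    "Volume2": (12, THREE_WAY_MIRROR),
    "Volume3": (12, DUAL_PARITY_4_SERVERS),
    "Volume4": (12, DUAL_PARITY_4_SERVERS),
}

# Footprint = size / efficiency.
footprints = {name: size / eff for name, (size, eff) in volumes.items()}
total_footprint = sum(footprints.values())

for name, fp in footprints.items():
    print(name, fp)
print(pool_tb, reserve_tb, available_tb, total_footprint, total_footprint <= available_tb)
# Volume1 36
# Volume2 36
# Volume3 24
# Volume4 24
# 128 8 120 120 True
```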

Tip

You don't need to create all the volumes right away. You can always extend volumes or create new volumes later.

For simplicity, this example uses decimal (base-10) units throughout, meaning 1 TB = 1,000,000,000,000 bytes. However, storage quantities in Windows appear in binary (base-2) units. For example, each 2 TB drive would appear as 1.82 TiB in Windows. Likewise, the 128 TB storage pool would appear as about 116.42 TiB. This is expected.
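The conversion is bytes in decimal terabytes divided by bytes in a binary tebibyte (2^40). This Python sketch reproduces both figures, rounded to two decimal places; how Windows rounds or truncates in a given tool may differ slightly.

```python
TB = 10**12   # decimal terabyte, in bytes
TIB = 2**40   # binary tebibyte, in bytes


def tb_to_tib(terabytes: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return terabytes * TB / TIB


print(round(tb_to_tib(2), 2))    # 1.82
print(round(tb_to_tib(128), 2))  # 116.42
```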

Usage

See Creating volumes in Azure Stack HCI.

Next steps

For more information, see also: