Testing in Production (TiP) - It Really Happens–Examples from Facebook, Amazon, Google, and Microsoft

Testing in Production (TiP) really happens, and yes, that is a good thing! Here are some examples from Facebook, Amazon, Google, and Microsoft.

The Methodologies

This is not a complete list of TiP methodologies, but it explains the ones used in the examples below.

A/B Testing (aka Online Controlled Experimentation): I explain this in detail in my previous blog post, but in a nutshell, some percentage of a website's or service's users are given an alternate experience (a new version of the service) without their knowledge. Data is then collected on how users behave in the old versus the new service, and it can be analyzed to determine whether the proposed change is good or not. While A/B testing is often used to test design and functionality, it can also serve as a test of software quality, because exposure to the full spectrum of real-world user scenarios can uncover defects.
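One common way to give "some percentage of users" the new experience is to hash a stable user ID into buckets, so a user sees the same version on every visit. The sketch below is illustrative only: it assumes string user IDs, and the function and experiment names are hypothetical, not taken from any of the companies discussed here.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_percent: float) -> str:
    """Return "treatment" or "control" for this user in this experiment.

    Hashing (experiment, user_id) gives each user a stable bucket, so they see
    the same experience on every visit; including the experiment name keeps
    bucket assignments independent across experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000  # 0..9999 (0.01% steps)
    return "treatment" if bucket < treatment_percent * 100 else "control"


if __name__ == "__main__":
    # Roughly 5% of 100,000 simulated users should land in the treatment group.
    users = [f"user-{i}" for i in range(100_000)]
    treated = sum(assign_variant(u, "new-checkout", 5.0) == "treatment" for u in users)
    print(f"{treated} users in treatment")
```

Because assignment is a pure function of the IDs, no per-user state needs to be stored to keep the experience consistent.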

Ramped Deployment: Using Exposure Control, a new deployment of a website or service is rolled out gradually, first to a small percentage of users and ultimately to all of them. At each stage the software is monitored, and if any critical issues are uncovered that cannot be remedied, the deployment is rolled back. There are more details on Ramped Deployment in my previous blog post.
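The ramp-and-roll-back loop can be sketched as follows. This is a simplified, hypothetical illustration: `set_exposure` and `is_healthy` are assumed callbacks standing in for the real traffic-routing and monitoring systems, and `is_healthy` collapses "an issue that cannot be remedied" into a single yes/no verdict per stage.

```python
from typing import Callable, Sequence


def ramped_deploy(
    stages: Sequence[float],
    set_exposure: Callable[[float], None],
    is_healthy: Callable[[float], bool],
) -> bool:
    """Expose the new version to each stage's percentage of users in turn.

    Returns True if every stage passed monitoring, or False if the release
    was rolled back (exposure reset to 0%, i.e. everyone on the old version).
    """
    for percent in stages:
        set_exposure(percent)          # route this share of users to the new version
        if not is_healthy(percent):    # monitoring verdict for this stage
            set_exposure(0.0)          # roll back
            return False
    return True


if __name__ == "__main__":
    history: list[float] = []
    # Simulate a release that looks fine at 1% and 10% but fails at 50%.
    ok = ramped_deploy([1.0, 10.0, 50.0, 100.0], history.append, lambda p: p < 50.0)
    print(ok, history)  # False [1.0, 10.0, 50.0, 0.0]
```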

Shadowing: The system under test is deployed and uses real production data in real-time, but the results are not exposed to the end user.
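A minimal sketch of shadowing, assuming the production system and the system under test can both be called as functions (the names here are hypothetical). The candidate receives the same live input, but only the production response reaches the user. Real deployments usually mirror traffic asynchronously so the shadow adds no latency; this version is synchronous for brevity, and comparing outputs is just one option (engineers may instead only watch the shadow system's health).

```python
import logging
from typing import Callable, TypeVar

Req = TypeVar("Req")
Resp = TypeVar("Resp")

log = logging.getLogger("shadow")


def handle_with_shadow(
    request: Req,
    production: Callable[[Req], Resp],
    candidate: Callable[[Req], Resp],
) -> Resp:
    """Serve the request from production and mirror it to the system under test.

    The candidate sees real production input, but its output is only logged
    for engineers; the user always receives the production response.
    """
    response = production(request)
    try:
        shadow_response = candidate(request)
        if shadow_response != response:
            log.warning("shadow mismatch for %r: %r != %r", request, shadow_response, response)
    except Exception:
        # A failing shadow must never affect the user; just record it.
        log.exception("system under test failed on %r", request)
    return response


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(handle_with_shadow(21, lambda x: x * 2, lambda x: x + x))  # 42
```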

Facebook

How Facebook Ships Code reveals several insights into the social network's practices:

On A/B testing:

arguments about whether or not a feature idea is worth doing or not generally get resolved by just spending a week implementing it and then testing it on a sample of users, e.g., 1% of Nevada users.

On rolling out new code, the article shows Ramped Deployment in use. Code is rolled out in several stages, the first three of which expose it to an increasingly large audience:

- internal release

- small external release

- full external release

How Facebook Ships Code goes on to say:

if a release is causing any issues (e.g., throwing errors, etc.) then push is halted. the engineer who committed the offending changeset is paged to fix the problem. and then the release starts over again at level 1.

[Ops] server metrics go beyond the usual error logs, load & memory utilization stats — also include user behavior. E.g., if a new release changes the percentage of users who engage with Facebook features, the ops team will see that in their metrics and may stop a release for that reason so they can investigate.
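The halt rule described above, including a user-behavior metric alongside errors, might look like the sketch below. The thresholds, the `Metrics` fields, and the `measure` callback are hypothetical; the article does not say how Facebook computes these signals, and paging the engineer is left out.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Metrics:
    error_rate: float       # errors per request
    engagement_rate: float  # share of users engaging with a given feature


def should_halt(baseline: Metrics, current: Metrics,
                max_error_increase: float = 0.001,
                max_engagement_shift: float = 0.02) -> bool:
    """Halt if errors rise, or if user behavior moves in either direction."""
    errors_up = current.error_rate - baseline.error_rate > max_error_increase
    behavior_shift = abs(current.engagement_rate - baseline.engagement_rate) > max_engagement_shift
    return errors_up or behavior_shift


def run_push(levels: Sequence[str], measure: Callable[[str], Metrics],
             baseline: Metrics) -> Optional[str]:
    """Walk the release levels in order.

    Returns None if the push completed, otherwise the level where it halted.
    After the offending change is fixed, the push starts again at levels[0].
    """
    for level in levels:
        if should_halt(baseline, measure(level)):
            return level
    return None


if __name__ == "__main__":
    levels = ["internal", "small external", "full external"]
    baseline = Metrics(error_rate=0.001, engagement_rate=0.30)
    observed = {
        "internal": Metrics(0.001, 0.30),
        "small external": Metrics(0.001, 0.25),  # engagement dropped 5 points
        "full external": Metrics(0.001, 0.30),
    }
    print(run_push(levels, observed.__getitem__, baseline))  # small external
```

Using the absolute engagement shift reflects the quote: any change in user behavior, up or down, is reason enough to stop and investigate.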

Google

A key to TiP is that it is most applicable to services (including websites) and only works when the engineering team is empowered to respond to live-site issues. At Google, all engineers have access to the production machines, to “…deploy, configure, monitor, debug, and maintain them” [Google Talk, June 2007 @ 20:55].

Google is famous for A/B Testing. They even produce a popular product called Website Optimizer that enables website owners to run their own experiments.

They also employ more advanced versions of these tools internally to test Google features. According to [Google Talk, June 2007 @20:35], they use an “Experimentation Framework” that can limit the initial launch to:

- Explicit People

- Just Googlers

- Percent of all users
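The three launch tiers could be modeled as a scope checked on each request, as in this hypothetical sketch. The talk does not describe Google's implementation; `LaunchScope`, `in_experiment`, the `is_employee` flag, and the hash-based percentage bucketing are all assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class LaunchScope:
    explicit_people: set[str] = field(default_factory=set)  # named individual users
    all_employees: bool = False                              # the "Just Googlers" tier
    percent_of_users: float = 0.0                            # 0-100, applies to everyone


def in_experiment(scope: LaunchScope, experiment: str,
                  user_id: str, is_employee: bool) -> bool:
    """Return True if this user should get the experimental behavior."""
    if user_id in scope.explicit_people:
        return True
    if scope.all_employees and is_employee:
        return True
    # Stable percentage bucket, so a user's assignment does not flip between requests.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000
    return bucket < scope.percent_of_users * 100


if __name__ == "__main__":
    # Hypothetical rollout of a new caching scheme: first to two named testers...
    scope = LaunchScope(explicit_people={"alice", "bob"})
    print(in_experiment(scope, "new-cache", "alice", is_employee=False))  # True
    # ...then widened to every employee plus 1% of all users.
    scope.all_employees = True
    scope.percent_of_users = 1.0
    print(in_experiment(scope, "new-cache", "carol", is_employee=True))   # True
```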

Such experimentation is not limited to features; an experiment “could be a new caching scheme.”

An example of TiP using the Shadowing technique is given at [Google Talk, June 2007 @9:00]. When Google Talk was first being developed and tested, the presence status indicator presented a testing challenge because the expected scale was billions of packets per day. Without seeing or knowing it, users of Orkut (a Google product) triggered presence status changes in back-end servers, where engineers could assess the health and quality of that system. This approach also used Exposure Control: initially only 1% of Orkut page views triggered presence status changes, and that share was slowly ramped up.

Of course, there will always be resistance to such a data-driven approach. One high-profile manifestation of this was the resignation of Google’s Visual Design Lead, Douglas Bowman, who complained that he “won’t miss a design philosophy that lives or dies strictly by the sword of data” [Goodbye, Google, Mar 2009].

Amazon

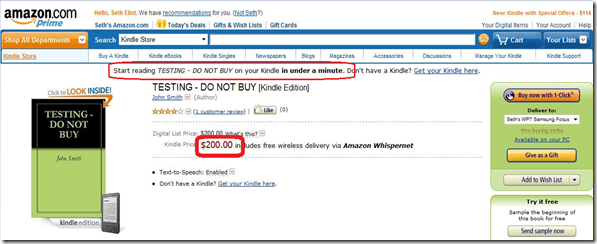

Amazon has a well deserved reputation of being data-driven in its decision making. TiP is a vital part of this, but may not have always been approached as a legitimate methodology instead of an ad hoc approach. An example of the latter can be seen by anyone on the production Amazon.com site who searches for {test ASIN}. where “ASIN” is the Amazon Standard Identification Number assigned to all items for sale on the Amazon site. Such a search will turn up the following Amazon items “for sale”:

This is TiP done poorly: it diminishes the perceived quality of the website and exposes customers to risk. A $99,999 charge (or even a $200 one) for a bogus item would not make for a satisfied customer.

Another TiP “slip” occurred prior to the launch of Amazon Unbox (now Amazon Instant Video). Amazon attempted to use Exposure Control to limit access to the not-yet-launched site; however, an enterprising “hacker” found the information anyway and made it public.

However, Amazon’s TiP successes should outweigh these missteps. Greg Linden describes the A/B experiment he ran to show that making recommendations based on the contents of your shopping cart was a good thing (where “good thing” equals more sales for Amazon). A key takeaway is that before the experiment an SVP thought this was a bad idea, but as Greg says:

I heard the SVP was angry when he discovered I was pushing out a test. But, even for top executives, it was hard to block a test. Measurement is good. The only good argument against testing would be that the negative impact might be so severe that Amazon couldn't afford it, a difficult claim to make. The test rolled out.

The results were clear. Not only did it win, but the feature won by such a wide margin that not having it live was costing Amazon a noticeable chunk of change. With new urgency, shopping cart recommendations launched.

Another success involved moving Amazon’s ordering pipeline (where purchase transactions are handled) to a new platform, along with the rest of the site. Because it was considered a “simple” migration, the developers did not expect much trouble; however, the testers’ wisdom prevailed, and a series of online experiments used TiP to uncover revenue-impacting problems before the launch [Testing with Real Users, slide 56].

Microsoft

If you have ever logged into a Microsoft property such as Hotmail, SkyDrive, Xbox Live, or Zune Marketplace, you have used Windows Live ID (WLID), the user/password authentication portal used by these and other products. Because so many other services depend on it, WLID uses ramped deployment to assure high quality through exposure to production traffic. The new code is deployed to servers but is initially not used. Then the percentage of requests served by the new version is incremented and carefully monitored. If monitoring uncovers any issues, they are either fixed or the release is rolled back, with all users going back to the prior safe version. Ultimately all requests are served by the new system, at which point it is fully deployed.

Cosmos is a massively scalable, parallelized data storage and processing system used within Microsoft (that is, all of Cosmos’s customers are internal Microsoft teams). As part of its test strategy, the Cosmos Test Team has built tools that collect telemetry from and monitor the production live-site system, and it provides quality assessments based on analysis of this telemetry. Some systems are fully automated, such as Automated Failure Analysis (AFA), which files bugs when crashes occur or error thresholds are exceeded. Other systems calculate metrics and reveal trends that are then analyzed by testers who are experts in the system, who file detailed bugs.
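The AFA rule (file a bug on a crash or when an error threshold is exceeded) can be illustrated with a small, hypothetical sketch. The internals of Cosmos's AFA are not described here; the `TelemetryEvent` shape, the per-component error-rate threshold, and the `file_bug` callback are assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class TelemetryEvent:
    component: str
    kind: str  # "ok", "error", or "crash"


def automated_failure_analysis(
    events: Iterable[TelemetryEvent],
    error_threshold: float,
    file_bug: Callable[[str, str], None],
) -> None:
    """File a bug for every crashing component and every component whose
    error rate exceeds error_threshold (a fraction, e.g. 0.05 for 5%)."""
    totals: Counter = Counter()
    errors: Counter = Counter()
    crashed: set = set()
    for event in events:
        totals[event.component] += 1
        if event.kind == "crash":
            crashed.add(event.component)
        elif event.kind == "error":
            errors[event.component] += 1
    for component in sorted(totals):
        if component in crashed:
            file_bug(component, "crash observed in production")
        rate = errors[component] / totals[component]
        if rate > error_threshold:
            file_bug(component, f"error rate {rate:.1%} exceeds {error_threshold:.1%}")


if __name__ == "__main__":
    bugs = []
    events = [TelemetryEvent("scheduler", "crash"),
              TelemetryEvent("store", "error"), TelemetryEvent("store", "ok"),
              TelemetryEvent("store", "error"), TelemetryEvent("store", "ok"),
              TelemetryEvent("ui", "ok")]
    automated_failure_analysis(events, 0.25, lambda c, msg: bugs.append((c, msg)))
    print(bugs)
```

Trend analysis by expert testers, the other half of the strategy, is deliberately not modeled.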

And of course Microsoft does its share of A/B Testing too. MSN, Xbox Live, and Bing are among the many products that use such testing.

IMVU

It may seem odd to include IMVU, the 3-D avatar-based chat service whose slogan “Meet New People in 3-D” might be better applied by leaving the house, but Timothy Fitz has done such an admirable job explaining their Ramped Deployment TiP strategy that this feat of engineering excellence deserves to be shared here:

Back to the deploy process, nine minutes have elapsed and a commit has been greenlit for the website. The programmer runs the imvu_push script. The code is rsync’d out to the hundreds of machines in our cluster. Load average, cpu usage, php errors and dies and more are sampled by the push script, as a basis line. A symlink is switched on a small subset of the machines throwing the code live to its first few customers. A minute later the push script again samples data across the cluster and if there has been a statistically significant regression then the revision is automatically rolled back. If not, then it gets pushed to 100% of the cluster and monitored in the same way for another five minutes. The code is now live and fully pushed. This whole process is simple enough that it’s implemented by a handful of shell scripts.
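The control flow of that push can be re-expressed in a short Python sketch. IMVU implemented it with shell scripts, so this is not their code. The quote says only "statistically significant regression"; using Welch's t-statistic with a threshold of 3.0 is an assumption, as is tracking a single metric. `sample`, `go_live`, and `rollback` are hypothetical callbacks, and `sample` is assumed to include the wait (one minute after the canary, five minutes after the full push).

```python
import math
import statistics
from typing import Callable, Sequence


def significant_regression(baseline: Sequence[float], after: Sequence[float],
                           t_threshold: float = 3.0) -> bool:
    """True if `after` is worse (higher) than `baseline` beyond noise.

    Uses Welch's t-statistic; both samples need at least two points.
    """
    mean_b, mean_a = statistics.fmean(baseline), statistics.fmean(after)
    se = math.sqrt(statistics.variance(baseline) / len(baseline)
                   + statistics.variance(after) / len(after))
    if se == 0.0:
        return mean_a > mean_b
    return (mean_a - mean_b) / se > t_threshold


def push(sample: Callable[[], Sequence[float]],
         go_live: Callable[[str], None],
         rollback: Callable[[], None]) -> bool:
    """Canary on a subset, then the whole cluster; roll back on regression."""
    baseline = sample()              # e.g. load average across the cluster
    for target in ("subset", "all"):
        go_live(target)              # IMVU switched a symlink on the target machines
        if significant_regression(baseline, sample()):
            rollback()
            return False
    return True
```

Higher is treated as worse because the sampled metrics (load average, CPU usage, PHP errors) all degrade upward.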