Don't just listen to your users…watch them with Online Experiments

Online Controlled Experimentation (also known as A/B Testing) is a subject I will revisit often, as I am Test Manager for Microsoft's Experimentation Platform (ExP), which enables properties at Microsoft to conduct such testing. We even have a shiny logo:

Guessing, Listening, and Watching

To produce software that makes our customers giddy we need to understand what those rascally customers want. To that end, we can do the following:

- We can guess. It can even be an educated guess based on past market trends and predictions from highly paid consultants.

- We can ask them and listen to the answers. Web surveys cast a wide net and garner some responses, or we might invite folks in for a focus group and offer gifts in return for their time. There's good data to be obtained, but two things must be kept in mind:

- Self-selection bias: you are not finding out what your typical user thinks. You are finding out what is thought by the kind of user who is available at 2PM on a Tuesday, shows up at building 21 in Redmond, and is willing to spend 2 hours looking at screenshots and answering questions.

- Note I said what the user "thinks", which may be different from what the user ultimately does. We do not care what the user thinks they'll need/use/buy; we care what the user will need/use/buy.

- We can watch the users' actual behavior. One way is via a usability lab: this usually involves a single user placed in a room with the software and observed by a usability expert in another room (the classic "one-way mirror" setup). The usability expert can communicate with the subject and either give them tasks or offer guidance. There is some cool technology that can be brought to bear here. For example, the point on the screen where the subject is looking can be measured using eye tracking. Somewhat surprising things can be learned from eye tracking, such as that readability goes down as the number of graphic elements goes up, and that people scan pages in an "F" pattern:

The way of watching our users' behavior that I want to expound upon here, however, is Online Experimentation.

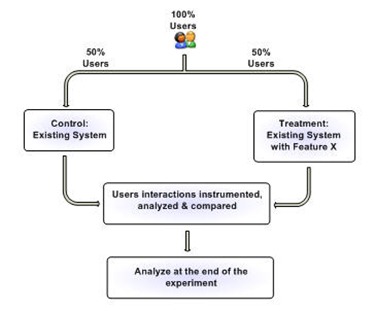

You're in an experiment right now

…well probably…assuming you use one of Microsoft's several web sites or Amazon.com or eBay or Google. Online experimentation is a simple concept. All users request the same web page, but behind the scenes some percentage of users are shown a different page than the others. Users do not know they are in an experiment; all they know is that they asked for a web address (URL) and were served a page. The point is that some users might see the page as it's been for months (the control), while some might see the page with some new feature(s) on it (the treatment). We can then measure what users actually do on these respective pages to find out which one is "better". This diagram offers a simplistic view of it:

I say it's simplistic because there can be more than just two variations of the page, and the users need not be split 50/50. We can have two variants split 90/10, or four variants split 10/10/40/40, etc.
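To make the splitting idea concrete, here is a minimal sketch of one common way to assign users to variants. It is not a description of how ExP does it: the function name `assign_variant`, the experiment name, and the choice of hashing a user ID into 100 buckets are all assumptions made for illustration. The point it shows is that each user lands in the same variant on every request, while the population as a whole is divided according to the chosen percentages.

```python
import hashlib
from typing import Mapping


def assign_variant(user_id: str, experiment: str, splits: Mapping[str, int]) -> str:
    """Deterministically map a user to a variant.

    `splits` maps a variant name to its percentage of traffic,
    e.g. {"control": 90, "treatment": 10}. (Hypothetical helper,
    not part of any real experimentation platform.)
    """
    if sum(splits.values()) != 100:
        raise ValueError("split percentages must sum to 100")

    # Hash the experiment name together with the user ID so the same user
    # can fall into different buckets in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.md5(key).hexdigest(), 16) % 100  # 0..99

    # Walk the cumulative percentage ranges until the bucket fits.
    upper = 0
    for variant, percent in splits.items():
        upper += percent
        if bucket < upper:
            return variant
    raise AssertionError("unreachable: splits sum to 100")


if __name__ == "__main__":
    four_way = {"A": 10, "B": 10, "C": 40, "D": 40}
    for uid in ("alice", "bob", "carol"):
        print(uid, assign_variant(uid, "homepage-test", four_way))
```

Because the assignment depends only on the user ID and the experiment name, no per-user state has to be stored to keep a returning user in the same variant.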

What is better?

The point of experimentation is to determine which implementation is "better", but what do we mean by better? First we must look at what we can measure, and then decide which of those measurements captures "betterness" (or “betterosity” if you prefer).

As for what we are measuring, ExP is a highly flexible, highly customizable system that can measure almost every client-side or server-side event, from clicks to page views to page requests to even dwell times, and then slice and dice these by user, per hour, per day, or per session. On the other hand, a service like Google Website Optimizer is free and widely available but much more limited: it offers a fixed set of observations focused on the click-through rate (clicks per page view).

As for how we define better in ExP experiments: although we collect many different metrics, we designate one as the Overall Evaluation Criterion (OEC). It is this metric that determines whether the treatment is a success over the control. In picking the right OEC, you want a strong business driver. Revenue is often a good OEC, but remember also to tie it to the long-term goals of your website, such as customer lifetime value. For a retail site, you can measure sales per user and see if there is an increase (and this would be a good OEC), but conversions per new user (i.e. new users who made a purchase) may be more interesting for the long run of your business.

Keep in mind that just because you have decided what to measure, are measuring it, and see that on average B > A, this does not mean you actually have a real difference (that is to say, something actionable). The alternative is that the observed difference in average values between A and B is due to chance. The Experimentation Platform employs advanced statistical methods to tell you whether your results are statistically significant. We generally consider the results statistically significant if there is a 5% or lower probability that a difference this large could have occurred by chance alone, or put another way, that we have 95% or higher confidence in the results. Of course higher than 95% is better, and is often seen in ExP experiments.
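As a rough illustration of what "statistically significant" means for a click-through-rate comparison, here is a minimal sketch of a textbook two-proportion z-test. This is a stand-in chosen for simplicity, not the method ExP uses (the post does not say which methods those are), and the function name and the sample numbers are made up for the example.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    clicks_a: int, views_a: int, clicks_b: int, views_b: int
) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in click-through rate.

    Assumes independent page views and samples large enough for the
    normal approximation to hold.
    """
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b

    # Pooled rate under the null hypothesis that A and B perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: control (A) vs. treatment (B).
    z, p = two_proportion_z_test(1000, 10000, 1200, 10000)
    verdict = "significant" if p <= 0.05 else "not significant"
    print(f"z = {z:.2f}, p = {p:.6f} -> {verdict} at the 5% level")
```

A p-value at or below 0.05 corresponds to the 5% threshold described above. A small difference on a small sample will usually fail this test even when B's average is higher than A's.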

Ultimate control

So why go through this trouble? Why not simply take down the old website, put up the new one, and then do all the same measurements of user behavior and see if there are any differences? Because then your data will be worthless: you are no longer controlling for the effect of things other than the variant or feature you want to test. What if Oprah mentions your website sometime around the transition period, or an ad campaign starts running, or you have network problems, or any of thousands of other events occur that can affect user behavior and your user traffic? You have no way of determining how much of the effect was caused by your change from website A to B and how much was caused by the other event. That is why only Controlled Experiments are useful.

Even parallel A/B experiments can be ill-controlled if they are poorly designed. For example, if you use a client-side redirect through JavaScript to show the content of the Treatment but not the Control, you may introduce an extra delay in the Treatment. This will likely cause a decrease in click-through rate and other metrics. Another common mistake occurs when a site conducting an experiment has frequent updates (e.g. news or other content) and these updates are not made equally to all variants [Kohavi et al. 2009]. Both of these cases introduce another factor that makes comparison impossible.

Show me the money

Here is an example of an experiment that was run. The MSN Real Estate site wanted to test different designs for their “Find a home” widget. Visitors to this widget were sent to Microsoft partner sites from which MSN Real Estate earns a referral fee. Six different designs, including the incumbent, were tested. Can you guess which one was the winner?

The winner, Treatment 5, increased revenues from referrals by almost 10% (due to increased click-through). The Return-On-Investment (ROI) was phenomenal [Kohavi et al. 2009].

Why did Treatment 5 win? Well, experimentation tells you what happens. The why is not always clear, but after running many experiments on many sites certain trends do emerge. One is that tabbed designs (Treatments 1, 2, 3, and 4) tend to test poorly. They present a clean layout but add extra friction (clicking through the tabs) between the user and the functionality. Another is that a single input field tends to win over multiple input fields.

Who doesn't like experimentation?

Google's top designer, Doug Bowman, resigned his post, claiming that too many design decisions were made based on experimentation and that "data eventually becomes a crutch for every decision". He specifically decried "that a team at Google couldn’t decide between two blues, so they’re testing 41 shades between each blue to see which one performs better. I had a recent debate over whether a border should be 3, 4 or 5 pixels wide". It sounds to me like Google needs some help choosing its OEC. Perhaps it was not experimentation that drove Doug away, but poor experiment design.

Some in software have claimed that experimentation is good for incremental feedback, but not so good for (or actually prevents you from building) the new and innovative. Again, I think this comes down to experiment design. You can design incremental experiments and realize value from them, or you can design bold experiments and leverage those to make large innovative changes. Experimentation actually drives innovation by allowing you to try many things in the real world and see what works, rather than building up a huge feature set in the isolation of development, releasing only every 6 months or 1 year, and then finding that half the features were wasted work (or worse, negative contributors).

On the ExP team our mascot is the HiPPO which stands for "Highest Paid Person's Opinion". In the time before Experimentation, in a land without data, this was the person who decided what got deployed and what did not. Beware of HiPPOs, they kill innovative ideas.

More

- There's lots more information on the ExP site. If you only read one paper, check out Online Experimentation at Microsoft, and when you are ready for more, read Seven Pitfalls to Avoid when Running Controlled Experiments on the Web.

- A fun site which lets you test your skills at predicting experiment winners is https://whichtestwon.com/. They have a new experiment every few days and you can follow them on Twitter.

- Google Website Optimizer is a free service that lets you run experiments on your own websites.