Usage considerations for DICOM data transformation in healthcare data solutions

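This article outlines the key considerations to review before you use the DICOM data transformation capability. Understanding these factors helps ensure smooth integration and operation within your healthcare data solutions environment. It also helps you work around the capability's potential challenges and limitations.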

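Ingestion file size

Currently, there's a logical size limit of 8 GB for ZIP files and 4 GB for native DCM files. Files that exceed these limits can cause longer execution times or failed ingestion. To ensure successful execution, we recommend splitting ZIP files into smaller segments (under 4 GB). For native DCM files larger than 4 GB, scale up the Spark nodes (executors) as needed.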
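
As a rough illustration of the limits above, the following sketch classifies a file before ingestion. It isn't part of the capability: the function name, the return labels, and the use of binary gigabytes (1024^3 bytes) are assumptions made for this example.

```python
from pathlib import PurePath

GB = 1024 ** 3  # assumption: binary gigabytes; the article doesn't specify

# Limits taken from the paragraph above.
ZIP_LIMIT_BYTES = 8 * GB
DCM_LIMIT_BYTES = 4 * GB
RECOMMENDED_ZIP_SEGMENT_BYTES = 4 * GB


def check_ingest_size(file_name: str, size_bytes: int) -> str:
    """Classify a file against the documented ingestion size limits.

    Hypothetical helper: returns "ok", "split-recommended",
    "exceeds-limit", or "unsupported".
    """
    suffix = PurePath(file_name).suffix.lower()
    if suffix == ".zip":
        if size_bytes > ZIP_LIMIT_BYTES:
            return "exceeds-limit"
        if size_bytes >= RECOMMENDED_ZIP_SEGMENT_BYTES:
            # Within the hard limit, but not under the recommended 4 GB.
            return "split-recommended"
        return "ok"
    if suffix == ".dcm":
        return "exceeds-limit" if size_bytes > DCM_LIMIT_BYTES else "ok"
    return "unsupported"
```

You could run a check like this over a staging folder before you copy files into the Ingest folder, and split or reroute anything that isn't reported as "ok".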

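DICOM tags extraction

We prioritize promoting the 29 tags present in the ImagingDicom delta table in the bronze lakehouse for two reasons:

- These 29 tags, such as modality and laterality, are essential for general querying and exploration of DICOM data.

- These tags are required to generate and populate the silver (FHIR) and gold (OMOP) delta tables in subsequent execution steps.

You can extend and promote other DICOM tags of interest. However, the DICOM data transformation notebooks don't automatically recognize or process any extra DICOM tag columns that you add to the ImagingDicom delta table in the bronze lakehouse. You need to process the extra columns separately.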

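Append pattern in the bronze lakehouse

All newly ingested DCM (or ZIP) files are appended to the ImagingDicom delta table in the bronze lakehouse. For every successfully ingested DCM file, a new record is created in the ImagingDicom delta table. There's no business logic for merge or update operations at the bronze lakehouse level.

The ImagingDicom delta table reflects every ingested DCM file at the DICOM instance level and should be treated as such. If the same DCM file is ingested into the Ingest folder again, another entry for that file is added to the ImagingDicom delta table. However, the file names differ because of the Unix timestamp prefix. Depending on the ingestion date, the file might also be placed in a different YYYY\MM\DD folder.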

OMOP version and imaging extensions

The current implementation of the gold lakehouse is based on the Observational Medical Outcomes Partnership (OMOP) Common Data Model version 5.4. OMOP doesn't yet have a normative extension to support imaging data. Therefore, the capability implements the extension proposed in Development of Medical Imaging Data Standardization for Imaging-Based Observational Research: OMOP Common Data Model Extension. This extension, published on February 5, 2024, is the most recent proposal in the imaging research field. The current release of the DICOM data transformation capability is limited to the Image_Occurrence table in the gold lakehouse.

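Structured streaming in Spark

Structured streaming is a scalable and fault-tolerant stream-processing engine built on the Spark SQL engine. It lets you express a streaming computation the same way you would express a batch computation on static data. The system ensures end-to-end fault tolerance through checkpointing and write-ahead logs. For more information about structured streaming, see the Structured Streaming Programming Guide (v3.5.1).

We use ForeachBatch to process incremental data. This method lets you apply arbitrary operations and custom write logic to the output of a streaming query. The logic runs on the output data of each micro-batch of the streaming query. In the DICOM data transformation capability, structured streaming is used in the following execution steps: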

| Execution step | Checkpoint folder location | Tracked objects |
|---|---|---|
| Extract DICOM metadata into the bronze lakehouse | healthcare#.HealthDataManager\DMHCheckpoint\medical_imaging\dicom_metadata_extraction | DCM files in the bronze lakehouse under Files\Process\Imaging\DICOM\YYYY\MM\DD. |
| Convert DICOM metadata to the FHIR format | healthcare#.HealthDataManager\DMHCheckpoint\medical_imaging\dicom_to_fhir | The ImagingDicom delta table in the bronze lakehouse. |
| Ingest data into the bronze lakehouse ImagingStudy delta table | healthcare#.HealthDataManager\DMHCheckpoint\<bronzelakehouse>\ImagingStudy | FHIR NDJSON files in the bronze lakehouse under Files\Process\Clinical\FHIR NDJSON\YYYY\MM\DD\ImagingStudy. |
| Ingest data into the silver lakehouse ImagingStudy delta table | healthcare#.HealthDataManager\DMHCheckpoint\<silverlakehouse>\ImagingStudy | The ImagingStudy delta table in the bronze lakehouse. |

Tip

You can use OneLake file explorer to view the contents of the files and folders listed in the table. For more information, see Use OneLake file explorer.

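Group pattern in the bronze lakehouse

The group pattern is applied when new records are ingested from the ImagingDicom delta table in the bronze lakehouse into the ImagingStudy delta table in the bronze lakehouse. The DICOM data transformation capability groups all instance-level records in the ImagingDicom delta table at the study level. It creates one ImagingStudy record per DICOM study and inserts that record into the ImagingStudy delta table in the bronze lakehouse.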
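
The grouping described above can be sketched in plain Python as follows. This is only a conceptual model, not the notebook's implementation: the function name and the record fields (studyInstanceUid, patientId, modality) are hypothetical stand-ins for the ImagingDicom columns.

```python
from collections import defaultdict


def group_instances_by_study(instances):
    """Collapse instance-level rows into one record per DICOM study.

    Hypothetical sketch: each input row is a dict with the illustrative
    keys "studyInstanceUid", "patientId", and "modality".
    """
    grouped = defaultdict(list)
    for row in instances:
        grouped[row["studyInstanceUid"]].append(row)

    studies = []
    for study_uid, rows in grouped.items():
        studies.append(
            {
                "studyInstanceUid": study_uid,
                # Assumes all instances of a study share one patient.
                "patientId": rows[0]["patientId"],
                "modalities": sorted({r["modality"] for r in rows}),
                "numberOfInstances": len(rows),
            }
        )
    return studies


instances = [
    {"studyInstanceUid": "1.2.3", "patientId": "P1", "modality": "CT"},
    {"studyInstanceUid": "1.2.3", "patientId": "P1", "modality": "CT"},
    {"studyInstanceUid": "4.5.6", "patientId": "P2", "modality": "MR"},
]
studies = group_instances_by_study(instances)
```

Here, three instance-level rows produce two study-level records, one per distinct study UID.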

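Upsert pattern in the silver lakehouse

The upsert operation compares the FHIR delta tables in the bronze and silver lakehouses based on {FHIRResource}.id.

- If a match is identified, the silver record is updated with the new bronze record.

- If no match is identified, the bronze record is inserted as a new record in the silver lakehouse.

This pattern is used to create resources in the ImagingStudy table in the silver lakehouse.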
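
The two upsert cases above can be modeled with a dictionary keyed by resource ID. This is a minimal sketch under stated assumptions, not the notebook's code: the function name and the "id" field are illustrative, and the real operation runs against delta tables rather than in-memory dicts.

```python
def upsert_by_id(silver, bronze_records):
    """Upsert bronze records into a silver table keyed by resource id.

    Hypothetical sketch: `silver` maps id -> record, and each bronze
    record is a dict with an "id" key. Returns the new silver table and
    the action taken for each bronze record.
    """
    result = dict(silver)  # leave the caller's table untouched
    actions = []
    for record in bronze_records:
        action = "update" if record["id"] in result else "insert"
        result[record["id"]] = record
        actions.append((record["id"], action))
    return result, actions


silver = {"study-1": {"id": "study-1", "status": "registered"}}
bronze = [
    {"id": "study-1", "status": "available"},  # match: update
    {"id": "study-2", "status": "available"},  # no match: insert
]
new_silver, actions = upsert_by_id(silver, bronze)
```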

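ImagingStudy limitations

The upsert operation works as expected when you ingest DCM files from the same DICOM study in the same batch execution. However, if you later ingest more DCM files (in a different batch) that belong to a DICOM study already ingested into the silver lakehouse, the ingestion results in an Insert operation. The process doesn't perform an Update operation.

This Insert operation occurs because the notebook creates a new {FHIRResource}.id for ImagingStudy in each batch execution. The new ID doesn't match the ID from the previous batch. As a result, the silver table shows two ImagingStudy records with different ImagingStudy.id values. Each ID is tied to its own batch execution, but both records belong to the same DICOM study.

As a workaround, complete the batch executions and then merge the two ImagingStudy records in the silver lakehouse based on a combination of unique IDs. Don't use ImagingStudy.id for the merge. Instead, you can use other IDs such as [studyInstanceUid (0020,000D)] and [patientId (0010,0020)] to merge the records.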
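
One way to picture the workaround is the following sketch, which merges ImagingStudy records that share the same studyInstanceUid and patientId. It's an illustration only: the function name, the instanceUids field, and the choice to keep the first record's id are assumptions, and your own merge rules might differ.

```python
def merge_duplicate_studies(records):
    """Merge ImagingStudy records that describe the same DICOM study.

    Hypothetical sketch: records are dicts with the illustrative keys
    "id", "studyInstanceUid", "patientId", and "instanceUids". The merge
    key deliberately excludes "id", which differs per batch execution.
    """
    merged = {}
    for rec in records:
        key = (rec["studyInstanceUid"], rec["patientId"])
        if key not in merged:
            # Assumption: keep the first record's id and copy its list.
            merged[key] = {**rec, "instanceUids": list(rec["instanceUids"])}
            continue
        target = merged[key]
        for uid in rec["instanceUids"]:
            if uid not in target["instanceUids"]:
                target["instanceUids"].append(uid)
    return list(merged.values())


records = [
    {"id": "batch1-id", "studyInstanceUid": "1.2.3", "patientId": "P1",
     "instanceUids": ["1.2.3.1", "1.2.3.2"]},
    {"id": "batch2-id", "studyInstanceUid": "1.2.3", "patientId": "P1",
     "instanceUids": ["1.2.3.3"]},
]
merged_records = merge_duplicate_studies(records)
```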

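OMOP tracking approach

The healthcare#_msft_omop_silver_gold_transformation notebook uses the OMOP API to monitor changes in the silver lakehouse delta tables. It identifies newly modified or added records that need to be upserted into the gold lakehouse delta tables. This process is known as watermarking.

The OMOP API compares the date and time values of {Silver.FHIRDeltatable.modified_date} and {Gold.OMOPDeltatable.SourceModifiedOn} to determine the incremental records that were modified or added since the last notebook execution. However, this OMOP tracking approach doesn't apply to all delta tables in the gold lakehouse. The following tables aren't ingested from the delta tables in the silver lakehouse:

- concept

- concept_ancestor

- concept_class

- concept_relationship

- concept_synonym

- fhir_system_to_omop_vocab_mapping

- vocabulary

These gold delta tables are populated using the vocabulary data included in the OMOP sample data deployment. The vocabulary dataset in that deployment folder is managed using structured streaming in Spark.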
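
The watermarking comparison described earlier in this section can be approximated as follows. The article doesn't document the OMOP API's internals, so this is one plausible reading rather than its actual logic: the function name is hypothetical, and the sketch assumes the watermark is the latest SourceModifiedOn value in the gold table and that timestamps are naive datetime values.

```python
from datetime import datetime


def select_incremental(silver_rows, gold_rows):
    """Return silver rows modified after the latest gold SourceModifiedOn.

    Hypothetical sketch: silver rows carry "modified_date" and gold rows
    carry "SourceModifiedOn", mirroring the column names in the text.
    """
    watermark = max(
        (g["SourceModifiedOn"] for g in gold_rows),
        default=datetime.min,  # empty gold table: everything is new
    )
    return [s for s in silver_rows if s["modified_date"] > watermark]


silver_rows = [
    {"id": "a", "modified_date": datetime(2024, 1, 1)},
    {"id": "b", "modified_date": datetime(2024, 3, 1)},
]
gold_rows = [{"SourceModifiedOn": datetime(2024, 2, 1)}]
incremental = select_incremental(silver_rows, gold_rows)
```

With these sample rows, only record "b" was modified after the watermark, so it's the only one selected for upserting.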

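Upsert pattern in the gold lakehouse

The upsert pattern in the gold lakehouse differs from the one in the silver lakehouse. The OMOP API used by the healthcare#_msft_omop_silver_gold_transformation notebook creates a new ID for each entry in the gold lakehouse delta tables. The API creates these IDs when it ingests or converts new records from the silver lakehouse into the gold lakehouse. The OMOP API also maintains an internal mapping between the newly created IDs and the corresponding internal IDs in the silver lakehouse delta tables.

The API operates as follows:

- When a record is converted from a silver delta table to a gold delta table for the first time, the API generates a new ID in the OMOP gold lakehouse and maps it to the record's original ID in the silver lakehouse. It then inserts the record into the gold delta table with the newly generated ID.

- When the same record in the silver lakehouse is modified and ingested into the gold lakehouse again, the OMOP API uses the mapping information to recognize the existing record in the gold lakehouse. It then updates the gold lakehouse record under the same ID that it generated previously.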
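
The insert-then-update behavior above can be sketched with an in-memory key mapping. This isn't the OMOP API: the class and function names are hypothetical, and the sequential integer IDs are an assumption made only to keep the example short. ADRM_ID and INTERNAL_ID are the mapping column names mentioned in this article.

```python
import itertools


class KeyMapping:
    """Hypothetical in-memory stand-in for the ID mapping store."""

    def __init__(self):
        self._internal_to_adrm = {}
        self._next_id = itertools.count(1)  # assumption: sequential IDs

    def resolve(self, internal_id):
        """Return (adrm_id, is_new) for a silver (internal) ID."""
        if internal_id in self._internal_to_adrm:
            return self._internal_to_adrm[internal_id], False
        adrm_id = next(self._next_id)
        self._internal_to_adrm[internal_id] = adrm_id
        return adrm_id, True


def upsert_gold(gold, mapping, silver_record):
    """Insert or update a gold record under its mapped ID."""
    adrm_id, is_new = mapping.resolve(silver_record["id"])
    fields = {k: v for k, v in silver_record.items() if k != "id"}
    gold[adrm_id] = {
        "ADRM_ID": adrm_id,
        "INTERNAL_ID": silver_record["id"],
        **fields,
    }
    return adrm_id, "insert" if is_new else "update"


gold = {}
mapping = KeyMapping()
first = upsert_gold(gold, mapping, {"id": "silver-1", "value": "v1"})
second = upsert_gold(gold, mapping, {"id": "silver-1", "value": "v2"})
```

The second call reuses the ID generated by the first, so the gold table still holds a single record, now with the updated value.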

The mappings between the newly generated IDs in the gold lakehouse (ADRM_ID) and the original IDs (INTERNAL_ID) for each OMOP delta table are stored in OneLake Parquet files. You can find the Parquet files at the following path:

[OneLakePath]\[workspace]\healthcare#.HealthDataManager\DMHCheckpoint\dtt\dtt_state_db\KEY_MAPPING\[OMOPTableName]_ID_MAPPING

You can also query the Parquet files in a Spark notebook to view the mappings.

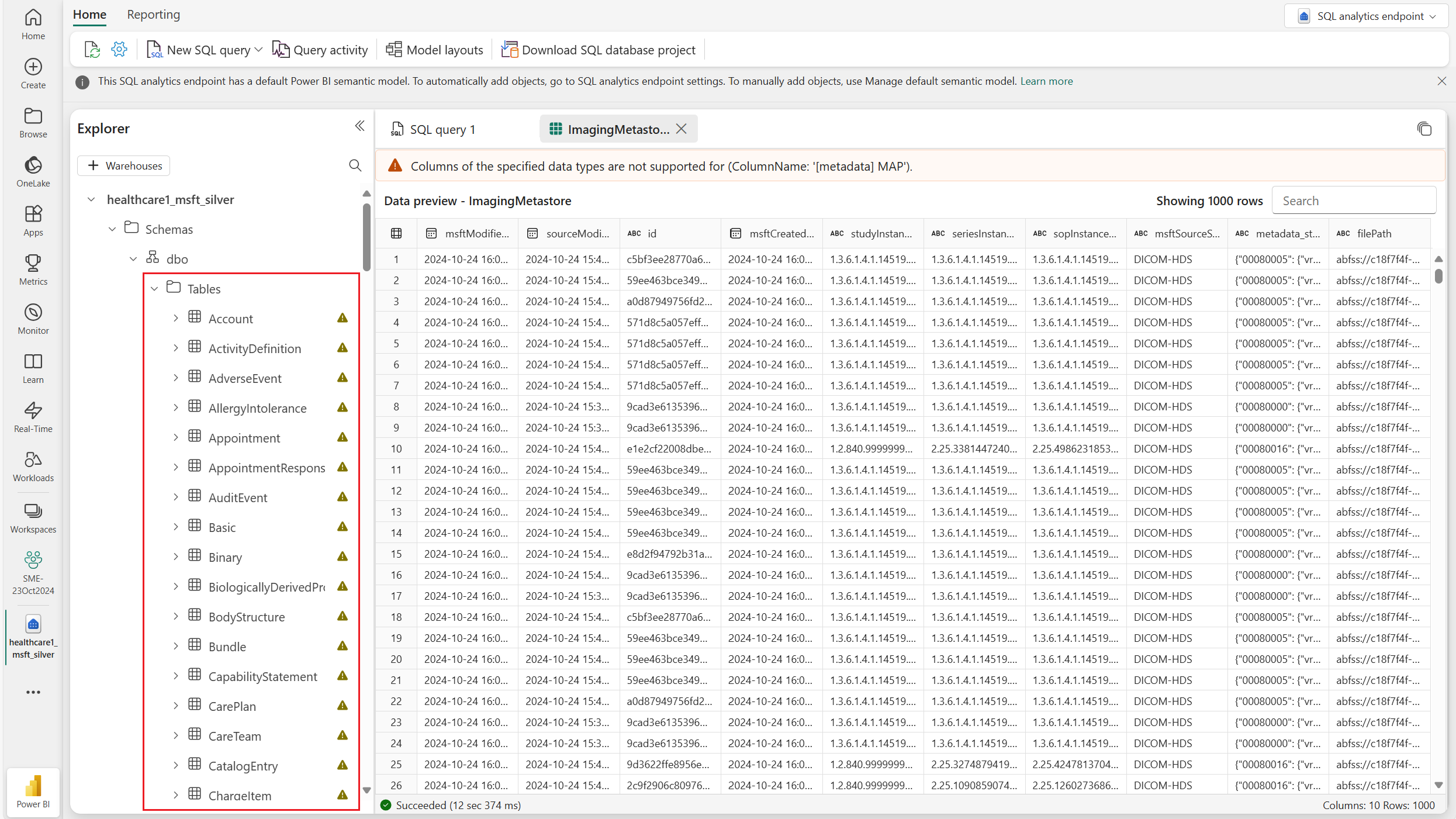

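ImagingMetastore design in the silver lakehouse

A single DICOM file can contain up to 5,000 distinct tags, so mapping and creating fields for all of them in the silver lakehouse is inefficient and resource-intensive. However, keeping access to the complete set of tags is essential to prevent data loss and retain flexibility, particularly if you need tags beyond the 29 that are extracted and represented in the data model. To address this issue, the ImagingMetastore delta table in the silver lakehouse stores all DICOM tags in the metadata_string column. The tags are represented as key-value pairs in stringified JSON format, which allows efficient querying through the SQL analytics endpoint. This approach aligns with the standard practice for managing complex JSON data across all fields in the silver lakehouse.

The transformation from the ImagingDicom table in the bronze lakehouse to the ImagingMetastore table in the silver lakehouse doesn't perform any grouping. In both tables, resources are represented at the instance level. However, the ImagingMetastore table includes {FHIRResource}.id. With this value, you can query all instance-level artifacts associated with a particular study by referencing its unique ID.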
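
To show what working with a stringified JSON column can look like, the following sketch reads one tag out of a metadata_string value with the Python standard library. The helper name and the tag keys in the sample ("Modality", "Manufacturer") are assumptions for illustration; check the actual key format in your own ImagingMetastore table.

```python
import json


def get_tag(metadata_string, tag_name, default=None):
    """Read one DICOM tag from a stringified JSON key-value payload.

    Hypothetical helper: assumes the payload is a flat JSON object.
    """
    tags = json.loads(metadata_string)
    return tags.get(tag_name, default)


# Illustrative payload; real rows can hold thousands of tags.
sample = json.dumps({"Modality": "CT", "Manufacturer": "ExampleVendor"})
manufacturer = get_tag(sample, "Manufacturer")
missing = get_tag(sample, "BodyPartExamined", default="n/a")
```

Because the column is a plain string, tags that aren't among the 29 promoted columns remain reachable without any schema change.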

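Integration with the DICOM service

The current integration between the DICOM data transformation capability and the Azure Health Data Services DICOM service supports only Create and Update events. You can create new imaging studies, series, and instances, or update existing ones. However, the integration doesn't yet support Delete events. If you delete a study, series, or instance in the DICOM service, the DICOM data transformation capability doesn't reflect the change. The imaging data remains unchanged and isn't deleted.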

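Table warnings

A warning appears for every table in each lakehouse where one or more columns use complex object-oriented data types to represent data. In the ImagingDicom and ImagingMetastore tables, the metadata_string column uses a JSON structure to map DICOM tags as key-value pairs. This design works around a limitation of the Fabric SQL endpoint, which doesn't support complex data types such as structs, arrays, and maps. You can query these columns as strings through the SQL endpoint (T-SQL), or work with them as native types (structs, arrays, maps) in Spark.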