Configure a connection to use Azure AI model inference in your AI project

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement and isn't recommended for production workloads. Certain features might not be supported or might have limited capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

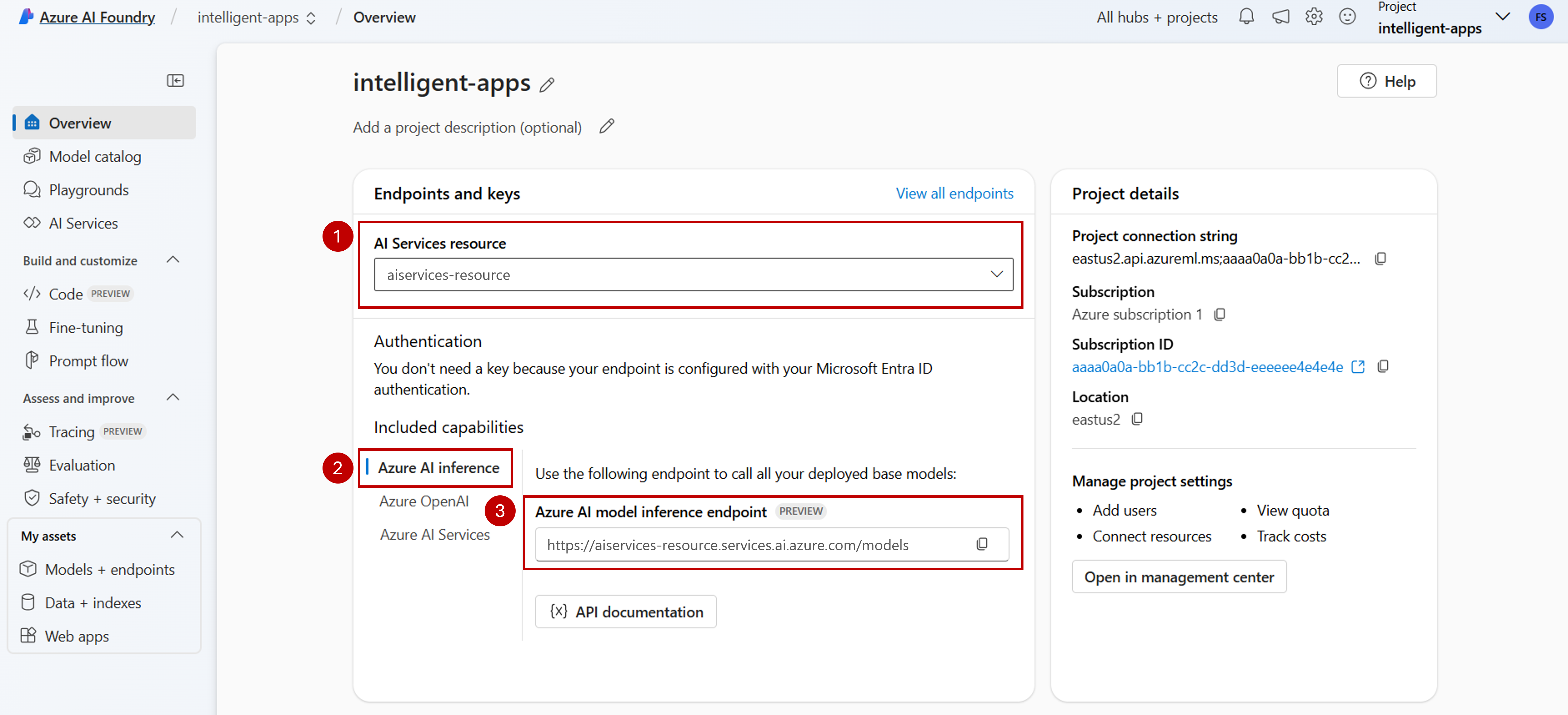

You can use Azure AI model inference in your projects in Azure AI Foundry to build applications and to interact with and manage the available models. To use the Azure AI model inference service in your project, you need to create a connection to the Azure AI Services resource.

This article explains how to create a connection to the Azure AI Services resource so that you can use the inference endpoint.

Prerequisites

To complete this article, you need the following:

- An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. If that's your case, read Upgrade from GitHub Models to Azure AI model inference.

- An Azure AI Services resource. For more information, see Create an Azure AI Services resource.

- An AI project connected to your Azure AI Services resource. Follow the steps in Configure the Azure AI model inference service in your project in Azure AI Foundry.

Add a connection

You can create a connection to an Azure AI Services resource by following these steps:

Go to the Azure AI Foundry portal.

In the lower-left corner of the screen, select Management center.

In the Connections section, select New connection.

Select Azure AI Services.

In the browser, look for an existing Azure AI Services resource in your subscription.

Select Add connection.

The new connection is added to your hub.

Return to the project's landing page to continue, and select the newly created connection. Refresh the page if it doesn't show up immediately.

View the model deployments in the connected resource

You can see the model deployments available in the connected resource by following these steps:

Go to the Azure AI Foundry portal.

In the left navigation bar, select Models + endpoints.

The page lists the available model deployments, grouped by connection name. Locate the connection you just created, which should be of type Azure AI Services.

Select any model deployment you want to inspect.

The details page shows information about that specific deployment. To test the model, use the Open in playground option.

The Azure AI Foundry playground opens, where you can interact with the selected model.

Prerequisites

To complete this article with the Azure CLI, you need the following:

- An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. If that's your case, read Upgrade from GitHub Models to Azure AI model inference.

- An Azure AI Services resource. For more information, see Create an Azure AI Services resource.

- Install the Azure CLI and the ml extension for Azure AI Foundry:

      az extension add -n ml

- Identify the following information:

  - Your Azure subscription ID.

  - The name of your Azure AI Services resource.

  - The resource group where the Azure AI Services resource is deployed.
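Before moving on, it can help to collect these values in shell variables and derive the endpoint URL from the resource name. The following is a minimal sketch: the variable names and sample values are hypothetical placeholders, and the URL pattern is the one used for the endpoint in the connection definition in this article.

```shell
#!/bin/sh
# Hypothetical placeholder values (assumptions); replace them with your own.
SUBSCRIPTION_ID="00000000-0000-0000-0000-000000000000"
AI_SERVICES_NAME="my-ai-services"
RESOURCE_GROUP="my-resource-group"

# Report any value that was left empty.
missing=0
for var in SUBSCRIPTION_ID AI_SERVICES_NAME RESOURCE_GROUP; do
  eval "value=\${$var}"
  if [ -z "$value" ]; then
    echo "Missing value: $var" >&2
    missing=1
  fi
done

# Derive the inference endpoint from the resource name.
ENDPOINT_URI="https://${AI_SERVICES_NAME}.services.ai.azure.com"
echo "Endpoint: ${ENDPOINT_URI}"
```

Keeping the values in variables means the same names can be reused in later commands instead of retyping each identifier.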

Add a connection

To add the connection with the Azure CLI, follow these steps:

Sign in to your Azure subscription:

    az login

Configure the CLI to point to your project:

    az account set --subscription <subscription>
    az configure --defaults workspace=<project-name> group=<resource-group> location=<location>

Create a connection definition:

connection.yml

    name: <connection-name>
    type: aiservices
    endpoint: https://<ai-services-resourcename>.services.ai.azure.com
    api_key: <resource-api-key>

Create the connection:

    az ml connection create -f connection.yml

At this point, the connection is available for use.
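If you prefer not to edit connection.yml by hand, the definition can be generated from shell variables. This is a sketch under stated assumptions: the variable names, the sample values, and the temporary directory are hypothetical, and the API key is read from an environment variable named API_KEY that you would set yourself. The four fields written are the same ones shown in the connection definition.

```shell
#!/bin/sh
# Hypothetical placeholder values (assumptions); replace them with your own.
CONNECTION_NAME="my-ai-services-connection"
AI_SERVICES_NAME="my-ai-services"
# Read the key from the environment; fall back to the article's placeholder.
API_KEY="${API_KEY:-<resource-api-key>}"

# Write the definition into a private temporary directory.
WORKDIR="$(mktemp -d)"
CONNECTION_FILE="${WORKDIR}/connection.yml"
cat > "$CONNECTION_FILE" <<EOF
name: ${CONNECTION_NAME}
type: aiservices
endpoint: https://${AI_SERVICES_NAME}.services.ai.azure.com
api_key: ${API_KEY}
EOF

# The file contains a secret, so restrict it to the current user.
chmod 600 "$CONNECTION_FILE"
echo "Wrote ${CONNECTION_FILE}"
```

The generated file could then be passed to az ml connection create with the -f option and deleted once the connection exists.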

Prerequisites

To complete this article with Bicep, you need the following:

- An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. If that's your case, read Upgrade from GitHub Models to Azure AI model inference.

- An Azure AI Services resource. For more information, see Create an Azure AI Services resource.

- An Azure AI project with an AI hub.

- Install the Azure CLI.

- Identify the following information:

  - Your Azure subscription ID.

  - The name of your Azure AI Services resource.

  - The resource ID of your Azure AI Services resource.

  - The name of the Azure AI hub where the project is deployed.

  - The resource group where the Azure AI Services resource is deployed.
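The resource ID is the full Azure Resource Manager identifier of the Azure AI Services resource. As a sketch, it can be assembled from the subscription ID, resource group, and resource name, assuming the resource is of type Microsoft.CognitiveServices/accounts (check the resource's properties in the Azure portal if in doubt). The variable names and sample values below are hypothetical.

```shell
#!/bin/sh
# Hypothetical placeholder values (assumptions); replace them with your own.
SUBSCRIPTION_ID="00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP="my-resource-group"
AI_SERVICES_NAME="my-ai-services"

# Assumption: the resource type is Microsoft.CognitiveServices/accounts.
RESOURCE_ID="/subscriptions/${SUBSCRIPTION_ID}/resourceGroups/${RESOURCE_GROUP}/providers/Microsoft.CognitiveServices/accounts/${AI_SERVICES_NAME}"

# Endpoint pattern as used elsewhere in this article.
ENDPOINT_URI="https://${AI_SERVICES_NAME}.services.ai.azure.com"

echo "Resource ID: ${RESOURCE_ID}"
echo "Endpoint:    ${ENDPOINT_URI}"
```

Copying the ID from the resource's properties page in the portal is an equally valid way to obtain it, and it avoids any assumption about the resource type.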

Add a connection

Use the template ai-services-connection-template.bicep to describe the connection:

ai-services-connection-template.bicep

    @description('Name of the hub where the connection will be created')
    param hubName string

    @description('Name of the connection')
    param name string

    @description('Category of the connection')
    param category string = 'AIServices'

    @allowed(['AAD', 'ApiKey', 'ManagedIdentity', 'None'])
    param authType string = 'AAD'

    @description('The endpoint URI of the connected service')
    param endpointUri string

    @description('The resource ID of the connected service')
    param resourceId string = ''

    @secure()
    param key string = ''

    resource connection 'Microsoft.MachineLearningServices/workspaces/connections@2024-04-01-preview' = {
      name: '${hubName}/${name}'
      properties: {
        category: category
        target: endpointUri
        authType: authType
        isSharedToAll: true
        credentials: authType == 'ApiKey' ? {
          key: key
        } : null
        metadata: {
          ApiType: 'Azure'
          ResourceId: resourceId
        }
      }
    }

Run the deployment:

    RESOURCE_GROUP="<resource-group-name>"
    ACCOUNT_NAME="<azure-ai-model-inference-name>"
    ENDPOINT_URI="https://<azure-ai-model-inference-name>.services.ai.azure.com"
    RESOURCE_ID="<resource-id>"
    HUB_NAME="<hub-name>"

    # The template declares a required "name" parameter (the connection name)
    # and no "accountName" parameter, so the resource name is passed as "name".
    az deployment group create \
        --resource-group "$RESOURCE_GROUP" \
        --template-file ai-services-connection-template.bicep \
        --parameters name="$ACCOUNT_NAME" hubName="$HUB_NAME" endpointUri="$ENDPOINT_URI" resourceId="$RESOURCE_ID"
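The template defaults authType to 'AAD'. To connect with an API key instead, the authType and key parameters declared in the template would also need values. The sketch below only assembles and prints the parameter list for review; it does not call az. The variable names and sample values are hypothetical, and the key is deliberately left as a placeholder.

```shell
#!/bin/sh
# Hypothetical placeholder values (assumptions); replace them with your own.
CONNECTION_NAME="my-ai-services-connection"
HUB_NAME="my-hub"
ENDPOINT_URI="https://my-ai-services.services.ai.azure.com"
RESOURCE_ID="<resource-id>"
AUTH_TYPE="ApiKey"

# Accept only the values listed in the template's @allowed decorator.
case "$AUTH_TYPE" in
  AAD|ApiKey|ManagedIdentity|None) auth_ok=1 ;;
  *) auth_ok=0; echo "Unsupported authType: $AUTH_TYPE" >&2 ;;
esac

# Parameter names match those declared in the Bicep template.
PARAMS="name=${CONNECTION_NAME} hubName=${HUB_NAME} endpointUri=${ENDPOINT_URI} resourceId=${RESOURCE_ID} authType=${AUTH_TYPE}"

# The key is only used by the template when authType is ApiKey.
if [ "$AUTH_TYPE" = "ApiKey" ]; then
  PARAMS="${PARAMS} key=<resource-api-key>"
fi

echo "--parameters ${PARAMS}"
```

Keep in mind that a key typed on the command line may end up in your shell history, so the default 'AAD' authentication is worth considering where it fits.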