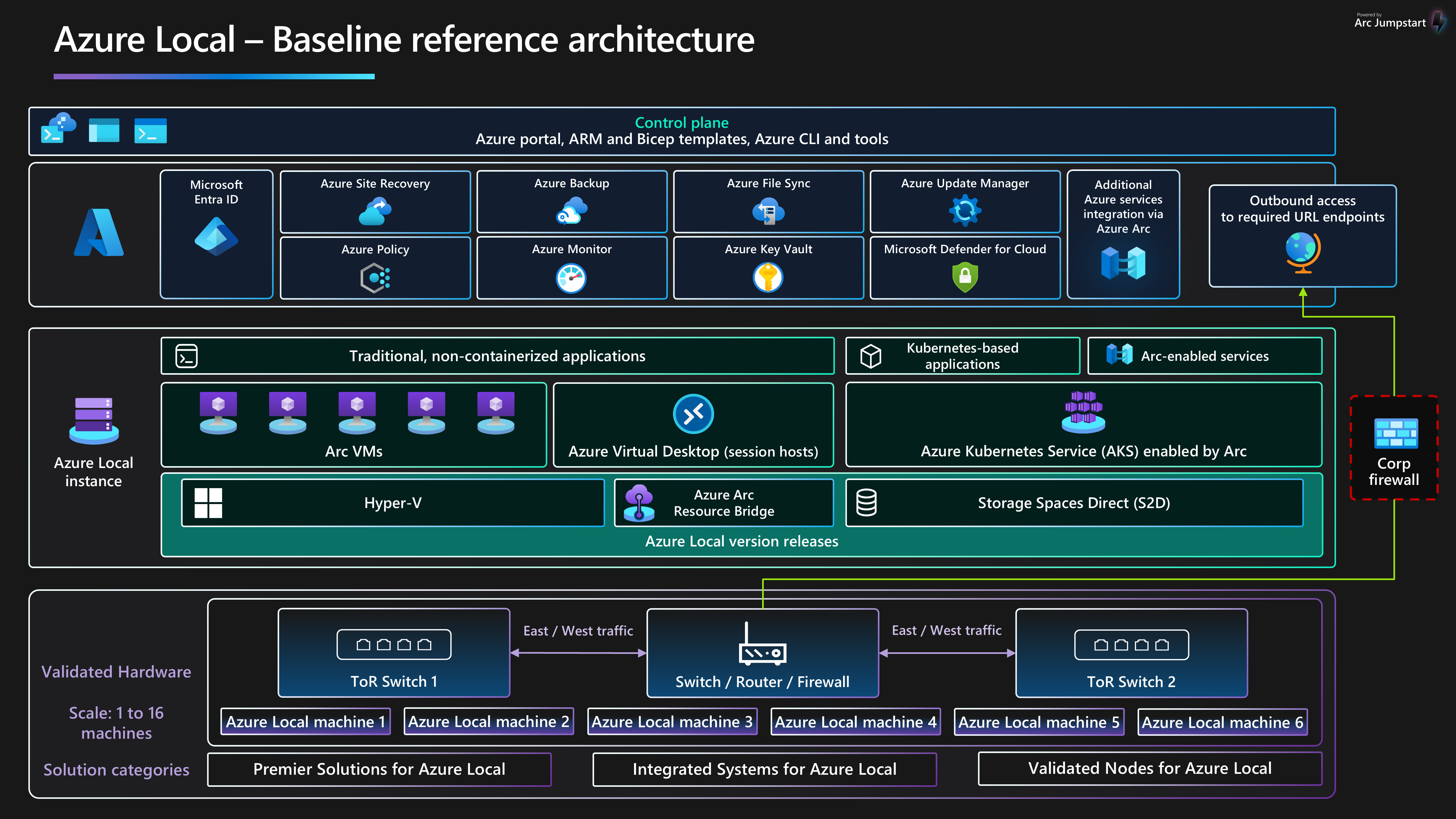

This baseline reference architecture provides workload-agnostic guidance and recommendations for configuring Azure Local, version 23H2, release 2311 and later, so that the infrastructure delivers a reliable platform that can deploy and manage highly available virtualized and containerized workloads. The architecture describes the resource components and cluster design choices for the physical nodes that provide local compute, storage, and networking capabilities. It also describes how to use Azure services to simplify and streamline the day-to-day management of Azure Local.

For more information about workload architecture patterns that are optimized to run on Azure Local, see the content in the Azure Local workloads navigation menu.

This architecture is a starting point for using the storage switched network design to deploy a multinode Azure Local instance. The workload applications deployed on an Azure Local instance should be well architected. Well-architected workload applications must be deployed with multiple instances or high availability for all critical workload services, and must have appropriate business continuity and disaster recovery (BCDR) controls in place. These BCDR controls include regular backups and disaster recovery failover capabilities. To keep the focus on the HCI infrastructure platform, these workload design aspects are intentionally excluded from this article.

For guidelines and recommendations for the five pillars of the Azure Well-Architected Framework, see the Azure Local Well-Architected Framework service guide.

Article layout

| Architecture | Design decisions | Well-Architected Framework approach |
|---|---|---|
| ▪ Architecture ▪ Potential use cases ▪ Scenario details ▪ Platform resources ▪ Platform-supporting resources ▪ Deploy this scenario | ▪ Cluster design choices ▪ Physical disk drives ▪ Network design ▪ Monitoring ▪ Update management | ▪ Reliability ▪ Security ▪ Cost Optimization ▪ Operational Excellence ▪ Performance Efficiency |

Tip

The Azure Local template shows how to use an Azure Resource Manager template (ARM template) and a parameter file to deploy a switched multi-server deployment of Azure Local. Alternatively, the Bicep example shows how to use a Bicep template to deploy an Azure Local instance and its prerequisite resources.

Architecture

For more information, see Related resources.

Potential use cases

Typical use cases for Azure Local include running high availability (HA) workloads in on-premises or edge locations, which provides a solution to address workload requirements. You can:

Provide a hybrid cloud solution that is deployed on-premises to address data sovereignty, regulation and compliance, or latency requirements.

Deploy and manage HA-virtualized or container-based edge workloads that are deployed in a single location or in multiple locations. This strategy enables business-critical applications and services to operate in a resilient, cost-effective, and scalable manner.

Lower the total cost of ownership (TCO) by using Microsoft-certified solutions, cloud-based deployment, centralized management, and monitoring and alerting.

Provide a centralized provisioning capability by using Azure and Azure Arc to deploy workloads across multiple locations consistently and securely. Tools such as the Azure portal, the Azure CLI, or infrastructure as code (IaC) templates use Kubernetes for containerization or traditional workload virtualization to drive automation and repeatability.

Adhere to strict security, compliance, and audit requirements. Azure Local is deployed with a hardened security posture configured by default, also known as secure-by-default. Azure Local incorporates certified hardware, Secure Boot, Trusted Platform Module (TPM), virtualization-based security (VBS), Credential Guard, and enforced Windows Defender Application Control policies. It also integrates with modern cloud-based security and threat-management services such as Microsoft Defender for Cloud and Microsoft Sentinel.

Scenario details

The following sections provide more information about the scenarios and potential use cases for this reference architecture. These sections include a list of business benefits and example workload resource types that you can deploy on Azure Local.

Use Azure Arc with Azure Local

Azure Local integrates directly with Azure by using Azure Arc to lower operational overhead and the TCO. Azure Local is deployed and managed through Azure, which provides built-in Azure Arc integration through deployment of the Azure Arc resource bridge component. This component is installed during the HCI cluster deployment process. Azure Local cluster nodes are enrolled with Azure Arc for servers as a prerequisite for starting the cloud-based deployment of the cluster. During deployment, mandatory extensions are installed on each cluster node, such as Lifecycle Manager, Microsoft Edge Device Management, and Telemetry and Diagnostics. You can use Azure Monitor and Log Analytics to monitor the HCI cluster after deployment by enabling Insights for Azure Local. Feature updates for Azure Local are released periodically to enhance the customer experience. Updates are controlled and managed through Azure Update Manager.

You can deploy workload resources such as Azure Arc virtual machines (VMs), Azure Arc-enabled Azure Kubernetes Service (AKS), and Azure Virtual Desktop session hosts from the Azure portal by selecting an Azure Local instance custom location as the target for the workload deployment. These components provide centralized administration, management, and support. If you have active Software Assurance on your existing Windows Server Datacenter core licenses, you can reduce costs further by applying Azure Hybrid Benefit to Azure Local, Windows Server VMs, and AKS clusters.

Azure and Azure Arc integration extends the capabilities of Azure Local virtualized and containerized workloads to include:

Azure Arc VMs for traditional applications or services that run in VMs on Azure Local.

AKS on Azure Local for containerized applications or services that benefit from using Kubernetes as their orchestration platform.

Azure Virtual Desktop to deploy your session hosts for Azure Virtual Desktop workloads on Azure Local (on-premises). You can use the control and management plane in Azure to initiate host pool creation and configuration.

Azure Arc-enabled data services for containerized Azure SQL Managed Instance or an Azure Database for PostgreSQL server that uses Azure Arc-enabled AKS hosted on Azure Local.

The Azure Arc-enabled Azure Event Grid extension for Kubernetes to deploy the Event Grid broker and the Event Grid operator. This deployment enables capabilities such as Event Grid topics and subscriptions for event processing.

Azure Arc-enabled machine learning with an AKS cluster that is deployed on Azure Local as the compute target to run Azure Machine Learning. You can use this approach to train or deploy machine learning models at the edge.

Azure Arc-connected workloads provide enhanced Azure consistency and automation for Azure Local deployments, such as automating guest OS configuration with Azure Arc VM extensions or evaluating compliance with industry regulations or corporate standards through Azure Policy. You can activate Azure Policy through the Azure portal or IaC automation.

Take advantage of the Azure Local default security configuration

The Azure Local default security configuration provides a defense-in-depth strategy to simplify security and compliance costs. Deploying and managing IT services for retail, manufacturing, and remote office scenarios presents unique security and compliance challenges. Securing workloads against internal and external threats is crucial in environments that have limited IT support or a lack of dedicated datacenters. Azure Local has default security hardening and deep integration with Azure services to help you address these challenges.

Azure Local-certified hardware ensures built-in Secure Boot, Unified Extensible Firmware Interface (UEFI), and TPM support. Use these technologies in combination with VBS to help protect your security-sensitive workloads. You can use BitLocker Drive Encryption to encrypt boot disk volumes and Storage Spaces Direct volumes at rest. Server Message Block (SMB) encryption provides automatic encryption of traffic between servers in the cluster (on the storage network) and signing of SMB traffic between the cluster nodes and other systems. SMB encryption also helps prevent relay attacks and facilitates compliance with regulatory standards.

You can onboard Azure Local VMs in Defender for Cloud to activate cloud-based behavioral analytics, threat detection and remediation, alerting, and reporting. Manage Azure Local VMs in Azure Arc so that you can use Azure Policy to evaluate their compliance with industry regulations and corporate standards.

Components

This architecture consists of physical server hardware that you can use to deploy Azure Local instances in on-premises or edge locations. To enhance platform capabilities, Azure Local integrates with Azure Arc and other Azure services that provide supporting resources. Azure Local provides a resilient platform to deploy, manage, and operate user applications or business systems. Platform resources and services are described in the following sections.

Platform resources

The architecture requires the following mandatory resources and components:

Azure Local is a hyperconverged infrastructure (HCI) solution that is deployed on-premises or in edge locations by using physical server hardware and networking infrastructure. Azure Local provides a platform to deploy and manage virtualized workloads such as VMs, Kubernetes clusters, and other services that are enabled by Azure Arc. Azure Local instances can scale from a single-node deployment to a maximum of sixteen nodes by using validated, integrated, or premium hardware categories that are provided by original equipment manufacturer (OEM) partners.

Azure Arc is a cloud-based service that extends the management model based on Azure Resource Manager to Azure Local and other non-Azure locations. Azure Arc uses Azure as the control and management plane to enable the management of various resources such as VMs, Kubernetes clusters, and containerized data and machine learning services.

Azure Key Vault is a cloud service that you can use to securely store and access secrets. A secret is anything that you want to tightly restrict access to, such as API keys, passwords, certificates, cryptographic keys, local admin credentials, and BitLocker recovery keys.

Cloud witness is a feature of Azure Storage that acts as a failover cluster quorum. Azure Local cluster nodes use this quorum for voting, which ensures high availability for the cluster. The storage account and witness configuration are created during the Azure Local cloud deployment process.

Update Manager is a unified service designed to manage and govern updates for Azure Local. You can use Update Manager to manage workloads that are deployed on Azure Local, including guest OS update compliance for Windows and Linux VMs. This unified approach streamlines patch management across Azure, on-premises environments, and other cloud platforms through a single dashboard.

Platform-supporting resources

The architecture includes the following optional supporting services to enhance the capabilities of the platform:

Monitor is a cloud-based service for collecting, analyzing, and acting on diagnostic logs and telemetry from your cloud and on-premises workloads. You can use Monitor to maximize the availability and performance of your applications and services through a comprehensive monitoring solution. Deploy Insights for Azure Local to simplify the creation of the Monitor data collection rule (DCR) and quickly enable monitoring of Azure Local instances.

Azure Policy is a service that evaluates Azure and on-premises resources. Through integration with Azure Arc, Azure Policy compares the properties of those resources to business rules, called policy definitions, to determine compliance. You can also use it to apply VM guest configuration through policy settings.

Defender for Cloud is a comprehensive infrastructure security management system. It enhances the security posture of your datacenters and delivers advanced threat protection for hybrid workloads, whether they reside in Azure or elsewhere, and across on-premises environments.

Azure Backup is a cloud-based service that provides a simple, secure, and cost-effective solution to back up your data and recover it from the Microsoft Cloud. Azure Backup Server is used to back up VMs that are deployed on Azure Local and store the backups in the Backup service.

Site Recovery is a disaster recovery service that provides BCDR capabilities by enabling business applications and workloads to fail over if there is a disaster or outage. Site Recovery manages the replication and failover of workloads that run on physical servers and VMs between their primary site (on-premises) and a secondary location (Azure).

Cluster design choices

It's important to understand the workload performance and resiliency requirements when you design an Azure Local instance. These requirements include the recovery time objective (RTO) and recovery point objective (RPO), and the compute (CPU), memory, and storage requirements for all workloads that are deployed on the Azure Local instance. Several characteristics of the workload affect the decision-making process, including:

Central processing unit (CPU) architecture capabilities, including hardware security technology features, the number of CPUs, the GHz frequency (speed), and the number of cores per CPU socket.

Graphics processing unit (GPU) requirements of the workload, such as for AI or machine learning, inferencing, or graphics rendering.

Memory per node, or the quantity of physical memory required to run the workload.

Number of physical nodes in the cluster, which scales from 1 to 16 nodes. The maximum number of nodes is three when you use the storage switchless network architecture.

To maintain compute resiliency, you need to reserve at least N+1 nodes' worth of capacity in the cluster. This strategy enables node draining for updates or recovery from sudden outages such as power outages or hardware failures.

For business-critical or mission-critical workloads, consider reserving N+2 nodes' worth of capacity to increase resiliency. For example, if two nodes in the cluster are offline, the workload can remain online. This approach provides resiliency for scenarios in which a node that runs a workload goes offline during a planned update procedure, which results in two nodes being offline simultaneously.

Storage resiliency, capacity, and performance requirements:

Resiliency: We recommend that you deploy three or more nodes to enable three-way mirroring, which provides three copies of the data, for the infrastructure and user volumes. Three-way mirroring provides the highest performance and reliability for storage.

Capacity: The total usable storage that is required after fault tolerance, or copies, is taken into consideration. This number is approximately 33% of the raw storage space of your capacity tier disks when you use three-way mirroring.

Performance: The input/output operations per second (IOPS) of the platform, which determine the storage throughput capabilities for the workload when multiplied by the block size of the application.
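The capacity and performance rules above come down to simple arithmetic, sketched here in Python. The `StoragePlan` class, its field names, and the sample drive counts are hypothetical illustrations rather than part of any Azure Local tooling, and the calculation deliberately ignores reserve capacity and file system overhead.

```python
from dataclasses import dataclass

MIRROR_COPIES = 3  # three-way mirroring keeps three copies of the data


@dataclass(frozen=True)
class StoragePlan:
    """Hypothetical container for capacity-tier sizing inputs."""
    nodes: int
    capacity_drives_per_node: int
    drive_size_tb: float

    def raw_tb(self) -> float:
        # Raw capacity is the sum of all capacity-tier drives in the cluster.
        return self.nodes * self.capacity_drives_per_node * self.drive_size_tb

    def usable_tb(self) -> float:
        # Every block is stored three times, so roughly one third is usable.
        if self.nodes < 3:
            raise ValueError("three-way mirroring needs three or more nodes")
        return self.raw_tb() / MIRROR_COPIES


def throughput_mib_per_s(iops: int, block_size_kib: int) -> float:
    """Throughput = IOPS x block size, reported in MiB/s."""
    return iops * block_size_kib / 1024


plan = StoragePlan(nodes=4, capacity_drives_per_node=6, drive_size_tb=3.84)
print(f"raw: {plan.raw_tb():.2f} TB, usable: {plan.usable_tb():.2f} TB")
print(f"throughput: {throughput_mib_per_s(100_000, 8):.2f} MiB/s")
```

In this example, four nodes with six 3.84-TB capacity drives each yield about one third of the raw space as usable capacity, and 100,000 IOPS at an 8-KiB block size corresponds to roughly 781 MiB/s.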

To design and plan an Azure Local deployment, we recommend that you use the Azure Local sizing tool and create a New Project for sizing your HCI clusters. Using the sizing tool requires that you understand your workload requirements. When you consider the number and size of workload VMs that run on your cluster, take into account factors such as the number of vCPUs, the memory requirements, and the storage capacity that the VMs need.

The sizing tool Preferences section guides you through questions about the system type (Premier, Integrated System, or Validated Node) and CPU family options. It also helps you select your resiliency requirements for the cluster. Make sure to:

Reserve a minimum of N+1 nodes' worth of capacity, or one node, across the cluster.

Reserve N+2 nodes' worth of capacity across the cluster for extra resiliency. This option enables the system to withstand a node failure during an update or another unexpected event that affects two nodes simultaneously. It also ensures that there is enough capacity in the cluster for the workload to run on the remaining online nodes.
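As a rough illustration of the N+1 and N+2 reservations, the following Python sketch computes how many nodes a cluster needs once reserve capacity is added. It is not a substitute for the sizing tool: the `nodes_required` function, its parameters, and the sample workload figures are assumptions for illustration, and the calculation ignores host overhead and vCPU overcommit ratios.

```python
import math

MAX_NODES = 16  # upper bound stated for this architecture


def nodes_required(workload_vcpus: int, workload_memory_gb: float,
                   vcpus_per_node: int, memory_gb_per_node: float,
                   reserve_nodes: int = 1) -> int:
    """Return N + reserve_nodes, where N nodes are enough to run the workload."""
    if reserve_nodes < 0:
        raise ValueError("reserve_nodes must be zero or greater")
    # N is driven by whichever resource runs out first.
    by_cpu = math.ceil(workload_vcpus / vcpus_per_node)
    by_memory = math.ceil(workload_memory_gb / memory_gb_per_node)
    total = max(by_cpu, by_memory, 1) + reserve_nodes
    if total > MAX_NODES:
        raise ValueError(f"{total} nodes exceeds the {MAX_NODES}-node maximum")
    return total


# Hypothetical workload: 300 vCPUs and 2,000 GB of memory.
n_plus_1 = nodes_required(300, 2000, vcpus_per_node=128, memory_gb_per_node=768)
n_plus_2 = nodes_required(300, 2000, vcpus_per_node=128, memory_gb_per_node=768,
                          reserve_nodes=2)
print(n_plus_1, n_plus_2)
```

With these sample inputs the workload itself needs three nodes, so an N+1 design calls for four nodes and an N+2 design for five.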

This scenario requires the use of three-way mirroring for user volumes, which is the default for clusters that have three or more physical nodes.

The output from the Azure Local sizing tool is a list of recommended hardware solution SKUs that can provide the required workload capacity and platform resiliency based on the input values in the Sizer Project. For more information about available OEM hardware partner solutions, see Azure Local Solutions Catalog. For help rightsizing solution SKUs to meet your requirements, contact your preferred hardware solution provider or system integration (SI) partner.

Physical disk drives

Storage Spaces Direct supports multiple physical disk drive types that vary in performance and capacity. When you design an Azure Local instance, work with your chosen hardware OEM partner to determine the most appropriate physical disk drive types to meet the capacity and performance requirements of your workload. Examples include spinning hard disk drives (HDDs), solid-state drives (SSDs) and NVMe drives, which are often called flash drives, and persistent memory (PMem) storage, which is also known as storage-class memory (SCM).

The reliability of the platform depends on the performance of critical platform dependencies, such as physical disk types. Make sure to choose the right disk types for your requirements. Use all-flash storage solutions such as NVMe or SSD drives for workloads that have high-performance or low-latency requirements. These workloads include, but aren't limited to, highly transactional database technologies, production AKS clusters, or any mission-critical or business-critical workloads that have low-latency or high-throughput storage requirements. Use all-flash deployments to maximize storage performance. All-NVMe or all-SSD drive configurations, especially at a small scale, improve storage efficiency and maximize performance because no drives are used as a cache tier. For more information, see All-flash based storage.

For general purpose workloads, a hybrid storage configuration, such as NVMe drives or SSDs for cache and HDDs for capacity, might provide more storage space. The tradeoff is that spinning disks have lower performance if your workload exceeds the cache working set, and HDDs have a lower mean time between failures than NVMe and SSD drives.

The performance of your cluster storage is influenced by the physical disk drive type, which varies based on the performance characteristics of each drive type and the caching mechanism that you choose. The physical disk drive type is an integral part of any Storage Spaces Direct design and configuration. Depending on the Azure Local workload requirements and budget constraints, you can choose to maximize performance, maximize capacity, or implement a mixed-drive type configuration that balances performance and capacity.

Storage Spaces Direct provides a built-in, persistent, real-time, read and write, server-side cache that maximizes storage performance. The cache should be sized and configured to accommodate the working set of your applications and workloads. Storage Spaces Direct virtual disks, or volumes, are used in combination with the cluster shared volume (CSV) in-memory read cache to improve Hyper-V performance, especially for unbuffered input access to workload virtual hard disk (VHD) or virtual hard disk v2 (VHDX) files.

Tip

For high-performance or latency-sensitive workloads, we recommend that you use an all-flash storage configuration (all NVMe or all SSD) and a cluster size of three or more physical nodes. Deploying this design with the default storage configuration settings

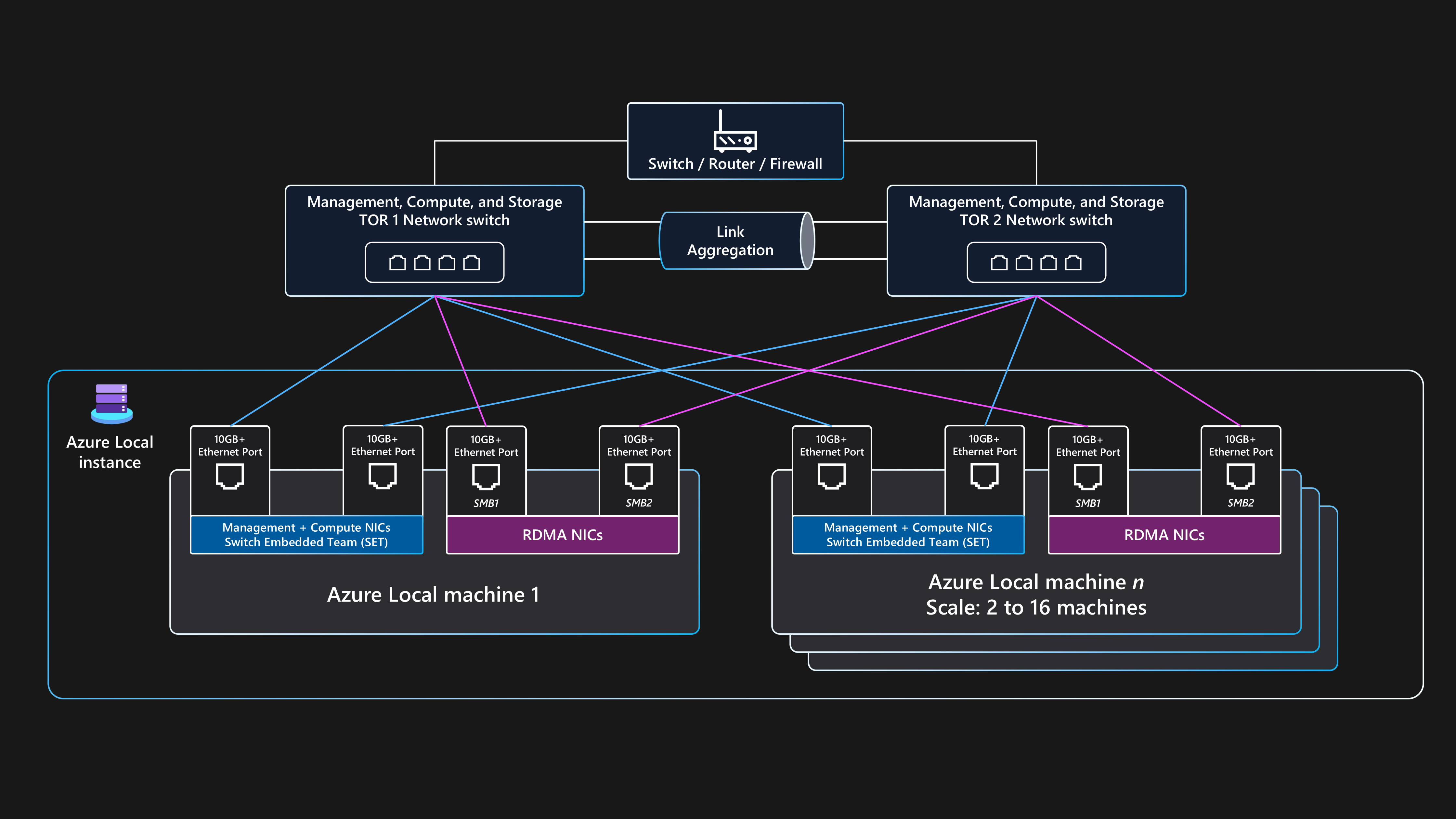

Network design

Network design is the overall arrangement of components within the network's physical infrastructure and logical configurations. You can use the same physical network interface card (NIC) ports for all combinations of management, compute, and storage network intents. Using the same NIC ports for all intent-related purposes is called a fully converged networking configuration.

Although a fully converged networking configuration is supported, the optimal configuration for performance and reliability is for the storage intent to use dedicated network adapter ports. Therefore, this baseline architecture provides example guidance for deploying a multinode Azure Local instance by using the storage switched network architecture, with two network adapter ports that are converged for the management and compute intents and two dedicated network adapter ports for the storage intent. For more information, see Network considerations for cloud deployments of Azure Local.

This architecture requires two or more physical nodes and scales up to a maximum of 16 nodes. Each node requires four network adapter ports that are connected to two Top-of-Rack (ToR) switches. The two ToR switches should be interconnected through multi-chassis link aggregation group (MLAG) links. The two network adapter ports that are used for storage intent traffic must support Remote Direct Memory Access (RDMA). These ports require a minimum link speed of 10 Gbps, but we recommend a speed of 25 Gbps or higher. The two network adapter ports that are used for the management and compute intents are converged by using switch embedded teaming (SET) technology. SET technology provides link redundancy and load-balancing capabilities. These ports require a minimum link speed of 1 Gbps, but we recommend a speed of 10 Gbps or higher.
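The per-node port requirements in this paragraph can be expressed as a small validation routine. The following Python sketch is illustrative only: the `NicPort` class, the intent labels, and the port names are hypothetical and don't correspond to any Azure Local or Network ATC API.

```python
from dataclasses import dataclass

# Link speeds taken from the requirements above, in Gbps.
MIN_GBPS = {"storage": 10, "management_compute": 1}
RECOMMENDED_GBPS = {"storage": 25, "management_compute": 10}


@dataclass(frozen=True)
class NicPort:
    name: str
    intent: str          # "storage" or "management_compute"
    speed_gbps: float
    rdma: bool = False


def check_node_ports(ports):
    """Return (errors, warnings) for one node's network adapter ports."""
    errors, warnings = [], []
    for intent, minimum in MIN_GBPS.items():
        group = [p for p in ports if p.intent == intent]
        # This baseline uses exactly two ports per intent group.
        if len(group) != 2:
            errors.append(f"{intent}: expected 2 ports, found {len(group)}")
        for port in group:
            if port.speed_gbps < minimum:
                errors.append(f"{port.name}: below the {minimum} Gbps minimum")
            elif port.speed_gbps < RECOMMENDED_GBPS[intent]:
                warnings.append(
                    f"{port.name}: below the recommended {RECOMMENDED_GBPS[intent]} Gbps")
            if intent == "storage" and not port.rdma:
                errors.append(f"{port.name}: storage ports must support RDMA")
    return errors, warnings


node_ports = [
    NicPort("pNIC1", "management_compute", 10),
    NicPort("pNIC2", "management_compute", 10),
    NicPort("pNIC3", "storage", 25, rdma=True),
    NicPort("pNIC4", "storage", 10, rdma=False),
]
errors, warnings = check_node_ports(node_ports)
print(errors)
print(warnings)
```

Here the fourth port meets the 10-Gbps minimum but is flagged twice: as an error because it lacks RDMA support, and as a warning because it falls short of the recommended 25 Gbps.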

Physical network topology

The following physical network topology shows the actual physical connections between nodes and networking components.

You need the following components when you design a multinode storage switched Azure Local deployment that uses this baseline architecture:

Dual ToR switches:

Dual ToR network switches are required for network resiliency and for the ability to service the switches or apply firmware updates to them without incurring downtime. This strategy prevents a single point of failure (SPoF).

The dual ToR switches are used for the storage, or east-west, traffic. These switches use two dedicated Ethernet ports that have specific storage virtual local area networks (VLANs) and priority flow control (PFC) traffic classes defined to provide lossless RDMA communication.

These switches connect to the nodes through Ethernet cables.

Two or more physical nodes, up to a maximum of 16 nodes:

Each node is a physical server that runs the Azure Stack HCI OS.

Each node requires four network adapter ports in total: two RDMA-capable ports for storage and two network adapter ports for management and compute traffic.

Storage uses the two dedicated RDMA-capable network adapter ports, which connect with one path to each of the two ToR switches. This approach provides link-path redundancy and dedicated prioritized bandwidth for SMB Direct storage traffic.

Management and compute use two network adapter ports that provide one path to each of the two ToR switches for link-path redundancy.

External connectivity:

The dual ToR switches connect to the external network, such as your internal corporate LAN, to provide access to the required outbound URLs by using your edge border network device. This device can be a firewall or a router. These switches route the traffic that goes in and out of the Azure Local instance, or north-south traffic.

External north-south traffic connectivity supports the cluster management intent and the compute intents. This connectivity uses two switch ports and two network adapter ports per node that are converged through switch embedded teaming (SET) and a virtual switch within Hyper-V to ensure resiliency. These components work together to provide external connectivity for Azure Arc VMs and other workload resources that are deployed within the logical networks created in Resource Manager by using the Azure portal, the CLI, or IaC templates.
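The link-path redundancy described in this list (one path from each intent's port pair to each of the two ToR switches) can be checked from a cabling inventory. The following Python sketch assumes a hypothetical list of `(node, intent, switch)` records and hypothetical switch names; it only inspects the node and intent pairs that appear in the list.

```python
from collections import defaultdict


def find_redundancy_gaps(cabling, switches=("ToR1", "ToR2")):
    """Map each (node, intent) pair to the ToR switches it does not reach."""
    paths = defaultdict(set)
    for node, intent, switch in cabling:
        paths[(node, intent)].add(switch)
    gaps = {}
    for key, reached in paths.items():
        missing = set(switches) - reached
        if missing:
            gaps[key] = sorted(missing)
    return gaps


# Hypothetical cabling records: one entry per network adapter port.
cabling = [
    ("node1", "storage", "ToR1"),
    ("node1", "storage", "ToR2"),
    ("node1", "management_compute", "ToR1"),
    ("node1", "management_compute", "ToR2"),
    ("node2", "storage", "ToR1"),
    ("node2", "storage", "ToR1"),   # both storage ports cabled to the same switch
    ("node2", "management_compute", "ToR1"),
    ("node2", "management_compute", "ToR2"),
]
gaps = find_redundancy_gaps(cabling)
print(gaps)
```

In this sample, the storage ports of the second node both land on the same switch, so the check reports that the node has no storage path to the other ToR switch.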

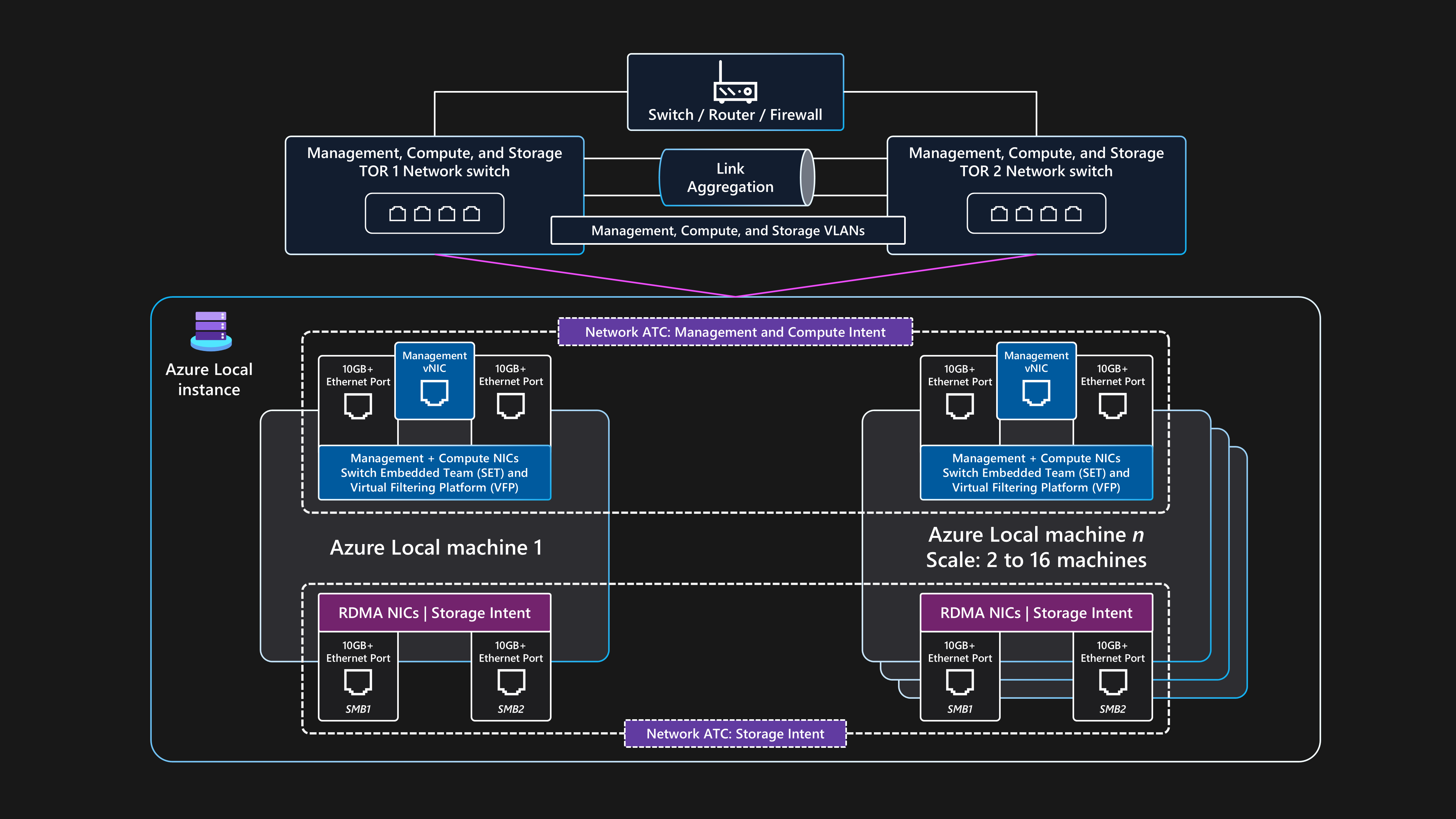

Logical network topology

The logical network topology shows an overview of how network data flows between devices, regardless of their physical connections.

The following list summarizes the logical setup for this multinode storage switched baseline architecture for Azure Local:

Dual ToR switches:

Before you deploy the cluster, the two ToR network switches must be configured with the required VLAN IDs, maximum transmission unit settings, and datacenter bridging configuration for the management, compute, and storage ports. For more information, see Physical network requirements for Azure Local, or ask your switch hardware vendor or SI partner for assistance.

Azure Local uses the Network ATC approach to apply network automation and intent-based network configuration.

Network ATC is designed to ensure optimal networking configuration and traffic flow by using network traffic intents. Network ATC defines which physical network adapter ports are used for the different network traffic intents (or types), such as the cluster management, workload compute, and cluster storage intents.

Intent-based policies simplify the network configuration requirements by automating the node network configuration based on parameter inputs that are specified as part of the Azure Local cloud deployment process.

External communication:

When the nodes or the workload need to communicate externally by accessing the corporate LAN, the internet, or another service, they route through the dual ToR switches. This process is outlined in the previous physical network topology section.

When the two ToR switches act as Layer 3 devices, they handle routing and provide connectivity beyond the cluster to the edge border device, such as your firewall or router.

The management network intent uses the converged SET team virtual interface, which enables the cluster management IP address and control plane resources to communicate externally.

For the compute network intent, you can create one or more logical networks in Azure with the specific VLAN IDs for your environment. Workload resources, such as VMs, use these IDs to gain access to the physical network. The logical networks use the two physical network adapter ports that are converged by using a SET team for the compute and management intents.

Storage traffic:

The physical nodes communicate with each other by using two dedicated network adapter ports that are connected to the ToR switches to provide high bandwidth and resiliency for storage traffic.

The SMB1 and SMB2 storage ports connect to two separate nonroutable (or Layer 2) networks. Each network has a specific VLAN ID configured that must match the switch port configuration. The default storage VLAN IDs are 711 and 712.

There is no default gateway configured on the two storage intent network adapter ports within the Azure Stack HCI OS.

Each node can access the Storage Spaces Direct capabilities of the cluster, such as remote physical disks that are used in the storage pool, virtual disks, and volumes. Access to these capabilities is facilitated through the SMB Direct RDMA protocol over the two dedicated storage network adapter ports that are available in each node. SMB Multichannel is used for resiliency.

This configuration provides sufficient data transfer speed for storage-related operations, such as maintaining consistent copies of data for mirrored volumes.
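
The intent layout and the storage VLAN requirement described in this section can be sketched as a small data model. This is an illustration only and not the Network ATC API: the `Intent` class, the adapter names (`pNIC1` to `pNIC4`), the switch VLAN set, and the `check_storage_vlans` helper are all hypothetical. The sketch assumes that management and compute share two converged ports, that storage uses two dedicated ports, and that the default storage VLAN IDs 711 and 712 must also be allowed on the matching switch ports.

```python
from dataclasses import dataclass

DEFAULT_STORAGE_VLANS = (711, 712)  # default storage VLAN IDs named in the text


@dataclass
class Intent:
    """One network traffic intent and the adapter ports it uses (hypothetical model)."""
    name: str
    traffic_types: tuple
    adapters: tuple
    vlans: tuple = ()


# Assumed adapter names; real names depend on the node hardware.
intents = [
    Intent("mgmt_compute", ("management", "compute"), ("pNIC1", "pNIC2")),
    Intent("storage", ("storage",), ("pNIC3", "pNIC4"), DEFAULT_STORAGE_VLANS),
]


def check_storage_vlans(intent, switch_allowed_vlans):
    """Return the intent's VLAN IDs that are missing from the switch port configuration."""
    return [vlan for vlan in intent.vlans if vlan not in switch_allowed_vlans]


# Hypothetical set of VLAN IDs allowed on the ToR switch ports that face the storage adapters.
missing = check_storage_vlans(intents[1], switch_allowed_vlans={7, 201, 711})
print(missing)  # [712]
```

A check like this is only a planning aid. The switch configuration itself still has to be applied and verified on the ToR switches before deployment.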

Network switch requirements

Your Ethernet switches must meet the different specifications required by Azure Local and set by the Institute of Electrical and Electronics Engineers Standards Association (IEEE SA). For example, for multinode storage switched deployments, the storage network is used for RDMA via RoCE v2 or iWARP. This process requires IEEE 802.1Qbb PFC to ensure lossless communication for the storage traffic class. Your ToR switches must support IEEE 802.1Q for VLANs and IEEE 802.1AB for the Link Layer Discovery Protocol (LLDP).

If you plan to use existing network switches for an Azure Local deployment, review the list of mandatory IEEE standards and specifications that the network switches and configuration must provide. When you purchase new network switches, review the list of hardware vendor-certified switch models that support Azure Local network requirements.

IP address requirements

In a multinode storage switched deployment, the number of IP addresses needed increases with the addition of each physical node, up to a maximum of 16 nodes within a single cluster. For example, to deploy a two-node storage switched configuration of Azure Local, the cluster infrastructure requires a minimum of 11 IP addresses to be allocated. More IP addresses are required if you use microsegmentation or software-defined networking. For more information, see Review two-node storage reference pattern IP address requirements for Azure Local.

When you design and plan IP address requirements for Azure Local, remember to account for the additional IP addresses or network ranges that your workload needs beyond the ones required for the Azure Local instance and infrastructure components. If you plan to deploy AKS on Azure Local, see AKS enabled by Azure Arc network requirements.

Monitoring

To enhance monitoring and alerting, enable Monitor Insights on Azure Local. Insights can scale to monitor and manage multiple on-premises clusters by using an Azure-consistent experience. Insights uses cluster performance counters and event log channels to monitor key Azure Local features. Logs are collected by the data collection rule (DCR) that is configured through Monitor and Log Analytics.

Insights for Azure Local is built by using Monitor and Log Analytics, which ensures an always up-to-date, scalable solution that's highly customizable. Insights provides access to default workbooks with basic metrics, along with specialized workbooks created for monitoring key features of Azure Local. These components provide a near real-time monitoring solution and enable the creation of graphs, the customization of visualizations through aggregation and filtering, and the configuration of custom resource health alert rules.

Update management

Azure Local instances and the deployed workload resources, such as Azure Arc VMs, need to be updated and patched regularly. By regularly applying updates, you ensure that your organization maintains a strong security posture, and you improve the overall reliability and supportability of your estate. We recommend that you use automatic and periodic manual assessments for early discovery and application of security patches and OS updates.

Infrastructure updates

Azure Local is continuously updated to improve the customer experience and add new features and functionality. This process is managed through release trains, which deliver new baseline builds quarterly. Baseline builds are applied to Azure Local instances to keep them up to date. In addition to regular baseline build updates, Azure Local is updated with monthly OS security and reliability updates.

Update Manager is an Azure service that you can use to apply, view, and manage updates for Azure Local. This service provides a mechanism to view all Azure Local instances across your entire infrastructure and edge locations by using the Azure portal to provide a centralized management experience. For more information, see the following resources:

It's important to check for new driver and firmware updates regularly, such as every three to six months. If you use a Premier solution category version for your Azure Local hardware, the Solution Builder Extension package updates are integrated with Update Manager to provide a simplified update experience. If you use validated nodes or an integrated system category, you might need to download and run an OEM-specific update package that contains the firmware and driver updates for your hardware. To determine how updates are supplied for your hardware, contact your hardware OEM or SI partner.

Workload guest OS patching

You can enroll Azure Arc VMs that are deployed on Azure Local by using Azure Update Manager (AUM) to provide a unified patch management experience that uses the same mechanism used to update the Azure Local cluster physical nodes. You can use AUM to create guest maintenance configurations. These configurations control settings such as the reboot setting (reboot if necessary), the schedule (dates, times, and repeat options), and either a dynamic (subscription) or static list of the Azure Arc VMs for the scope. These settings control when OS security patches are installed inside the guest OS of your workload VM.

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that can be used to improve the quality of a workload. For more information, see Microsoft Azure Well-Architected Framework.

Reliability

Reliability ensures that your application can meet the commitments that you make to your customers. For more information, see Design review checklist for Reliability.

Identify the potential failure points

Every architecture is susceptible to failures. You can anticipate failures and be prepared with mitigations by using failure mode analysis. The following table describes four examples of potential failure points in this architecture:

| Component | Risk | Likelihood | Effect/mitigation/note | Outage |
|---|---|---|---|---|
| Azure Local instance outage | Power, network, hardware, or software failure | Medium | To prevent a prolonged application outage caused by the failure of an Azure Local instance for business or mission-critical use cases, your workload should be architected by using high availability and disaster recovery principles. For example, you can use industry-standard workload data replication technologies to maintain multiple copies of persistent state data that are deployed by using multiple Azure Arc VMs or AKS instances that are deployed on separate Azure Local instances and in separate physical locations. | Potential outage |
| Azure Local single physical node outage | Power, hardware, or software failure | Medium | To prevent a prolonged application outage caused by the failure of a single Azure Local machine, your Azure Local instance should have multiple physical nodes. Your workload capacity requirements during the cluster design phase determine the number of nodes. We recommend that you have three or more nodes. We also recommend that you use three-way mirroring, which is the default storage resiliency mode for clusters with three or more nodes. To prevent a single point of failure (SPoF) and increase workload resiliency, deploy multiple instances of your workload by using two or more Azure Arc VMs or container pods that run in multiple AKS worker nodes. If a single node fails, the Azure Arc VMs and workload or application services are restarted on the remaining online physical nodes in the cluster. | Potential outage |
| Azure Arc VM or AKS worker node (workload) | Misconfiguration | Medium | Application users are unable to sign in or access the application. Misconfigurations should be caught during deployment. If these errors happen during a configuration update, the DevOps team must roll back the changes. You can redeploy the VM if necessary. Redeployment takes less than 10 minutes but can take longer depending on the type of deployment. | Potential outage |
| Connectivity to Azure | Network outage | Medium | The cluster needs to reach the Azure control plane regularly for billing, management, and monitoring capabilities. If your cluster loses connectivity to Azure, it operates in a degraded state. For example, it isn't possible to deploy new Azure Arc VMs or AKS clusters if your cluster loses connectivity to Azure. Existing workloads that are running on the HCI cluster continue to run, but you should restore the connection within 48 to 72 hours to ensure uninterrupted operation. | None |

For more information, see Recommendations for performing failure mode analysis.

Reliability targets

This section describes an example scenario. A fictitious customer called Contoso Manufacturing uses this reference architecture to deploy Azure Local. They want to address their requirements and deploy and manage workloads on-premises. Contoso Manufacturing has an internal service-level objective (SLO) target of 99.8% that business and application stakeholders agree on for their services.

An SLO of 99.8% uptime, or availability, results in the following periods of allowed downtime, or unavailability, for the applications that are deployed by using Azure Arc VMs that run on Azure Local:

Weekly: 20 minutes and 10 seconds

Monthly: 1 hour, 26 minutes, and 56 seconds

Quarterly: 4 hours, 20 minutes, and 49 seconds

Yearly: 17 hours, 23 minutes, and 16 seconds
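
The weekly figure can be reproduced with a short calculation: allowed downtime is the unavailable fraction (100% minus the SLO) multiplied by the length of the period. The following is a minimal sketch. The function name `allowed_downtime` is illustrative and not part of any Azure tooling.

```python
def allowed_downtime(slo_percent, period_seconds):
    """Return the allowed downtime for a period as (hours, minutes, seconds)."""
    downtime = round((100 - slo_percent) / 100 * period_seconds)
    hours, remainder = divmod(downtime, 3600)
    minutes, seconds = divmod(remainder, 60)
    return hours, minutes, seconds


WEEK_SECONDS = 7 * 24 * 60 * 60
print(allowed_downtime(99.8, WEEK_SECONDS))  # (0, 20, 10)
```

The monthly, quarterly, and yearly values depend on how many days are assumed for each period, so a calculation can differ slightly from the values listed here.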

To help meet the SLO targets, Contoso Manufacturing implements the principle of least privilege (PoLP) to restrict the number of Azure Local instance administrators to a small group of trusted and qualified individuals. This approach helps prevent downtime caused by inadvertent or accidental actions performed on production resources. Furthermore, the security event logs for on-premises Active Directory Domain Services (AD DS) domain controllers are monitored to detect and report any user account group membership changes, known as add and remove actions, for the Azure Local instance administrators group by using a security information event management (SIEM) solution. Monitoring increases reliability and improves the security of the solution.

For more information, see Recommendations for identity and access management.

Strict change control procedures are in place for Contoso Manufacturing's production systems. This process requires that all changes are tested and validated in a representative test environment before implementation in production. To be reviewed or approved, every change submitted to the weekly change advisory board process must include a detailed implementation plan (or a link to source code), a risk level score, a comprehensive rollback plan, post-release testing and verification, and clear success criteria.

For more information, see Recommendations for safe deployment practices.

Monthly security patches and quarterly baseline updates are applied to the production Azure Local instance only after they're validated in the preproduction environment. Update Manager and the Cluster-Aware Updating feature automate the process of using VM live migration to minimize downtime for business-critical workloads during the monthly servicing operations. Contoso Manufacturing standard operating procedures require that security, reliability, or baseline build updates are applied to all production systems within four weeks of their release date. Without this policy, production systems can't stay current with monthly OS and security updates. Out-of-date systems negatively affect platform reliability and security.

For more information, see Recommendations for establishing a security baseline.

Contoso Manufacturing implements daily, weekly, and monthly backups to retain the last six daily backups (Mondays to Saturdays), the last three weekly backups (each Sunday), and three monthly backups. Each week-4 Sunday backup is retained to become the month 1, month 2, and month 3 backups by using a rolling calendar-based schedule that's documented and auditable. This approach meets Contoso Manufacturing requirements for an adequate balance between the number of data recovery points available and reducing costs for the offsite or cloud backup storage service.
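
The retention schedule can be sketched as a classification of each backup by the day it was taken, followed by keeping the newest few in each tier. This is an illustration only and not a backup product's API. It assumes that "week 4" means the fourth Sunday of the calendar month, and the function names and sample dates are hypothetical.

```python
from datetime import date, timedelta

KEEP = {"daily": 6, "weekly": 3, "monthly": 3}  # retention counts from the text


def backup_tier(day):
    """Classify a backup by the day it was taken."""
    if day.weekday() != 6:  # Monday is 0, Sunday is 6
        return "daily"
    # Assumption: "week 4" means the fourth Sunday of the month (always day 22 to 28).
    return "monthly" if 22 <= day.day <= 28 else "weekly"


def retained(backup_days):
    """Return {day: tier} for the backups that the policy keeps."""
    kept = {}
    counts = {tier: 0 for tier in KEEP}
    for day in sorted(backup_days, reverse=True):  # newest first
        tier = backup_tier(day)
        if counts[tier] < KEEP[tier]:
            counts[tier] += 1
            kept[day] = tier
    return kept


today = date(2024, 6, 30)  # sample date, a Sunday
history = [today - timedelta(days=n) for n in range(120)]  # one backup per day
kept = retained(history)
print(len(kept))  # 12
```

With one backup per day, the policy keeps 12 recovery points at any time: six daily, three weekly, and three monthly.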

For more information, see Recommendations for designing a disaster recovery strategy.

Data backup and recovery processes are tested for each business system every six months. This strategy provides assurance that BCDR processes are valid and that the business is protected if a datacenter disaster or cyber incident occurs.

For more information, see Recommendations for designing a reliability testing strategy.

The operational processes and procedures described previously in the article, and the recommendations in the Well-Architected Framework service guide for Azure Local, enable Contoso Manufacturing to meet their 99.8% SLO target and to effectively scale and manage Azure Local and workload deployments across multiple manufacturing sites that are distributed around the world.

For more information, see Recommendations for defining reliability targets.

Redundancy

Consider a workload that you deploy on a single Azure Local instance as a locally redundant deployment. The cluster provides high availability at the platform level, but you must deploy the cluster in a single rack. For business-critical or mission-critical use cases, we recommend that you deploy multiple instances of a workload or service across two or more separate Azure Local instances, ideally in separate physical locations.

Use industry-standard high-availability patterns for workloads that provide active/passive replication, synchronous replication, or asynchronous replication, such as SQL Server Always On. You can also use an external network load balancing (NLB) technology that routes user requests across the multiple workload instances that run on Azure Local instances that you deploy in separate physical locations. Consider using a partner external NLB device. Or you can evaluate the load balancing options that support traffic routing for hybrid and on-premises services, such as an Azure Application Gateway instance that uses Azure ExpressRoute or a VPN tunnel to connect to an on-premises service.

For more information, see Recommendations for designing for redundancy.

Security

Security provides assurances against deliberate attacks and the abuse of your valuable data and systems. For more information, see Design review checklist for Security.

Security considerations include:

A secure foundation for the Azure Local platform: Azure Local is a secure-by-default product that uses validated hardware components with a TPM, UEFI, and Secure Boot to build a secure foundation for the Azure Local platform and workload security. When deployed with the default security settings, Azure Local has Windows Defender Application Control, Credential Guard, and BitLocker enabled. To simplify delegating permissions by using the PoLP, use Azure Local built-in role-based access control (RBAC) roles such as Azure Local Administrator for platform administrators and Azure Local VM Contributor or Azure Local VM Reader for workload operators.

Default security settings: Azure Local security default applies default security settings for your Azure Local instance during deployment and enables drift control to keep the nodes in a known good state. You can use the security default settings to manage cluster security, drift control, and Secured-core server settings on your cluster.

Security event logs: Azure Local syslog forwarding integrates with security monitoring solutions by retrieving relevant security event logs to aggregate and store events for retention in your own SIEM platform.

Protection from threats and vulnerabilities: Defender for Cloud protects your Azure Local instance from various threats and vulnerabilities. This service helps improve the security posture of your Azure Local environment and can protect against existing and evolving threats.

Threat detection and remediation: Microsoft Advanced Threat Analytics detects and remediates threats, such as those targeting AD DS, that provide authentication services to Azure Local instance nodes and their Windows Server VM workloads.

Network isolation: Isolate networks if needed. For example, you can provision multiple logical networks that use separate VLANs and network address ranges. When you use this approach, ensure that the management network can reach each logical network and VLAN so that Azure Local instance nodes can communicate with the VLAN networks through the ToR switches or gateways. This configuration is required for management of the workload, such as allowing infrastructure management agents to communicate with the workload guest OS.

For more information, see Recommendations for building a segmentation strategy.

Cost Optimization

Cost Optimization is about looking at ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Design review checklist for Cost Optimization.

Cost optimization considerations include:

Cloud-style billing model for licensing: Azure Local pricing follows the monthly subscription billing model with a flat rate per physical processor core in an Azure Local instance. Extra usage charges apply if you use other Azure services. If you own on-premises core licenses for Windows Server Datacenter edition with active Software Assurance, you might choose to exchange these licenses to activate Azure Local instance and Windows Server VM subscription fees.

Automatic VM guest patching for Azure Arc VMs: This feature helps reduce the overhead of manual patching and the associated maintenance costs. Not only does this action help make the system more secure, but it also optimizes resource allocation and contributes to overall cost efficiency.

Cost consolidation: To consolidate monitoring costs, use Insights for Azure Local and patch by using Update Manager for Azure Local. Insights uses Monitor to provide rich metrics and alerting capabilities. The lifecycle manager component of Azure Local integrates with Update Manager to simplify the task of keeping your clusters up to date by consolidating update workflows for various components into a single experience. Use Monitor and Update Manager to optimize resource allocation and contribute to overall cost efficiency.

For more information, see Recommendations for optimizing personnel time.

Initial workload capacity and growth: When you plan your Azure Local deployment, consider your initial workload capacity, resiliency requirements, and future growth considerations. Consider whether a two-node or three-node storage switchless architecture could reduce costs, such as by removing the need to procure storage-class network switches. Procuring extra storage-class network switches can be an expensive component of new Azure Local instance deployments. Instead, you can use existing switches for management and compute networks, which simplifies the infrastructure. If your workload capacity and resiliency needs don't scale beyond a three-node configuration, consider whether you can use existing switches for the management and compute networks, and use the three-node storage switchless architecture to deploy Azure Local.

For more information, see Recommendations for optimizing component costs.

Tip

You can save on costs with Azure Hybrid Benefit if you have Windows Server Datacenter licenses with active Software Assurance. For more information, see Azure Hybrid Benefit for Azure Local.

Operational Excellence

Operational Excellence covers the operations processes that deploy an application and keep it running in production. For more information, see Design review checklist for Operational Excellence.

Operational excellence considerations include:

Simplified provisioning and management experience integrated with Azure: The cloud-based deployment in Azure provides a wizard-driven interface that shows you how to create an Azure Local instance. Similarly, Azure simplifies the process of managing Azure Local instances and Azure Arc VMs. You can automate the portal-based deployment of the Azure Local instance by using the ARM template. This template provides consistency and automation to deploy Azure Local at scale, specifically in edge scenarios such as retail stores or manufacturing sites that require an Azure Local instance to run business-critical workloads.

Automation capabilities for virtual machines: Azure Local provides a wide range of automation capabilities for managing workloads, such as Azure Arc VMs. These capabilities include the automated deployment of Azure Arc VMs by using the Azure CLI, ARM, or Bicep, VM OS updates by using the Azure Arc extension for updates, and Azure Update Manager to update each Azure Local instance. Azure Local also supports Azure Arc VM management by using the Azure CLI and management of non-Azure Arc VMs by using Windows PowerShell. You can run Azure CLI commands locally from one of the Azure Local machines or remotely from a management computer. Integration with Azure Automation and Azure Arc facilitates a wide range of extra automation scenarios for VM workloads through Azure Arc extensions.

For more information, see Recommendations for using IaC.

Automation capabilities for containers on AKS: Azure Local provides a wide range of automation capabilities for managing workloads, such as containers, on AKS. You can automate the deployment of AKS clusters by using the Azure CLI. Update AKS workload clusters by using the Azure Arc extension for Kubernetes updates. You can also manage Azure Arc-enabled AKS by using the Azure CLI. You can run Azure CLI commands locally from one of the Azure Local machines or remotely from a management computer. Integrate with Azure Arc for a wide range of extra automation scenarios for containerized workloads through Azure Arc extensions.

For more information, see Recommendations for enabling automation.

Performance Efficiency

Performance Efficiency is the ability of your workload to meet the demands placed on it by users in an efficient manner. For more information, see Design review checklist for Performance Efficiency.

Performance efficiency considerations include:

Workload storage performance: Consider using the DiskSpd tool to test the workload storage performance capabilities of an Azure Local instance. You can use the VMFleet tool to generate load and measure the performance of a storage subsystem. Evaluate whether you should use VMFleet to measure storage subsystem performance.

We recommend that you establish a baseline for your Azure Local instance performance before you deploy production workloads. DiskSpd uses various command-line parameters that enable administrators to test the storage performance of the cluster. The main function of DiskSpd is to issue read and write operations and output performance metrics, such as latency, throughput, and IOPS.
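
As an illustration of the kind of command-line parameters involved, the following sketch assembles a DiskSpd command for a random-I/O baseline test. It only builds the argument list and doesn't run the tool. The target path, the default values, and the helper name `diskspd_command` are assumptions chosen for the example, not recommended settings. Choose values that reflect your workload's I/O profile.

```python
def diskspd_command(target, block_size="4K", duration_s=60, threads=4,
                    outstanding_io=32, write_percent=30, file_size="10G"):
    """Build a DiskSpd argument list for a random-I/O baseline test."""
    return [
        "diskspd.exe",
        f"-c{file_size}",       # create a test file of this size
        f"-b{block_size}",      # block size per I/O
        f"-d{duration_s}",      # test duration in seconds
        f"-t{threads}",         # threads per target
        f"-o{outstanding_io}",  # outstanding I/O requests per thread
        f"-w{write_percent}",   # percentage of writes (the rest are reads)
        "-r",                   # random I/O instead of sequential
        "-Sh",                  # disable software caching and hardware write caching
        "-L",                   # collect latency statistics
        target,
    ]


# Hypothetical test file on a cluster shared volume.
cmd = diskspd_command(r"C:\ClusterStorage\Volume1\baseline.dat")
print(" ".join(cmd))
```

Keeping the parameters in one place makes it easier to rerun the same test after hardware or configuration changes and compare the results against the baseline.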

For more information, see Recommendations for performance testing.

Workload storage resiliency: Consider the benefits of storage resiliency, usage (or capacity) efficiency, and performance. Planning for Azure Local volumes includes identifying the optimal balance between resiliency, usage efficiency, and performance. You might find it difficult to optimize this balance because maximizing one of these characteristics typically has a negative effect on one or more of the other characteristics. Increasing resiliency reduces the usable capacity. As a result, the performance might vary, depending on the resiliency type selected. When resiliency and performance are the priority, and when you use three or more nodes, the default storage configuration uses three-way mirroring for both infrastructure and user volumes.
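
The trade-off between resiliency and usable capacity can be made concrete with a rough calculation. A mirrored volume stores a full copy of the data for each mirror, so usable capacity is approximately the raw capacity divided by the number of copies. The sketch below is a simplification that ignores reserve capacity and metadata overhead, and the raw capacity figure is hypothetical.

```python
# Number of full data copies kept by each mirror type.
COPIES = {"two-way mirror": 2, "three-way mirror": 3}


def usable_capacity_tb(raw_tb, resiliency):
    """Approximate usable capacity for a mirrored volume, ignoring reserve and overhead."""
    return raw_tb / COPIES[resiliency]


raw_tb = 120  # hypothetical raw pool capacity in TB
for resiliency in COPIES:
    usable = usable_capacity_tb(raw_tb, resiliency)
    print(f"{resiliency}: {usable:.0f} TB usable ({usable / raw_tb:.0%} efficiency)")
```

Moving from two-way to three-way mirroring tolerates more simultaneous failures but lowers capacity efficiency from about 50% to about 33%.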

Pour plus d’informations, consultez Recommandations pour la planification de la capacité.

optimisation des performances réseau: envisagez d’optimiser les performances du réseau. Dans le cadre de votre conception, veillez à inclure une allocation de bande passante de trafic réseau projetée lors de la détermination de votre configuration matérielle réseau optimale.

To optimize compute performance in Azure Local, you can use GPU acceleration. GPU acceleration is beneficial for high-performance AI or machine learning workloads that involve data insights or inferencing. These workloads require deployment at edge locations because of considerations such as data gravity or security requirements. In a hybrid or on-premises deployment, it's important to take your workload performance requirements, including GPUs, into consideration. This approach helps you select the right services when you design and procure your Azure Local instances.

For more information, see Recommendations for selecting the right services.

Deploy this scenario

The following section provides an example list of the high-level tasks or typical workflow used to deploy Azure Local, including prerequisite tasks and considerations. This workflow list is intended as an example guide only. It isn't an exhaustive list of all required actions, which can vary based on organizational, geographic, or project-specific requirements.

Scenario: A project or use case requires a hybrid cloud solution in an on-premises or edge location to provide local compute for data processing, and the organization wants management and billing experiences that are consistent with Azure. For more information, see the Potential use cases section of this article. The remaining steps assume that Azure Local is the infrastructure platform solution chosen for the project.

Gather workload and use case requirements from relevant stakeholders. This strategy enables the project to confirm that the features and capabilities of Azure Local meet the workload scale, performance, and functionality requirements. This review process should include understanding the workload scale, or size, and the required features, such as Azure Arc VMs, AKS, Azure Virtual Desktop, Azure Arc-enabled data services, or the Azure Arc-enabled Machine Learning service. Document the workload RTO and RPO (reliability) values and other nonfunctional requirements (performance and load scalability) as part of this requirements gathering step.

Review the Azure Local sizer output for the recommended hardware partner solution. This output includes details of the recommended physical server hardware make and model, the number of physical nodes, and the CPU, memory, and storage configuration specifications of each physical node required to deploy and run your workloads.

Use the Azure Local sizing tool to create a new project that models the workload type and scale. This project includes the size and number of VMs and their storage requirements. You enter these details together with choices for the system type, the preferred CPU family, and your resiliency requirements for high availability and storage fault tolerance, as explained in the previous Cluster design choices section.

Review the Azure Local sizer output for the recommended hardware partner solution. This solution includes details of the recommended physical server hardware (make and model), the number of physical nodes, and the CPU, memory, and storage configuration specifications of each physical node required to deploy and run your workloads.

Contact the hardware OEM or SI partner to further qualify the suitability of the recommended hardware version against your workload requirements. If available, use OEM-specific sizing tools to determine the OEM-specific hardware sizing requirements for the intended workloads. This step typically includes discussions with the hardware OEM or SI partner about the commercial aspects of the solution. These aspects include quotations, hardware availability, lead times, and any professional or value-add services that the partner provides to help accelerate your project or business outcomes.

Deploy two ToR switches for network integration. For high availability solutions, HCI clusters require two ToR switches to be deployed. Each physical node requires four NICs, two of which must be RDMA capable, which provides two links from each node to the two ToR switches. Two NICs, one connected to each switch, are converged for outbound north-south connectivity for the compute and management networks. The other two RDMA-capable NICs are dedicated to the storage east-west traffic. If you plan to use existing network switches, ensure that the make and model of your switches are on the approved list of network switches supported by Azure Local.

Work with the hardware OEM or SI partner to arrange delivery of the hardware. The SI partner or your employees are then required to integrate the hardware into your on-premises datacenter or edge location. This work includes racking and stacking the hardware and cabling the physical network and power supply units for the physical nodes.

Perform the Azure Local instance deployment. Depending on your chosen solution version (Premier solution, Integrated system, or Validated Nodes), the hardware partner, the SI partner, or your employees can deploy the Azure Local software. This step starts by onboarding the Azure Stack HCI OS on the physical nodes into Azure Arc-enabled servers, and then starting the Azure Local cloud deployment process. Customers and partners can raise a support request directly with Microsoft in the Azure portal by selecting the Support + Troubleshooting icon, or they can contact their hardware OEM or SI partner, depending on the nature of the request and the hardware solution category.

Tip

The Azure Stack HCI OS, version 23H2 reference implementation demonstrates how to deploy a switched multiserver deployment of Azure Local by using an ARM template and a parameter file. Alternatively, the Bicep example demonstrates how to use a Bicep template to deploy an Azure Local instance, including its prerequisite resources.

Deploy highly available workloads on Azure Local by using the Azure portal, the CLI, or ARM + Azure Arc templates for automation. Use the custom location resource of the new HCI cluster as the target region when you deploy workload resources such as Azure Arc VMs, AKS, Azure Virtual Desktop session hosts, or other Azure Arc-enabled services that you can enable through AKS extensions and containerization on Azure Local.

Install monthly updates to improve the security and reliability of the platform. To keep your Azure Local instances up to date, it's important to install Microsoft software updates and hardware OEM driver and firmware updates. Update Manager applies the updates and provides a centralized and scalable solution to install updates across a single cluster or multiple clusters. Check with your hardware OEM partner to determine the process for installing hardware driver and firmware updates, because this process can vary depending on your chosen hardware solution category type (Premier solution, Integrated system, or Validated Nodes). For more information, see Infrastructure updates.
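The per-node network layout described in the ToR switch step of this workflow (four NICs, two converged for management and compute, two RDMA-capable NICs dedicated to storage, and each pair split across both switches) can be written down as a small check. The following Python sketch is only an illustration. The `Nic` type, the switch labels, and the intent labels are hypothetical names introduced here and aren't part of any Azure Local tooling.

```python
from __future__ import annotations

from dataclasses import dataclass

TOR_SWITCHES = {"ToR1", "ToR2"}               # hypothetical switch labels
INTENTS = ("management_compute", "storage")   # hypothetical traffic intent labels


@dataclass(frozen=True)
class Nic:
    name: str
    switch: str   # which ToR switch the NIC is cabled to
    intent: str   # traffic type carried by the NIC
    rdma: bool    # whether the NIC is RDMA capable


def validate_node_nics(nics: list[Nic]) -> list[str]:
    """Return a list of problems; an empty list means the layout matches the design."""
    problems: list[str] = []
    if len(nics) != 4:
        problems.append(f"expected 4 NICs, found {len(nics)}")
    for intent in INTENTS:
        group = [n for n in nics if n.intent == intent]
        if len(group) != 2:
            problems.append(f"{intent}: expected 2 NICs, found {len(group)}")
        elif {n.switch for n in group} != TOR_SWITCHES:
            problems.append(f"{intent}: NICs must connect to both ToR switches")
    for n in nics:
        if n.intent == "storage" and not n.rdma:
            problems.append(f"{n.name}: storage NIC must be RDMA capable")
    return problems


node = [
    Nic("NIC1", "ToR1", "management_compute", rdma=False),
    Nic("NIC2", "ToR2", "management_compute", rdma=False),
    Nic("NIC3", "ToR1", "storage", rdma=True),
    Nic("NIC4", "ToR2", "storage", rdma=True),
]
print(validate_node_nics(node))  # prints []
```

A check like this could run against an inventory export before hardware is ordered or cabled. It doesn't replace the approved switch list or the validation that the deployment process performs.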

Related resources

- Hybrid architecture design

- Azure hybrid options

- Automation in a hybrid environment

- Azure Automation State Configuration

- Optimize administration of SQL Server instances in on-premises and multicloud environments by using Azure Arc

Next steps

Product documentation:

- Azure Stack HCI OS, version 23H2

- AKS on Azure Local

- Azure Virtual Desktop for Azure Local

- What is Azure Local monitoring?

- Protect VM workloads with Site Recovery on Azure Local

- Monitor overview

- Change Tracking and Inventory overview

- Update Manager overview

- What are Azure Arc-enabled data services?

- What are Azure Arc-enabled servers?

- What is the Backup service?

Product documentation for details on specific Azure services:

- Azure Local

- Azure Arc

- Key Vault

- Azure Blob Storage

- Monitor

- Azure Policy

- Azure Container Registry

- Defender for Cloud

- Site Recovery

- Backup

Microsoft Learn modules:

- Configure Monitor

- Design your site recovery solution in Azure

- Introduction to Azure Arc-enabled servers

- Introduction to Azure Arc-enabled data services

- Introduction to AKS

- Scale model deployment with Machine Learning anywhere - Tech Community Blog

- Realizing Machine Learning anywhere with AKS and Azure Arc-enabled Machine Learning - Tech Community Blog

- Machine Learning on AKS hybrid and Stack HCI using Azure Arc-enabled Machine Learning - Tech Community Blog

- Introduction to Kubernetes compute target in Machine Learning

- Keep your VMs updated

- Protect your VM settings with Automation state configuration

- Protect your VMs by using Backup