Configure and run data quality scan

Data quality scans review your data assets based on their applied data quality rules and produce a score. Your data stewards can use that score to assess the data health and address any issues that might be lowering the quality of your data.

Prerequisites

- To run and schedule data quality assessment scans, your users must be in the data quality steward role.

- Currently, the Microsoft Purview account can be set to allow public access or managed vNet access so that data quality scans can run.

Data quality life cycle

Data quality scanning is the seventh step the data quality life cycle for a data asset. Previous steps are:

- Assign users(s) data quality steward permissions in Unified Catalog to use all data quality features.

- Register and scan a data source in your Microsoft Purview Data Map.

- Add your data asset to a data product

- Set up a data source connection to prepare your source for data quality assessment.

- Configure and run data profiling for an asset in your data source.

- When profiling is complete, browse the results for each column in the data asset to understand your data's current structure and state.

- Set up data quality rules based on the profiling results, and apply them to your data asset.

Supported multi-cloud data sources

- Azure Data Lake Storage (ADLS Gen2)

- File Types: Delta Parquet and Parquet

- Azure SQL Database

- Fabric data estate in OneLake is including shortcut and mirroring data estate. Data Quality scanning is supported only for Lakehouse delta tables and parquet files.

- Mirroring data estate: CosmosDB, Snowflake, Azure SQL

- Shortcut data estate: AWS S3, GCS, AdlsG2, and dataverse

- Azure Synapse serverless and data warehouse

- Azure Databricks Unity Catalog

- Snowflake

- Google Big Query (Private Preview)

Important

Data Quality for Parquet file is designed to support:

- A directory with Parquet Part File. For example: ./Sales/{Parquet Part Files}. The Fully Qualified Name must follow

https://(storage account).dfs.core.windows.net/(container)/path/path2/{SparkPartitions}. Make sure we do not have {n} patterns in directory/sub-directory structure, must rather be a direct FQN leading to {SparkPartitions}. - A directory with Partitioned Parquet Files, partitioned by Columns within the dataset like sales data partitioned by year and month. for example: ./Sales/{Year=2018}/{Month=Dec}/{Parquet Part Files}.

Both of these essential scenario which present a consistent parquet dataset schema are supported.

Limitation: It is not designed to or will not support N arbitrary Hierarchies of Directories with Parquet Files. We advise the customer to present data in (1) or (2) constructed structure.

Supported authentication methods

Currently, Microsoft Purview can only run data quality scans using Managed Identity as authentication option. Data Quality services run on Apache Spark 3.4 and Delta Lake 2.4. For more information about supported regions, see data quality overview.

Important

If the schema is updated on the data source, it is necessary to rerun data map scan before running a data quality scan.

Run a data quality scan

Configure a data source connections to the assets you're scanning for data quality, if you haven't already created them.

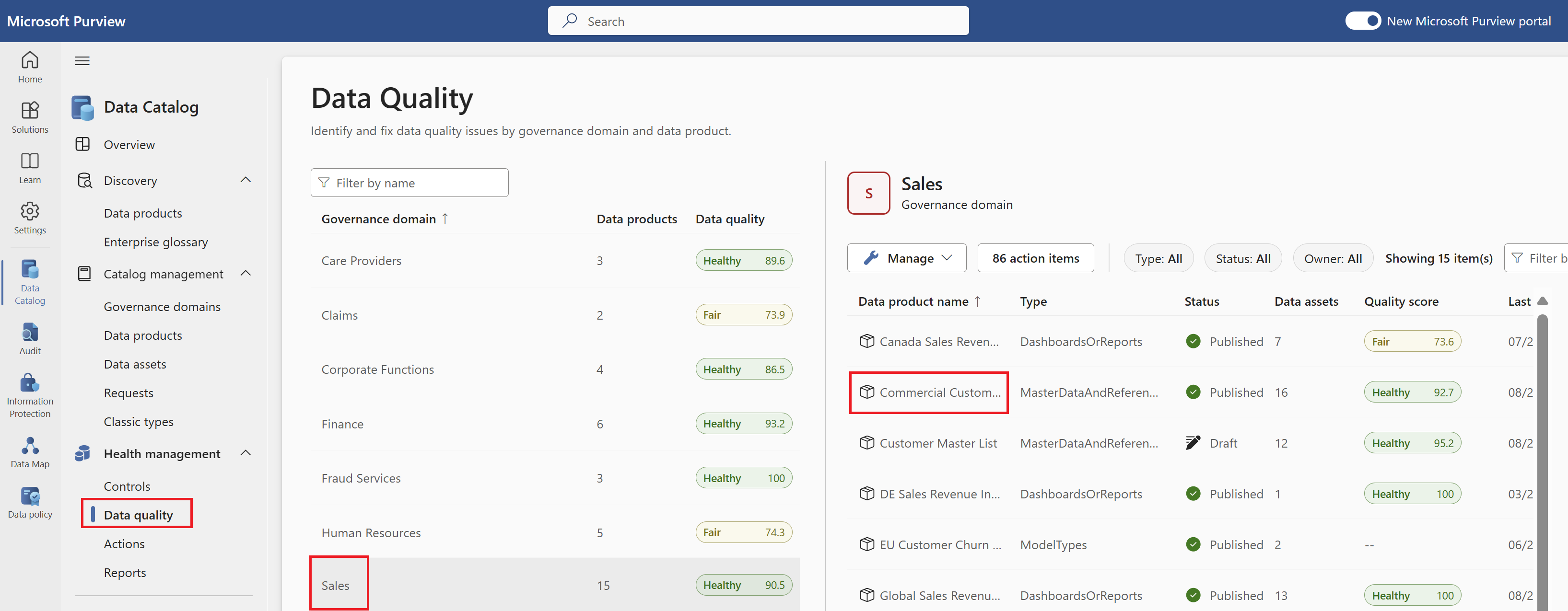

From Microsoft Purview Unified Catalog, select Health Management menu and Data quality submenu.

Select a governance domain from the list.

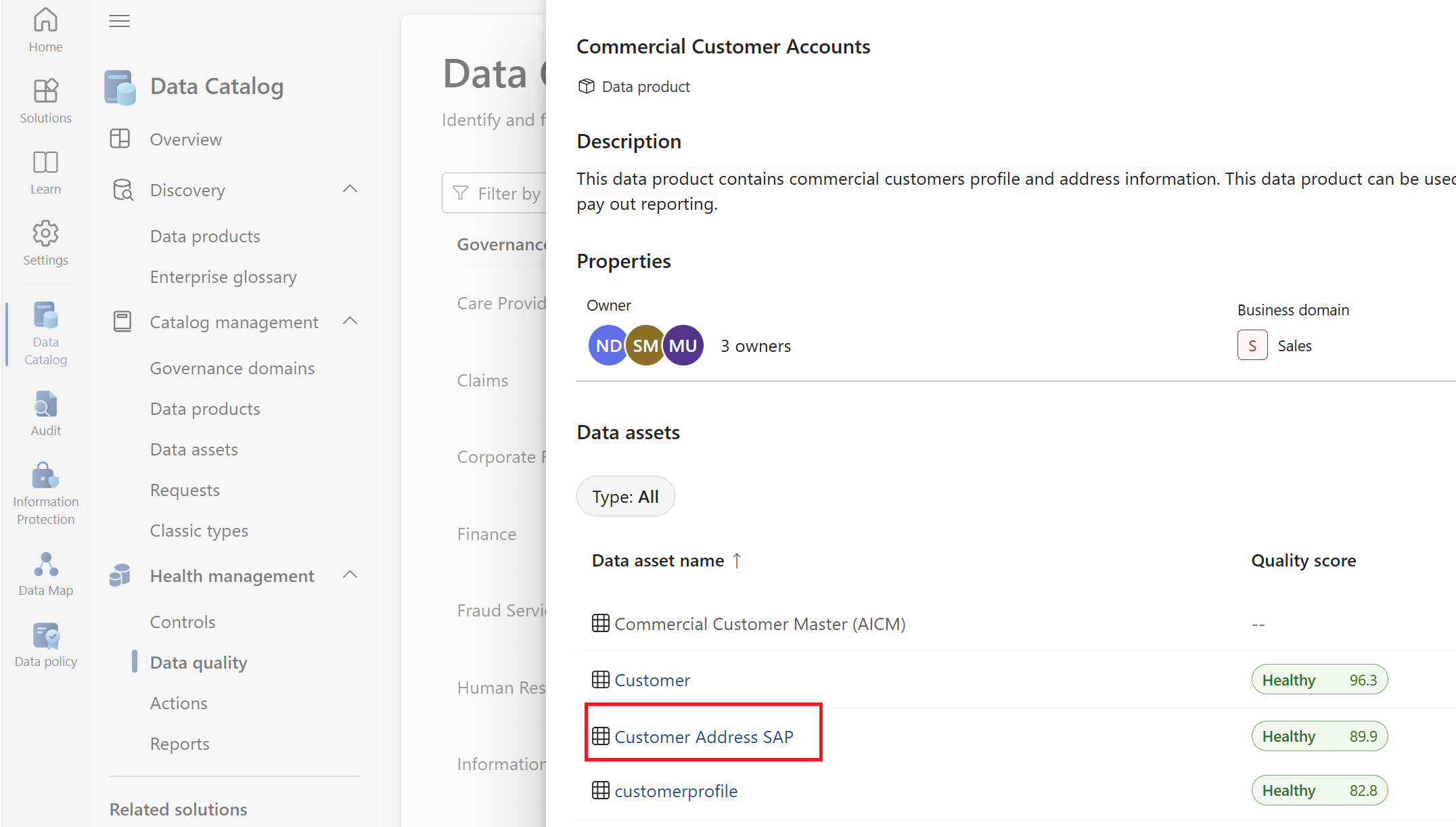

Select a data product to assess data quality of the data assets linked to that product.

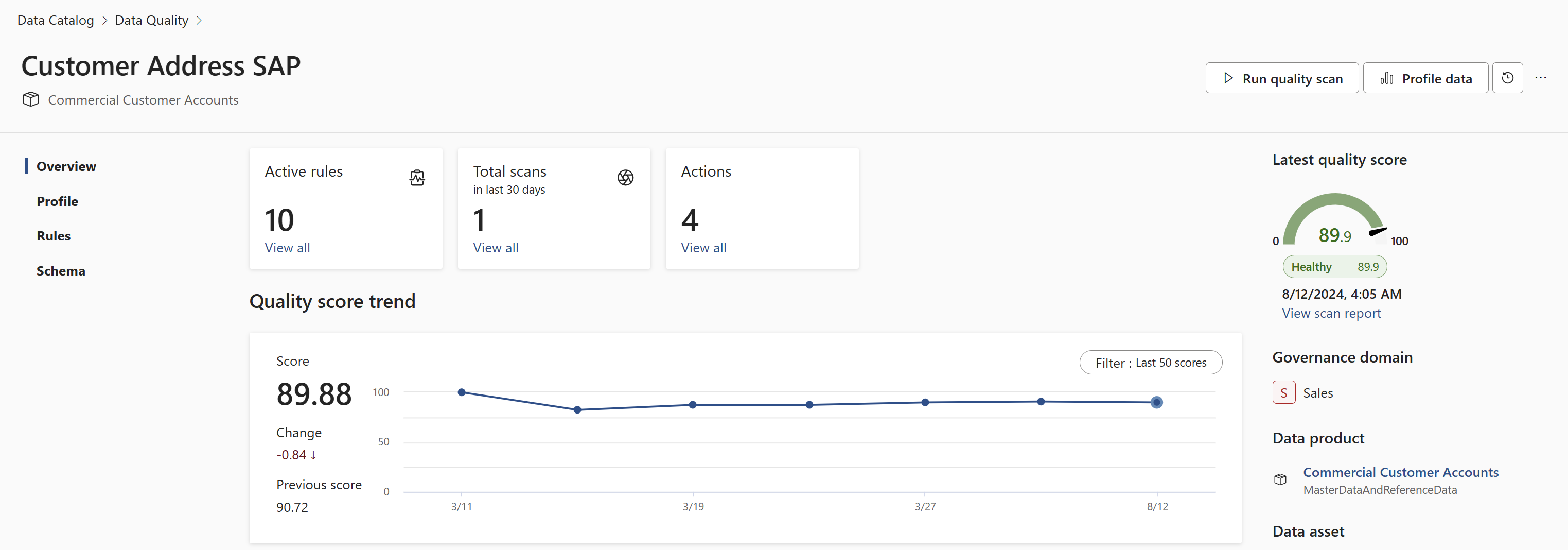

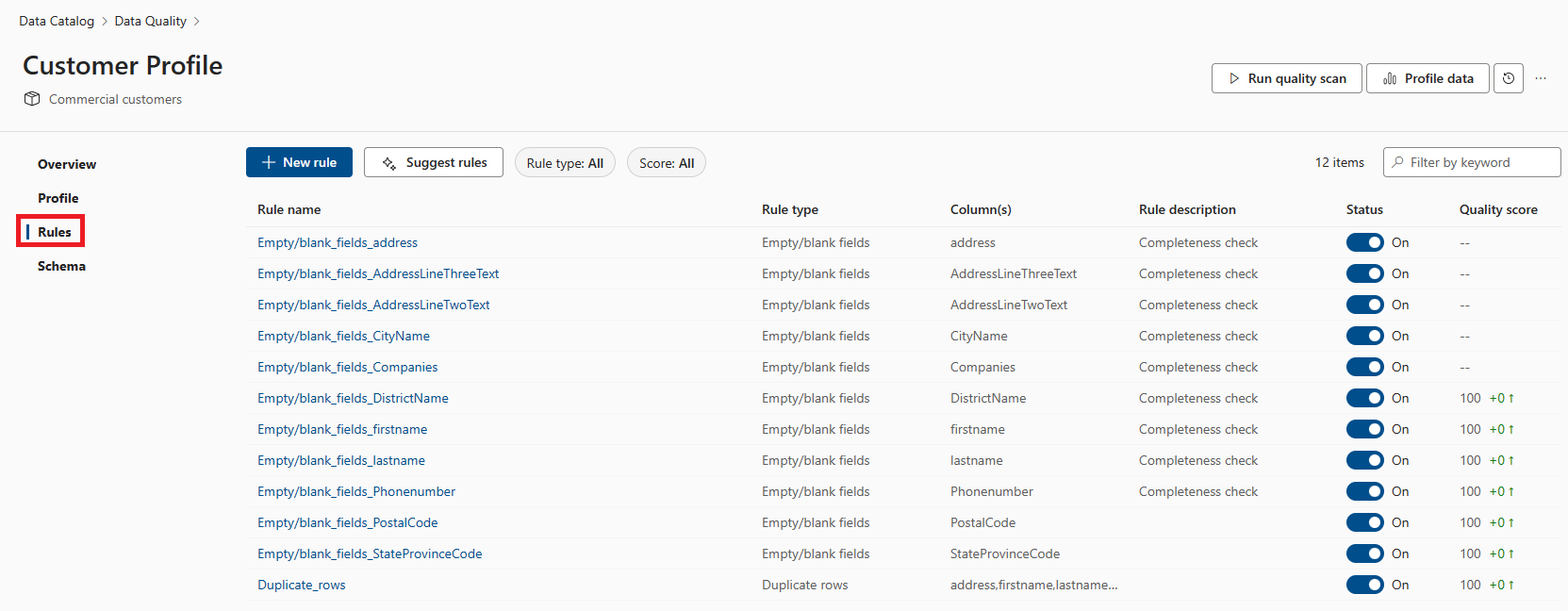

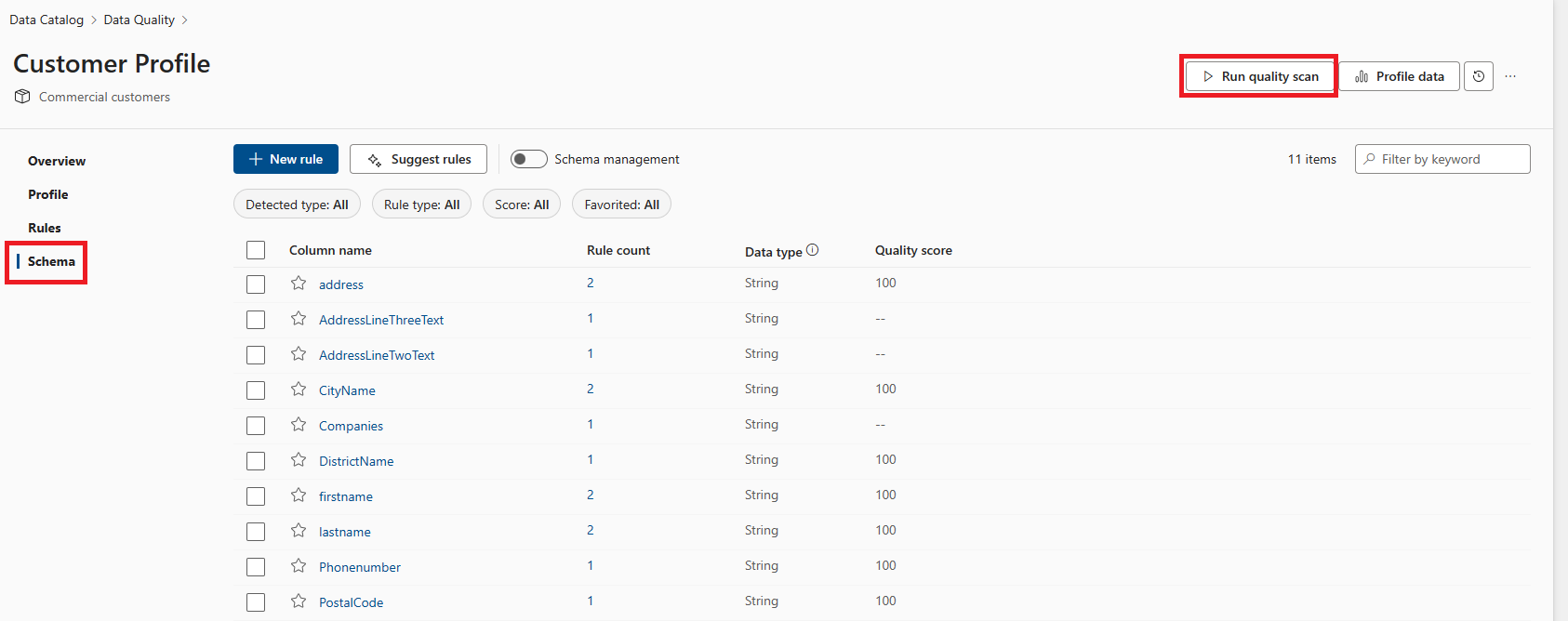

Selecting the data product takes you to the data quality Overview page. You can browse the existing data quality rules and add new rules by selecting Rules menu on this page. You can browse schema of the data asset by selecting the Schema menu from this page.

Browse the rules that already added to the scan for the selected assets, and toggle them on or off in the Status column.

Run the quality scan by selecting the Run quality scan button on the overview page.

While the scan is running, you can track its progress from the data quality monitoring page in the governance domain.

Schedule data quality scans

Although data quality scans can be run on an ad-hoc basis by selecting the Run quality scan button, in production scenarios it's likely that the source data is being constantly updated and, so we want to make sure we're regularly monitoring its data quality in order to detect any issues. To enable us to manage regularly updating quality scans we can automate the scanning process.

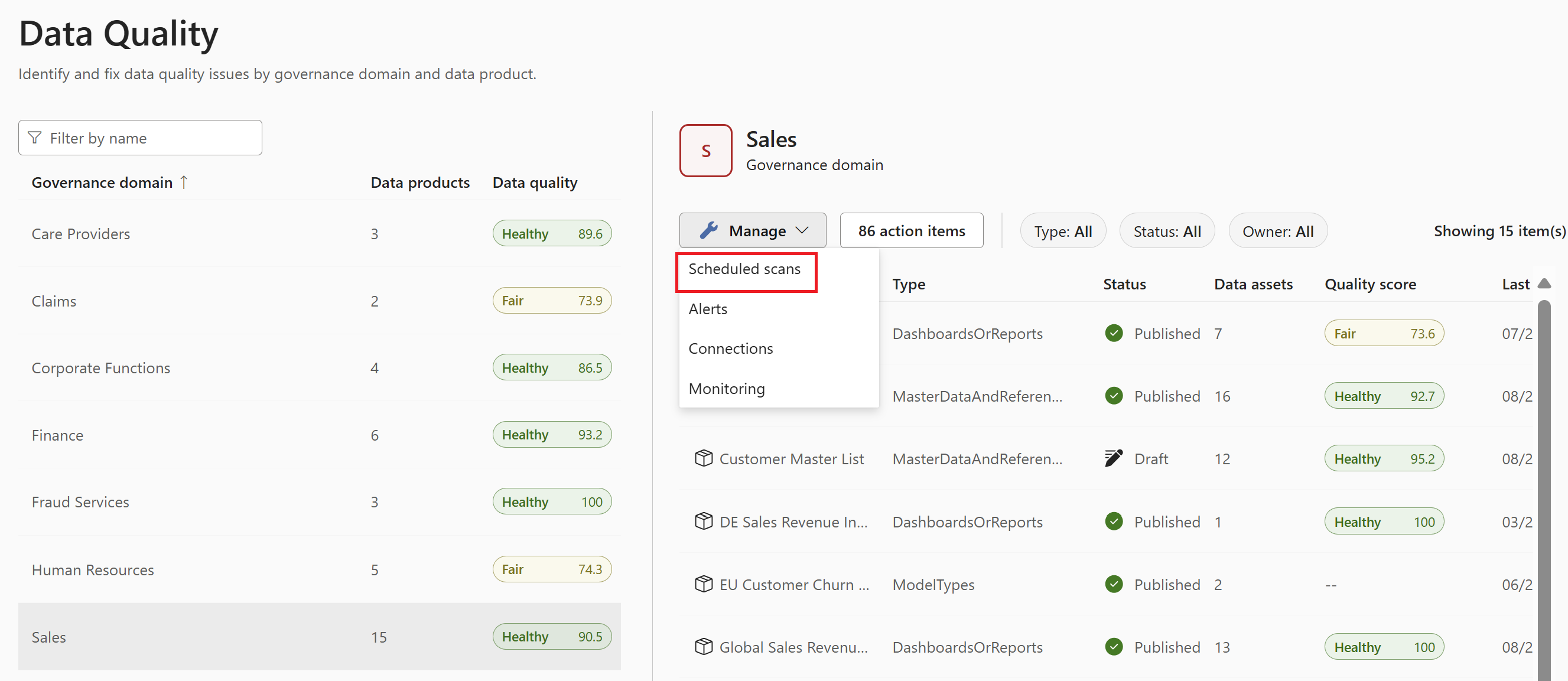

From Microsoft Purview Unified Catalog, select Health Management menu and Data quality submenu.

Select a governance domain from the list.

Select the Manage button from the right side of the page and select Scheduled scans.

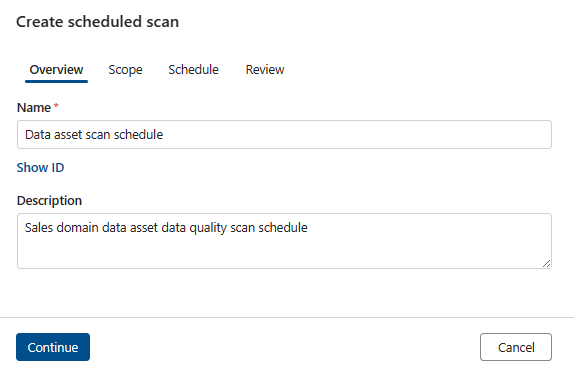

Fill out the form on the Create scheduled scan page. Add a name and description for the source you're setting up the schedule.

Select Continue.

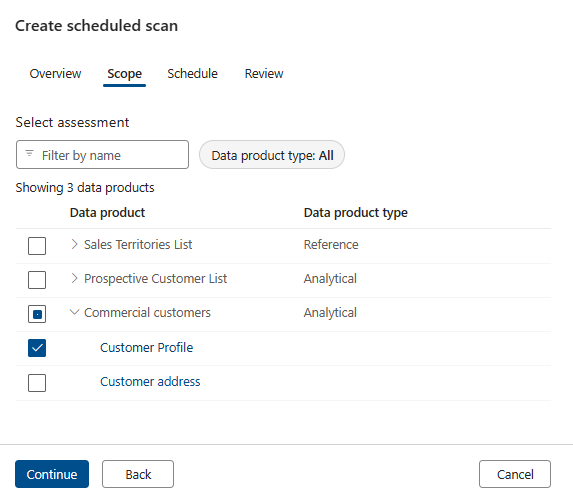

On the Scope tab, select individual data product and assets or all data products and data assets of the entire governance domain.

Select Continue.

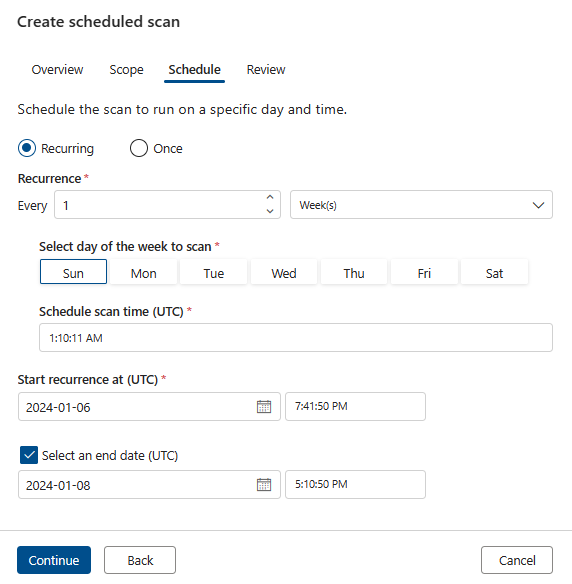

Set a schedule based on your preferences and select Continue.

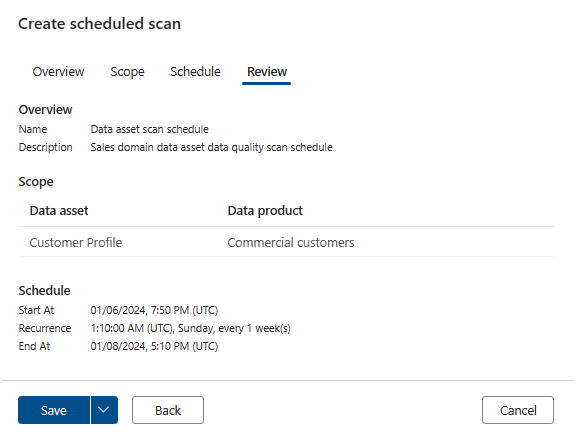

On the Review tab, select Save (or Save and run to test immediately) to complete scheduling the data quality assessment scan.

You can monitor scheduled scans on the data quality job monitoring page under the Scans tab.

Delete previous data quality scans

- From Microsoft Purview Unified Catalog, select Health Management menu and Data quality submenu.

- Select a governance domain from the list.

- Select the ellipsis ('...') button at the top of the page.

- Select Delete data quality data to delete the history of data quality runs.

Note

We recommend only using this delete for test runs, errored data quality runs, or in the case that you are removing a data asset from a data product.

If you want to remove a data asset from a data product, if that data asset has a data quality score, first you need to delete the data quality score, then remove the data asset from the data product.

Important

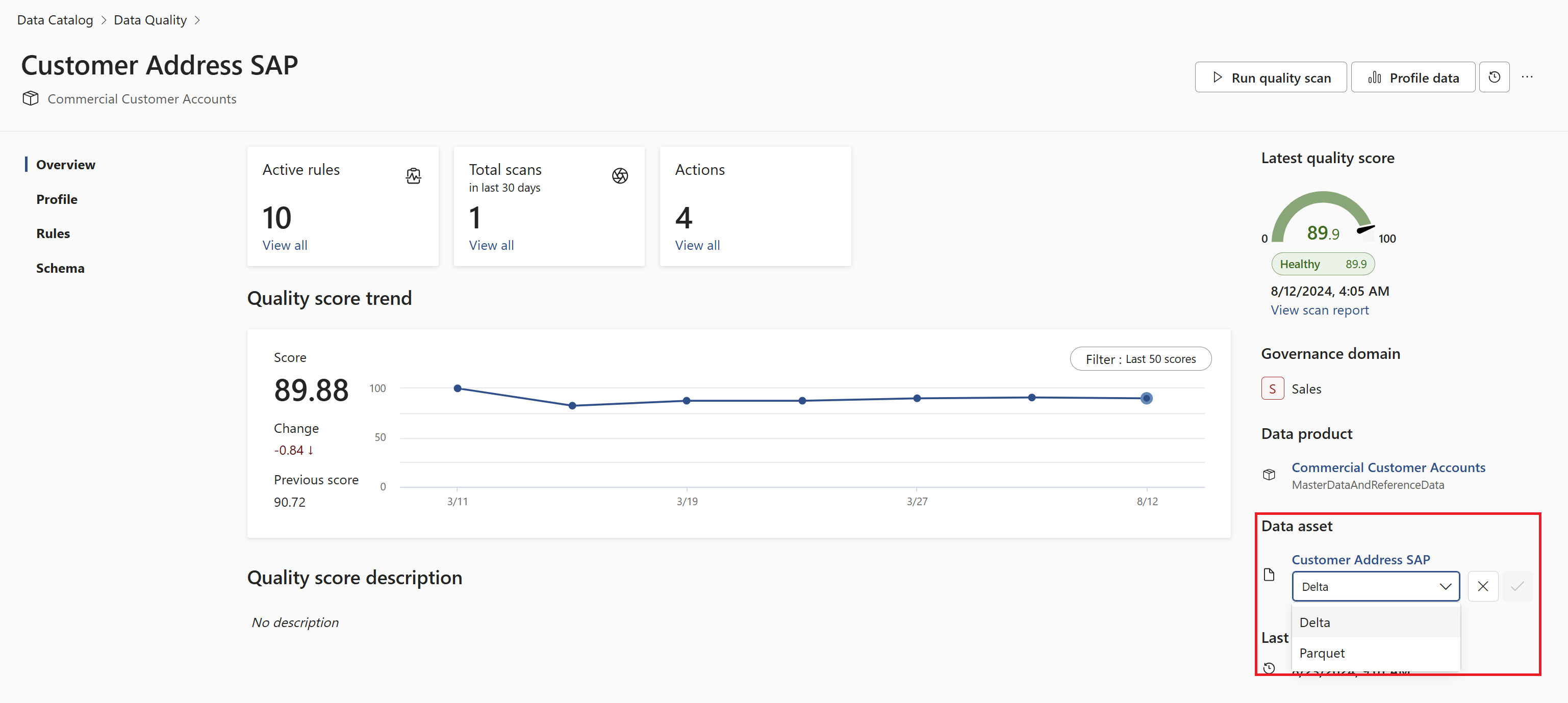

Delta format is mostly autodetected if the format is standard and correct in the source systems. To scan Parquet or iceberg file format for data quality scoring you need to change the data asset type to Parquet or iceberg. As shown in the screenshot below, change the default data asset type *Parquet or other supported format if your data asset file format is not delta. This change has to be done before configuring Data Quality scanning job.

Related contents

- Data Quality for Fabric Data estate

- Data Quality for Fabric Mirrored data sources

- Data Quality for Fabric shortcut data sources

- Data Quality for Azure Synapse serverless and data warehouses

- Data Quality for Azure Databricks Unity Catalog

- Data Quality for Snowflake data sources

- Data Quality for Google Big Query

Next steps

- Monitor data quality scan

- Review your scan results to evaluate your data product's current data quality.

- Configure alerts for data quality scan results