Understand large volumes in Azure NetApp Files

Volumes in Azure NetApp Files are the way you present high-performance, cost-effective storage to your network attached storage (NAS) clients in the Azure cloud. Volumes act as independent file systems with their own capacity, file counts, ACLs, snapshots, and file system IDs. These qualities provide a way to separate datasets into individual secure tenants.

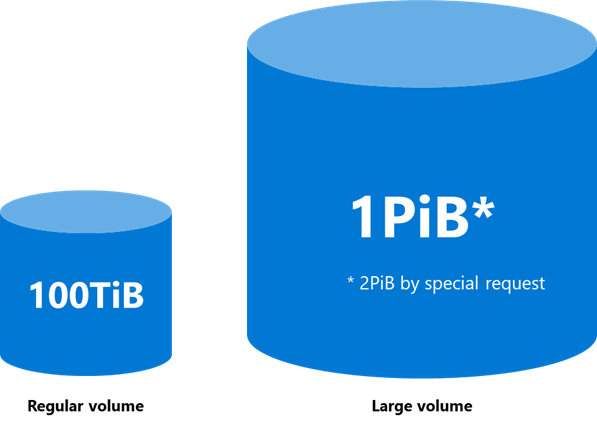

All resources in Azure NetApp Files have limits. Regular volumes have the following limits:

| Limit type | Limits |
|---|---|
| Capacity | |
| File count | 2,147,483,632 |
| Performance | |

Large volumes have the following limits:

| Limit type | Values |
|---|---|
| Capacity | |
| File count | 15,938,355,048 |
| Performance | |

Effect of large volumes on performance

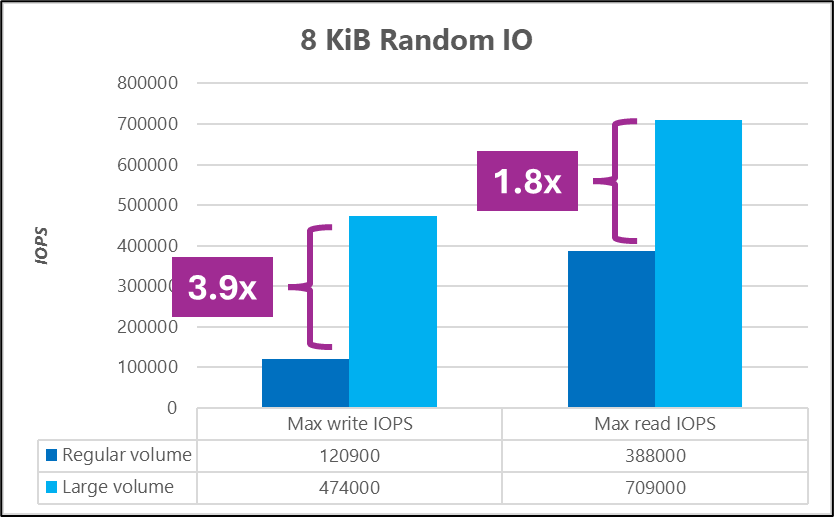

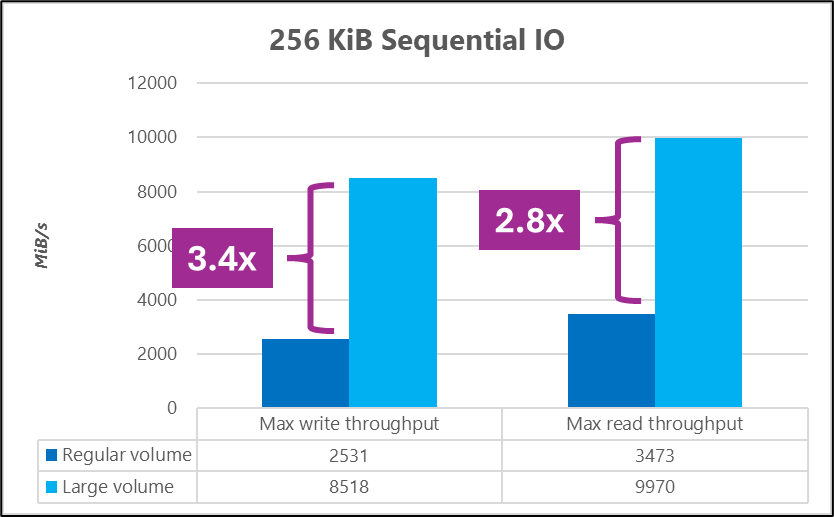

In many cases, a regular volume can handle the performance needs of a production workload, particularly when dealing with database workloads, general file shares, and Azure VMware Solution or virtual desktop infrastructure (VDI) workloads. When workloads are metadata-heavy or require scale beyond what a regular volume can handle, a large volume can meet those performance needs with minimal cost impact.

For instance, the graphs in this section show that a large volume can deliver two to three times the performance at scale of a regular volume.

For more information about performance tests, see Large volume performance benchmarks for Linux and Regular volume performance benchmarks for Linux.

For example, in benchmark tests using Flexible I/O Tester (FIO), a large volume achieved higher IOPS and throughput than a regular volume.

Workload types and use cases

Regular volumes can handle most workloads. Once capacity, file count, performance, or scale limits are reached, new volumes must be created. This condition adds unnecessary complexity to a solution.
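To make the point about added complexity concrete, the following is a minimal sketch that uses only the file-count limits quoted earlier in this article. It estimates how many regular volumes a dataset would need and whether the same dataset fits in a single large volume. The function names are hypothetical helpers, not part of any Azure SDK, and the sketch deliberately ignores capacity and performance limits.

```python
# File-count limits quoted in the limit tables of this article.
REGULAR_VOLUME_MAX_FILES = 2_147_483_632
LARGE_VOLUME_MAX_FILES = 15_938_355_048


def regular_volumes_needed(file_count: int) -> int:
    """Return the number of regular volumes needed to hold file_count files.

    Hypothetical helper: considers the file-count limit only.
    """
    if file_count < 0:
        raise ValueError("file_count must be non-negative")
    # Integer ceiling division avoids floating-point rounding on large counts.
    needed = (file_count + REGULAR_VOLUME_MAX_FILES - 1) // REGULAR_VOLUME_MAX_FILES
    return max(1, needed)


def fits_in_one_large_volume(file_count: int) -> bool:
    """Return True if file_count is within the large-volume file-count limit."""
    return 0 <= file_count <= LARGE_VOLUME_MAX_FILES


if __name__ == "__main__":
    files = 5_000_000_000  # illustrative dataset size, not a measured value
    print(regular_volumes_needed(files))
    print(fits_in_one_large_volume(files))
```

With a dataset of five billion files, the sketch reports that the data would have to be split across three regular volumes, while a single large volume could hold it.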

Large volumes allow workloads to extend beyond the current limitations of regular volumes. The following table shows some examples of use cases for each volume type.

| Volume type | Primary use cases |
|---|---|
| Regular volumes | |
| Large volumes | |