Metrics in Application Insights

Application Insights supports three types of metrics: standard (preaggregated), log-based, and custom metrics. Each one brings unique value to monitoring application health, diagnostics, and analytics. Developers who are instrumenting applications can decide which type of metric is best suited to a particular scenario. Decisions are based on the size of the application, the expected volume of telemetry, and business requirements for metrics precision and alerting. This article explains the differences among all supported metric types.

Standard metrics

Standard metrics in Application Insights are predefined metrics that are automatically collected and monitored by the service. These metrics cover a wide range of performance and usage indicators, such as CPU usage, memory consumption, request rates, and response times. Standard metrics provide a comprehensive overview of your application's health and performance without requiring any additional configuration. Standard metrics are preaggregated during collection and stored as a time series in a specialized repository with only key dimensions, which gives them better performance at query time. This makes standard metrics the best choice for near real-time alerting on metric dimensions and for more responsive dashboards.

Log-based metrics

Log-based metrics in Application Insights are a query-time concept, represented as a time series on top of the log data of your application. The underlying logs aren't preaggregated at collection or storage time and retain all properties of each log entry. This retention makes it possible to use log properties as dimensions on log-based metrics at query time for metric chart filtering and metric splitting, giving log-based metrics superior analytical and diagnostic value. However, telemetry volume reduction techniques such as sampling and telemetry filtering, commonly used when monitoring applications that generate large volumes of telemetry, reduce the number of collected log entries and therefore reduce the accuracy of log-based metrics.
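As a rough illustration of the query-time idea, the following Python sketch builds a per-minute count series from in-memory log records and splits it by a log property. The record layout and the `log_based_count` helper are hypothetical stand-ins for a Kusto query over real logs; they aren't part of any Application Insights SDK.

```python
from collections import Counter
from datetime import datetime

# Hypothetical log records; real request logs carry many more properties.
LOGS = [
    {"timestamp": datetime(2024, 6, 21, 9, 0, 12), "resultCode": "200"},
    {"timestamp": datetime(2024, 6, 21, 9, 0, 47), "resultCode": "500"},
    {"timestamp": datetime(2024, 6, 21, 9, 1, 5), "resultCode": "200"},
    {"timestamp": datetime(2024, 6, 21, 9, 1, 30), "resultCode": "200"},
]


def log_based_count(logs, split_by):
    """Count log entries per (minute, dimension value) at query time."""
    series = Counter()
    for entry in logs:
        # Truncate the timestamp to the start of its minute.
        minute = entry["timestamp"].replace(second=0, microsecond=0)
        series[(minute, entry[split_by])] += 1
    return dict(series)


# Any retained property can serve as a dimension because the raw entries exist.
series = log_based_count(LOGS, split_by="resultCode")

# Keeping only every other record is a crude stand-in for sampling:
# fewer entries reach the query, so the raw counts shrink.
sampled = log_based_count(LOGS[::2], split_by="resultCode")
```

Because the series is computed from whatever entries were stored, anything that drops entries before storage changes the result, which is the accuracy trade-off described above.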

Custom metrics (preview)

Custom metrics in Application Insights allow you to define and track specific measurements that are unique to your application. These metrics can be created by instrumenting your code to send custom telemetry data to Application Insights. Custom metrics provide the flexibility to monitor any aspect of your application that isn't covered by standard metrics, enabling you to gain deeper insights into your application's behavior and performance.

For more information, see Custom metrics in Azure Monitor (preview).

Note

Application Insights also provides a feature called Live Metrics stream, which allows for near real-time monitoring of your web applications and doesn't store any telemetry data.

Metrics comparison

| Feature | Standard metrics | Log-based metrics | Custom metrics |

|---|---|---|---|

| Data source | Preaggregated time series data collected during runtime. | Derived from log data using Kusto queries. | User-defined metrics collected via the Application Insights SDK or API. |

| Granularity | Fixed intervals (1 minute). | Depends on the granularity of the log data itself. | Flexible granularity based on user-defined metrics. |

| Accuracy | High, not affected by log sampling. | Can be affected by sampling and filtering. | High accuracy, especially when using preaggregated methods like GetMetric. |

| Cost | Included in Application Insights pricing. | Based on log data ingestion and query costs. | See Pricing model and retention. |

| Configuration | Automatically available with minimal configuration. | Requires configuration of log queries to extract the desired metrics from log data. | Requires custom implementation and configuration in code. |

| Query performance | Fast, due to preaggregation. | Slower, as it involves querying log data. | Depends on data volume and query complexity. |

| Storage | Stored as time series data in the Azure Monitor metrics store. | Stored as logs in Log Analytics workspace. | Stored in both Log Analytics and the Azure Monitor metrics store. |

| Alerting | Supports real-time alerting. | Allows for complex alerting scenarios based on detailed log data. | Flexible alerting based on user-defined metrics. |

| Service limit | Subject to Application Insights limits. | Subject to Log Analytics workspace limits. | Limited by the quota for free metrics and the cost for additional dimensions. |

| Use cases | Real-time monitoring, performance dashboards, and quick insights. | Detailed diagnostics, troubleshooting, and in-depth analysis. | Tailored performance indicators and business-specific metrics. |

| Examples | CPU usage, memory usage, request duration. | Request counts, exception traces, dependency calls. | Custom application-specific metrics like user engagement and feature usage. |

Metrics preaggregation

OpenTelemetry SDKs and newer Application Insights SDKs (Classic API) preaggregate metrics during collection to reduce the volume of data sent from the SDK to the telemetry channel endpoint. This process applies to standard metrics sent by default, so the accuracy isn't affected by sampling or filtering. It also applies to custom metrics sent using the OpenTelemetry API or GetMetric and TrackValue, which results in less data ingestion and lower cost. If your version of the Application Insights SDK supports GetMetric and TrackValue, it's the preferred method of sending custom metrics.

For SDKs that don't implement preaggregation (that is, older versions of the Application Insights SDKs and browser instrumentation), the Application Insights back end still populates the new metrics by aggregating the events received by the Application Insights telemetry channel endpoint. For custom metrics, you can use the trackMetric method. Although you don't benefit from the reduced volume of data transmitted over the wire, you can still use the preaggregated metrics and get better query performance and support for near real-time dimensional alerting.

The telemetry channel endpoint preaggregates events before ingestion sampling. For this reason, ingestion sampling never affects the accuracy of preaggregated metrics, regardless of the SDK version you use with your application.
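To make the idea of preaggregation concrete, here's a minimal Python sketch that folds individual measurements into one summary per interval before anything is sent. The `Aggregate` class and its `track_value` method are hypothetical names chosen for illustration; they only mimic the general approach and aren't the GetMetric or OpenTelemetry API.

```python
from dataclasses import dataclass


@dataclass
class Aggregate:
    """Summary of all values recorded for one metric in one interval."""
    count: int = 0
    total: float = 0.0
    minimum: float = float("inf")
    maximum: float = float("-inf")

    def track_value(self, value: float) -> None:
        # Update the running summary instead of storing the raw value.
        self.count += 1
        self.total += value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)


def preaggregate(values):
    """Fold raw measurements into a single Aggregate."""
    aggregate = Aggregate()
    for value in values:
        aggregate.track_value(value)
    return aggregate


# Four raw request durations (milliseconds) collapse into one summary record.
durations_ms = [120.0, 80.0, 310.0, 95.0]
summary = preaggregate(durations_ms)
```

The summary is computed from every measurement, so it stays accurate even if the individual telemetry items are later sampled or filtered.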

The following tables list where metrics are preaggregated.

Metrics preaggregation with Azure Monitor OpenTelemetry Distro

| Current production SDK | Standard metrics preaggregation | Custom metrics preaggregation |

|---|---|---|

| ASP.NET Core | SDK | SDK via OpenTelemetry API |

| .NET (via Exporter) | SDK | SDK via OpenTelemetry API |

| Java (3.x) | SDK | SDK via OpenTelemetry API |

| Java native | SDK | SDK via OpenTelemetry API |

| Node.js | SDK | SDK via OpenTelemetry API |

| Python | SDK | SDK via OpenTelemetry API |

Metrics preaggregation with Application Insights SDK (Classic API)

| Current production SDK | Standard metrics preaggregation | Custom metrics preaggregation |

|---|---|---|

| .NET Core and .NET Framework | SDK (V2.13.1+) | SDK (V2.7.2+) via GetMetric; telemetry channel endpoint via TrackMetric |

| Java (2.x) | Telemetry channel endpoint | Telemetry channel endpoint via TrackMetric |

| JavaScript (Browser) | Telemetry channel endpoint | Telemetry channel endpoint via TrackMetric |

| Node.js | Telemetry channel endpoint | Telemetry channel endpoint via TrackMetric |

| Python | Telemetry channel endpoint | SDK via OpenCensus.stats (retired); telemetry channel endpoint via TrackMetric |

Caution

The Application Insights Java 2.x SDK is no longer recommended. Use the OpenTelemetry-based Java offering instead.

The OpenCensus Python SDK is retired. We recommend the OpenTelemetry-based Python offering and provide migration guidance.

Metrics preaggregation with autoinstrumentation

With autoinstrumentation, the SDK is automatically added to your application code and can't be customized. For custom metrics, manual instrumentation is required.

| Current production SDK | Standard metrics preaggregation | Custom metrics preaggregation |

|---|---|---|

| ASP.NET Core | SDK ¹ | Not supported |

| ASP.NET | SDK ² | Not supported |

| Java | SDK | Supported ³ |

| Node.js | SDK | Not supported |

| Python | SDK | Not supported |

Footnotes

- ¹ ASP.NET Core autoinstrumentation on App Service emits standard metrics without dimensions. Manual instrumentation is required for all dimensions.

- ² ASP.NET autoinstrumentation on virtual machines/virtual machine scale sets and on-premises emits standard metrics without dimensions. The same is true for Azure App Service, but the collection level must be set to recommended. Manual instrumentation is required for all dimensions.

- ³ The Java agent used with autoinstrumentation captures metrics emitted by popular libraries and sends them to Application Insights as custom metrics.

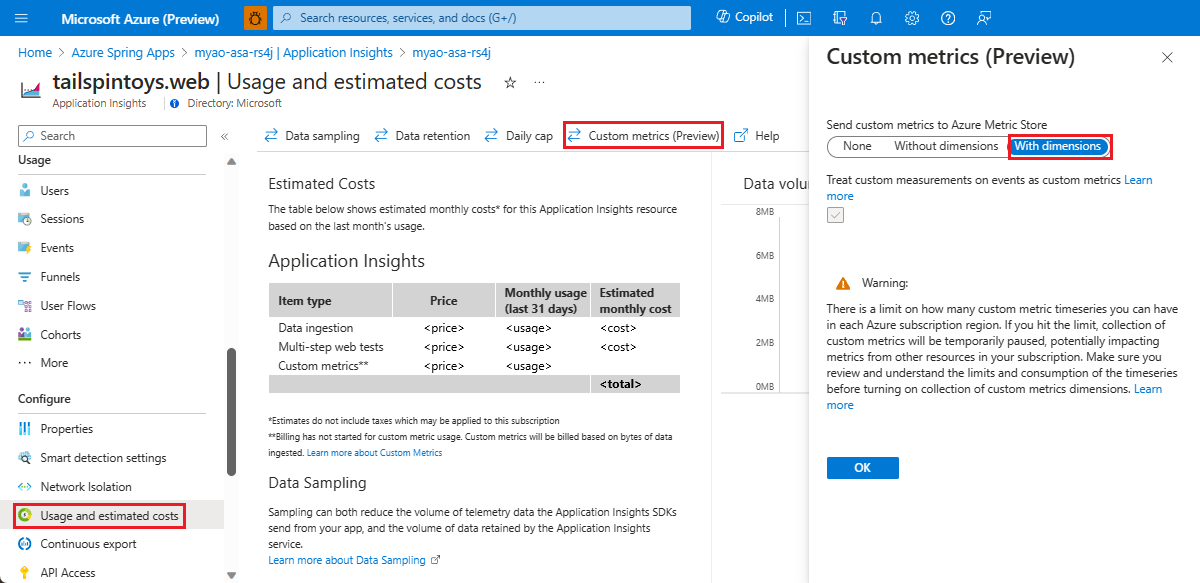

Custom metrics dimensions and preaggregation

All metrics that you send using OpenTelemetry, trackMetric, or GetMetric and TrackValue API calls are automatically stored in both the metrics store and logs. These metrics can be found in the customMetrics table in Application Insights and in Metrics Explorer under the Custom Metric Namespace called azure.applicationinsights. Although the log-based version of your custom metric always retains all dimensions, the preaggregated version of the metric is stored by default with no dimensions. Retaining dimensions of custom metrics is a Preview feature that can be turned on from the Usage and estimated cost tab by selecting With dimensions under Send custom metrics to Azure Metric Store.

Quotas

Preaggregated metrics are stored as time series in Azure Monitor. Azure Monitor quotas on custom metrics apply.

Note

Going over the quota might have unintended consequences. Azure Monitor might become unreliable in your subscription or region. To learn how to avoid exceeding the quota, see Design limitations and considerations.

Why is collection of custom metrics dimensions turned off by default?

The collection of custom metrics dimensions is turned off by default because in the future, storing custom metrics with dimensions will be billed separately from Application Insights. Storing nondimensional custom metrics remains free (up to a quota). You can learn about the upcoming pricing model changes on our official pricing page.

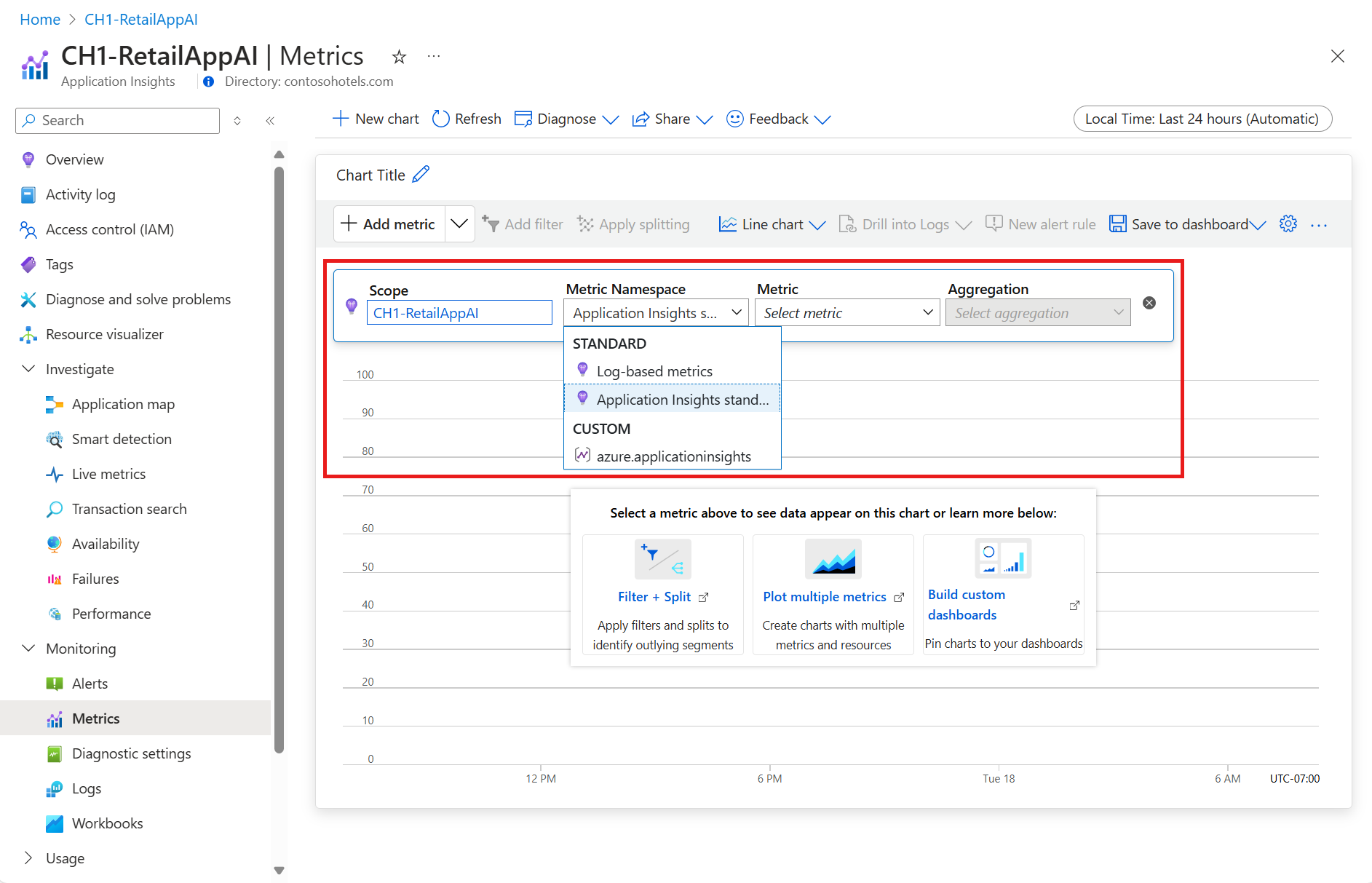

Create charts and explore metrics

Use Azure Monitor metrics explorer to plot charts from preaggregated, log-based, and custom metrics, and to author dashboards with charts. After you select the Application Insights resource you want, use the namespace picker to switch between metrics.

Pricing models for Application Insights metrics

Ingesting metrics into Application Insights, whether log-based or preaggregated, generates costs based on the size of the ingested data. For more information, see Azure Monitor Logs pricing details. Your custom metrics, including all their dimensions, are always stored in the Application Insights log store. Also, a preaggregated version of your custom metrics with no dimensions is forwarded to the metrics store by default.

Selecting the Enable alerting on custom metric dimensions option to store all dimensions of the preaggregated metrics in the metric store can generate extra costs based on custom metrics pricing.

Available metrics

The following sections list metrics with supported aggregations and dimensions. The details about log-based metrics include the underlying Kusto query statements.

Availability metrics

Metrics in the Availability category enable you to see the health of your web application as observed from points around the world. Configure the availability tests to start using any metrics from this category.

Availability (availabilityResults/availabilityPercentage)

The Availability metric shows the percentage of the web test runs that didn't detect any issues. The lowest possible value is 0, which indicates that all of the web test runs have failed. The value of 100 means that all of the web test runs passed the validation criteria.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Percentage | Avg | Run location, Test name |
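The calculation behind this percentage can be sketched in a few lines of Python. The `availability_percentage` function and its list-of-booleans input are illustrative assumptions, not the service's actual implementation.

```python
def availability_percentage(results):
    """Return the share of passing web test runs as a percentage (0 to 100).

    `results` is a hypothetical list of booleans, one per test run,
    where True means the run passed its validation criteria.
    """
    if not results:
        raise ValueError("at least one test run is required")
    passed = sum(1 for ok in results if ok)
    return 100.0 * passed / len(results)
```

For example, three passing runs out of four give `availability_percentage([True, True, False, True])`, which evaluates to 75.0.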

Availability test duration (availabilityResults/duration)

The Availability test duration metric shows how much time it took for the web test to run. For the multi-step web tests, the metric reflects the total execution time of all steps.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | Run location, Test name, Test result |

Availability tests (availabilityResults/count)

The Availability tests metric reflects the count of web test runs performed by Azure Monitor.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Run location, Test name, Test result |

Browser metrics

Browser metrics are collected by the Application Insights JavaScript SDK from real end-user browsers. They provide insight into your users' experience with your web app. Browser metrics are typically not sampled, which means that they provide more precise usage numbers than server-side metrics, which might be skewed by sampling.

Note

To collect browser metrics, your application must be instrumented with the Application Insights JavaScript SDK.

Browser page load time (browserTimings/totalDuration)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | None |

Client processing time (browserTimings/processingDuration)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | None |

Page load network connect time (browserTimings/networkDuration)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | None |

Receiving response time (browserTimings/receiveDuration)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | None |

Send request time (browserTimings/sendDuration)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | None |

Failure metrics

The metrics in Failures show problems with processing requests, dependency calls, and thrown exceptions.

Browser exceptions (exceptions/browser)

This metric reflects the number of exceptions thrown from your application code running in the browser. Only exceptions that are tracked with a trackException() Application Insights API call are included in the metric.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role name |

Dependency call failures (dependencies/failed)

The number of failed dependency calls.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role instance, Cloud role name, Dependency performance, Dependency type, Is traffic synthetic, Result code, Target of dependency call |

Exceptions (exceptions/count)

Each time you log an exception to Application Insights, there's a call to the trackException() method of the SDK. The Exceptions metric shows the number of logged exceptions.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role instance, Cloud role name, Device type |

Failed requests (requests/failed)

The count of tracked server requests that were marked as failed. By default, the Application Insights SDK automatically marks each server request that returned HTTP response code 5xx or 4xx as a failed request. You can customize this logic by modifying the success property of the request telemetry item in a custom telemetry initializer.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role instance, Cloud role name, Is synthetic traffic, Request performance, Result code |
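The default rule and one possible customization can be sketched as follows. This is plain Python for illustration only: the dictionary-based request item, the `initialize` function, and the choice to treat 404 as successful are all hypothetical and don't correspond to a specific SDK's telemetry initializer interface.

```python
def default_success(response_code: int) -> bool:
    """Default rule described above: 4xx and 5xx responses count as failed."""
    return not (400 <= response_code <= 599)


def initialize(request: dict, ignored_codes=frozenset({404})) -> dict:
    """Hypothetical initializer that overrides the success flag.

    Response codes listed in `ignored_codes` are treated as successful,
    so they no longer contribute to the failed request count.
    """
    item = dict(request)  # copy so the caller's item is left untouched
    code = item["responseCode"]
    item["success"] = default_success(code) or code in ignored_codes
    return item
```

With this sketch, a request item with response code 404 ends up with `success` set to True, while a 500 response is still marked as failed.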

Server exceptions (exceptions/server)

This metric shows the number of server exceptions.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role instance, Cloud role name |

Performance counters

Use metrics in the Performance counters category to access system performance counters collected by Application Insights.

Available memory (performanceCounters/availableMemory)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Megabytes / Gigabytes (data dependent) | Avg, Max, Min | Cloud role instance |

Exception rate (performanceCounters/exceptionRate)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Avg, Max, Min | Cloud role instance |

HTTP request execution time (performanceCounters/requestExecutionTime)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | Cloud role instance |

HTTP request rate (performanceCounters/requestsPerSecond)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Requests per second | Avg, Max, Min | Cloud role instance |

HTTP requests in application queue (performanceCounters/requestsInQueue)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Avg, Max, Min | Cloud role instance |

Process CPU (performanceCounters/processCpuPercentage)

The metric shows how much of the total processor capacity is consumed by the process that is hosting your monitored app.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Percentage | Avg, Max, Min | Cloud role instance |

Note

The range of the metric is between 0 and 100 * n, where n is the number of available CPU cores. For example, a metric value of 200% could represent full utilization of two CPU cores or half utilization of four CPU cores, and so on. Process CPU Normalized is an alternative metric collected by many SDKs that represents the same value but divides it by the number of available CPU cores. Thus, the range of the Process CPU Normalized metric is 0 through 100.
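The relationship between the two metrics is simple arithmetic, sketched here in Python. The function name `normalize_process_cpu` is a hypothetical helper used only for illustration.

```python
def normalize_process_cpu(process_cpu_percent: float, cpu_cores: int) -> float:
    """Divide the Process CPU value by the number of available CPU cores.

    The input ranges from 0 to 100 * cpu_cores; the result ranges from 0 to 100.
    """
    if cpu_cores < 1:
        raise ValueError("cpu_cores must be at least 1")
    return process_cpu_percent / cpu_cores
```

A Process CPU value of 200 normalizes to 100.0 on a two-core machine and to 50.0 on a four-core machine, matching the example in the note.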

Process IO rate (performanceCounters/processIOBytesPerSecond)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Bytes per second | Avg, Max, Min | Cloud role instance |

Process private bytes (performanceCounters/processPrivateBytes)

Amount of nonshared memory that the monitored process allocated for its data.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Bytes | Avg, Max, Min | Cloud role instance |

Processor time (performanceCounters/processorCpuPercentage)

CPU consumption by all processes running on the monitored server instance.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Percentage | Avg, Max, Min | Cloud role instance |

Note

The Processor time metric isn't available for applications hosted in Azure App Service. Use the Process CPU metric to track CPU utilization of web applications hosted in App Service.

Server metrics

Dependency calls (dependencies/count)

This metric shows the number of dependency calls.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role instance, Cloud role name, Dependency performance, Dependency type, Is traffic synthetic, Result code, Successful call, Target of a dependency call |

Dependency duration (dependencies/duration)

This metric shows the duration of dependency calls.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | Cloud role instance, Cloud role name, Dependency performance, Dependency type, Is traffic synthetic, Result code, Successful call, Target of a dependency call |

Server request rate (requests/rate)

This metric reflects the number of incoming server requests that were received by your web application.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count per second | Avg | Cloud role instance, Cloud role name, Is traffic synthetic, Request performance, Result code, Successful request |

Server requests (requests/count)

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role instance, Cloud role name, Is traffic synthetic, Request performance, Result code, Successful request |

Server response time (requests/duration)

This metric reflects the time it took for the servers to process incoming requests.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | Cloud role instance, Cloud role name, Is traffic synthetic, Request performance, Result code, Successful request |

Usage metrics

Page view load time (pageViews/duration)

This metric refers to the amount of time it took for PageView events to load.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Milliseconds | Avg, Max, Min | Cloud role name, Is traffic synthetic |

Page views (pageViews/count)

The count of PageView events logged with the TrackPageView() Application Insights API.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role name, Is traffic synthetic |

Traces (traces/count)

The count of trace statements logged with the TrackTrace() Application Insights API call.

| Unit of measure | Supported aggregations | Supported dimensions |

|---|---|---|

| Count | Count | Cloud role instance, Cloud role name, Is traffic synthetic, Severity level |

Custom metrics

Access log-based metrics directly with the Application Insights REST API

The Application Insights REST API enables programmatic retrieval of log-based metrics. It also features an optional parameter, ai.include-query-payload, that, when added to a query string, prompts the API to return not only the time series data but also the Kusto Query Language (KQL) statement used to fetch it. This parameter is particularly useful for understanding the connection between raw events in Log Analytics and the resulting log-based metric.

To access your data directly, pass the parameter ai.include-query-payload to the Application Insights API in a query using KQL.

Note

To retrieve the underlying logs query, DEMO_APP and DEMO_KEY don't have to be replaced. If you just want to retrieve the KQL statement and not the time series data of your own application, you can copy and paste the following URL directly into your browser's address bar.

api.applicationinsights.io/v1/apps/DEMO_APP/metrics/users/authenticated?api_key=DEMO_KEY&prefer=ai.include-query-payload

The following is an example of a returned KQL statement for the metric "Authenticated Users." (In this example, "users/authenticated" is the metric ID.)

    {
        "value": {
            "start": "2024-06-21T09:14:25.450Z",
            "end": "2024-06-21T21:14:25.450Z",
            "users/authenticated": {
                "unique": 0
            }
        },
        "@ai.query": "union (traces | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (requests | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (pageViews | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (dependencies | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (customEvents | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (availabilityResults | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (exceptions | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (customMetrics | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)), (browserTimings | where timestamp >= datetime(2024-06-21T09:14:25.450Z) and timestamp < datetime(2024-06-21T21:14:25.450Z)) | where notempty(user_AuthenticatedId) | summarize ['users/authenticated_unique'] = dcount(user_AuthenticatedId)"
    }
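As a sketch of how a script might use this parameter, the following Python builds the request URL and pulls the KQL statement out of a response body. The helper names `build_metric_url` and `extract_query` are hypothetical, and the `https://` scheme is an assumption because the URL above is shown without one. The code makes no network call; fetching the URL is left to whatever HTTP client you prefer.

```python
import json
from urllib.parse import urlencode

# Assumed base address; the scheme isn't shown in the URL above.
BASE_URL = "https://api.applicationinsights.io/v1/apps"


def build_metric_url(app_id: str, metric_id: str, api_key: str) -> str:
    """Build a metrics URL that asks the API to include the query payload."""
    query = urlencode({"api_key": api_key, "prefer": "ai.include-query-payload"})
    return f"{BASE_URL}/{app_id}/metrics/{metric_id}?{query}"


def extract_query(response_body: str):
    """Return the KQL statement from a JSON response, or None if it's absent."""
    return json.loads(response_body).get("@ai.query")


url = build_metric_url("DEMO_APP", "users/authenticated", "DEMO_KEY")
```

Passing the JSON shown above to `extract_query` returns the string stored under the `@ai.query` key, which you can then run against the same data in Log Analytics.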