Generative answers based on public websites

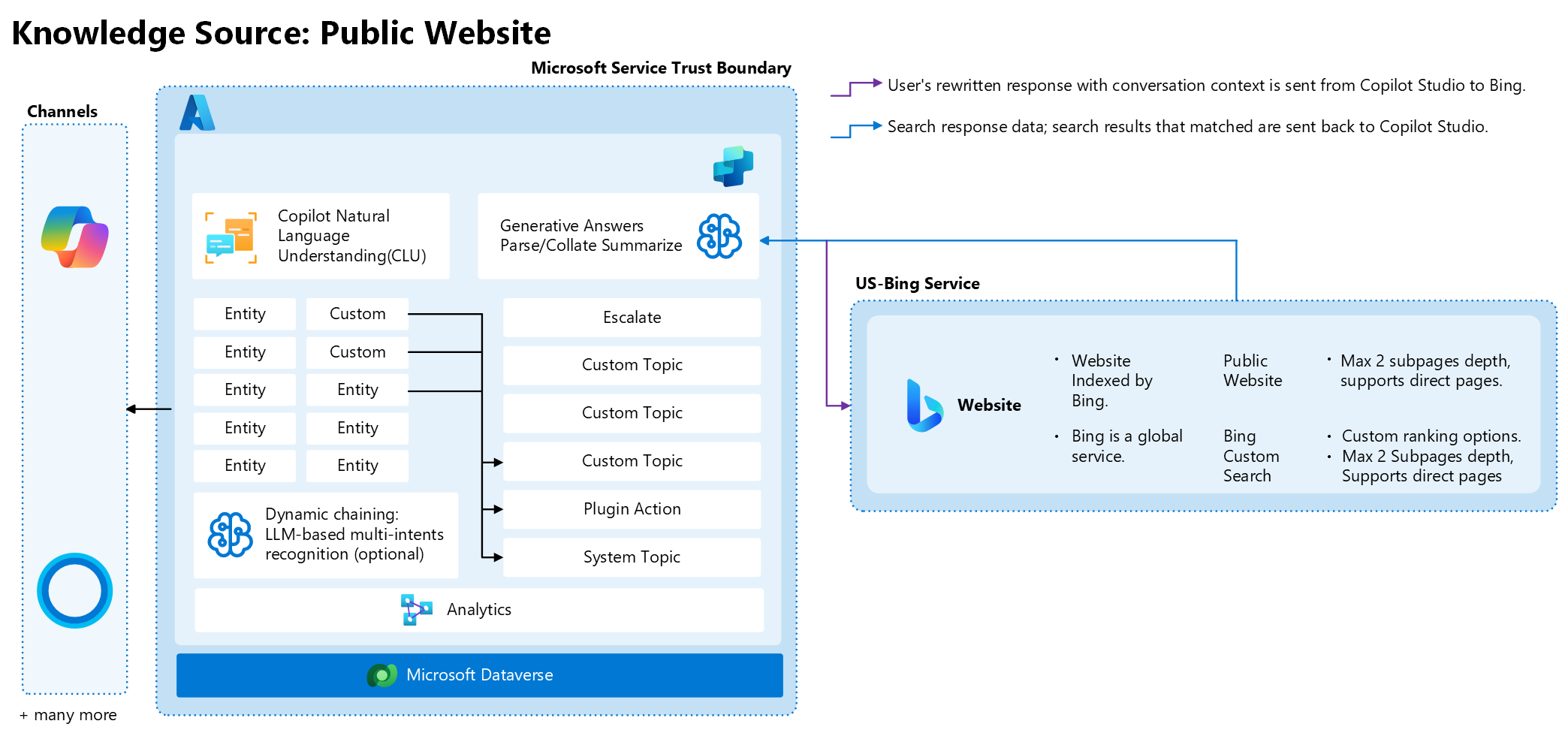

The following graphic illustrates the architecture when public websites or Bing Custom Search are used as a knowledge source:

How Microsoft Bing Custom Search provides results

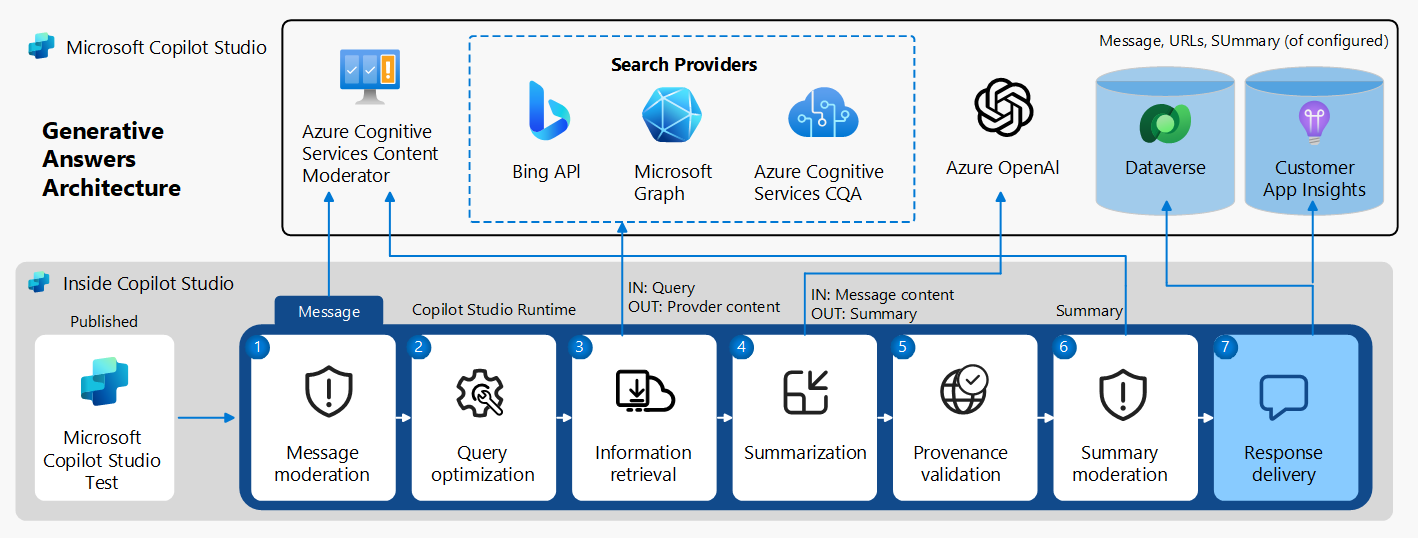

When a generative answers node is configured to use Bing Search, Copilot Studio performs the following operations:

- Message moderation: Parses the user's query and filters malicious content.

- Query optimization: Adds query context from the conversation history, such as location and time-related information.

- Information retrieval: Converts the user's input into a search query, which is passed to the Bing Custom Search service and restricted to the customer's configured domains.

Bing uses this search query, scoped to the configured domains, to deliver search results from the Bing Custom Search index. Because Bing Custom Search is a global service, it can't be confined to a regional boundary.
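The three preprocessing steps can be sketched in Python. This is a minimal illustration under stated assumptions, not Copilot Studio's implementation. The `Turn` type, the blocklist in `moderate`, the three-turn context window, and the use of `site:` operators to express the domain restriction are all hypothetical stand-ins. The real service applies the restriction through the customer's configured domain list.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Hypothetical blocklist standing in for a real moderation classifier.
BLOCKED_TERMS = ("ignore previous instructions",)


@dataclass
class Turn:
    role: str  # "user" or "agent"
    text: str


def moderate(utterance: str) -> bool:
    """Message moderation: True if the utterance passes the toy check."""
    lowered = utterance.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def rewrite_query(history: List[Turn], utterance: str,
                  today: Optional[date] = None, max_turns: int = 3) -> str:
    """Query optimization: prepend recent user turns and append a time hint."""
    recent = [t.text for t in history if t.role == "user"][-max_turns:]
    parts = recent + [utterance]
    if today is not None:
        parts.append(today.isoformat())
    return " ".join(parts)


def build_search_query(history: List[Turn], utterance: str, domains: List[str],
                       today: Optional[date] = None) -> Optional[str]:
    """Information retrieval: scope the rewritten query to configured domains."""
    if not moderate(utterance):
        return None  # filtered before any search request is made
    # Hypothetical: model the domain restriction with site: operators.
    scope = " OR ".join(f"site:{d}" for d in domains)
    return f"{rewrite_query(history, utterance, today)} ({scope})"
```

For example, suppose the earlier user turns are "parking permits" and "Seattle". The follow-up "how much do they cost" then becomes a self-contained query scoped to the configured domain.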

The search results are returned, and Copilot Studio performs the following operations:

- Collates and parses the relevant top results from a specified source or from the customer's configured domains.

- Performs grounding checks, provenance checks, and semantic similarity cross-checks.

- Summarizes the search results in plain language for the agent's user.

All content is checked twice: first during user input and again when the agent is about to respond. If the system finds harmful, offensive, or malicious content, it prevents your agent from responding.
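The double check can be pictured as a gate on both sides of generation. In this minimal sketch, `is_harmful` is a hypothetical keyword check standing in for a real content-safety classifier. `generate` is any function that drafts a response.

```python
from typing import Callable, Optional

# Hypothetical markers standing in for a real content-safety classifier.
HARMFUL_MARKERS = ("how to build a weapon",)


def is_harmful(text: str) -> bool:
    lowered = text.lower()
    return any(marker in lowered for marker in HARMFUL_MARKERS)


def moderated_reply(user_input: str,
                    generate: Callable[[str], str]) -> Optional[str]:
    """Return the reply, or None if either check blocks it."""
    if is_harmful(user_input):   # first check: the user's input
        return None
    draft = generate(user_input)
    if is_harmful(draft):        # second check: the response about to be sent
        return None
    return draft
```

Even a harmless question is blocked if the drafted answer fails the second check.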

Generative AI models

Generative AI models are hosted on internal Microsoft Azure OpenAI services, honoring the Microsoft Services Trust boundary. Models are accessed and used following Microsoft Responsible AI principles and policies.

Data exchange between Copilot Studio and Bing Custom Search

The user's rewritten utterance, including conversational context, is sent from Copilot Studio to Bing. The context is derived from the last few turns of the multi-turn conversation.

Bing then sends its response data (the search results) back to Copilot Studio.

During this data exchange, Copilot Studio doesn't collect or provide any customer data for use in training the generative AI models.

Exchange of personal data between Copilot Studio and Bing Custom Search

Microsoft doesn't send structured End User Pseudonymous Identifiers (EUPI) to Bing Search. An EUPI is an identifier created by Microsoft that is tied to the user of a Microsoft service.

However, if the user includes something that might be construed as personal data, generative answers doesn't detect, scrub, or mask it. Such data isn't removed because many authenticated use cases across industries require personal data for legitimate processing.

Predeveloped guardrails for generative answers

Microsoft policies require an assessment to demonstrate appropriate adherence to responsible AI practices before releasing Microsoft products and research that develop, deploy, or integrate generative AI capabilities. All harms for which Microsoft develops mitigations undergo thorough red teaming, where mitigations are tested for their prevalence. A generative AI system is deployed only after testing and mitigation implementations are complete.

Copilot Studio applies content moderation policies on all generative AI requests to protect against offensive or harmful content. These content moderation policies also extend to malicious attempts at jailbreaking, prompt injection, prompt exfiltration, and copyright infringement.

How generative answers prevent incorrect information from Bing Search results

Copilot Studio uses retrieval augmented generation (RAG), which separates retrieving search results from summarizing those results into a cohesive response. Search results returned from websites are checked for proper citations so they can be traced back to their source. The relevance of the search results to the user's question is also validated.

Note

If you turn on the "Allow the AI to use its own general knowledge" setting, the citation restriction is loosened.
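The citation and relevance checks can be sketched as a filter applied before summarization. This sketch uses a simple word-overlap (Jaccard) score in place of semantic similarity. It also uses a hypothetical threshold of 0.2. Copilot Studio's actual scoring isn't described in this article.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class SearchResult:
    url: Optional[str]  # source link used for the citation; None if missing
    snippet: str


def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(question: str, snippet: str) -> float:
    """Jaccard overlap of word sets, a crude stand-in for semantic similarity."""
    q, s = words(question), words(snippet)
    union = q | s
    return len(q & s) / len(union) if union else 0.0


def grounding_set(question: str, results: List[SearchResult],
                  threshold: float = 0.2) -> List[SearchResult]:
    """Keep results that can be cited (have a URL) and are relevant enough."""
    return [r for r in results
            if r.url and relevance(question, r.snippet) >= threshold]
```

A result without a source URL is dropped even if its text matches the question.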

Managing harmful content when generating responses from Bing Search results

Mitigations for toxic output and profanity in harmful content categories, such as hate, violence, sexual content, and self-harm, are available as predeveloped guardrails. User queries and search results returned from a website are checked for violations, and any questions or search results that contain such content are ignored.

In addition, generative AI prompts include instructions to ignore questions and search results classified as jailbreak attempts, prompt injection, or privacy violations.
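These guardrails can be pictured as per-category severity thresholds plus a set of labels that are always ignored. In this sketch, the category names, the severity scale, the threshold of 2, and the labels are all hypothetical. In practice, the scores would come from a content-safety classifier, which isn't modeled here.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical severity limits per harm category (0 = safe, higher = more severe).
THRESHOLDS: Dict[str, int] = {"hate": 2, "violence": 2, "sexual": 2, "self_harm": 2}
# Hypothetical labels that cause an item to be ignored outright.
IGNORED_LABELS: Set[str] = {"jailbreak", "prompt_injection", "privacy_violation"}


def is_allowed(severities: Dict[str, int], labels: Set[str]) -> bool:
    """Reject labeled items, then require every category to stay under its limit."""
    if labels & IGNORED_LABELS:
        return False
    return all(severities.get(cat, 0) < limit for cat, limit in THRESHOLDS.items())


def filter_items(items: List[Tuple[str, Dict[str, int], Set[str]]]) -> List[str]:
    """Each item is (text, per-category severities, labels) from a classifier."""
    return [text for text, sev, labels in items if is_allowed(sev, labels)]
```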

Customization of generative answers nodes to ignore personal data queries

You can write a custom prompt for your agent, or create custom node instructions, that detect personal data or sensitive business information. You can then instruct the generative answers node not to respond when such information is detected.

Note

This approach, however, doesn't prevent the personal data or sensitive business information from being sent to Bing Search or other knowledge sources.
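The following minimal sketch shows why this limitation matters. It assumes the detection runs as part of answer generation, after the search request has been made. `contains_pii` uses two illustrative regular expressions (email address and 10-digit phone number), which are far from complete personal-data detection. `search` is a hypothetical callable standing in for the knowledge source.

```python
import re
from typing import Callable, List, Optional

# Illustrative patterns only; real personal-data detection needs far more coverage.
PII_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),            # email address
    re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),  # 10-digit phone number
]


def contains_pii(text: str) -> bool:
    return any(p.search(text) for p in PII_PATTERNS)


def generative_answer(query: str,
                      search: Callable[[str], List[str]]) -> Optional[str]:
    results = search(query)  # the query has already been sent at this point
    if contains_pii(query):
        return None          # the custom instruction: don't respond
    return results[0] if results else None
```

Recording what `search` receives shows that the agent declines to answer, yet the query containing the email address still reached the knowledge source.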

Flow of personal data to generative answers

Generative answers are conversation aware: the generative answers node internally contextualizes the user's query using previous interactions in a multi-turn conversation. Queries from the last few turns are contextualized and become part of the query that the generative answers node rewrites.

AI Builder prompts or an Azure OpenAI model with personal data detection capabilities can identify personal data in agent conversations. However, checking only the user's last query before generating answers isn't sufficient, because personal data from earlier turns can still be carried into the rewritten query.
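A sketch makes the difference clear. One function checks only the last query. The other checks every turn that can be folded into the rewritten query. The three-turn window and the email-only `EMAIL` pattern are assumptions for illustration.

```python
import re
from typing import List

# Illustrative detector: email addresses only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def last_turn_has_pii(user_turns: List[str]) -> bool:
    """Checks only the latest query (insufficient)."""
    return bool(user_turns) and EMAIL.search(user_turns[-1]) is not None


def window_has_pii(user_turns: List[str], window: int = 3) -> bool:
    """Checks every turn that can be folded into the rewritten query."""
    return any(EMAIL.search(t) for t in user_turns[-window:])
```

Suppose a user shares an email address and then asks an unrelated question. The last-turn check passes, but the window check flags the conversation.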

Alternatives to generative answers without Bing as a knowledge source

Use an Azure AI Search index as a knowledge source in agents. This feature uses prebuilt Azure AI Search indexes as grounding data for agents. Azure AI Search provides a powerful search engine that can search through a large collection of documents. Because developers build the Azure AI Search indexes themselves, organizations can search their own content within their chosen geographies. They can still use the generative answers feature to craft a moderated and summarized answer with generative AI.

Users can also build a custom solution that uses a compliant search engine API, or that queries a content management system directly. The solution then transforms the results into data for the custom data source field in a generative answers node. This option suits scenarios where the data doesn't reside in one of the supported knowledge sources. In these scenarios, the agent receives grounding data through Power Automate flows or HTTP requests. These options typically return a JSON object, which you can then parse into a table to generate answers.
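The following Python function sketches the parsing step by turning a JSON response into table rows. The input shape (`items` with `title`, `link`, `snippet`) is a hypothetical search API response. The output column names `Content`, `ContentLocation`, and `Title` are an assumption about the custom data table, so confirm the schema your generative answers node expects. In Copilot Studio itself, this step would typically happen in the flow or in formulas rather than in Python.

```python
import json
from typing import Dict, List


def to_table(raw: str) -> List[Dict[str, str]]:
    """Map a hypothetical search API response to one row per usable result."""
    payload = json.loads(raw)
    rows = []
    for item in payload.get("items", []):
        if not item.get("snippet"):
            continue  # nothing to ground an answer on
        rows.append({
            "Content": item["snippet"],
            "ContentLocation": item.get("link", ""),  # used for citations
            "Title": item.get("title", ""),
        })
    return rows
```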

Security of data exchanged between Copilot Studio and Bing Search

Microsoft Search in Bing requests are made over HTTPS. The connection is encrypted end-to-end for enhanced security.

Data collection

With each transaction request to the Services, Microsoft might collect information from end users. This information includes, but isn't limited to, the end user's IP address, requests, time of submission, and the results returned to the end user. Microsoft doesn't claim ownership of any data, information, or content provided related to the feature.

All access to and use of the Services is subject to the data practices provided in the Privacy Statement.

Data retention

For Bing search queries, Microsoft de-identifies stored queries by removing the entirety of the IP address after six months, and cookie IDs and other cross-session identifiers that are used to identify a particular account or device after 18 months (https://www.microsoft.com/en-us/privacy/privacystatement#mainwherewestoreandprocessdatamodule).
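As a worked example of these retention windows, the sketch below computes the de-identification dates for a given query date. It makes two assumptions about how "six months" and "18 months" are measured. First, it counts in calendar months. Second, it clamps to the end of shorter months, so August 31, 2024 plus six months gives February 28, 2025.

```python
import calendar
from datetime import date
from typing import Dict


def add_months(d: date, months: int) -> date:
    """Shift by calendar months, clamping to the last day of shorter months."""
    total = d.month - 1 + months
    year, month = d.year + total // 12, total % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def deidentification_dates(query_date: date) -> Dict[str, date]:
    return {
        "ip_address_removed": add_months(query_date, 6),
        "cross_session_ids_removed": add_months(query_date, 18),
    }
```

For a query made on January 15, 2024, the sketch returns July 15, 2024 for IP address removal. For cookie IDs and other cross-session identifiers, it returns July 15, 2025.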

Bing search results

Real-time search involves complex, near-instantaneous algorithmic calculations. Bing uses algorithms to rank and optimize a stored index of available web pages to give users the best, highest-quality search results available. Crawling is how Bingbot (the Bing crawler) discovers new and updated pages and content to add to the search index.

Frequency of Bing web crawling

Bingbot (the Bing crawler) uses an algorithm to decide what to crawl and how often, working to minimize its impact on websites as it crawls billions of URLs every day. As Bingbot crawls the web, it sends information to Bing about what it finds. Bing prioritizes relevant known pages that aren't indexed yet and pages that were detected as updated. These pages are then added to the Bing index, where algorithms analyze them so they can be included in search results effectively. This analysis includes determining which sites, news articles, images, or videos are included in the index and available when users search for specific keywords.
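This prioritization can be sketched as a sort over known pages. The three-tier ordering is a simplification for illustration. Bingbot's actual scheduling also weighs relevance and impact on websites, and it isn't described in this article.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class KnownPage:
    url: str
    indexed: bool
    updated_since_crawl: bool


def crawl_priority(page: KnownPage) -> int:
    """Lower number = crawl sooner."""
    if not page.indexed:
        return 0  # known but not yet in the index
    if page.updated_since_crawl:
        return 1  # indexed, but changed since the last crawl
    return 2      # indexed and unchanged


def crawl_order(pages: List[KnownPage]) -> List[str]:
    # sorted() is stable, so pages with equal priority keep their input order.
    return [p.url for p in sorted(pages, key=crawl_priority)]
```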

Rank of search results

Bing relies on machine learning to ensure users see the best results for the query. The following are the main ranking parameters that affect searches within a URL provided as a knowledge source. The relative importance of each parameter might vary from search to search and evolve over time.

- Relevance: how well the content matches the user's intent behind a search query.

- User engagement: how users interact with webpages.

- Freshness: the algorithm prefers fresh content.
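A toy weighted score can show how the relative importance of these parameters might shift from search to search. The weights, the 0 to 1 scales, and the exponential freshness decay with a 30-day half-life are all hypothetical. Bing's ranking is machine-learned rather than a fixed formula like this one.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Candidate:
    url: str
    relevance: float   # 0..1: match with the intent behind the query
    engagement: float  # 0..1: normalized user-interaction signal
    age_days: float    # days since the content was last updated


def freshness(age_days: float, half_life_days: float = 30.0) -> float:
    """1.0 for brand-new content, halving every half_life_days."""
    return 0.5 ** (age_days / half_life_days)


def rank(cands: List[Candidate], weights: Dict[str, float]) -> List[str]:
    def score(c: Candidate) -> float:
        return (weights["relevance"] * c.relevance
                + weights["engagement"] * c.engagement
                + weights["freshness"] * freshness(c.age_days))
    return [c.url for c in sorted(cands, key=score, reverse=True)]
```

With weights that favor relevance, a highly relevant but stale page ranks first. With weights that favor engagement and freshness, a newer page wins.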

Bing designs and continually improves its algorithms to provide the most comprehensive, relevant, and valuable collection of search results available.

Generative answers node improvement of Bing search

Because users can ask out-of-context questions, you can improve Bing search results by providing additional, specific information in the generative answers custom prompt. This information guides the search engine toward relevant results. You can enrich user utterances and queries with specific data by using formulas, and then inject that data into the generative answers custom prompt.
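The sketch below illustrates enrichment by injecting context into a prompt template, so that a terse follow-up such as "Does it work offline?" names the product the user means. Python's `string.Template` stands in for Copilot Studio formulas here. The template text and variable names are hypothetical.

```python
from string import Template

# Hypothetical template; in Copilot Studio these values would come from
# variables or formulas injected into the generative answers custom prompt.
PROMPT = Template(
    "Answer questions about $product for customers in $region. "
    "User question: $question"
)


def enrich(question: str, product: str, region: str) -> str:
    # substitute() raises KeyError if a placeholder is left unfilled.
    return PROMPT.substitute(product=product, region=region, question=question)
```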

Best practices to improve Bing index creation

The following representative guidelines help sites get indexed effectively on Bing. They also help optimize sites to increase opportunities to rank for relevant queries in Bing's search results.

- Keeping sitemaps up to date so Bing can discover the URLs and content on websites.

- Using the IndexNow API or the Bing URL or Content Submission API to reflect website changes instantly.

- Linking all pages on a site to at least one other discoverable and crawlable page, as a signal for determining website popularity.

- Limiting the number of pages on a website.

- Using redirects as appropriate.

- Using dynamic rendering to switch between client-side rendered and prerendered content for Bingbot.

- Avoiding tags such as nofollow or noindex, which prevent search engines from following links or indexing webpages.

- Using a robots.txt file to inform search engine crawlers (Bingbot) which pages they can or can't access.
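You can check how a robots.txt file treats Bingbot by testing it locally before publishing it. This sketch uses Python's standard `urllib.robotparser` module. The rules and URLs are illustrative. The standard-library parser applies simplified first-match semantics that may differ from how Bingbot resolves overlapping rules.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: Bingbot may crawl everything except /internal/,
# and all other crawlers are blocked.
ROBOTS_TXT = """\
User-agent: bingbot
Disallow: /internal/

User-agent: *
Disallow: /
"""


def crawler_can_fetch(url: str, user_agent: str = "bingbot",
                      robots_txt: str = ROBOTS_TXT) -> bool:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() takes an iterable of lines
    return parser.can_fetch(user_agent, url)
```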

Note

Search Engine Optimization (SEO) is a specialized skill best managed by the CBA SEO/Content Management teams within your organization. For more information, see the Bing Webmaster Guidelines.