Migrate Spark pools from Azure Synapse Analytics to Fabric

While Azure Synapse provides Spark pools, Fabric offers Starter pools and Custom pools. The Starter pool can be a good choice if you have a single pool with no custom configurations or libraries in Azure Synapse, and if the Medium node size meets your requirements. However, if you need more flexibility in your Spark pool configuration, we recommend using Custom pools. There are two options:

- Option 1: Move your Spark pool to a workspace's default pool.

- Option 2: Move your Spark pool to a custom environment in Fabric.

If you have more than one Spark pool and you plan to move them to the same Fabric workspace, we recommend Option 2: create multiple custom environments and pools.
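The guidance above can be read as a small decision rule. The following Python sketch is only an illustration of that rule; the function name `recommend_target`, its parameters, and the `Target` enum are hypothetical and are not part of any Fabric or Synapse API. It also assumes that a need for pool-level libraries or Spark properties points to Option 2, since the workspace default pool does not support them.

```python
from enum import Enum


class Target(Enum):
    STARTER_POOL = "Starter pool"
    DEFAULT_CUSTOM_POOL = "Option 1: custom pool as the workspace default pool"
    CUSTOM_ENVIRONMENT = "Option 2: custom pool with a custom environment"


def recommend_target(pool_count: int,
                     needs_custom_config_or_libraries: bool,
                     medium_node_size_is_enough: bool) -> Target:
    """Hypothetical helper that encodes the recommendations in this article."""
    if pool_count < 1:
        raise ValueError("pool_count must be at least 1")
    # Several pools moving to one workspace, or pool-level libraries and
    # Spark properties, both point to custom environments.
    if pool_count > 1 or needs_custom_config_or_libraries:
        return Target.CUSTOM_ENVIRONMENT
    # A single plain pool that fits the Medium node size can use the Starter pool.
    if medium_node_size_is_enough:
        return Target.STARTER_POOL
    # A single plain pool that needs another node size gets a custom default pool.
    return Target.DEFAULT_CUSTOM_POOL


print(recommend_target(1, False, True).value)
print(recommend_target(3, False, True).value)
```

Treat the result as a starting point; the Spark pool considerations may still change the choice for a particular workload.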

For Spark pool considerations, refer to differences between Azure Synapse Spark and Fabric.

Prerequisites

If you don’t have one already, create a Fabric workspace in your tenant.

Option 1: From Spark pool to workspace's default pool

You can create a custom Spark pool from your Fabric workspace and use it as the default pool in the workspace. The default pool is used by all notebooks and Spark job definitions in the same workspace.

To move an existing Spark pool from Azure Synapse to a workspace default pool:

- Access the Azure Synapse workspace: Sign in to Azure. Navigate to your Azure Synapse workspace, go to Analytics Pools, and select Apache Spark pools.

- Locate the Spark pool: From Apache Spark pools, locate the Spark pool you want to move to Fabric and check the pool Properties.

- Get properties: Note the Spark pool properties, such as Apache Spark version, node size family, node size, and autoscale settings. Refer to Spark pool considerations to see any differences.

- Create a custom Spark pool in Fabric:

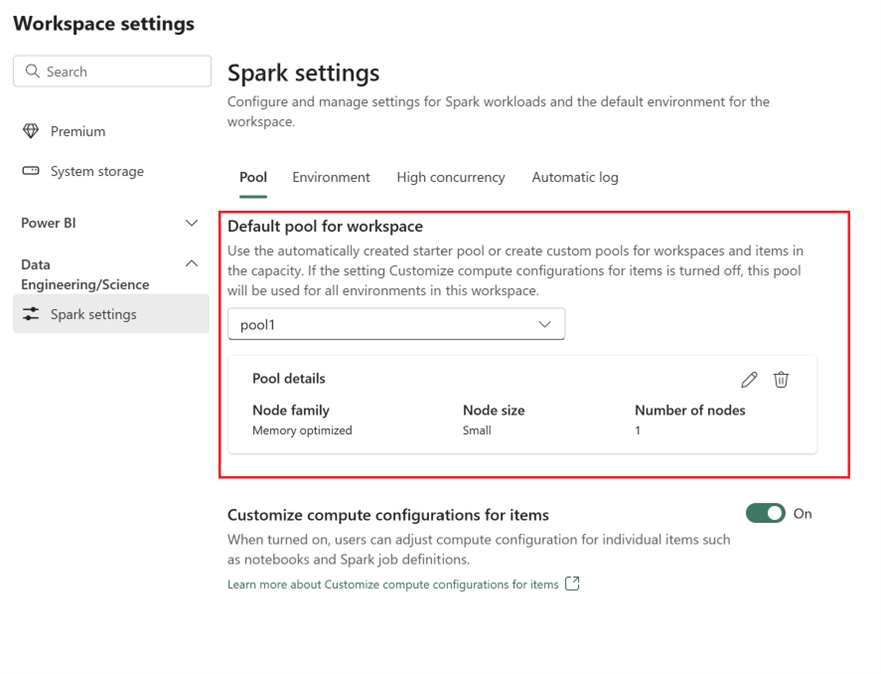

- Go to your Fabric workspace and select Workspace settings.

- Go to Data Engineering/Science and select Spark settings.

- From the Pool tab and in Default pool for workspace section, expand the dropdown menu and select create New pool.

- Create your custom pool with the corresponding target values. Fill the name, node family, node size, autoscaling and dynamic executor allocation options.

- Select a runtime version:

  - Go to the Environment tab and select the required Runtime Version. See available runtimes here.

  - Disable the Set default environment option.

Note

With this option, pool-level libraries and configurations are not supported. However, you can adjust the compute configuration for individual items, such as notebooks and Spark job definitions, and add inline libraries. If you need to add custom libraries and configurations to an environment, consider a custom environment.
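The properties gathered from the Synapse pool map fairly directly onto the fields of the New pool dialog. The following Python sketch collects them into a checklist that you can copy from. It is an illustration under assumptions: the input keys (`nodeSize`, `nodeSizeFamily`, `autoScale`, `dynamicExecutorAllocation`, `sparkVersion`) are assumed to resemble the JSON that Azure tooling such as `az synapse spark pool show` returns, the output keys and the function name `fabric_pool_settings` are hypothetical, and the example values do not come from a real pool.

```python
from typing import Any, Dict, Optional


def fabric_pool_settings(synapse_pool: Dict[str, Any],
                         new_pool_name: str) -> Dict[str, Optional[Any]]:
    """Collect the values to enter in the Fabric New pool dialog.

    Missing properties stay None so that gaps are visible instead of
    being filled with guessed defaults.
    """
    autoscale = synapse_pool.get("autoScale") or {}
    dynamic = synapse_pool.get("dynamicExecutorAllocation") or {}
    return {
        "name": new_pool_name,
        "node_family": synapse_pool.get("nodeSizeFamily"),
        "node_size": synapse_pool.get("nodeSize"),
        "autoscale_enabled": autoscale.get("enabled"),
        "autoscale_min_nodes": autoscale.get("minNodeCount"),
        "autoscale_max_nodes": autoscale.get("maxNodeCount"),
        "dynamic_allocation_enabled": dynamic.get("enabled"),
        "dynamic_allocation_min_executors": dynamic.get("minExecutors"),
        "dynamic_allocation_max_executors": dynamic.get("maxExecutors"),
        # Kept for the runtime selection step; the runtime is chosen on the
        # Environment tab, not in the pool dialog.
        "source_spark_version": synapse_pool.get("sparkVersion"),
    }


# Example values only; adjust the keys to match your own export.
example_pool = {
    "sparkVersion": "3.4",
    "nodeSizeFamily": "MemoryOptimized",
    "nodeSize": "Medium",
    "autoScale": {"enabled": True, "minNodeCount": 3, "maxNodeCount": 10},
    "dynamicExecutorAllocation": {"enabled": False},
}

settings = fabric_pool_settings(example_pool, "migrated-pool")
for field, value in settings.items():
    print(f"{field}: {value}")
```

Any field that prints as `None` is a property to look up in the Synapse pool before creating the Fabric pool. Check each value against the Spark pool considerations, because not every Synapse setting necessarily has an identical counterpart in Fabric.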

Option 2: From Spark pool to custom environment

With custom environments, you can set up custom Spark properties and libraries. To create a custom environment:

- Access the Azure Synapse workspace: Sign in to Azure. Navigate to your Azure Synapse workspace, go to Analytics Pools, and select Apache Spark pools.

- Locate the Spark pool: From Apache Spark pools, locate the Spark pool you want to move to Fabric and check the pool Properties.

- Get properties: Note the Spark pool properties, such as Apache Spark version, node size family, node size, and autoscale settings. Refer to Spark pool considerations to see any differences.

- Create a custom Spark pool:

  - Go to your Fabric workspace and select Workspace settings.

  - Go to Data Engineering/Science and select Spark settings.

  - On the Pool tab, in the Default pool for workspace section, expand the dropdown menu and select New pool.

  - Create your custom pool with the corresponding target values. Fill in the name, node family, node size, autoscaling, and dynamic executor allocation options.

- Create an Environment item if you don’t have one.

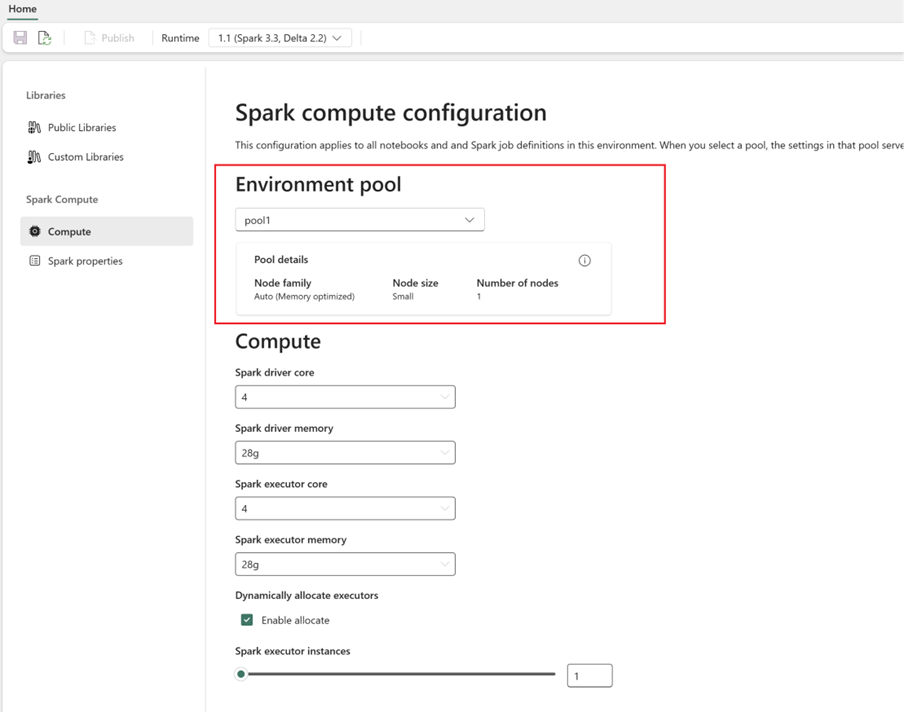

- Configure Spark compute:

  - Within the Environment, go to Spark Compute > Compute.

  - Select the newly created pool for the new environment.

  - Optionally, configure the driver and executor cores and memory.

- Select a runtime version for the environment. See available runtimes here.

- Select Save, and then Publish the changes.
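When you select the runtime version, it helps to start from the Apache Spark version you noted for the Synapse pool. The sketch below looks up a Fabric runtime with the same Spark major and minor version. The mapping table is an assumption based on the Spark versions commonly listed for Fabric runtimes 1.1, 1.2, and 1.3; runtimes are added and retired over time, so confirm the result against the list of available runtimes. The function name `matching_fabric_runtime` is hypothetical.

```python
from typing import Optional

# Assumption: Spark major.minor version shipped with each Fabric runtime.
# Verify against the current list of available runtimes before relying on it.
FABRIC_RUNTIME_BY_SPARK = {
    "3.3": "1.1",
    "3.4": "1.2",
    "3.5": "1.3",
}


def matching_fabric_runtime(synapse_spark_version: str) -> Optional[str]:
    """Return the Fabric runtime with the same Spark major.minor, if any."""
    # Reduce values such as "3.4.1" to "3.4" before the lookup.
    major_minor = ".".join(synapse_spark_version.strip().split(".")[:2])
    return FABRIC_RUNTIME_BY_SPARK.get(major_minor)


for version in ("3.4", "3.3.1", "2.4"):
    runtime = matching_fabric_runtime(version)
    if runtime is None:
        print(f"Spark {version}: no matching runtime in the table, plan an upgrade")
    else:
        print(f"Spark {version}: Fabric Runtime {runtime}")
```

A result of `None` means the table has no runtime for that Spark version. In that case, choose the closest newer runtime and test the workload against it.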

Learn more about creating and using an Environment.