How to generate image embeddings with Azure AI model inference

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

This article explains how to use the image embeddings API with models deployed to Azure AI model inference in Azure AI Foundry.

Prerequisites

To use embedding models in your application, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

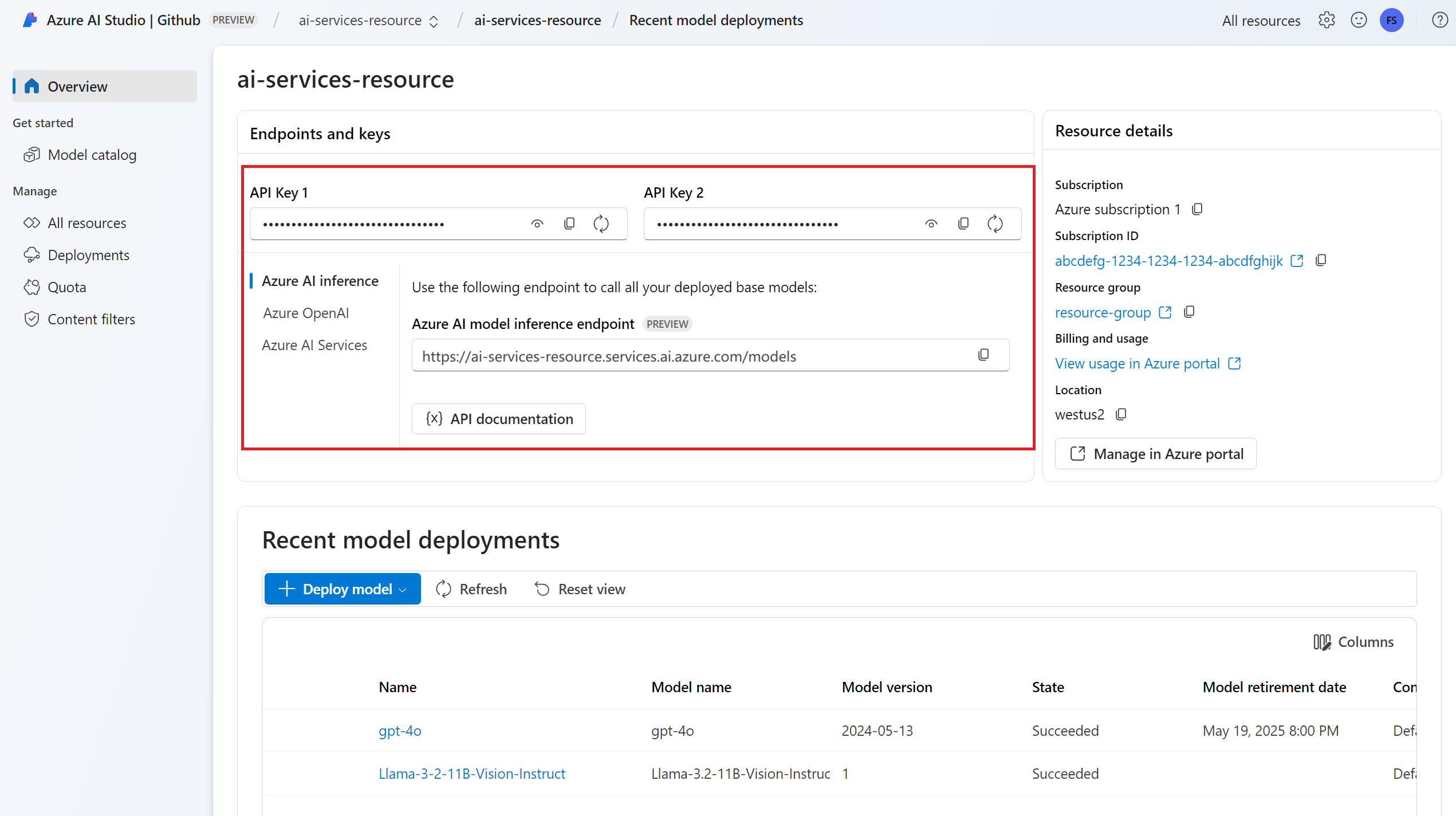

An Azure AI services resource. For more information, see Create an Azure AI Services resource.

The endpoint URL and key.

An image embeddings model deployment. If you don't have one, read Add and configure models to Azure AI services to add an embeddings model to your resource.

- This example uses Cohere-embed-v3-english from Cohere.

Install the Azure AI inference package with the following command:

pip install -U azure-ai-inference

Tip

Read more about the Azure AI inference package and reference.

Use image embeddings

First, create the client to consume the model. The following code uses an endpoint URL and key that are stored in environment variables.

import os
from azure.ai.inference import ImageEmbeddingsClient
from azure.core.credentials import AzureKeyCredential

# Endpoint and key are read from environment variables.
model = ImageEmbeddingsClient(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=AzureKeyCredential(os.environ["AZURE_INFERENCE_CREDENTIAL"]),
    model="Cohere-embed-v3-english",
)

If you configured the resource with Microsoft Entra ID support, you can use the following code snippet to create a client.

import os
from azure.ai.inference import ImageEmbeddingsClient
from azure.identity import DefaultAzureCredential

# No key is needed: the credential is resolved from the environment.
model = ImageEmbeddingsClient(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=DefaultAzureCredential(),
    model="Cohere-embed-v3-english",
)

Create embeddings

To create image embeddings, you need to pass the image data as part of your request. Image data should be in PNG format and encoded as base64.

from azure.ai.inference.models import ImageEmbeddingInput

# Load the PNG file from disk as an embedding input.
image_input = ImageEmbeddingInput.load(image_file="sample1.png", image_format="png")

response = model.embed(
    input=[image_input],
)
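To make the encoding requirement concrete, here is a minimal sketch of turning raw PNG bytes into the base64 data URL form that the REST examples in this article use (data:image/png;base64,...). It uses only the Python standard library. The helper name to_png_data_url is hypothetical, and the 8-byte PNG signature stands in for a real image file.

```python
import base64


def to_png_data_url(png_bytes: bytes) -> str:
    """Return a data URL that carries the given PNG bytes as base64 text."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return f"data:image/png;base64,{encoded}"


# Stand-in for real file contents: the 8-byte signature every PNG starts with.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

data_url = to_png_data_url(PNG_SIGNATURE)
print(data_url)
```

With a real file, you would pass the bytes read from disk, for example open("sample1.png", "rb").read().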

Tip

When creating a request, take into account the model's input token limit. If you need to embed larger portions of text, you need a chunking strategy.

The response is as follows, where you can see the model's usage statistics:

import numpy as np

# Print the dimensionality of each returned embedding.
for embed in response.data:
    print("Embedding of size:", np.asarray(embed.embedding).shape)

print("Model:", response.model)
print("Usage:", response.usage)

Important

Computing embeddings in batches might not be supported for all models. For example, for the Cohere-embed-v3-english model, you need to send one image at a time.
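When a model accepts only one image per request, the calls can be wrapped in a loop. The following is a sketch of that control flow, not part of the SDK: embed_one_at_a_time and fake_embed are hypothetical names, and fake_embed only stands in for a real service call so the example runs without a deployment.

```python
from typing import Callable, List, Sequence


def embed_one_at_a_time(
    embed_fn: Callable[[list], Sequence[Sequence[float]]],
    inputs: Sequence[object],
) -> List[Sequence[float]]:
    """Call embed_fn once per input and collect the vectors in input order."""
    vectors: List[Sequence[float]] = []
    for item in inputs:
        # Each request carries exactly one input.
        vectors.extend(embed_fn([item]))
    return vectors


def fake_embed(batch: list) -> List[List[float]]:
    """Stand-in for a service call; rejects batches larger than one."""
    if len(batch) != 1:
        raise ValueError("only one input per request is supported")
    return [[float(len(str(batch[0])))]]


vectors = embed_one_at_a_time(fake_embed, ["a.png", "bb.png"])
print(vectors)
```

With the client created earlier, embed_fn could be a small function that calls model.embed(input=batch) and returns [item.embedding for item in response.data].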

Embedding images and text pairs

Some models can generate embeddings from image and text pairs. In this case, you can use the image and text fields in the request to pass the image and text to the model. The following example shows how to create embeddings for image and text pairs:

text_image_input = ImageEmbeddingInput.load(image_file="sample1.png", image_format="png")
# Attach the text that accompanies the image.
text_image_input.text = "A cute baby sea otter"

response = model.embed(
    input=[text_image_input],
)

Create different types of embeddings

Some models can generate multiple embeddings for the same input depending on how you plan to use them. This capability allows you to retrieve more accurate embeddings for RAG patterns.

The following example shows how to create embeddings for a document that will be stored in a vector database:

from azure.ai.inference.models import EmbeddingInputType

response = model.embed(
    input=[image_input],
    input_type=EmbeddingInputType.DOCUMENT,
)

When you work on a query to retrieve such a document, you can use the following code snippet to create the embeddings for the query and maximize the retrieval performance.

from azure.ai.inference.models import EmbeddingInputType

response = model.embed(
    input=[image_input],
    input_type=EmbeddingInputType.QUERY,
)

Not all embedding models support indicating the input type in the request. In those cases, a 422 error is returned.
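One way to handle the 422 case is to retry the request without the input type. The sketch below illustrates that idea under stated assumptions: embed_with_optional_input_type, FakeHttpError, and fake_embed are hypothetical names, and the code assumes the raised error exposes the HTTP status code in a status_code attribute.

```python
def embed_with_optional_input_type(embed_fn, inputs, input_type):
    """Try the call with input_type; retry without it on a 422 error."""
    try:
        return embed_fn(input=inputs, input_type=input_type)
    except Exception as exc:
        # Assumption: the error exposes the HTTP status as `status_code`.
        if getattr(exc, "status_code", None) == 422:
            return embed_fn(input=inputs)
        raise


class FakeHttpError(Exception):
    """Stand-in for an HTTP error raised by a client library."""

    def __init__(self, status_code: int):
        super().__init__(f"HTTP {status_code}")
        self.status_code = status_code


def fake_embed(input, input_type=None):
    """Stand-in for a model that rejects any explicit input type."""
    if input_type is not None:
        raise FakeHttpError(422)
    return {"inputs": len(input), "input_type": input_type}


result = embed_with_optional_input_type(fake_embed, ["img"], "document")
print(result)
```

Falling back silently changes which kind of embedding you get, so in a real application you might prefer to log the fallback or fail fast instead.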

Install the Azure Inference library for JavaScript with the following command:

npm install @azure-rest/ai-inference

Tip

Read more about the Azure AI inference package and reference.

Use image embeddings

First, create the client to consume the model. The following code uses an endpoint URL and key that are stored in environment variables.

import ModelClient from "@azure-rest/ai-inference";
import { isUnexpected } from "@azure-rest/ai-inference";
import { AzureKeyCredential } from "@azure/core-auth";

// Endpoint and key are read from environment variables.
const client = new ModelClient(
    process.env.AZURE_INFERENCE_ENDPOINT,
    new AzureKeyCredential(process.env.AZURE_INFERENCE_CREDENTIAL)
);

If you configured the resource with Microsoft Entra ID support, you can use the following code snippet to create a client.

import ModelClient from "@azure-rest/ai-inference";
import { isUnexpected } from "@azure-rest/ai-inference";
import { DefaultAzureCredential } from "@azure/identity";

const client = new ModelClient(
    process.env.AZURE_INFERENCE_ENDPOINT,
    new DefaultAzureCredential()
);

Create embeddings

To create image embeddings, you need to pass the image data as part of your request. Image data should be in PNG format and encoded as base64.

import fs from "fs";

// Read the PNG file and encode its bytes as base64.
var image_path = "sample1.png";
var image_data = fs.readFileSync(image_path);
var image_data_base64 = Buffer.from(image_data).toString("base64");

var response = await client.path("/images/embeddings").post({
    body: {
        input: [ { image: image_data_base64 } ],
        model: "Cohere-embed-v3-english",
    }
});

Tip

When creating a request, take into account the model's input token limit. If you need to embed larger portions of text, you need a chunking strategy.

The response is as follows, where you can see the model's usage statistics:

if (isUnexpected(response)) {
    throw response.body.error;
}

// The embeddings are returned in the data array of the response body.
console.log(response.body.data);
console.log(response.body.model);
console.log(response.body.usage);

Important

Computing embeddings in batches might not be supported for all models. For example, for the Cohere-embed-v3-english model, you need to send one image at a time.

Embedding images and text pairs

Some models can generate embeddings from image and text pairs. In this case, you can use the image and text fields in the request to pass the image and text to the model. The following example shows how to create embeddings for image and text pairs:

var image_path = "sample1.png";
var image_data = fs.readFileSync(image_path);
var image_data_base64 = Buffer.from(image_data).toString("base64");

var response = await client.path("/images/embeddings").post({
    body: {
        input: [
            {
                text: "A cute baby sea otter",
                image: image_data_base64
            }
        ],
        model: "Cohere-embed-v3-english",
    }
});

Create different types of embeddings

Some models can generate multiple embeddings for the same input depending on how you plan to use them. This capability allows you to retrieve more accurate embeddings for RAG patterns.

The following example shows how to create embeddings for a document that will be stored in a vector database:

var response = await client.path("/images/embeddings").post({
    body: {
        input: [ { image: image_data_base64 } ],
        input_type: "document",
        model: "Cohere-embed-v3-english",
    }
});

When you work on a query to retrieve such a document, you can use the following code snippet to create the embeddings for the query and maximize the retrieval performance.

var response = await client.path("/images/embeddings").post({
    body: {
        input: [ { image: image_data_base64 } ],
        input_type: "query",
        model: "Cohere-embed-v3-english",
    }
});

Not all embedding models support indicating the input type in the request. In those cases, a 422 error is returned.

Note

Image embeddings are supported only with Python, JavaScript, C#, or REST requests.

Install the Azure AI inference package with the following command:

dotnet add package Azure.AI.Inference --prerelease

Tip

Read more about the Azure AI inference package and reference.

If you're using Entra ID, you also need the following package:

dotnet add package Azure.Identity

Use image embeddings

First, create the client to consume the model. The following code uses an endpoint URL and key that are stored in environment variables.

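// The image embeddings examples that follow use ImageEmbeddingsOptions, so create the matching image client.
ImageEmbeddingsClient client = new ImageEmbeddingsClient(
    new Uri(Environment.GetEnvironmentVariable("AZURE_INFERENCE_ENDPOINT")),
    new AzureKeyCredential(Environment.GetEnvironmentVariable("AZURE_INFERENCE_CREDENTIAL"))
);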

If you configured the resource with Microsoft Entra ID support, you can use the following code snippet to create a client. Notice that includeInteractiveCredentials is set to true only for demonstration purposes so authentication can happen using the web browser. For production workloads, you should remove the parameter.

TokenCredential credential = new DefaultAzureCredential(includeInteractiveCredentials: true);
AzureAIInferenceClientOptions clientOptions = new AzureAIInferenceClientOptions();
BearerTokenAuthenticationPolicy tokenPolicy = new BearerTokenAuthenticationPolicy(credential, new string[] { "https://cognitiveservices.azure.com/.default" });

clientOptions.AddPolicy(tokenPolicy, HttpPipelinePosition.PerRetry);

client = new ImageEmbeddingsClient(
    new Uri(Environment.GetEnvironmentVariable("AZURE_INFERENCE_ENDPOINT")),
    credential,
    clientOptions
);

Create embeddings

To create image embeddings, you need to pass the image data as part of your request. Image data should be in PNG format and encoded as base64.

// Load the PNG file from disk as an embedding input.
List<ImageEmbeddingInput> input = new List<ImageEmbeddingInput>
{
    ImageEmbeddingInput.Load(imageFilePath: "sampleImage.png", imageFormat: "png")
};

var requestOptions = new ImageEmbeddingsOptions()
{
    Input = input,
    Model = "Cohere-embed-v3-english"
};

Response<EmbeddingsResult> response = client.Embed(requestOptions);

Tip

When creating a request, take into account the model's input token limit. If you need to embed larger portions of text, you need a chunking strategy.

The response is as follows, where you can see the model's usage statistics:

foreach (EmbeddingItem item in response.Value.Data)
{
    // Each embedding is returned as JSON; deserialize it to a list of floats.
    List<float> embedding = item.Embedding.ToObjectFromJson<List<float>>();
    Console.WriteLine($"Index: {item.Index}, Embedding: <{string.Join(", ", embedding)}>");
}

Important

Computing embeddings in batches might not be supported for all models. For example, for the Cohere-embed-v3-english model, you need to send one image at a time.

Embedding images and text pairs

Some models can generate embeddings from image and text pairs. In this case, you can use the image and text fields in the request to pass the image and text to the model. The following example shows how to create embeddings for image and text pairs:

var image_input = ImageEmbeddingInput.Load(imageFilePath: "sampleImage.png", imageFormat: "png");
// Attach the text that accompanies the image.
image_input.Text = "A cute baby sea otter";

var requestOptions = new ImageEmbeddingsOptions()
{
    Input = new List<ImageEmbeddingInput>
    {
        image_input
    },
    Model = "Cohere-embed-v3-english"
};

Response<EmbeddingsResult> response = client.Embed(requestOptions);

Create different types of embeddings

Some models can generate multiple embeddings for the same input depending on how you plan to use them. This capability allows you to retrieve more accurate embeddings for RAG patterns.

The following example shows how to create embeddings for a document that will be stored in a vector database:

var requestOptions = new ImageEmbeddingsOptions()
{
    Input = new List<ImageEmbeddingInput> { image_input },
    InputType = EmbeddingInputType.Document,
    Model = "Cohere-embed-v3-english"
};

Response<EmbeddingsResult> response = client.Embed(requestOptions);

When you work on a query to retrieve such a document, you can use the following code snippet to create the embeddings for the query and maximize the retrieval performance.

var requestOptions = new ImageEmbeddingsOptions()
{
    Input = new List<ImageEmbeddingInput> { image_input },
    InputType = EmbeddingInputType.Query,
    Model = "Cohere-embed-v3-english"
};

Response<EmbeddingsResult> response = client.Embed(requestOptions);

Not all embedding models support indicating the input type in the request. In those cases, a 422 error is returned.

Use image embeddings

To use image embeddings, use the route /images/embeddings appended to your base URL, along with your credential indicated in the api-key header. The Authorization header is also supported, with the format Bearer <key>.

POST https://<resource>.services.ai.azure.com/models/images/embeddings?api-version=2024-05-01-preview
Content-Type: application/json
api-key: <key>

If you configured the resource with Microsoft Entra ID support, pass your token in the Authorization header:

POST https://<resource>.services.ai.azure.com/models/images/embeddings?api-version=2024-05-01-preview
Content-Type: application/json
Authorization: Bearer <token>

Create embeddings

To create image embeddings, you need to pass the image data as part of your request. Image data should be in PNG format and encoded as base64.

{
    "model": "Cohere-embed-v3-english",
    "input": [
        {
            "image": "data:image/png;base64,iVBORw0KGgoAAAANSUh..."
        }
    ]
}
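The route, headers, and body shown above can be assembled into a single request object. The following sketch does so with Python's standard urllib.request module and does not send anything over the network. The function name build_image_embeddings_request is hypothetical, and the placeholders <resource> and <key> are kept exactly as they appear in this article.

```python
import json
import urllib.request


def build_image_embeddings_request(base_url, api_key, model, image_data_url):
    """Build (but do not send) a POST request for the image embeddings route."""
    url = f"{base_url}/images/embeddings?api-version=2024-05-01-preview"
    body = {"model": model, "input": [{"image": image_data_url}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


request = build_image_embeddings_request(
    "https://<resource>.services.ai.azure.com/models",
    "<key>",
    "Cohere-embed-v3-english",
    "data:image/png;base64,iVBORw0KGgoAAAANSUh...",
)
print(request.get_method(), request.full_url)
```

With real values in place of the placeholders, the request could be sent with urllib.request.urlopen(request).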

Tip

When creating a request, take into account the model's input token limit. If you need to embed larger portions of text, you need a chunking strategy.

The response is as follows, where you can see the model's usage statistics:

{
    "id": "0ab1234c-d5e6-7fgh-i890-j1234k123456",
    "object": "list",
    "data": [
        {
            "index": 0,
            "object": "embedding",
            "embedding": [
                0.017196655,
                // ...
                -0.000687122,
                -0.025054932,
                -0.015777588
            ]
        }
    ],
    "model": "Cohere-embed-v3-english",
    "usage": {
        "prompt_tokens": 9,
        "completion_tokens": 0,
        "total_tokens": 9
    }
}
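A response of this shape can be unpacked with a few lines of code. The sketch below parses a shortened copy of the response above (the elided values are left out, so the vector here has only four entries) and pulls out the vectors, the model name, and the token usage. The function name summarize_embeddings_response is hypothetical.

```python
import json


def summarize_embeddings_response(payload: str):
    """Return (summary, vectors) extracted from an embeddings response body."""
    doc = json.loads(payload)
    # Order the items by their index so vectors line up with the inputs.
    items = sorted(doc["data"], key=lambda item: item["index"])
    vectors = [item["embedding"] for item in items]
    summary = {
        "model": doc["model"],
        "dimensions": [len(vector) for vector in vectors],
        "total_tokens": doc["usage"]["total_tokens"],
    }
    return summary, vectors


sample_payload = json.dumps({
    "object": "list",
    "data": [
        {
            "index": 0,
            "object": "embedding",
            "embedding": [0.017196655, -0.000687122, -0.025054932, -0.015777588],
        }
    ],
    "model": "Cohere-embed-v3-english",
    "usage": {"prompt_tokens": 9, "completion_tokens": 0, "total_tokens": 9},
})

summary, vectors = summarize_embeddings_response(sample_payload)
print(summary)
```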

Important

Computing embeddings in batches might not be supported for all models. For example, for the Cohere-embed-v3-english model, you need to send one image at a time.

Embedding images and text pairs

Some models can generate embeddings from image and text pairs. In this case, you can use the image and text fields in the request to pass the image and text to the model. The following example shows how to create embeddings for image and text pairs:

{
    "model": "Cohere-embed-v3-english",
    "input": [
        {
            "image": "data:image/png;base64,iVBORw0KGgoAAAANSUh...",
            "text": "A photo of a cat"
        }
    ]
}

Create different types of embeddings

Some models can generate multiple embeddings for the same input depending on how you plan to use them. This capability allows you to retrieve more accurate embeddings for RAG patterns.

The following example shows how to create embeddings for a document that will be stored in a vector database:

{
    "model": "Cohere-embed-v3-english",
    "input": [
        {
            "image": "data:image/png;base64,iVBORw0KGgoAAAANSUh..."
        }
    ],
    "input_type": "document"
}

When you work on a query to retrieve such a document, you can use the following code snippet to create the embeddings for the query and maximize the retrieval performance.

{
    "model": "Cohere-embed-v3-english",
    "input": [
        {
            "image": "data:image/png;base64,iVBORw0KGgoAAAANSUh..."
        }
    ],
    "input_type": "query"
}

Not all embedding models support indicating the input type in the request. In those cases, a 422 error is returned.
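To illustrate how document and query embeddings are typically used together in retrieval, the sketch below ranks stored document vectors against a query vector. Cosine similarity is a common choice for this comparison, but it is an assumption here, not something this article prescribes. The vectors are toy values rather than real model output, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(query_vector, document_vectors):
    """Return (name, score) pairs sorted from most to least similar."""
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in document_vectors.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for embeddings created with the "document" input type.
documents = {
    "otter.png": [0.9, 0.1, 0.0],
    "car.png": [0.0, 0.2, 0.9],
}
# Toy vector standing in for an embedding created with the "query" input type.
query = [1.0, 0.0, 0.0]

ranking = rank_documents(query, documents)
print(ranking[0][0])
```

In practice a vector database performs this comparison for you; the sketch only shows the underlying idea.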