GPU-accelerated custom effects for WPF

With WPF 3.5 SP1 on the horizon (and the Beta available now), I plan to discuss some of the new graphics features that are coming into WPF in this release. There are a number of great new additions as well as improvements on existing features, but I will say that the one that I’m the most excited about is GPU-accelerated custom effects. I’m going to go into a good amount of depth in a series of upcoming blog posts, so I’ll dedicate this one to talking about the basics of what we’re offering and the motivation behind the feature.

One of the hallmarks of WPF is the ability to mix and match media types, to compose visual elements, and to give the developer a substantial amount of freedom in the way they construct the interfaces for their applications. However, they are typically restricted to using the building blocks that WPF provides, such as rectangles, text, video, paths, gradients, images, etc. As rich as that set of primitives is, it is still a fixed set of primitives that only has the ability to grow as new releases of WPF come out. At the same time, the graphics processing unit (GPU) is becoming ever more powerful and ever more flexible. These two facts are somewhat at odds with each other, and this post talks about ways we bridge the gap by providing for GPU-accelerated custom effects (which I’ll just shorten to “Effects” from this point on).

With Effects, you can harness the programmability of the GPU and still benefit from all the power of animation, data binding, element composition and media integration that WPF offers. Moreover, third parties can offer very powerful libraries of Effects that other WPF developers can simply use without needing to understand anything about GPU programming.

Applying motion blur to a scrolling list

As an initial example to see the sorts of power and flexibility one has with Effects, consider this UI:

The "film strip" at the top can be scrolled through. Effects have been used as part of this film strip control so that when you scroll quickly through its items, a "motion blur" effect gets applied, and the blur diminishes as the strip slows down and comes to rest. Here's a resultant snapshot of that:

The rest of this post will look at a simpler example for explanatory purposes.

A simple example of using a built-in Effect

As a first simple example, we'll use the built-in DropShadowEffect to make this Button:

look like this:

To do this, all we do is set the Effect property on our Button:

    <Button ... >
        <Button.Effect>
            <DropShadowEffect />
        </Button.Effect>
        Hello
    </Button>

This sets the Button's Effect property to an instance of the built-in DropShadowEffect.
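To make the built-in case a little more concrete, here is a minimal sketch of the same Button with the shadow's properties spelled out. The property names (Color, Direction, ShadowDepth, BlurRadius, Opacity) are the ones DropShadowEffect exposes; the particular values and the Button's size are just ones I picked for illustration.

```xml
<!-- Loose XAML sketch: a Button with an explicitly configured drop shadow. -->
<Button xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        Content="Hello" Width="120" Height="40">
    <Button.Effect>
        <!-- Direction is in degrees; ShadowDepth and BlurRadius are in
             device-independent pixels; Opacity ranges from 0 to 1. -->
        <DropShadowEffect Color="Black"
                          Direction="315"
                          ShadowDepth="5"
                          BlurRadius="8"
                          Opacity="0.6" />
    </Button.Effect>
</Button>
```

Leaving all of these out, as in the snippet above, simply gives you the default shadow.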

A simple example of using a custom Effect

Using a custom Effect is really identical to using a built-in Effect.

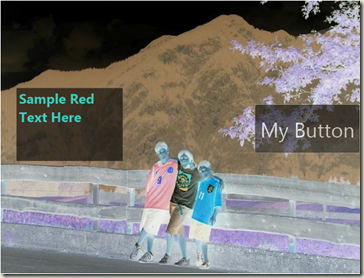

The following shows a simple semi-transparent Button and TextBox, along with an Image, all placed inside a Grid.

The XAML for the above is roughly this (I’ve removed properties of Image and Button and TextBox that just aren’t relevant to this discussion):

    <Grid>
        <Image ... />
        <Button ... />
        <TextBox ... />
    </Grid>

What I’ll do now is apply a very simple Effect called ColorComplementEffect that’s part of a custom assembly I’ve created. All we do is this:

    <Grid>
        <Grid.Effect>
            <eff:ColorComplementEffect />
        </Grid.Effect>
        <Image ... />
        <Button ... />
        <TextBox ... />
    </Grid>

What we’ve done is set the Effect property on the Grid to an instance of ColorComplementEffect. Note the “eff:” prefix on the element name: it refers to my custom assembly, and I need to add the following xmlns declaration so that ColorComplementEffect is found in the namespace MyEffects in the custom assembly MyEffects.dll:

xmlns:eff="clr-namespace:MyEffects;assembly=MyEffects"
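For context, here is roughly where that declaration would sit. This is only a sketch: I'm assuming the Grid lives directly inside a Window, and it will only load if an assembly named MyEffects.dll that defines MyEffects.ColorComplementEffect is actually referenced by the application.

```xml
<!-- Sketch: the eff: prefix is declared once on the root element
     and can then be used anywhere beneath it. -->
<Window xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:eff="clr-namespace:MyEffects;assembly=MyEffects">
    <Grid>
        <Grid.Effect>
            <!-- Resolved to the CLR type MyEffects.ColorComplementEffect -->
            <eff:ColorComplementEffect />
        </Grid.Effect>
    </Grid>
</Window>
```

The prefix itself is arbitrary; "eff" is just the name I chose, and any other unused prefix would work the same way.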

ColorComplementEffect, for every pixel in the element it’s being attached to, outputs the complement of that pixel (that is, 1.0 - val, for each of the RGB components of the element). The result looks like this:

Note how the button and text box are still present and remain interactive, just with the colors complemented (which makes it look like a photographic negative).
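As a quick worked example of that per-channel arithmetic, take a single pixel with RGB value (0.2, 0.5, 1.0). I'm assuming here that the color components are normalized to the range 0 to 1 and that the pixel is fully opaque, which is a simplification on my part:

```latex
\[
(r', g', b') = (1 - r,\; 1 - g,\; 1 - b)
             = (1 - 0.2,\; 1 - 0.5,\; 1 - 1.0)
             = (0.8,\; 0.5,\; 0.0)
\]
```

So a light blue pixel comes out as an orange one, which is exactly the photographic-negative look.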

(A later post will show how to create Effects like ColorComplementEffect; here we just discuss using them.)

What’s happening here?

So what’s a good way to think about what we just did above? Effects operate on pixels: roughly speaking, an Effect takes bitmaps in (which are just arrays of pixels) and, for each pixel that it needs to output, generates a color. Yet in the above case we applied the Effect to a Grid with multiple children, which we know isn’t a bitmap.

What’s happening is that WPF sees that an Effect is applied to a UIElement, and rasterizes the UIElement at the proper size into a bitmap, and then applies the Effect. Thus, Effects can be applied to any UIElement (or Visual, for that matter). This includes video, and some of the more impactful uses apply Effects to video.
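Since any UIElement will do, applying an Effect to video looks just like applying one to a Button. Here is a small sketch using the built-in BlurEffect on a MediaElement; the file name clip.wmv is a placeholder of mine, so point Source at a video you actually have.

```xml
<!-- Sketch: WPF rasterizes each video frame into a bitmap
     and then runs the blur over it. -->
<MediaElement xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
              Source="clip.wmv" Width="320" Height="240">
    <MediaElement.Effect>
        <BlurEffect Radius="4" />
    </MediaElement.Effect>
</MediaElement>
```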

What can I do with these?

It’s the possibilities that GPU-accelerated Effects unlock that have me so excited about this addition. Basically, at GPU processing speeds, applications can apply effects that do, for instance:

- Color modification: channel separation, tinting, saturation, contrast, monochrome, toning, thresholding, pixellation, bloom, chromakey, etc.

- Displacement effects: swirl, motion blur, ripples, pixellation, sharpen, pinch, bulge, etc.

- Generative effects: algorithmic generation of interesting texture patterns, fractals, etc.

- Multiple-input effects: blending, masking, storing computational results in textures, etc.

But, the most exciting thing about all of this is that the above are just a small sampling of possibilities we’ve discussed in the team. What we’re certain of is that folks in the WPF community can and are going to be creating Effects that we would have never anticipated and will just blow everyone away.

What are these Effects?

While we don’t discuss the specifics of writing Effects in this posting (later posts will do so at length), we do describe what they are. Let me first describe how one programs a GPU, independent of WPF:

- GPUs are fundamentally SIMD processors, able to operate on a very large number of pixels in parallel. A class of GPU “program” called a “pixel shader” (or a “fragment shader”) is basically a program that executes once for each and every pixel that is output.

- In the Microsoft ecosystem, GPUs are programmed and controlled through DirectX. You download a GPU “program” into the GPU through DirectX, and you use DirectX to invoke that program.

- There is a GPU assembly language that GPUs operate on. There is also a higher-level language called HLSL that is part of DirectX. DirectX provides a compiler to compile the HLSL down into GPU assembly code.

Given this, an Effect is two things:

- A compiled HLSL (or assembly) pixel shader with shader constants used to represent variable inputs from the application.

- A .NET class that contains the pixel shader and exposes WPF DependencyProperties that are bound to the shader constants used in the pixel shader. This allows properties and parameters of shaders to be modified in exactly the same way that properties of other WPF entities are manipulated (including support for animation and data binding of these properties and parameters).
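To illustrate what "animation and data binding of these properties" looks like from the consuming side, here is a sketch that uses the built-in BlurEffect and DropShadowEffect, since ColorComplementEffect as described above has no parameters of its own. The element name radiusSlider and all of the values are my own choices for illustration; a custom Effect that exposes DependencyProperties should be usable in the same way.

```xml
<StackPanel xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation">

    <!-- Data binding: the slider's Value drives the blur radius. -->
    <Slider Name="radiusSlider" Minimum="0" Maximum="20" Value="0" />
    <TextBlock Text="Bound blur" FontSize="24">
        <TextBlock.Effect>
            <BlurEffect Radius="{Binding ElementName=radiusSlider, Path=Value}" />
        </TextBlock.Effect>
    </TextBlock>

    <!-- Animation: the shadow's blur radius animates to 20 when the mouse
         enters the button and back to 5 when it leaves. -->
    <Button Content="Hello" Margin="20">
        <Button.Effect>
            <DropShadowEffect BlurRadius="5" />
        </Button.Effect>
        <Button.Triggers>
            <EventTrigger RoutedEvent="Mouse.MouseEnter">
                <BeginStoryboard>
                    <Storyboard>
                        <DoubleAnimation
                            Storyboard.TargetProperty="(UIElement.Effect).(DropShadowEffect.BlurRadius)"
                            To="20" Duration="0:0:0.3" />
                    </Storyboard>
                </BeginStoryboard>
            </EventTrigger>
            <EventTrigger RoutedEvent="Mouse.MouseLeave">
                <BeginStoryboard>
                    <Storyboard>
                        <DoubleAnimation
                            Storyboard.TargetProperty="(UIElement.Effect).(DropShadowEffect.BlurRadius)"
                            To="5" Duration="0:0:0.3" />
                    </Storyboard>
                </BeginStoryboard>
            </EventTrigger>
        </Button.Triggers>
    </Button>
</StackPanel>
```

Nothing in this markup knows or cares that a pixel shader is running underneath; the Effect's properties behave like any other WPF properties.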

But isn’t writing HLSL hard?

Aspects of writing HLSL can be tricky, particularly adapting to the SIMD mindset and the limit to the number of instructions that can be executed.

In a way, though, the question is missing the point. Our expectation is that there will be many, many consumers of Effects (app developers as well as component developers who just want to use an Effect). Consuming an Effect, as the example above shows, is identical to consuming any other custom-written WPF control or library component. There will be fewer creators of Effects, just because the bar is somewhat higher, requiring an understanding of HLSL. However, we do expect/hope even that number to be pretty high, just because the Effects written can be very highly leveraged by users of the WPF system.

That’s it for this initial post on Effects. Lots more goodness to come in subsequent posts.

Comments

Anonymous

May 12, 2008

A quick one: I just installed .NET 3.5 SP1 and I've noticed that my WPF application no longer seems to be rendered as resolution independent. When I zoom in using Magnifier the text and lines are pixelated instead of looking like vectors. What could be causing this?

Anonymous

May 12, 2008

That's happening for me too. On Vista SP1 "normal" WPF zooming looks great, but with the magnifier it looks pixelated and blocky. Has something changed in the way the rendering happens?

Anonymous

May 13, 2008

Both 'bp' and 'Joseph Cooney' have noticed that magnification of WPF content using the built-in OS Magnifier no longer does resolution-independent zoom of content. That observation is correct. As a result of a series of changes that are too numerous to describe here, the OS magnifier is no longer "WPF-aware", and does bitmap scaling just as it does for other content. Although we do lose this feature, we believe that without the dependencies that enabled Magnifier to work in a WPF-specific way, we can be more agile in what we provide to WPF customers moving forward. Note that the above is only about out-of-process magnification. When you do zooming (via scaling) within your own WPF application, the rendering continues to be re-rasterized at the higher scale, so everything remains smooth in that most common scenario. This change is strictly about using the external magnifier.

Anonymous

May 13, 2008

Cheers for the explanation. Bit of a shame for the poor Magnifier :)

Anonymous

May 30, 2008

Hi, are these features available in XP or are they Vista only?

Anonymous

June 01, 2008

What are the graphics card requirements?

Anonymous

June 08, 2008

Are these features also available on W2k3 Server, obviously with .NET 3.5 SP1 installed? Thx

Anonymous

May 04, 2009

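BitmapEffect is now obsolete ("UIElement.BitmapEffect property is obsolete"). It can't be helped: BitmapEffect's performance was far too poor, and sometimes there was also inexplicable UI flashing...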