Storage options for a Kubernetes cluster

This article compares the storage capabilities of Amazon Elastic Kubernetes Service (Amazon EKS) and Azure Kubernetes Service (AKS) and describes the options to store workload data on AKS.

Note

This article is part of a series of articles that helps professionals who are familiar with Amazon EKS to understand Azure Kubernetes Service (AKS).

Amazon EKS storage options

For applications that require data storage, Amazon EKS offers different types of volumes for both temporary and long-lasting storage.

Ephemeral Volumes

For applications that require temporary local volumes but don't need to persist data after restarts, you can use ephemeral volumes. Kubernetes supports different types of ephemeral volumes, such as emptyDir, configMap, downwardAPI, secret, and hostPath. To balance cost and performance, choose the most appropriate host volume. In Amazon EKS, you can use gp3 as the host root volume, which costs less than gp2 volumes.

Another option for ephemeral volumes is Amazon EC2 instance store, which provides temporary block-level storage for EC2 instances. These volumes are physically attached to the hosts and exist only for the lifetime of the instance. Using instance store volumes in Kubernetes requires partitioning, configuring, and formatting the disks by using Amazon EC2 user data.

Persistent Volumes

While Kubernetes is typically associated with running stateless applications, there are cases where persistent data storage is required. Kubernetes Persistent Volumes (PVs) can be used to store data independently from pods, allowing data to persist beyond the lifetime of a given pod. Amazon EKS supports different types of storage options for PVs, including Amazon EBS, Amazon EFS, Amazon FSx for Lustre, and Amazon FSx for NetApp ONTAP.

Amazon EBS volumes provide block-level storage and are well suited for databases and throughput-intensive applications. Amazon EKS users can use gp3, the latest generation of general-purpose SSD block storage, for a balance between price and performance. For higher-performance applications, they can use io2 Block Express volumes.
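For comparison with the AKS storage classes later in this article, the following is a minimal sketch of a StorageClass that dynamically provisions gp3 volumes on Amazon EKS. It assumes the Amazon EBS CSI driver is installed in the cluster. The class name `ebs-gp3` is hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-gp3                          # hypothetical name
provisioner: ebs.csi.aws.com             # Amazon EBS CSI driver
parameters:
  type: gp3                              # EBS volume type
reclaimPolicy: Delete                    # delete the EBS volume when the claim is deleted
volumeBindingMode: WaitForFirstConsumer  # create the volume in the zone where the pod is scheduled
allowVolumeExpansion: true
```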

Amazon EFS is a serverless, elastic file system that can be shared across multiple containers and nodes. It automatically grows and shrinks as files are added or removed, eliminating the need for capacity planning. The Amazon Elastic File System Container Storage Interface (CSI) Driver is used to integrate Amazon EFS with Kubernetes.
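The following is a minimal sketch of dynamic provisioning with the Amazon EFS CSI driver. It assumes that the driver is installed and that an EFS file system already exists. In this mode the driver creates an EFS access point for each volume, not a new file system. The object names and the file system ID are placeholders.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: efs-sc                           # hypothetical name
provisioner: efs.csi.aws.com             # Amazon EFS CSI driver
parameters:
  provisioningMode: efs-ap               # provision each volume as an EFS access point
  fileSystemId: fs-0123456789abcdef0     # placeholder: ID of an existing EFS file system
  directoryPerms: "700"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-claim                        # hypothetical name
spec:
  accessModes:
    - ReadWriteMany                      # mountable by pods on multiple nodes at once
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi                       # required by the API; EFS grows and shrinks elastically regardless
```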

Amazon FSx for Lustre provides high-performance parallel file storage, ideal for scenarios requiring high throughput and low-latency operations. It can be linked to an Amazon S3 data repository to store large datasets. Amazon FSx for NetApp ONTAP is a fully managed shared storage solution built on NetApp's ONTAP file system.

Amazon EKS users can utilize tools like AWS Compute Optimizer and Velero to optimize storage configurations and manage backups and snapshots.

AKS storage options

Applications running in Azure Kubernetes Service (AKS) might need to store and retrieve data. Some application workloads can use fast, local storage on the node that isn't needed after the pod is deleted. Others require storage that persists on more durable data volumes within the Azure platform. Multiple pods might need to:

- Share the same data volumes.

- Reattach data volumes if the pod is rescheduled on a different node.

This article introduces the storage options and core concepts that provide storage to your applications in AKS.

Volume types

Kubernetes volumes represent more than just a traditional disk for storing and retrieving information. Kubernetes volumes can also be used as a way to inject data into a pod for use by its containers.

Common volume types in Kubernetes include emptyDir, Secret, and ConfigMap.

EmptyDirs

For a Pod that defines an emptyDir volume, the volume is created when the Pod is assigned to a node. As the name suggests, the emptyDir volume is initially empty. All containers in the Pod can read and write the same files in the emptyDir volume, although this volume can be mounted at the same or different paths in each container. When a Pod is removed from a node for any reason, the data in the emptyDir is deleted permanently.
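The following Pod is a minimal sketch of this behavior: two containers share one emptyDir volume mounted at different paths. The busybox image, the object names, and the 500Mi size limit are illustrative assumptions. Because the volume belongs to the Pod rather than to a container, the data survives a container restart but is deleted when the Pod is removed from the node.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo                    # hypothetical name
spec:
  containers:
    - name: writer
      image: busybox:1.36
      # Append a timestamp to the shared file every 5 seconds.
      command: ["sh", "-c", "while true; do date >> /cache/log.txt; sleep 5; done"]
      volumeMounts:
        - name: scratch
          mountPath: /cache              # mounted at /cache in this container
    - name: reader
      image: busybox:1.36
      # Create the file if needed, then follow what the writer appends.
      command: ["sh", "-c", "touch /data/log.txt; tail -f /data/log.txt"]
      volumeMounts:
        - name: scratch
          mountPath: /data               # same volume, different path
  volumes:
    - name: scratch
      emptyDir:
        sizeLimit: 500Mi                 # usage above this limit can get the Pod evicted
```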

Secrets

A Secret is an object that holds a small amount of sensitive data, such as a password, token, or key. This information would otherwise be included in a Pod specification or container image. By using a Secret, you avoid embedding confidential data directly in your application code. Since Secrets can be created independently of the Pods that use them, there is a reduced risk of exposing the Secret (and its data) during the processes of creating, viewing, and editing Pods. Kubernetes, along with the applications running in your cluster, can also take extra precautions with Secrets, such as preventing sensitive data from being written to nonvolatile storage. While Secrets are similar to ConfigMaps, they are specifically designed to store confidential data.

You can use Secrets for the following purposes:

- Set environment variables for a container.

- Provide credentials such as SSH keys or passwords to Pods.

- Allow the kubelet to pull container images from private registries.

The Kubernetes control plane also uses Secrets, such as bootstrap token Secrets, which are a mechanism to help automate node registration.
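As a minimal sketch, the following manifest creates a Secret and consumes it in two of the ways listed above: as an environment variable and as files in a volume. The names and values are hypothetical. The `stringData` field accepts plain text, and Kubernetes stores the values base64-encoded, which is an encoding rather than encryption.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials                   # hypothetical name
type: Opaque
stringData:                              # plain-text input; stored base64-encoded under data
  username: appuser
  password: change-me                    # placeholder value
---
apiVersion: v1
kind: Pod
metadata:
  name: secret-demo                      # hypothetical name
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "ls /etc/db; sleep 3600"]   # lists the files "username" and "password"
      env:
        - name: DB_USER                  # single key exposed as an environment variable
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: username
      volumeMounts:
        - name: creds
          mountPath: /etc/db             # each key becomes a file in this directory
          readOnly: true
  volumes:
    - name: creds
      secret:
        secretName: db-credentials       # secret volumes are backed by tmpfs (RAM) on the node
```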

ConfigMaps

A ConfigMap is a Kubernetes object used to store non-confidential data in key-value pairs. Pods can consume ConfigMaps as environment variables, command-line arguments, or as configuration files in a volume. A ConfigMap allows you to decouple environment-specific configuration from your container images, so that your applications are easily portable.

A ConfigMap doesn't provide secrecy or encryption. If the data you want to store is confidential, use a Secret rather than a ConfigMap, or use additional third-party tools to keep your data private.

You can use a ConfigMap for setting configuration data separately from application code. For example, imagine that you are developing an application that you can run on your own computer (for development) and in the cloud (to handle real traffic). You write the code to look in an environment variable named DATABASE_HOST. Locally, you set that variable to localhost. In the cloud, you set it to refer to a Kubernetes Service that exposes the database component to your cluster. This lets you fetch a container image running in the cloud and debug the exact same code locally if needed.
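The DATABASE_HOST scenario can be sketched as follows. The ConfigMap holds the value for the cloud, and the Pod imports every key in it as an environment variable through `envFrom`. The Service DNS name `mydb.default.svc.cluster.local` and the object names are hypothetical. When you run locally, you would set DATABASE_HOST to localhost instead.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config                       # hypothetical name
data:
  DATABASE_HOST: mydb.default.svc.cluster.local   # hypothetical Service that exposes the database
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo                   # hypothetical name
spec:
  containers:
    - name: app
      image: busybox:1.36
      # The shell expands $DATABASE_HOST from the environment injected below.
      command: ["sh", "-c", "echo Connecting to $DATABASE_HOST; sleep 3600"]
      envFrom:
        - configMapRef:
            name: app-config             # every key becomes an environment variable
```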

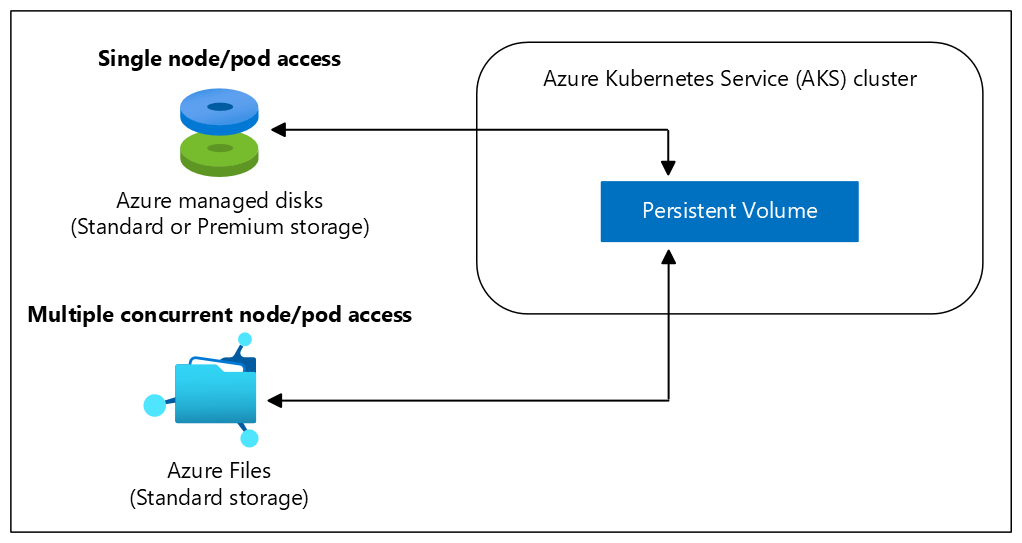

Persistent volumes

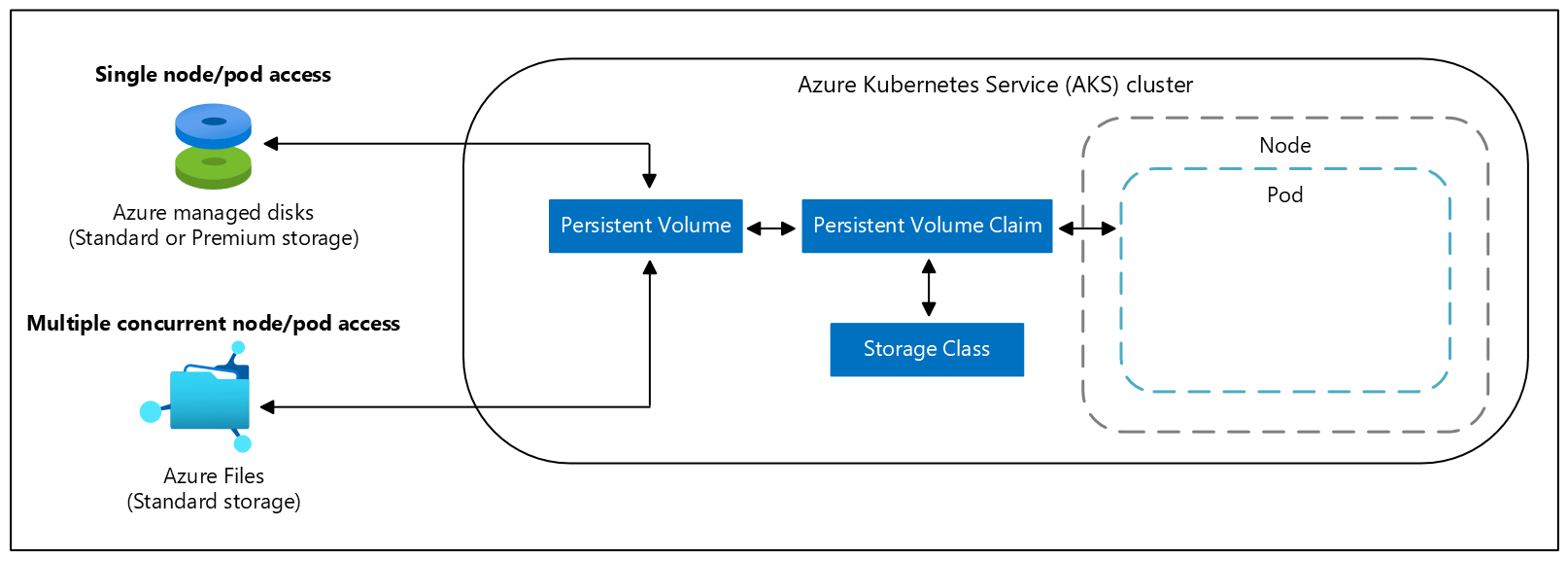

Volumes that are defined and created as part of the pod lifecycle exist only until you delete the pod. Pods often expect their storage to remain if a pod is rescheduled on a different host during a maintenance event, especially in StatefulSets. A persistent volume (PV) is a storage resource created and managed by the Kubernetes API that can exist beyond the lifetime of an individual pod. You can use the following Azure Storage services to provide the persistent volume: Azure Disks, Azure Files, Azure NetApp Files, and Azure Blob storage.

As described in the Volumes section, the choice of Azure Disks or Azure Files is often determined by the need for concurrent access to the data or the performance tier.

A cluster administrator can statically create a persistent volume, or a volume can be created dynamically by the Kubernetes API server. If a pod is scheduled and requests storage that is currently unavailable, Kubernetes can create the underlying Azure Disk or File storage and attach it to the pod. Dynamic provisioning uses a storage class to identify what type of resource needs to be created.
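For static provisioning, an administrator creates a PersistentVolume object that points at an existing Azure disk, and a claim binds to it by name. The following is a sketch that assumes the Azure Disk CSI driver. The `<placeholders>` in the disk resource ID and the object names are hypothetical. Because the volume already exists, no provisioning happens; `storageClassName` only has to match between the volume and the claim.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-existing-disk                 # hypothetical name
spec:
  capacity:
    storage: 10Gi                        # should match the size of the existing disk
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain  # keep the disk when the claim is deleted
  storageClassName: managed-csi
  csi:
    driver: disk.csi.azure.com
    # Placeholder resource ID of a managed disk you created outside Kubernetes.
    volumeHandle: /subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.Compute/disks/<disk-name>
    volumeAttributes:
      fsType: ext4
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-existing-disk                # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: managed-csi
  volumeName: pv-existing-disk           # bind to the volume above instead of provisioning a new one
  resources:
    requests:
      storage: 10Gi
```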

Important

Persistent volumes can't be shared by Windows and Linux pods due to differences in file system support between the two operating systems.

If you want a fully managed solution for block-level access to data, consider using Azure Container Storage instead of CSI drivers. Azure Container Storage integrates with Kubernetes, allowing dynamic and automatic provisioning of persistent volumes. Azure Container Storage supports Azure Disks, Ephemeral Disks, and Azure Elastic SAN (preview) as backing storage, offering flexibility and scalability for stateful applications running on Kubernetes clusters.

Storage classes

To specify different tiers of storage, such as premium or standard, you can create a storage class.

A storage class also defines a reclaim policy. When the persistent volume claim is deleted and its persistent volume is released, the reclaim policy controls the behavior of the underlying Azure Storage resource. The underlying resource can either be deleted or kept for use with a future pod.

For clusters using Azure Container Storage, you'll see an additional storage class called acstor-<storage-pool-name>. An internal storage class is also created.

For clusters using Container Storage Interface (CSI) drivers, the following extra storage classes are created:

| Storage class | Description |
|---|---|
| managed-csi | Uses Azure Standard SSD locally redundant storage (LRS) to create a managed disk. The reclaim policy ensures that the underlying Azure Disk is deleted when the persistent volume that used it is deleted. The storage class also configures the persistent volumes to be expandable. You can edit the persistent volume claim to specify the new size. Effective starting with Kubernetes version 1.29, in Azure Kubernetes Service (AKS) clusters deployed across multiple availability zones, this storage class utilizes Azure Standard SSD zone-redundant storage (ZRS) to create managed disks. |
| managed-csi-premium | Uses Azure Premium locally redundant storage (LRS) to create a managed disk. The reclaim policy again ensures that the underlying Azure Disk is deleted when the persistent volume that used it is deleted. Similarly, this storage class allows for persistent volumes to be expanded. Effective starting with Kubernetes version 1.29, in Azure Kubernetes Service (AKS) clusters deployed across multiple availability zones, this storage class utilizes Azure Premium zone-redundant storage (ZRS) to create managed disks. |
| azurefile-csi | Uses Azure Standard storage to create an Azure file share. The reclaim policy ensures that the underlying Azure file share is deleted when the persistent volume that used it is deleted. |
| azurefile-csi-premium | Uses Azure Premium storage to create an Azure file share. The reclaim policy ensures that the underlying Azure file share is deleted when the persistent volume that used it is deleted. |
| azureblob-nfs-premium | Uses Azure Premium storage to create an Azure Blob storage container and connect using the NFS v3 protocol. The reclaim policy ensures that the underlying Azure Blob storage container is deleted when the persistent volume that used it is deleted. |
| azureblob-fuse-premium | Uses Azure Premium storage to create an Azure Blob storage container and connect using BlobFuse. The reclaim policy ensures that the underlying Azure Blob storage container is deleted when the persistent volume that used it is deleted. |

Unless you specify a storage class for a persistent volume, the default storage class is used. Ensure volumes use the appropriate storage you need when requesting persistent volumes.

Important: Starting with Kubernetes version 1.21, AKS uses CSI drivers by default, and CSI migration is enabled. Existing in-tree persistent volumes continue to function, but starting with version 1.26, AKS no longer supports volumes created by using the in-tree driver and storage provisioned for files and disk.

The default storage class is the same as managed-csi.

Effective starting with Kubernetes version 1.29, when you deploy Azure Kubernetes Service (AKS) clusters across multiple availability zones, AKS now utilizes zone-redundant storage (ZRS) to create managed disks within built-in storage classes. ZRS ensures synchronous replication of your Azure managed disks across multiple Azure availability zones in your chosen region. This redundancy strategy enhances the resilience of your applications and safeguards your data against datacenter failures.

However, zone-redundant storage (ZRS) costs more than locally redundant storage (LRS). If cost optimization is a priority, you can create a new storage class with the skuName parameter set to an LRS SKU, such as StandardSSD_LRS or Premium_LRS, and then use the new storage class in your persistent volume claim (PVC).
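The following is a minimal sketch of such a storage class. It assumes that Standard SSD is the tier you want. The name `managed-csi-lrs` is hypothetical, and the other settings mirror those of the built-in managed-csi class described in the preceding table.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-csi-lrs                  # hypothetical name
provisioner: disk.csi.azure.com
parameters:
  skuName: StandardSSD_LRS               # locally redundant instead of zone-redundant
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
```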

You can create a storage class for other needs by using kubectl. The following example uses premium zone-redundant managed disks and specifies that the underlying Azure Disk should be retained when you delete the persistent volume claim:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-premium-retain
provisioner: disk.csi.azure.com
parameters:
  skuName: Premium_ZRS
reclaimPolicy: Retain
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true

Be aware that AKS reconciles the default storage classes and will overwrite any changes you make to those storage classes.

For more information about storage classes, see StorageClass in Kubernetes.

Persistent volume claims

A persistent volume claim (PVC) requests storage of a particular storage class, access mode, and size. The Kubernetes API server can dynamically provision the underlying Azure Storage resource if no existing resource can fulfill the claim based on the defined storage class.

The pod definition includes the volume mount once the volume has been connected to the pod.

Once an available storage resource has been assigned to the pod requesting storage, the persistent volume is bound to a persistent volume claim. Persistent volumes and persistent volume claims have a one-to-one mapping.

The following example YAML manifest shows a persistent volume claim that uses the managed-premium-retain storage class and requests an Azure Disk that is 5Gi in size:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azure-managed-disk
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: managed-premium-retain
  resources:
    requests:
      storage: 5Gi

When you create a pod definition, you also specify:

- The persistent volume claim to request the desired storage.

- The volume mount for your applications to read and write data.

The following example YAML manifest shows how the previous persistent volume claim can be used to mount a volume at /mnt/azure:

kind: Pod
apiVersion: v1
metadata:
  name: nginx
spec:
  containers:
    - name: myfrontend
      image: mcr.microsoft.com/oss/nginx/nginx:1.15.5-alpine
      volumeMounts:
      - mountPath: "/mnt/azure"
        name: volume
  volumes:
    - name: volume
      persistentVolumeClaim:
        claimName: azure-managed-disk

For mounting a volume in a Windows container, specify the drive letter and path. For example:

...
      volumeMounts:
      - mountPath: "d:"
        name: volume
      # Single quotes keep the backslash literal; "c:\k" is an invalid escape in double-quoted YAML.
      - mountPath: 'c:\k'
        name: k-dir
...

Ephemeral OS disk

By default, Azure automatically replicates the operating system disk for a virtual machine to Azure Storage to avoid data loss when the VM is relocated to another host. However, since containers aren't designed to have local state persisted, this behavior offers limited value while providing some drawbacks. These drawbacks include, but aren't limited to, slower node provisioning and higher read/write latency.

By contrast, ephemeral OS disks are stored only on the host machine, just like a temporary disk. With this configuration, you get lower read/write latency, together with faster node scaling and cluster upgrades.

When you don't explicitly request Azure managed disks for the OS, AKS defaults to ephemeral OS if possible for a given node pool configuration.

Size requirements and recommendations for ephemeral OS disks are available in the Azure VM documentation. The following are some general sizing considerations:

- If you choose to use the AKS default VM size Standard_DS2_v2 SKU with the default OS disk size of 100 GiB, the default VM size supports ephemeral OS but has only 86 GiB of cache size. This configuration would default to managed disks if you don't explicitly specify it. If you do request an ephemeral OS, you receive a validation error.

- If you request the same Standard_DS2_v2 SKU with a 60-GiB OS disk, this configuration would default to ephemeral OS. The requested size of 60 GiB is smaller than the maximum cache size of 86 GiB.

- If you select the Standard_D8s_v3 SKU with a 100-GiB OS disk, this VM size supports ephemeral OS and has 200 GiB of cache space. If you don't specify the OS disk type, the node pool would receive ephemeral OS by default.

The latest generation of VM series doesn't have a dedicated cache, only temporary storage.

- If you select the Standard_E2bds_v5 VM size with the default OS disk size of 100 GiB, it supports ephemeral OS disks but has only 75 GiB of temporary storage. This configuration would default to managed OS disks if you don't explicitly specify it. If you do request an ephemeral OS disk, you receive a validation error.

- If you request the same Standard_E2bds_v5 VM size with a 60-GiB OS disk, this configuration defaults to ephemeral OS disks. The requested size of 60 GiB is smaller than the maximum temporary storage of 75 GiB.

- If you select the Standard_E4bds_v5 SKU with a 100-GiB OS disk, this VM size supports ephemeral OS and has 150 GiB of temporary storage. If you don't specify the OS disk type, by default Azure provisions an ephemeral OS disk to the node pool.

Customer-managed keys

You can manage encryption for your ephemeral OS disk with your own keys on an AKS cluster. For more information, see Use Customer Managed key with Azure disk on AKS.

Volumes

Kubernetes typically treats individual pods as ephemeral, disposable resources. Applications have different approaches available to them for using and persisting data. A volume represents a way to store, retrieve, and persist data across pods and through the application lifecycle.

Traditional volumes are created as Kubernetes resources backed by Azure Storage. You can manually create data volumes to be assigned to pods directly or have Kubernetes automatically create them. Data volumes can use: Azure Disk, Azure Files, Azure NetApp Files, or Azure Blobs.

Note

Depending on the VM SKU you're using, the Azure Disk CSI driver might have a per-node volume limit. For some high-performance VMs (for example, 16 cores), the limit is 64 volumes per node. To identify the limit per VM SKU, review the Max data disks column for each VM SKU offered. For a list of VM SKUs offered and their corresponding detailed capacity limits, see General purpose virtual machine sizes.

To help determine the best fit for your workload between Azure Files and Azure NetApp Files, review the information provided in the article Azure Files and Azure NetApp Files comparison.

Azure Disk

By default, an AKS cluster comes with pre-created managed-csi and managed-csi-premium storage classes that use Disk Storage. Similar to Amazon EBS, these classes create a managed disk or block device that's attached to the node for pod access.

The Disk classes allow both static and dynamic volume provisioning. Reclaim policy ensures that the disk is deleted with the persistent volume. You can expand the disk by editing the persistent volume claim.
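For example, to grow the 5Gi `azure-managed-disk` claim shown later in this article, you could reapply it with a larger request. The value 10Gi is arbitrary. This sketch works only because that claim's storage class sets `allowVolumeExpansion: true`. Kubernetes lets you increase a claim's size but not decrease it.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azure-managed-disk               # same name as the existing claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: managed-premium-retain
  resources:
    requests:
      storage: 10Gi                      # raised from 5Gi; requests can grow but not shrink
```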

These storage classes use Azure managed disks with locally redundant storage (LRS). LRS means that the data has three synchronous copies within a single physical location in an Azure primary region. LRS is the least expensive replication option, but it doesn't offer protection against a datacenter failure. You can define custom storage classes that use zone-redundant storage (ZRS) managed disks. ZRS synchronously replicates your Azure managed disk across three Azure availability zones in the region you select. Each availability zone is a separate physical location with independent power, cooling, and networking. ZRS disks provide at least 99.9999999999% (12 9's) of durability over a given year. A ZRS managed disk can be attached to a virtual machine in a different availability zone. ZRS disks aren't currently available in all Azure regions. For more information on ZRS disks, see Zone Redundant Storage (ZRS) option for Azure Disks for high availability.

In addition, to mitigate the risk of data loss, you can take regular backups or snapshots of Disk Storage data by using Azure Kubernetes Service Backup, Azure Backup, or third-party solutions such as Velero that can use built-in snapshot technologies.

You can use Azure Disk to create a Kubernetes DataDisk resource. Disk types include:

- Premium SSDs (recommended for most workloads)

- Premium SSD v2

- Ultra disks

- Standard SSDs

- Standard HDDs

Tip

For most production and development workloads, use Premium SSDs.

Because an Azure Disk is mounted as ReadWriteOnce, it's only available to a single node. For storage volumes accessible by pods on multiple nodes simultaneously, use Azure Files.

Azure Premium SSD v2 disks

Azure Premium SSD v2 disks are designed for IO-intense enterprise workloads and offer consistent submillisecond disk latency, high IOPS, and high throughput. You can configure the performance (capacity, throughput, and IOPS) of Premium SSD v2 disks independently at any time, which makes it easier to be cost efficient in more scenarios while still meeting performance needs. For more information on how to configure a new or existing AKS cluster to use Azure Premium SSD v2 disks, see Use Azure Premium SSD v2 disks on Azure Kubernetes Service.
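The following storage class is a sketch of how Premium SSD v2 performance can be set separately from capacity through Azure Disk CSI driver parameters. The class name and the IOPS and throughput values are illustrative assumptions. Check the linked article for the region, zone, and VM requirements that apply to Premium SSD v2.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: premium2-disk-sc                 # hypothetical name
provisioner: disk.csi.azure.com
parameters:
  skuName: PremiumV2_LRS
  cachingMode: None                      # Premium SSD v2 doesn't support host caching
  DiskIOPSReadWrite: "4000"              # provisioned IOPS, independent of disk size
  DiskMBpsReadWrite: "1000"              # provisioned throughput in MBps
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
```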

Ultra Disk Storage

Ultra Disk Storage is an Azure managed disk tier that offers high throughput, high IOPS, and consistent low-latency disk storage for Azure VMs. Ultra Disk Storage is intended for data-heavy and transaction-heavy workloads. Like other Disk Storage SKUs and Amazon EBS, an Ultra Disk volume can be mounted by only one pod at a time and doesn't provide concurrent access.

Use the flag --enable-ultra-ssd to enable Ultra Disk Storage on your AKS cluster.

If you choose Ultra Disk Storage, be aware of its limitations, and make sure to select a compatible VM size. Ultra Disk Storage is available with locally redundant storage (LRS) replication.

Bring your own keys (BYOK)

Azure encrypts all data in a managed disk at rest. By default, data is encrypted with Microsoft-managed keys. For more control over encryption keys, you can supply customer-managed keys to use for encryption at rest for both the OS and data disks for your AKS clusters. For more information, see Bring your own keys (BYOK) with Azure managed disks in Azure Kubernetes Service (AKS).
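With the Azure Disk CSI driver, customer-managed keys for data disks are typically selected per storage class by referencing a disk encryption set. The following is a minimal sketch. It assumes that you already created a disk encryption set backed by your Azure Key Vault key. The `<placeholders>` and the class name are hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-csi-byok                 # hypothetical name
provisioner: disk.csi.azure.com
parameters:
  skuName: Premium_LRS
  # Resource ID of an existing disk encryption set; replace every <placeholder>.
  diskEncryptionSetID: /subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.Compute/diskEncryptionSets/<disk-encryption-set-name>
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
```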

Azure Files

Disk Storage can't provide concurrent access to a volume, but you can use Azure Files to mount a Server Message Block (SMB) version 3.1.1 share or Network File System (NFS) version 4.1 share backed by Azure Storage. This provides network-attached storage similar to Amazon EFS. As with Disk Storage, there are two options:

- Azure Files Standard storage is backed by regular hard disk drives (HDDs).

- Azure Files Premium storage backs the file share with high-performance SSD drives. The minimum file share size for Premium is 100 GB.

Azure Files has the following storage account replication options to protect your data in case of failure:

- Standard_LRS: Standard locally redundant storage (LRS)

- Standard_GRS: Standard geo-redundant storage (GRS)

- Standard_ZRS: Standard zone-redundant storage (ZRS)

- Standard_RAGRS: Standard read-access geo-redundant storage (RA-GRS)

- Standard_RAGZRS: Standard read-access geo-zone-redundant storage (RA-GZRS)

- Premium_LRS: Premium locally redundant storage (LRS)

- Premium_ZRS: Premium zone-redundant storage (ZRS)
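The replication option is selected through the storage class. The following is a minimal sketch that picks Standard_ZRS from the preceding list and requests a share that pods on different nodes can mount at the same time. The object names and the 100Gi size are hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: azurefile-csi-zrs                # hypothetical name
provisioner: file.csi.azure.com
parameters:
  skuName: Standard_ZRS                  # any replication option from the list above
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-files                     # hypothetical name
spec:
  accessModes:
    - ReadWriteMany                      # concurrent read/write from multiple nodes
  storageClassName: azurefile-csi-zrs
  resources:
    requests:
      storage: 100Gi
```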

To optimize costs for Azure Files, purchase Azure Files capacity reservations.

Azure NetApp Files

Azure NetApp Files is an enterprise-class, high-performance, metered file storage service running on Azure and supports volumes using NFS (NFSv3 or NFSv4.1), SMB, and dual-protocol (NFSv3 and SMB, or NFSv4.1 and SMB). Kubernetes users have two options for using Azure NetApp Files volumes for Kubernetes workloads:

- Create Azure NetApp Files volumes statically. In this scenario, the creation of volumes is external to AKS. Volumes are created using the Azure CLI or from the Azure portal, and are then exposed to Kubernetes by the creation of a PersistentVolume. Statically created Azure NetApp Files volumes have many limitations (for example, inability to be expanded, needing to be over-provisioned, and so on). Statically created volumes aren't recommended for most use cases.

- Create Azure NetApp Files volumes dynamically, orchestrating through Kubernetes. This method is the preferred way to create multiple volumes directly through Kubernetes, and is achieved using Astra Trident. Astra Trident is a CSI-compliant dynamic storage orchestrator that helps provision volumes natively through Kubernetes.

For more information, see Configure Azure NetApp Files for Azure Kubernetes Service.
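With the dynamic option, a storage class points at the Trident CSI provisioner, and claims against it create Azure NetApp Files volumes. The following is a sketch that assumes Astra Trident is installed and an Azure NetApp Files backend is already configured for it. The object names and the 1Ti size are hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: azure-netapp-files               # hypothetical name
provisioner: csi.trident.netapp.io       # Astra Trident CSI provisioner
parameters:
  backendType: "azure-netapp-files"      # route claims to the Azure NetApp Files backend
allowVolumeExpansion: true
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: anf-pvc                          # hypothetical name
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: azure-netapp-files
  resources:
    requests:
      storage: 1Ti
```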

Azure Blob storage

The Azure Blob storage Container Storage Interface (CSI) driver is a CSI specification-compliant driver used by Azure Kubernetes Service (AKS) to manage the lifecycle of Azure Blob storage. The CSI is a standard for exposing arbitrary block and file storage systems to containerized workloads on Kubernetes.

By adopting and using CSI, AKS now can write, deploy, and iterate plug-ins to expose new or improve existing storage systems in Kubernetes. Using CSI drivers in AKS avoids having to touch the core Kubernetes code and wait for its release cycles.

When you mount Azure Blob storage as a file system into a container or pod, you can use Blob storage with applications that work with massive amounts of unstructured data. For example:

- Log file data

- Images, documents, and streaming video or audio

- Disaster recovery data

The data on the object storage can be accessed by applications using BlobFuse or Network File System (NFS) 3.0 protocol. Before the introduction of the Azure Blob storage CSI driver, the only option was to manually install an unsupported driver to access Blob storage from your application running on AKS. When the Azure Blob storage CSI driver is enabled on AKS, there are two built-in storage classes: azureblob-fuse-premium and azureblob-nfs-premium.
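The following claim is a minimal sketch that requests an NFS-mounted Blob container through the built-in `azureblob-nfs-premium` class. It assumes the Blob storage CSI driver is enabled on the cluster, for example with `az aks update --enable-blob-driver`. The claim name and size are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: blob-nfs-claim                   # hypothetical name
spec:
  accessModes:
    - ReadWriteMany                      # many pods can read and write the container
  storageClassName: azureblob-nfs-premium   # built-in class created by the Blob CSI driver
  resources:
    requests:
      storage: 100Gi
```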

To create an AKS cluster with CSI drivers support, see CSI drivers on AKS. To learn more about the differences in access between each of the Azure storage types using the NFS protocol, see Compare access to Azure Files, Blob Storage, and Azure NetApp Files with NFS.

Azure HPC Cache

Azure HPC Cache speeds access to your data for HPC tasks, with all the scalability of cloud solutions. If you choose this storage solution, make sure to deploy your AKS cluster in a region that supports Azure HPC Cache.

NFS server

The best option for shared NFS access is to use Azure Files or Azure NetApp Files. You can also create an NFS Server on an Azure VM that exports volumes.

Be aware that this option only supports static provisioning. You must provision the NFS shares manually on the server, and can't do so from AKS automatically.
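With static provisioning, you describe each existing export as a PersistentVolume and bind a claim to it explicitly. The following is a minimal sketch. The server IP address, export path, names, and size are hypothetical and must match the NFS server VM you manage.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv                           # hypothetical name
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain  # keep the data on the server when the claim is deleted
  nfs:
    server: 10.0.0.4                     # hypothetical private IP address of the NFS server VM
    path: /export/data                   # hypothetical exported directory
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc                          # hypothetical name
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""                   # empty class: no dynamic provisioning
  volumeName: nfs-pv                     # bind to the volume above
  resources:
    requests:
      storage: 100Gi
```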

This solution is based on infrastructure as a service (IaaS) rather than platform as a service (PaaS). You're responsible for managing the NFS server, including OS updates, high availability, backups, disaster recovery, and scalability.

Bring your own keys (BYOK) with Azure disks

Azure Storage encrypts all data in a storage account at rest, including the OS and data disks of an AKS cluster. By default, data is encrypted with Microsoft-managed keys. For more control over encryption keys, you can supply customer-managed keys to use for encryption at rest of the OS and data disks of your AKS clusters. For more information, see:

Azure Container Storage

Azure Container Storage is a cloud-based volume management, deployment, and orchestration service built natively for containers. It integrates with Kubernetes, allowing you to dynamically and automatically provision persistent volumes to store data for stateful applications running on Kubernetes clusters.

Azure Container Storage utilizes existing Azure Storage offerings for actual data storage and offers a volume orchestration and management solution purposely built for containers. Supported backing storage options include:

- Azure Disks: Granular control of storage SKUs and configurations. They are suitable for tier 1 and general purpose databases.

- Ephemeral Disks: Utilizes local storage resources on AKS nodes (NVMe or temp SSD). Best suited for applications with no data durability requirement or with built-in data replication support. AKS discovers the available ephemeral storage on AKS nodes and acquires them for volume deployment.

- Azure Elastic SAN: Provisions on-demand, fully managed storage. Suitable for general purpose databases, streaming and messaging services, CI/CD environments, and other tier 1/tier 2 workloads. Multiple clusters can access a single SAN concurrently; however, a persistent volume can be attached by only one consumer at a time.
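After you create a storage pool, workloads request volumes through the acstor-<storage-pool-name> storage class that Azure Container Storage creates for it. The following claim is a sketch that assumes a pool named `azuredisk`. The pool name, claim name, and size are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: acstor-claim                     # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce                      # one consumer at a time
  storageClassName: acstor-azuredisk     # acstor-<storage-pool-name>, assuming a pool named "azuredisk"
  resources:
    requests:
      storage: 100Gi
```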

Until now, providing cloud storage for containers required using individual container storage interface (CSI) drivers to use storage services intended for infrastructure as a service (IaaS)-centric workloads and make them work for containers. This creates operational overhead and increases the risk of issues with application availability, scalability, performance, usability, and cost.

Azure Container Storage is derived from OpenEBS, an open-source solution that provides container storage capabilities for Kubernetes. By offering a managed volume orchestration solution via microservice-based storage controllers in a Kubernetes environment, Azure Container Storage enables true container-native storage.

Azure Container Storage is suitable in the following scenarios:

Accelerate VM-to-container initiatives: Azure Container Storage surfaces the full spectrum of Azure block storage offerings that were previously only available for VMs and makes them available for containers. This includes ephemeral disk that provides extremely low latency for workloads like Cassandra, as well as Azure Elastic SAN that provides native iSCSI and shared provisioned targets.

Simplify volume management with Kubernetes: By providing volume orchestration via the Kubernetes control plane, Azure Container Storage makes it easy to deploy and manage volumes within Kubernetes - without the need to move back and forth between different control planes.

Reduce total cost of ownership (TCO): Improve cost efficiency by increasing the scale of persistent volumes supported per pod or node. Reduce the storage resources needed for provisioning by dynamically sharing storage resources. Scaling up the storage pool itself isn't supported.

Azure Container Storage provides the following key benefits:

Rapid scale out of stateful pods: Azure Container Storage mounts persistent volumes over network block storage protocols (NVMe-oF or iSCSI), offering fast attach and detach of persistent volumes. You can start small and deploy resources as needed while making sure your applications aren't starved or disrupted, either during initialization or in production. Application resiliency is improved with pod respawns across the cluster, requiring rapid movement of persistent volumes. Using remote network protocols, Azure Container Storage tightly couples with the pod lifecycle to support highly resilient, high-scale stateful applications on AKS.

Improved performance for stateful workloads: Azure Container Storage enables superior read performance and provides near-disk write performance by using NVMe-oF over RDMA. This allows customers to cost-effectively meet performance requirements for various container workloads including tier 1 I/O intensive, general purpose, throughput sensitive, and dev/test. Accelerate the attach/detach time of persistent volumes and minimize pod failover time.

Kubernetes-native volume orchestration: Create storage pools and persistent volumes, capture snapshots, and manage the entire lifecycle of volumes using kubectl commands without switching between toolsets for different control plane operations.

Third-party solutions

Like Amazon EKS, AKS is a Kubernetes implementation, and you can integrate third-party Kubernetes storage solutions. Here are some examples of third-party storage solutions for Kubernetes:

- Rook turns distributed storage systems into self-managing storage services by automating storage administrator tasks. Rook delivers its services via a Kubernetes operator for each storage provider.

- GlusterFS is a free and open-source scalable network filesystem that uses common off-the-shelf hardware to create large, distributed storage solutions for data-heavy and bandwidth-intensive tasks.

- Ceph provides a reliable and scalable unified storage service with object, block, and file interfaces from a single cluster built from commodity hardware components.

- MinIO multicloud object storage lets enterprises build AWS S3-compatible data infrastructure on any cloud, providing a consistent, portable interface to your data and applications.

- Portworx is an end-to-end storage and data management solution for Kubernetes projects and container-based initiatives. Portworx offers container-granular storage, disaster recovery, data security, and multicloud migrations.

- Quobyte provides high-performance file and object storage you can deploy on any server or cloud to scale performance, manage large amounts of data, and simplify administration.

- Ondat delivers a consistent storage layer across any platform. You can run a database or any persistent workload in a Kubernetes environment without having to manage the storage layer.

Kubernetes storage considerations

Consider the following factors when you choose a storage solution for Amazon EKS or AKS.

Storage class access modes

In Kubernetes version 1.21 and newer, AKS and Amazon EKS storage classes use Container Storage Interface (CSI) drivers only and by default.

Different services support storage classes that have different access modes.

| Service | ReadWriteOnce | ReadOnlyMany | ReadWriteMany |
|---|---|---|---|
| Azure Disks | X | | |
| Azure Files | X | X | X |
| Azure NetApp Files | X | X | X |
| NFS server | X | X | X |
| Azure HPC Cache | X | X | X |

Dynamic vs static provisioning

Dynamically provision volumes to reduce the management overhead of statically creating persistent volumes. Set a correct reclaim policy to avoid leaving unused disks behind when you delete persistent volume claims.

Backup

Choose a tool to back up persistent data that matches your storage type, such as snapshots, Azure Backup, Velero, or Kasten.

Cost optimization

To optimize Azure Storage costs, use Azure reservations. Make sure to check which services support Azure Reservations. Also see Cost management for a Kubernetes cluster.

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal authors:

- Paolo Salvatori | Principal System Engineer

- Laura Nicolas | Senior Cloud Solution Architect

Other contributors:

- Chad Kittel | Principal Software Engineer

- Ed Price | Senior Content Program Manager

- Theano Petersen | Technical Writer


Next steps

- AKS for Amazon EKS professionals

- Kubernetes identity and access management

- Kubernetes monitoring and logging

- Secure network access to Kubernetes

- Cost management for Kubernetes

- Kubernetes node and node pool management

- Cluster governance