Add and manage an event source in an eventstream

After you create an eventstream, you can connect it to various data sources and destinations.

Note

Enhanced capabilities are now enabled by default when you create an eventstream. Eventstreams that were created with standard capabilities continue to work, and you can still edit and use them as usual. We recommend that you create a new eventstream to replace each standard eventstream so that you can take advantage of the additional capabilities and benefits of enhanced eventstreams.

Eventstream not only allows you to stream data from Microsoft sources, but also supports ingestion from third-party platforms like Google Cloud and Amazon Kinesis with new messaging connectors. This expanded capability offers seamless integration of external data streams into Fabric, providing greater flexibility and enabling you to gain real-time insights from multiple sources.

In this article, you learn about the event sources that you can add to an eventstream.

Prerequisites

- Access to a workspace in the Fabric capacity license mode or the Trial license mode, with Contributor or higher permissions.

- Prerequisites specific to each source that are documented in the following source-specific articles.

Supported sources

Fabric event streams with enhanced capabilities support the following sources. Each article provides details and instructions for adding specific sources.

| Sources | Description |
|---|---|
| Azure Event Hubs | If you have an Azure event hub, you can ingest event hub data into Microsoft Fabric using Eventstream. |
| Azure IoT Hub | If you have an Azure IoT hub, you can ingest IoT data into Microsoft Fabric using Eventstream. |
| Azure SQL Database Change Data Capture (CDC) | The Azure SQL Database CDC source connector allows you to capture a snapshot of the current data in an Azure SQL database. The connector then monitors and records any future row-level changes to this data. |
| PostgreSQL Database CDC | The PostgreSQL Database Change Data Capture (CDC) source connector allows you to capture a snapshot of the current data in a PostgreSQL database. The connector then monitors and records any future row-level changes to this data. |
| MySQL Database CDC | The Azure MySQL Database Change Data Capture (CDC) source connector allows you to capture a snapshot of the current data in an Azure Database for MySQL database. You can specify the tables to monitor, and the eventstream records any future row-level changes to the tables. |
| Azure Cosmos DB CDC | The Azure Cosmos DB Change Data Capture (CDC) source connector for Microsoft Fabric event streams lets you capture a snapshot of the current data in an Azure Cosmos DB database. The connector then monitors and records any future row-level changes to this data. |
| SQL Server on virtual machine (VM) Database (DB) CDC | The SQL Server on VM DB (CDC) source connector for Fabric event streams allows you to capture a snapshot of the current data in a SQL Server database on a VM. The connector then monitors and records any future row-level changes to the data. |
| Azure SQL Managed Instance CDC | The Azure SQL Managed Instance CDC source connector for Microsoft Fabric event streams allows you to capture a snapshot of the current data in a SQL Managed Instance database. The connector then monitors and records any future row-level changes to this data. |
| Google Cloud Pub/Sub | Google Pub/Sub is a messaging service that enables you to publish and subscribe to streams of events. You can add Google Pub/Sub as a source to your eventstream to capture, transform, and route real-time events to various destinations in Fabric. |
| Amazon Kinesis Data Streams | Amazon Kinesis Data Streams is a massively scalable, highly durable data ingestion and processing service optimized for streaming data. By integrating Amazon Kinesis Data Streams as a source within your eventstream, you can seamlessly process real-time data streams before routing them to multiple destinations within Fabric. |
| Confluent Cloud Kafka | Confluent Cloud Kafka is a streaming platform offering powerful data streaming and processing functionalities using Apache Kafka. By integrating Confluent Cloud Kafka as a source within your eventstream, you can seamlessly process real-time data streams before routing them to multiple destinations within Fabric. |
| Amazon MSK Kafka | Amazon MSK Kafka is a fully managed Kafka service that simplifies the setup, scaling, and management of Kafka clusters. By integrating Amazon MSK Kafka as a source within your eventstream, you can seamlessly bring real-time events from your MSK Kafka cluster and process them before routing them to multiple destinations within Fabric. |
| Sample data | You can choose Bicycles, Yellow Taxi, or Stock Market events as a sample data source to test the data ingestion while setting up an eventstream. |
| Custom endpoint (that is, Custom App in standard capabilities) | The custom endpoint feature allows your applications or Kafka clients to connect to Eventstream using a connection string, enabling the smooth ingestion of streaming data into Eventstream. |
| Azure Service Bus (preview) | You can ingest data from an Azure Service Bus queue or a topic's subscription into Microsoft Fabric using Eventstream. |
| Apache Kafka (preview) | Apache Kafka is an open-source, distributed platform for building scalable, real-time data systems. By integrating Apache Kafka as a source within your eventstream, you can seamlessly bring real-time events from your Apache Kafka cluster and process them before routing them to multiple destinations within Fabric. |
| Azure Blob Storage events (preview) | Azure Blob Storage events are triggered when a client creates, replaces, or deletes a blob. The connector allows you to link Blob Storage events to Fabric events in Real-Time hub. You can convert these events into continuous data streams and transform them before routing them to various destinations in Fabric. |
| Fabric Workspace Item events (preview) | Fabric Workspace Item events are discrete Fabric events that occur when changes are made to your Fabric workspace. These changes include creating, updating, or deleting a Fabric item. With Fabric event streams, you can capture these Fabric workspace events, transform them, and route them to various destinations in Fabric for further analysis. |
| Fabric OneLake events (preview) | OneLake events allow you to subscribe to changes in files and folders in OneLake, and then react to those changes in real time. With Fabric event streams, you can capture these OneLake events, transform them, and route them to various destinations in Fabric for further analysis. This seamless integration of OneLake events within Fabric event streams gives you greater flexibility for monitoring and analyzing activities in your OneLake. |
| Fabric Job events (preview) | Job events allow you to subscribe to changes produced when Fabric runs a job. For example, you can react to changes when refreshing a semantic model, running a scheduled pipeline, or running a notebook. Each of these activities can generate a corresponding job, which in turn generates a set of corresponding job events. With Fabric event streams, you can capture these Job events, transform them, and route them to various destinations in Fabric for further analysis. This seamless integration of Job events within Fabric event streams gives you greater flexibility for monitoring and analyzing your jobs. |
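
The CDC connectors in the table share one pattern: capture a snapshot of the current data, then record each later row-level change. The following Python sketch illustrates that pattern only. The event shape (`op`, `key`, `row`) and the `apply_changes` helper are hypothetical and chosen for illustration; they aren't the format that the Fabric connectors emit, so check the payload of your own source before relying on any field name.

```python
from typing import Any

# Hypothetical change-event shape, for illustration only:
#   {"op": "insert" | "update" | "delete", "key": <primary key>, "row": {...} or None}
ChangeEvent = dict[str, Any]


def apply_changes(snapshot: dict[int, dict], events: list[ChangeEvent]) -> dict[int, dict]:
    """Replay row-level change events on top of an initial snapshot."""
    # Copy the snapshot so the caller's data isn't mutated.
    state = {key: dict(row) for key, row in snapshot.items()}
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            state[key] = dict(event["row"])  # new or replaced row image
        elif op == "delete":
            state.pop(key, None)  # ignore deletes for rows that are already gone
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return state


# Initial snapshot of a small table, keyed by primary key.
snapshot = {1: {"name": "Ada", "city": "London"}, 2: {"name": "Lin", "city": "Oslo"}}

# Row-level changes recorded after the snapshot was taken.
events = [
    {"op": "update", "key": 2, "row": {"name": "Lin", "city": "Bergen"}},
    {"op": "insert", "key": 3, "row": {"name": "Sam", "city": "Lima"}},
    {"op": "delete", "key": 1, "row": None},
]

current = apply_changes(snapshot, events)
```

Replaying the events in order on top of the snapshot reproduces the current state of the table, which is why a downstream consumer generally needs both the snapshot and the ordered change stream.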

Note

The maximum number of sources and destinations for one eventstream is 11.

Prerequisites

Before you start with an eventstream that uses standard capabilities, complete the following prerequisites:

- Access to a workspace in the Fabric capacity license mode or the Trial license mode, with Contributor or higher permissions.

- To add an Azure event hub or Azure IoT hub as an eventstream source, you need appropriate permission to access its policy keys. The event hub or IoT hub must be publicly accessible, not behind a firewall or secured in a virtual network.

Supported sources

Fabric event streams with standard capabilities support the following sources. Use links in the table to navigate to articles that provide more details about adding specific sources.

| Sources | Description |
|---|---|
| Azure Event Hubs | If you have an Azure event hub, you can ingest event hub data into Microsoft Fabric using Eventstream. |
| Azure IoT Hub | If you have an Azure IoT hub, you can ingest IoT data into Microsoft Fabric using Eventstream. |
| Sample data | You can choose Bicycles, Yellow Taxi, or Stock Market events as a sample data source to test the data ingestion while setting up an eventstream. |
| Custom App | The custom app feature allows your applications or Kafka clients to connect to Eventstream using a connection string, enabling the smooth ingestion of streaming data into Eventstream. |
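
The Custom App row mentions that Kafka clients can connect by using a connection string. As a rough sketch, the following Python code derives Kafka client settings from such a string. It assumes the connection string uses the Azure Event Hubs format (`Endpoint=sb://...;SharedAccessKeyName=...;SharedAccessKey=...;EntityPath=...`) and applies the settings that Azure Event Hubs documents for its Kafka endpoint (port 9093, `SASL_SSL`, `PLAIN`, and the literal user name `$ConnectionString`). The namespace, key, and entity names are placeholders, and the helper functions are hypothetical; confirm the actual values in the details pane of your own source.

```python
from urllib.parse import urlparse

# Placeholder connection string in the Azure Event Hubs format (not a real key).
EXAMPLE = (
    "Endpoint=sb://example-namespace.servicebus.windows.net/;"
    "SharedAccessKeyName=key_example;"
    "SharedAccessKey=ZmFrZS1rZXk=;"
    "EntityPath=es_example"
)


def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value' pairs into a dictionary."""
    parts: dict[str, str] = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only: base64 keys can end with '='.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key.strip()] = value.strip()
    return parts


def kafka_client_config(conn_str: str) -> dict[str, str]:
    """Build Kafka client settings, assuming an Event Hubs-style Kafka endpoint."""
    parts = parse_connection_string(conn_str)
    host = urlparse(parts["Endpoint"]).hostname
    return {
        "bootstrap.servers": f"{host}:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",  # literal value, not a variable
        "sasl.password": conn_str,  # the full connection string is the password
    }


config = kafka_client_config(EXAMPLE)
topic = parse_connection_string(EXAMPLE)["EntityPath"]  # assumed to be the Kafka topic name
```

The resulting dictionary uses librdkafka-style property names, so it could be passed to a client such as `confluent_kafka.Producer(config)`. That package isn't part of the standard library and isn't imported here.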

Note

The maximum number of sources and destinations for one eventstream is 11.

Manage a source

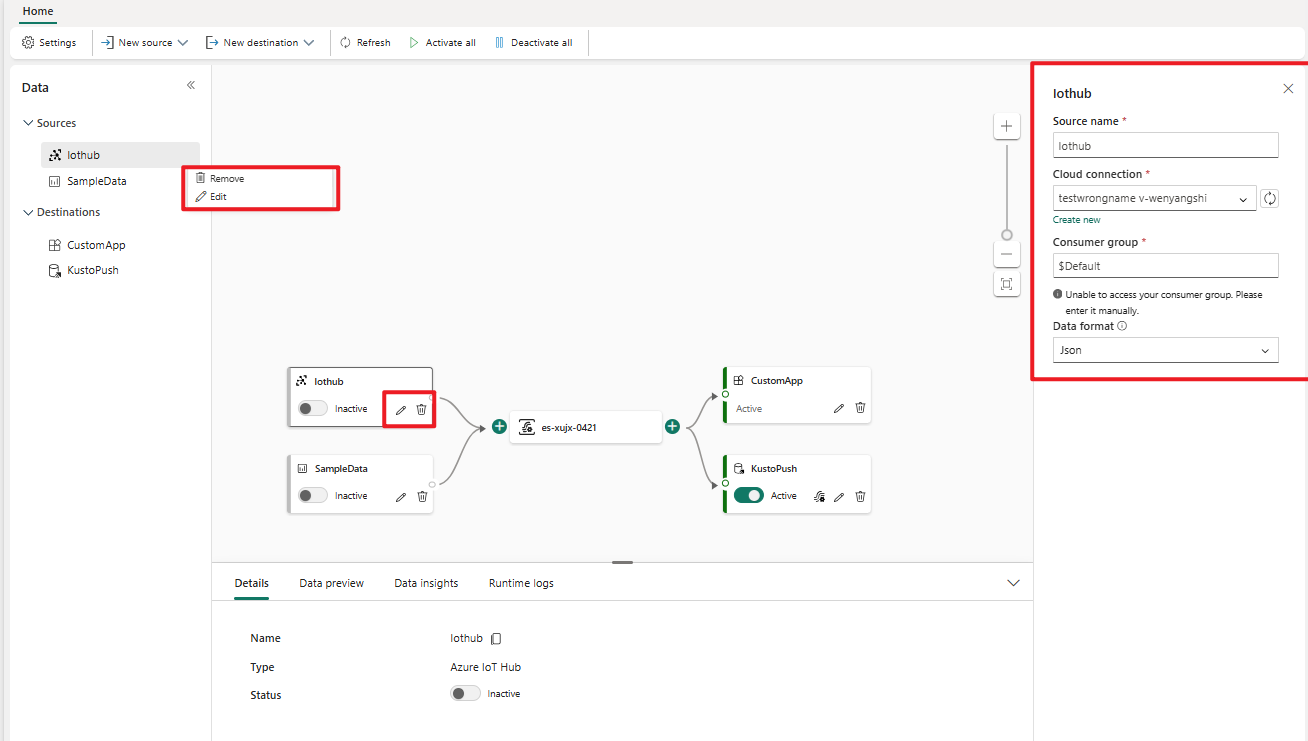

Edit/remove: You can select an eventstream source to edit or remove through either the navigation pane or the canvas. When you select Edit, the edit pane opens on the right side of the main editor.

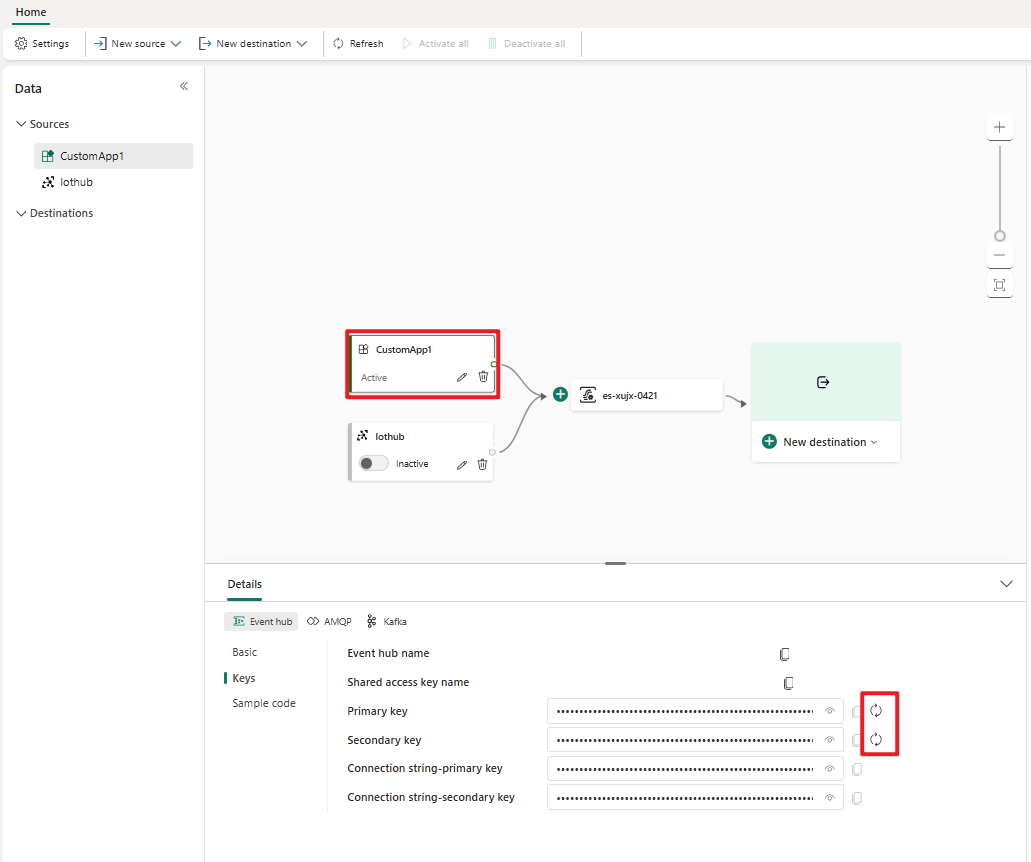

Regenerate key for a custom app: To regenerate the connection key for your application, select one of your custom app sources on the canvas, and then select Regenerate to get a new connection key.