Dataflow refresh

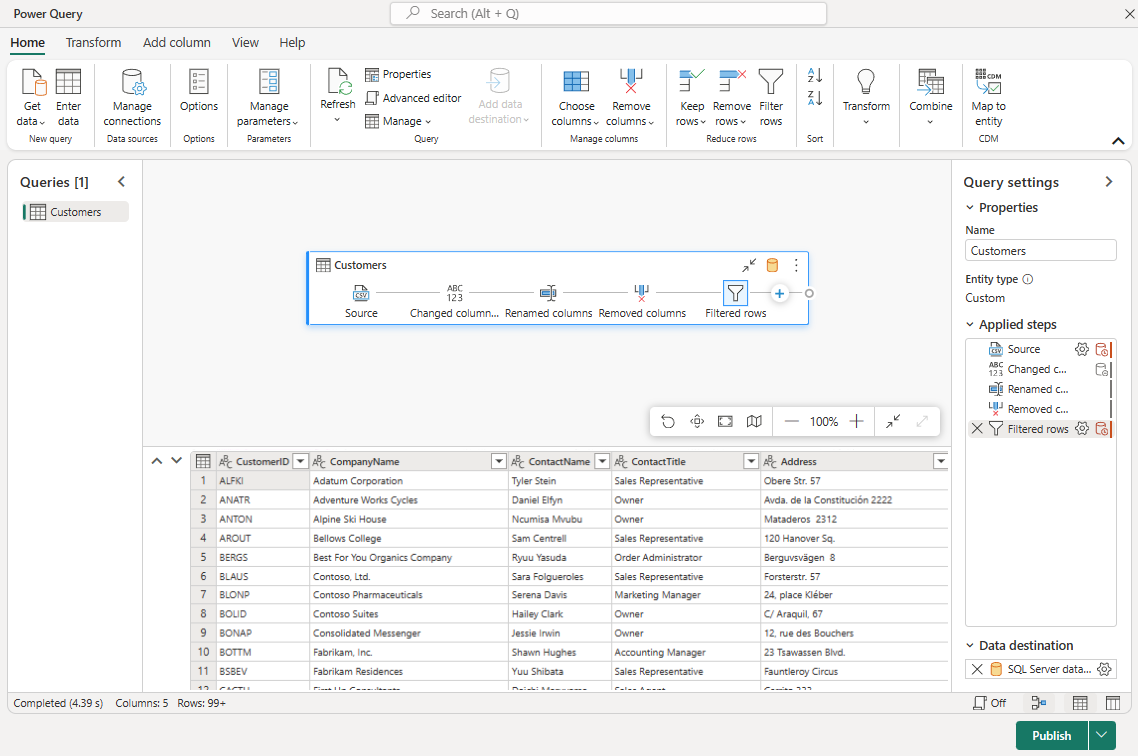

Dataflows enable you to connect to, transform, combine, and load data to storage for downstream consumption. A key element in dataflows is the refresh process, which applies the transformation steps defined during authoring to extract, transform, and load data to the target storage.

A dataflow refresh can be triggered in one of two ways: on demand or on a refresh schedule. A scheduled refresh runs on the days and at the times you specify.

Prerequisites

Here are the prerequisites for refreshing a dataflow:

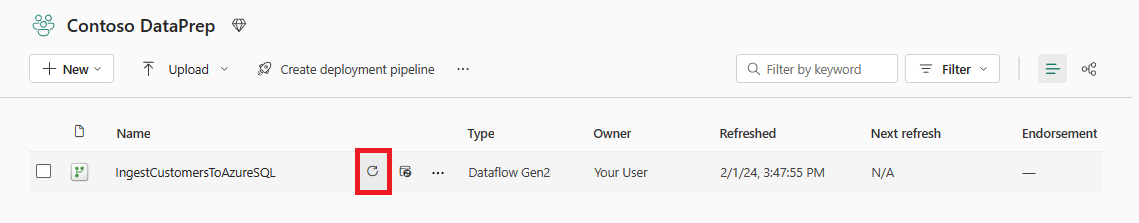

On-demand refresh

To refresh a dataflow on demand, select the Refresh icon in the workspace list view or the lineage view.

An on-demand refresh can also be triggered in other ways. When a dataflow is published successfully, an on-demand refresh starts automatically. You can also trigger an on-demand refresh from a pipeline that contains a dataflow activity.

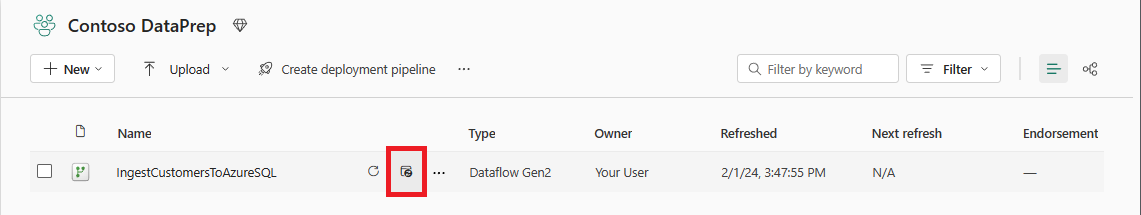

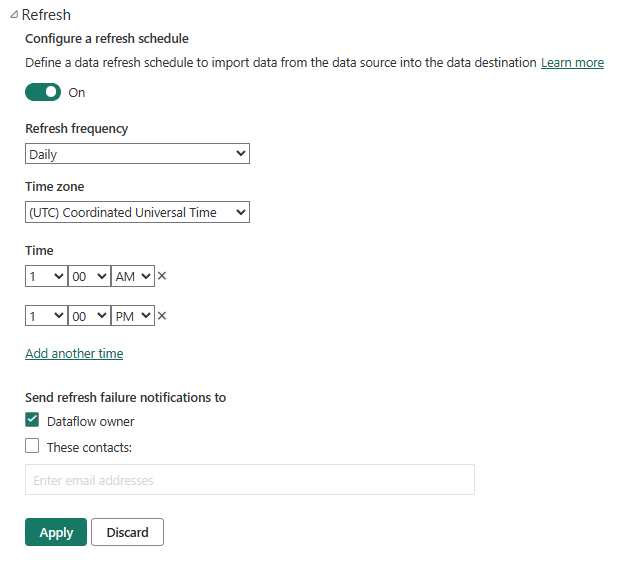

Scheduled refresh

To refresh a dataflow automatically on a schedule, select the Scheduled Refresh icon in the workspace list view.

In the refresh section, you define the frequency and time slots for refreshing a dataflow, up to 48 times per day. The following screenshot shows a daily refresh schedule on a 12-hour interval.

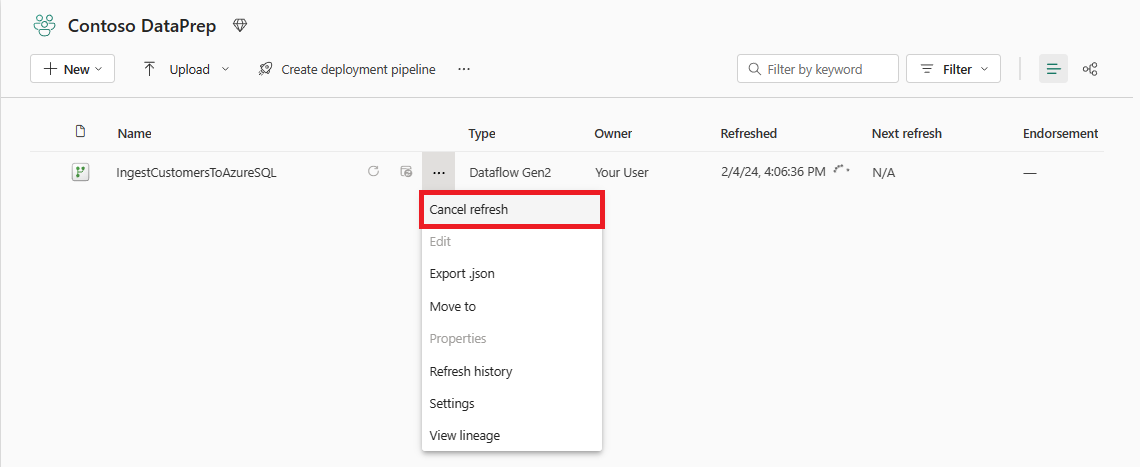
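The relationship between a refresh interval and the number of daily time slots can be sketched in Python. This is a minimal, hypothetical helper (`daily_time_slots` is not part of any Fabric API); it assumes slots start at a given time, repeat at a fixed interval, and don't wrap past midnight, and it rejects schedules that would exceed the 48-per-day limit.

```python
from datetime import time, timedelta

# Documented cap on scheduled refreshes per dataflow per day.
MAX_REFRESHES_PER_DAY = 48


def daily_time_slots(start: time, interval: timedelta) -> list[time]:
    """List refresh times in one day, from `start` every `interval` until midnight.

    Hypothetical helper for illustration only; assumes slots never wrap past midnight.
    """
    step = int(interval.total_seconds() // 60)  # interval in whole minutes
    if step <= 0:
        raise ValueError("interval must be at least one minute")
    minute = start.hour * 60 + start.minute
    slots = []
    while minute < 24 * 60:
        slots.append(time(minute // 60, minute % 60))
        minute += step
    if len(slots) > MAX_REFRESHES_PER_DAY:
        raise ValueError(
            f"{len(slots)} slots exceed the limit of {MAX_REFRESHES_PER_DAY} per day"
        )
    return slots
```

For example, `daily_time_slots(time(0, 0), timedelta(hours=12))` returns `[time(0, 0), time(12, 0)]`, matching a 12-hour interval, while a 30-minute interval produces exactly 48 slots and a 15-minute interval raises `ValueError`.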

Cancel refresh

Canceling a dataflow refresh is useful when you want to stop a refresh during peak hours, when a capacity is nearing its limits, or when a refresh is taking longer than expected.

To cancel a dataflow refresh, select the Cancel icon in the workspace list view or the lineage view for a dataflow whose refresh is in progress.

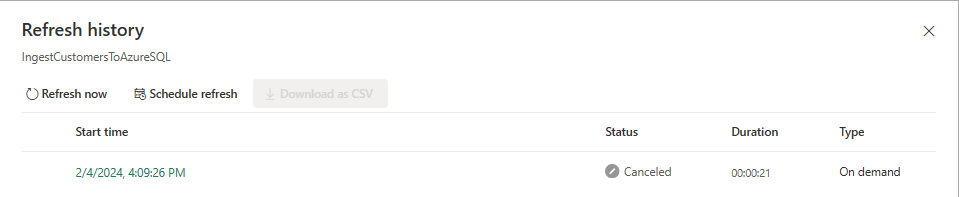

After a dataflow refresh is canceled, its status in the dataflow's refresh history is updated to show that it was canceled.

Refresh limitations

The following limitations apply to dataflow refreshes:

- Each dataflow can have at most 150 refreshes in a rolling 24-hour window. When you exceed this limit, you receive an error in your refresh history, and refreshes resume once you're back below the limit.

- If your scheduled dataflow refresh fails consecutively, we pause your dataflow refresh schedule and send the owner of the dataflow an email. The following rules apply in this case:

  - 72 hours (3 days)
    - 100% failure rate over 72 hours
    - Minimum of 6 refreshes (2 refreshes a day)

  - 168 hours (1 week)
    - 100% failure rate over 168 hours
    - Minimum of 5 refreshes (1 refresh a day)

- A single evaluation of a query has a limit of 8 hours.

- The total duration of a single dataflow refresh is limited to 24 hours.

- Each dataflow can have a maximum of 50 staged queries and queries with an output destination, counted together.
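The rolling-window limit and the automatic pause rules above can be expressed as simple checks over a refresh history. The sketch below is illustrative only: `RefreshRun`, `runs_in_window`, `can_start_refresh`, and `should_pause_schedule` are hypothetical names, and it assumes the history passed to the pause check contains only scheduled refreshes, that either pause rule on its own is enough to pause the schedule, and that the oldest instant of each window is excluded. The service's actual evaluation may differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Documented limit: at most 150 refreshes per dataflow in a rolling 24-hour window.
MAX_REFRESHES_PER_24H = 150

# Documented pause rules as (window length, minimum refreshes in the window).
# Assumption: either rule alone pauses the schedule when every refresh in its window failed.
PAUSE_RULES = [
    (timedelta(hours=72), 6),   # 3 days, 2 refreshes a day
    (timedelta(hours=168), 5),  # 1 week
]


@dataclass
class RefreshRun:
    """One refresh in the history (hypothetical record type)."""
    started: datetime
    succeeded: bool


def runs_in_window(history: list[RefreshRun], now: datetime, window: timedelta) -> list[RefreshRun]:
    """Refreshes that started after `now - window` and no later than `now`."""
    return [r for r in history if now - window < r.started <= now]


def can_start_refresh(history: list[RefreshRun], now: datetime) -> bool:
    """True if the dataflow is still below the rolling 24-hour refresh limit."""
    return len(runs_in_window(history, now, timedelta(hours=24))) < MAX_REFRESHES_PER_24H


def should_pause_schedule(scheduled: list[RefreshRun], now: datetime) -> bool:
    """True if any rule sees a 100% failure rate with at least its minimum number of refreshes."""
    for window, minimum in PAUSE_RULES:
        runs = runs_in_window(scheduled, now, window)
        if len(runs) >= minimum and not any(r.succeeded for r in runs):
            return True
    return False
```

For example, six failed scheduled refreshes 12 hours apart trigger the 72-hour rule, while five daily failures trigger only the 168-hour rule.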

Refresh cancelation implications for output data

A dataflow refresh can stop either because it was canceled or because a failure occurred while processing the dataflow's queries. The outcome depends on the type of destination and on when the refresh stopped. Here are the possible outcomes for the two types of data destination a query can have:

- Query is loading data to staging: Data from the last successful refresh is available.

- Query is loading data to a data destination: Data written up to the point of cancelation is available.

Not all queries in a dataflow are processed at the same time, for example when a dataflow contains many queries or when some queries depend on others. If a refresh is canceled before the evaluation of a query that loads data to a destination begins, the data in that query's destination doesn't change.
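The outcomes described in this section can be summarized as a small per-query decision function. This is a hypothetical model (`Target` and `data_after_stop` are illustrative names, not a Fabric API). It assumes that, for a query loading to a data destination, the only thing that matters is whether its evaluation had begun when the refresh stopped.

```python
from enum import Enum


class Target(Enum):
    """Where a query loads its output (hypothetical labels)."""
    STAGING = "staging"
    DESTINATION = "data destination"


def data_after_stop(target: Target, evaluation_started: bool) -> str:
    """Describe the data available for one query after a refresh is canceled or fails."""
    if target is Target.STAGING:
        # Staged output from an unsuccessful refresh doesn't replace the previous data.
        return "data from the last successful refresh"
    if not evaluation_started:
        # The query never began loading, so its destination is untouched.
        return "unchanged destination data"
    # The destination keeps whatever was written before the refresh stopped.
    return "data written up to the point the refresh stopped"
```

For example, in a dataflow where one destination query was mid-load and another had not started when the refresh was canceled, the first destination holds partially written data and the second is unchanged.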