Case Study: Troubleshooting a Slow Log Shipping Restore job

Scenario Consider a scenario where you have a Log shipping setup with a STANDY secondary database and things are working just fine. One fine day you notice that the secondary database is not in sync with the primary. The seasoned DBA that you are, you go ahead and looked at the log shipping jobs and identify that the restore is taking a lot of time.

The obvious question that come to your mind is whether a lot of transactions have happened recently causing the log backup to be much larger. So you check the folder and see the .TRN file sizes remain pretty much the same. What next?

I will cover some basic troubleshooting that you can do, to identify “why” the restore process is so slow.

To give you a perspective, let’s says that earlier a restore of a 4MB Transaction Log backup used to take less than a minute. Now, it takes about approximately 20-25 minutes.Before I get into troubleshooting, make sure that you have ruled out these factors:-

1. The Log backup size (.TRN) is pretty much the same as it was before.

2. The Disk is not a bottleneck on the secondary server.

3. The Copy job is working just fine and there is no delay here. From the job history you clearly see that Restore is where the time is being spent.

4. The Restore job is not failing and no errors are reported during this time (e.g. Out of Memory etc.).

Troubleshooting

The 1st thing to do to get more information on what the restore is doing is to enable these trace flags

DBCC TRACEON (3004, 3605, -1)

3004 – Gives extended information on backup and restore

3605 – Prints trace information to the error log.

You can read more about these trace flags here à https://blogs.msdn.com/b/psssql/archive/2008/01/23/how-it-works-what-is-restore-backup-doing.aspx

Here is a sample output after I enabled these trace flags. Focus on the specific database which is the secondary database in your log shipping.

<Snippet from SQL Errorlog>

2010-12-29 16:11:19.10 spid64 RestoreLog: Database TESTDB

2010-12-29 16:11:19.10 spid64 X-locking database: TESTDB

2010-12-29 16:11:19.10 spid64 Opening backup set

2010-12-29 16:11:19.10 spid64 Restore: Configuration section loaded

2010-12-29 16:11:19.10 spid64 Restore: Backup set is open

2010-12-29 16:11:19.10 spid64 Restore: Planning begins

2010-12-29 16:11:19.12 spid64 Dismounting FullText catalogs

2010-12-29 16:11:19.12 spid64 Restore: Planning complete

2010-12-29 16:11:19.12 spid64 Restore: BeginRestore (offline) on TESTDB

2010-12-29 16:11:19.12 spid64 Restore: Undoing STANDBY for TESTDB

2010-12-29 16:11:23.46 spid64 SnipEndOfLog from LSN: (296258:29680:1)

2010-12-29 16:11:23.46 spid64 Zeroing D:\SQL\SQLLog\TESTDB.ldf from page 2492695 to 2492738 (0x4c122e000 to 0x4c1284000)

2010-12-29 16:11:23.46 spid64 Zeroing completed on D:\SQL\SQLLog\TESTDB.ldf

2010-12-29 16:11:23.46 spid64 Restore: Finished undoing STANDBY for TESTDB

2010-12-29 16:11:23.51 spid64 Restore: PreparingContainers

2010-12-29 16:11:23.51 spid64 Restore: Containers are ready

2010-12-29 16:11:23.51 spid64 Restore: Restoring backup set

2010-12-29 16:11:23.51 spid64 Restore: Transferring data to TESTDB

2010-12-29 16:11:23.51 spid64 Restore: Waiting for log zero on TESTDB

2010-12-29 16:11:23.51 spid64 Restore: LogZero complete

2010-12-29 16:11:24.24 spid64 FileHandleCache: 0 files opened. CacheSize: 10

2010-12-29 16:11:24.24 spid64 Restore: Data transfer complete on TESTDB

2010-12-29 16:11:24.24 spid64 Restore: Backup set restored

2010-12-29 16:11:24.24 spid64 Restore-Redo begins on database TESTDB

2010-12-29 16:11:25.69 spid64 Rollforward complete on database TESTDB

2010-12-29 16:11:25.69 spid64 Restore: Done with fixups

2010-12-29 16:11:25.74 spid64 Transitioning to STANDBY

2010-12-29 16:11:26.74 spid64 Starting up database 'TESTDB'.

2010-12-29 16:11:26.76 spid64 The database 'TESTDB' is marked RESTORING and is in a state that does not allow recovery to be run.

2010-12-29 16:11:27.63 spid64 FixupLogTail() zeroing S:\SQLServer\SQLLog\TESTDB.ldf from 0x4c1769400 to 0x4c176a000.

2010-12-29 16:11:27.63 spid64 Zeroing D:\SQL\SQLLog\TESTDB.ldf from page 2493365 to 2493425 (0x4c176a000 to 0x4c17e2000)

2010-12-29 16:11:27.65 spid64 Zeroing completed on D:\SQL\SQLLog\TESTDB.ldf

2010-12-29 16:24:30.55 spid64 Recovery is writing a checkpoint in database 'TESTDB' (5). This is an informational message only. No user action is required.

2010-12-29 16:24:35.43 spid64 Starting up database 'TESTDB'.

2010-12-29 16:24:39.10 spid64 CHECKDB for database 'TESTDB' finished without errors on 2010-12-21 23:31:25.493 (local time). This is an informational message only; no user action is required.

2010-12-29 16:24:39.10 spid64 Database is in STANDBY

2010-12-29 16:24:39.10 spid64 Restore: Writing history records

2010-12-29 16:24:39.10 Backup Log was restored. Database: TESTDB, creation date(time): 2008/01/26(09:32:02), first LSN: 296258:29680:1, last LSN: 298258:40394:1, number of dump devices: 1, device information: (FILE=1, TYPE=DISK: {'S:\SQL\SQLLogShip\TESTDB\TESTDB_20101229011500.trn'}). This is an informational message. No user action is required.

2010-12-29 16:24:39.12 spid64 Writing backup history records

2010-12-29 16:24:39.21 spid64 Restore: Done with MSDB maintenance

2010-12-29 16:24:39.21 spid64 RestoreLog: Finished

</Snippet>

From the above output we see that the restore took ~13 minutes. If you look closely at the output the section highlighted in green is where most of the time is spent.

Now when we talk about log restores, the number of VLF’s play a very important factor. More about the effect of VLF’s vs. Restore Time given here à https://blogs.msdn.com/b/psssql/archive/2009/05/21/how-a-log-file-structure-can-affect-database-recovery-time.aspx

Bottom line is that a large number of virtual log files (VLFs) can slow down transaction log restores. To find out if this is the case here, use the following command

DBCC LOGINFO (TESTDB) WITH NO_INFOMSGS

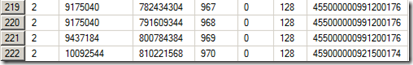

The following information can be deciphered from the above output :-

1. The number of rows returned in the above output is the number of VLF’s.

2. The number of VLF’s that had to be restored in this log backup can be calculated from the section highlighted in blue (above).

first LSN: 296258:29680:1, last LSN: 298258:40394:1

298258 – 296258 = 2000 VLF’s

3. The Size of each VLF can be calculated based on the FileSize column.

9175040 – 8.75 MB

9437184 – 9 MB

10092544 – 9.62 MB

Problem (s)

So based on the above there are two possibilities,

1. The number of VLF’s is rather large which we know will impact restore performance.

2. The size of each VLF is large is cause for concern if STANDY mode is in effect.

The 2nd problem is aggravated if there are batch jobs or long-running transactions that span multiple backups (e.g. Rebuild Indexes). In this case the work of repeatedly rolling back the long-running transaction, writing the rollback work to the standby file (TUF file), then undoing all the rollback work with the next log restore just to start the process over again can easily cause a log shipping secondary to get behind.

While we are talking about the TUF file, I know many people out there are not clear on what this is used for. So here goes,

What is the Transaction Undo File (TUF)?

This file contains information on any modifications that were made as part of incomplete transactions (uncommitted transactions and is used to save the contents of these pages) at the time the backup was performed. A transaction undo file is required if a database is loaded in read-only state (STANDY mode option in LS). In this state, further transaction log backups may be applied.

In the standby mode (which we have for secondary database), database recovery is done when the log is restored and this mode also creates a file with the extension .TUF (which is the transaction Undo file on the destination server). That is why in this mode we will be able to access the databases (Read-Only access). So before the next TLOG backup is applied, the saved changes in the undo file are reapplied to the database. Since this is in STANDBY mode, for any large transactions, the restore process also does the work of writing the rollback to the standby file (TUF), so we might be spending time initializing the whole virtual log.

Solution 1

You need to reduce the number of VLF’s. You can do this by running DBCC SHRINKFILE to reduce the ldf (s) to a small size, thereby reducing the number of VLFs. Note: You need to do this on the Primary database.

After the shrink is complete verify the VLF’s have reduced by running DBCC LOGINFO again. A good range would be somewhere between 500-1000. Resize the log file to the desired size using a single growth operation. You can do this by tweaking the Initial Size setting, also pay attention to the Auto-Growth setting for the LDF file. Setting it too small a value can lead to too many VLF’s.

ALTER DATABASE DBNAME MODIFY FILE (NAME='ldf_logical_name', SIZE=<target>MB)

Also remember that you still have to first apply the pending Log backups before we get to the one which holds the shrink operation. Once we reach this then you can measure the restore time to see if the changes above had a positive impact.

Solution 2

For problem 2 where the size of the VLF’s is causing havoc with the STANDBY mode, you will have to truncate the transaction log. This means that log shipping has to be broken.

You can truncate the TLOG on the source database by setting the recovery model to SIMPLE (using ALTER DATABASE command). If on SQL 2005 or lower versions you can use the BACKUP LOG DBNAME with TRUNCATE_ONLY command.

Then make modifications to the Log file Auto-Grow setting to an appropriate value. Pay attention to the value you set here such that it is not too high, else transactions will have to wait while the file is being grown or too low that it creates too many VLF’s. Take a full database backup immediately and use this to re-setup the log shipping.

Tip: You can use the DBCC SQLPERF(LOGSPACE) command to find out what percent of your log file is used.

I hope this information was helpful in demystifying log shipping restore troubleshooting. As always, stay tuned for more on this blog.

Regards,

Sudarshan Narasimhan

Technical Lead, Microsoft SQL Server CSS

Comments

Anonymous

January 08, 2011

Thanks very much for yet another great blog entry. As a result of the clear explanations presented here I am gaining a deeper appreciation of the what SQL Server is actually doing when I'm conducting my daily DBA duties.Anonymous

January 28, 2011

How about if you are log shipping in standby-mode with precidence given to user connections? Where do you look to get a handle on the interference between updates and users?Anonymous

February 13, 2011

Lynda, If you have LS with standby mode, then the secondary database is in Read-only mode, which means only SELECT statements will be able to run on the secondary server/db. So there will be no interference (I take it you mean blocking/locks) with the restore process IF you have this option enabled for the restore process (disconnect users from the secondary database while the restore operation is underway). So, when the restore runs, the read-only users will be kicked out automatically by the restore. HTH.Anonymous

March 13, 2011

Thanks very much for this blog entryAnonymous

October 10, 2011

Nice post Sudarshan, really interesting stuff here. I have one question: I'm confronting with the same situation but with a different cause: For a 300MB trn log for a database ~600GB, the restore job with Standby takes around 35 minutes to complete. Most of this time is spent on this operation "Rollforward complete on database abc". Can you give some hints about this? ThanksAnonymous

October 12, 2013

Great work bro.. thanks a lot for explanationAnonymous

February 14, 2014

I have the same symptoms noted above, user complains they're never able to connect and do selects because database is always in restoring mode. Yet all on the surface seems well.Anonymous

December 04, 2014

Thanks! This has resolved a major issue for one of my customers. And we have been blaming storage team for over a year ;)Anonymous

December 09, 2014

Great One I Learned One New thing todayAnonymous

March 18, 2015

very nice post, thank you. This solved my problemAnonymous

September 09, 2015

Hi Balmukund Thanks for your support, 1st solution is works for me. :-)Anonymous

September 11, 2015

very nice cast study.. it educates in following things.. VLF, DB AUtogrowth parameter Restore operation trace flagsAnonymous

October 06, 2015

Thanks Actually A deeper section of logs very good ................- Anonymous

August 19, 2016

VERY GOOD EXPLANATION .

- Anonymous

Anonymous

November 17, 2015

What else besides VLF can cause this? We have 40 LOG SHIPPED DBs in STAND BY mode. One day restore time per log went up 15 times for ALL DBs simultaneously. I am looking at smallest DB with 12 VLFs and no trans in the log backup, it still takes "long" time. Any suggestions highly appreciated.Anonymous

August 19, 2016

Thanks for posting excelent information balmukand