Microsoft and SAP NetWeaver - step by step up to the cloud - Part III : some thoughts about automated Windows patching

Microsoft Private Cloud for SAP NetWeaver

Automated Windows Patching in a Microsoft Private Cloud for SAP

High-level Overview

First let's describe the basic idea, which is pretty simple. The following four screenshots give a very brief overview. Details are described in the sections further down in this blog.

System Center Orchestrator 2012 was chosen as the workflow engine to control the automated patch process.

Powershell was the tool of choice to implement the patch process itself for three reasons :

- it's easy to call Powershell out of Orchestrator 2012 and there are cmdlets available to manage a failover cluster

- Microsoft partner connmove offers "Easycloud" - a cmdlet based interface to SAP

- Powershell will be essential for administration on Windows Server Core installations

The main goal was to build a sample where one just has to click the run button of an Orchestrator 2012 runbook to start a fully automated OS patch process on both VMs of a Hyper-V guest failover cluster where a SAP High-Availability installation is running :

1. a SAP HA installation ( ASCS failover cluster ) on SQL Server ( again a failover cluster installation ) was done on two

virtual machines in form of a guest failover cluster ( failover cluster manager inside the VMs )

2. two dialog instances were added - one on each of the VMs - which are not part of the failover cluster. The assumption is

that in reality HA for dialog instances is in most cases achieved by installing enough of them on different servers

3. the blog will not provide a ready-to-use and officially supported solution which customers can download and install. It just gives an idea how fully automated Windows OS patching could work in a virtualized environment as one piece of a bigger private cloud picture

4. Orchestrator 2012 ( workflow engine of System Center 2012 ) was installed on a VM outside of the failover cluster to start the Windows OS patch process inside the cluster VMs and minimize downtime for the SAP users

5. using a Windows failover cluster minimizes downtime not only for unplanned incidents but also for planned activities like OS patching. As the sample scenario also requires a failover of the database there will be some downtime. It cannot be zero. Therefore it still makes sense to schedule the task for times with low workload levels

6. the fundamental workflow of the patch process is straightforward :

a, specify the name of the failover cluster and a filename where the node names will be saved

b, the Powershell scripts should start automatically on each of the two nodes of the failover cluster

( scripts as well as connmove 3rd-party tool were installed as a prerequisite on both cluster node VMs )

c, SAP dialog instances should be stopped and SAP / SQL Server cluster groups should be moved to the other node

d, OS patches should be installed

e, all resources which were running on the patched node before should be started again

f, at the end everything should look the same - just with an updated OS in both VMs of the failover cluster

7. the four screenshots below should give a first impression of what the patch process workflow looks like. Details will be explained in the walkthrough chapter further down

Conclusion :

it was possible to achieve the goal and create a sample which automatically installed Windows OS patches on the two nodes ( VMs ) of a guest failover cluster. But it would have been too complex for a single blog to cover all potential scenarios. Therefore it should be considered a collection of ideas and not a ready-to-use tool. What is described in the blog leaves room for individual enhancements/adaptations which will be necessary in real-life projects.

Figure 1 : SAP High-Availability installation on a Windows/Hyper-V - SQL Server guest failover cluster.

"Guest failover cluster" means that the failover cluster manager runs inside the two VMs

which represent the cluster. There are two cluster groups - one for SAP and one for

SQL Server.

Figure 2 : the minimum which has to be specified for the Orchestrator sample runbook are two

variables : the name of the cluster and a filename to store the node names of the cluster

Figure 3 : Orchestrator runbook 1 figures out which nodes belong to the cluster and stores the info

in a table in the Orchestrator database. Then it selects these two node names out of the

database and invokes a second runbook for every node which does the actual OS patching

Figure 4 : the second Orchestrator runbook simply calls the "OS patch script" on a node. The script first

stops SAP dialog instances, moves cluster groups away, does the OS patching and then

restarts everything again

Intro

Part II of the blog series described how a SAP dialog instance could be installed fully automated using Orchestrator 2012 - the workflow engine in the System Center 2012 suite. Besides all the new and fancy stuff related to private clouds there are also some well-known routine jobs which have to be managed.

And one of these routine jobs is Windows Patching. Over the years Microsoft improved the patching experience and related

tools and customers as well as partners came up with processes, procedures and solutions to handle this topic very efficiently.

This blog will again focus on the aspect of "automation" in a private cloud environment and show a way how it could be

implemented.

The basic approach is pretty clear :

1. on the server where the Windows patches should be installed the applications have to be stopped in some way. An

alternative in some cases could be that a new server will be provisioned ( e.g. creating a new VM from a template )

2. Windows patches will be applied

3. applications will be re-started in some way or newly installed. A good example for the latter case would be to add a new

SAP dialog instance as described in blog 2 of the series based on a VM template which is kept up-to-date regarding

Windows patches and just throw away the old one

The key question is how these three steps could be implemented to automate the whole process as much as possible and at the same time reduce the downtime of the whole SAP system from a user perspective to an absolute minimum. Looking into the future we expect full virtualization of SAP landscapes including the DB server. This means distinguishing between two scenarios :

a, installation of Windows patches on the physical host

b, installation of Windows patches on the guest OS within a virtual machine

Due to virtualization new options are available to handle the Windows patching on the physical servers like :

- live migration

https://technet.microsoft.com/en-us/library/dd446679(v=ws.10)

https://www.microsoft.com/en-us/download/details.aspx?id=12601

https://blogs.msdn.com/b/clustering/archive/2009/02/19/9433146.aspx

- quick storage migration

https://blogs.technet.com/b/virtualization/archive/2009/06/25/system-center-virtual-machine-manager-2008-r2-quick-storage-migration.aspx

https://social.technet.microsoft.com/wiki/contents/articles/239.aspx

https://technet.microsoft.com/en-us/library/dd759249.aspx

In the future things will change using CAU - Cluster Aware Updating :

https://technet.microsoft.com/de-de/library/hh831694.aspx


To fully cover the physical host scenario is out of the scope of this blog. The main topic of this blog is the automation of

Windows patches within the guest OS of the virtual machines which belong to a SAP landscape :

The best way today on Hyper-V to automate Windows patching and reduce downtime within the VMs is guest clustering :

https://blogs.technet.com/b/mghazai/archive/2009/12/12/hyper-v-guest-clustering-step-by-step-guide.aspx

https://blogs.technet.com/b/schadinio/archive/2010/08/02/virtualization-guest-failover-clustering-with-hyper-v.aspx

https://technet.microsoft.com/en-us/library/cc732181(v=ws.10).aspx

The idea is then to do a SQL Server as well as a SAP failover cluster installation on top of the guest clustering setup.

These are well-known procedures which customers and partners have been practicing for many years :

https://blogs.msdn.com/b/pamitt/archive/2010/11/06/sql-server-clustering-best-practices.aspx

https://sqlserver.in/blog/index.php/archive/sql-server-2008-clustering-step-by-step/

https://www.sdn.sap.com/irj/scn/go/portal/prtroot/docs/library/uuid/80bde77a-3f66-2d10-d390-a3c44e58f256?QuickLink=index&overridelayout=true&48438641349409

https://www.sdn.sap.com/irj/scn/go/portal/prtroot/docs/library/uuid/a0b86598-35c5-2b10-4bad-890edeb4c8f2?QuickLink=index&overridelayout=true&37443524944380

https://www.sapteched.com/sapvweek/presentation/TECH08/TECH08.pdf

Automated Windows Patching in a Microsoft Private Cloud for SAP

1. How to handle SAP specific tasks in the OS patch process via Powershell ?

All of the above leaves open the question of how to automate the Windows patching task within the VMs by triggering a failover of the cluster resources, installing the OS patches and finally moving the cluster resources back. Looking around on the Internet one will already find Powershell examples which do this, like this one :

https://practicalpowershell.com/2012/01/07/cluster-reboot/

But in a virtualized SAP landscape or SAP private cloud there will/might be some additional SAP specific items which have

to be considered besides a simple move of cluster groups. Administrators around the world implemented processes to

optimize the OS patching. The purpose of this blog is to present some additional ideas to drive automation to the next level.

No doubt - Powershell is the way to go. But what about the SAP specific stuff mentioned before ? Here are three simple

examples :

a, there might be a SAP dialog instance on the node of a failover cluster which is not part of a cluster group and just has to

be stopped and restarted after the OS patch. How could this be done via Powershell ?

b, what if a SAP system message should be generated automatically before shutting down a SAP dialog instance ?

c, what if a dialog instance should be taken out of a SAP logon group before the final shutdown ?

To make life easier and give an idea of what a good solution could look like, a SAP Powershell interface called "Easycloud" from Microsoft partner connmove in Germany was used. This collection of cmdlets fills the gap and makes it very easy to connect to the SAP system via Powershell. connmove just started to offer an evaluation copy of "Easycloud" for download :

https://connmove.eu/en/software/downloads/

https://connmove.eu/en/software/easycloud/

https://connmove.eu/en/solutions/it-automatization/

As mentioned at the beginning of the blog - Orchestrator 2012 is a great workflow engine to create so-called runbooks which

can then be used to put the Powershell scripts into a workflow to control the whole process. OS patching on the physical host

and also the OS patching inside the VMs of a guest failover cluster can be managed out of System Center Orchestrator 2012.

2. How should the OS patch be installed via Powershell ?

The OS patch itself will be usually done via WSUS or SCCM. The link to the article above shows the SCCM usage. This blog

will show how to get everything done with WSUS. Again one will find examples for Powershell access to WSUS like this one :

https://www.gregorystrike.com/2011/04/07/force-windows-automatic-updates-with-powershell/

As Windows OS patching is an important and critical task Microsoft partner connmove also integrated WSUS OS updates into

their Easycloud SAP Powershell interface which reduces the complexity of the whole process quite a bit

( https://connmove.eu/en/solutions/it-automatization/ ).

The WSUS console for the sample was configured/installed on the same VM where Orchestrator was installed. The idea is to give the WSUS server Internet access to download OS updates but not to allow the VMs of the failover cluster direct Internet access. The VMs will connect to the local WSUS server and get the OS updates from there. The walkthrough chapter below includes a separate section regarding the WSUS configuration.

Walkthrough

The walkthrough chapter has four parts :

1. Detailed explanation of the Orchestrator 2012 runbook sample

2. Miscellaneous items which show some additional options for extending the solution

3. Some info about the WSUS setup used for this test environment

4. Powershell test scripts which were used for the sample

1. Detailed explanation of the Orchestrator 2012 runbook sample

Figure 5 : a full SAP ASCS HA ( guest failover cluster on Hyper-V ) installation was done

Figure 6 : the underlying SQL Server installation is a cluster installation too

Figure 7 : corresponding cluster disks for SAP and SQL Server can be seen here

Figure 8 : two variables were defined in Orchestrator runbook designer to parameterize the process :

a, the virtual name of the failover cluster ( has a static IP address )

b, a filename to store the info about the two nodes of the cluster

Figure 9 : here one can see all the activities of the first runbook :

a, do a remote WMI call using the virtual cluster name to get information about the cluster nodes

b, store the output of the WMI call in a file on the local Orchestrator machine

c, call a Powershell script which extracts the node names from the file and writes them

into a table in the Orchestrator database

d, query the Orchestrator database to get the cluster node names

e, for every row coming from the DB query ( one row per cluster node name ) invoke the

second runbook which will initiate the OS patch process

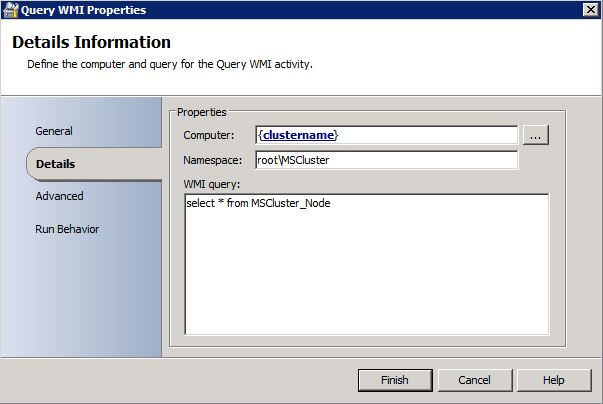

Figure 10 : the WMI activity is very easy to use. The runbook variable containing the virtual cluster

name was used as the computer name. The simple query on namespace "root\MSCluster"

returns information about the two cluster nodes ( including their hostnames )

Figure 11 : the WMI query output will be written to a file on the local machine where Orchestrator is

installed

Figure 12 : now the first Powershell script will be called. The script is also stored on the local

Orchestrator box. It has two parameters : the virtual name of the guest failover cluster

and the filename where the WMI query output was saved.

The script source can be seen at the end of this blog in section 4 of the walkthrough

Figure 13 : a simple table with three columns was created in the Orchestrator database to store the

node name information. The Powershell script inserted in fact two rows :

row1 : virtual cluster name, name node 1, name node 2

row2 : virtual cluster name, name node 2, name node 1

This is a simple solution to call the Powershell script which does the real OS patch. In the

first call "name node 1" will be used as the computer name where the script should run

and do the patching. And "name node 2" will be used as the target node where the cluster

groups should be moved to. For the second call it's just the other way around. This way

both cluster nodes will be patched one after the other
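The two-row layout can be illustrated with a short Powershell sketch. The helper name `Get-PatchPairs` and the cluster and node names are made up for illustration and are not part of the sample. The sketch assumes that the failover target is simply the next node in the list, which for a two-node cluster yields exactly the two rows described above.

```powershell
# Hypothetical helper ( not part of the sample ) : for every node return
# the node to patch and the node which takes over the cluster groups.
function Get-PatchPairs {
    param(
        [Parameter(Mandatory = $true)] [string]   $ClusterName,
        [Parameter(Mandatory = $true)] [string[]] $Nodes
    )
    if ($Nodes.Count -lt 2) {
        throw "at least two cluster nodes are required"
    }
    for ($i = 0; $i -lt $Nodes.Count; $i++) {
        New-Object PSObject -Property @{
            Cluster    = $ClusterName
            PatchNode  = $Nodes[$i]
            # assumption : the next node in the list is the failover target
            TargetNode = $Nodes[($i + 1) % $Nodes.Count]
        }
    }
}

# Example with made-up names; prints one semicolon-separated row per node
$pairs = Get-PatchPairs -ClusterName 'sapclu' -Nodes 'node1', 'node2'
$pairs | ForEach-Object { "{0};{1};{2}" -f $_.Cluster, $_.PatchNode, $_.TargetNode }
```

Storing both orderings keeps the second runbook free of any logic: it only receives "where to patch" and "where to move the groups".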

Figure 14 : the next activity in the runbook will just do a select on the database table to retrieve the

two rows with the cluster node names

Figure 15 : the last activity in runbook 1 will invoke the second runbook which controls the patch

process on a specific cluster node. As the DB query activity will return every row in

form of a single string where the individual fields are separated by a semicolon the

field function ( put into brackets ) was used to extract the two node names and use

them as parameters for the second runbook :

[field({Full line as a string with fields separated by ‘;’ from “Query Database”},';',2)]

[field({Full line as a string with fields separated by ‘;’ from “Query Database”},';',3)]
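To try out the extraction outside of Orchestrator, the behavior of the field function can be mimicked in Powershell. This is only a rough sketch: `Get-Field` is a made-up helper name, the sample row is invented, and the 1-based position follows the way the two expressions above address the second and third column.

```powershell
# Rough Powershell counterpart of the Orchestrator field function.
# Positions are 1-based, as in the runbook expressions above.
function Get-Field {
    param(
        [Parameter(Mandatory = $true)] [string] $Line,
        [Parameter(Mandatory = $true)] [string] $Delimiter,
        [Parameter(Mandatory = $true)] [int]    $Position
    )
    $parts = $Line.Split([string[]]@($Delimiter), [System.StringSplitOptions]::None)
    if ($Position -lt 1 -or $Position -gt $parts.Count) {
        throw "position $Position is out of range for '$Line'"
    }
    $parts[$Position - 1]
}

# Made-up result row : virtual cluster name ; node to patch ; target node
$row        = 'sapclu;node1;node2'
$patchNode  = Get-Field -Line $row -Delimiter ';' -Position 2
$targetNode = Get-Field -Line $row -Delimiter ';' -Position 3
"patch $patchNode, move groups to $targetNode"
```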

Figure 16 : the second runbook has only two activities :

a, "initialize data" to take over the two parameters from the calling runbook

b, "run program" to call the Powershell script which does the OS patching

Figure 17 : in case there should be issues to use the "run program" activity in Orchestrator to start a

Powershell script on the nodes of the failover cluster it might be necessary to add/change

a registry key on the nodes :

HKLM\SYSTEM\CurrentControlSet\services\LanmanServer\Parameters

DWORD32 AutoShareServer = 1

Figure 18 : the "initialize data" activity is trivial. It just defines the two runbook parameters

Figure 19 : the "run program" activity in runbook 2 will simply call the Powershell script which was

put on both VMs of the failover cluster. The first parameter ( name of a cluster node )

will also be used as the name of the remote computer where the Powershell script

should be started. The runbook will be called two times - once for every cluster node.

The script source can be seen at the end of this blog in section 4 of the walkthrough

Figure 20 : once the whole process is finished there should be a "success" message in the log history

of the first runbook - see bottom of the screenshot

Figure 21 : the log history of the second runbook should have two "success" messages, as can be seen at the bottom of the screenshot, because the first runbook calls the patch process on each of the two nodes in the guest failover cluster

Figure 22 : this screenshot shows the content of the simple and rudimentary log file of the Powershell

script which does the real OS patch ( see script source in section 4 of this chapter ). SAP

ASCS wasn't moved because this is the log of node 1 ( hostname ends with '2' ) and the

owner node of the SAP cluster group was node 2 ( hostname ends with '3' ). But the

SQL Server cluster group was moved to node 2 and later back to node 1 again. The SAP

dialog instance running on the same node was just stopped and restarted at the end

Figure 23 : the rudimentary Powershell log file on the second node looks analogous to the first one. It just took longer to bring the SAP dialog instance up again. Don't forget though that the other dialog instance on node 1 was up at this point in time and able to process user transactions

2. Miscellaneous

This section will cover different tasks of the patch process and how they can be accomplished via

Powershell. It also should show options and give ideas how the OS patch process described in the

first section of the walkthrough chapter could be further extended or improved.

Figure 24 : loading the failoverclusters module provides a list of cmdlets which make it very easy to

handle all failover cluster related activities. "get-clusternode" returns all nodes which

belong to the failover cluster

Figure 25 : the get-clustergroup cmdlet returns all existing cluster groups as well as the state

information. As expected one can see the SAP and the SQL Server cluster group

all online on the first node ( node name ends with '2' )

Figure 26 : a simple "move-clustergroup" command will move e.g. the SQL Server group to the

second node in the cluster ( node name ends with '3' )

Figure 27 : after starting the move-clustergroup Powershell command one can see the change of the

SQL Server cluster group in the failover cluster manager

Figure 28 : at the end the SQL Server group is online again on the second node ( node name ends

with '3' )
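The decision which groups have to be moved before patching a node can be separated from the move itself. The following sketch uses a made-up helper `Select-GroupsToMove` and stand-in objects with invented names, so it can be tried without a cluster. It assumes that the objects expose Name, State and OwnerNode and that OwnerNode converts to the node name as a string, as the output of get-clustergroup is expected to.

```powershell
# Hypothetical helper : pick the cluster groups which are online on the
# node that is about to be patched.
function Select-GroupsToMove {
    param(
        [Parameter(Mandatory = $true)] [object[]] $Groups,
        [Parameter(Mandatory = $true)] [string]   $SourceNode
    )
    $Groups | Where-Object {
        # string conversion so that real cluster objects and plain test
        # objects are compared the same way
        "$($_.State)" -eq 'Online' -and "$($_.OwnerNode)" -eq $SourceNode
    }
}

# Stand-in objects instead of a real cluster ( all names are made up )
$groups = @(
    New-Object PSObject -Property @{ Name = 'SAP group'; State = 'Online';  OwnerNode = 'node2' }
    New-Object PSObject -Property @{ Name = 'SQL group'; State = 'Online';  OwnerNode = 'node1' }
    New-Object PSObject -Property @{ Name = 'Spare';     State = 'Offline'; OwnerNode = 'node1' }
)
Select-GroupsToMove -Groups $groups -SourceNode 'node1' | ForEach-Object { $_.Name }

# On a real cluster node the input would come from the failoverclusters module :
# Select-GroupsToMove -Groups (get-clustergroup) -SourceNode $source_node
```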

Figure 29 : while it's possible to manage the move of the cluster groups with standard Powershell

commands from the failoverclusters module the save and restore cmdlets from

Easycloud were used to simplify the patch script - see source in section 4 further down.

The two Easycloud commands save the clustergroup information in a file and later on

restore the node to its original state

Figure 30 : this screenshot shows the WSUS console which was set up for the sample. A group for

the SAP VMs was created. Some additional hints regarding the WSUS setup can be found

in section 3 of the walkthrough

Figure 31 : based on the configuration two updates were available on the local WSUS for the cluster

nodes

Figure 32 : here one can see the details about the two WSUS updates

Figure 33 : as mentioned earlier there are examples out there on the Internet how to access a local

WSUS server via Powershell. Easycloud from connmove integrated this too. There is a

list of cmdlets which help to manage the installation of OS patches

Figure 34 : the measure cmdlet shows that there are 2 updates available

Figure 35 : the search cmdlet shows the details of the two patches as seen on the Windows update

screenshot above

Figure 36 : here one can see that the get cmdlet downloads the updates and the install cmdlet finally

installs them

Figure 37 : two SAP dialog instances were installed in addition to the SAP ASCS cluster installation.

Both belong to the SAPWPTLG logon group

Figure 38 : Easycloud provides cmdlets to assign SAP instances to logon groups. And it offers the

capability to take an instance out of a logon group.

This would make it possible to remove e.g. a dialog instance from a logon group before shutting it down, to let current users finish their work and prevent new users from connecting

Figure 39 : another Easycloud cmdlet lists the existing SAP instances

Figure 40 : using the virtual hostname of the SAP cluster group shows the message server when

working with the list-cmsapinstance cmdlet

Figure 41 : list SAP instances on the second cluster node where the second dialog instance

( again instance number 1 ) was installed

Figure 42 : as seen before the Easycloud cmdlets can be used to check and test all existing SAP instances. So how could this be used to improve the patch process ? Well - one possibility might be to combine it with a system message. The screenshot shows an empty system message screen

Figure 43 : the Easycloud set-cmsm02 cmdlet allows creating a SAP system message

Figure 44 : as expected the system message shows up for the SAP user afterwards

Figure 45 : pretty cool - the Powershell command inserted the new message. So in addition to

taking out a SAP dialog instance from a logon group the patch script could also create

a corresponding system message

Figure 46 : now it gets more complicated. The screenshot shows a simple batch job which was

scheduled for a certain time/date

Figure 47 : again - there are Easycloud cmdlets which allow checking for existing SAP jobs and their state. So in case jobs were canceled during a forced failover to do an OS patch one could make use of these cmdlets to automatically handle the situation and maybe inform the admin via email or try to re-start jobs

3. WSUS setup

As described before a local WSUS setup was used for the tests. There were two major challenges

which will be explained with the following screenshots :

a, the WSUS server was set up on a VM outside of the failover cluster. The VMs of the cluster

were taken out of a domain policy to allow the configuration for the local WSUS server. But

after they were removed from the domain policy the question was how to configure them to

work with the local WSUS ?

b, after all the configuration for usage of the local WSUS server the VMs still couldn't connect.

It took some time until tracert showed the issue. There was an additional unexpected hop

due to the network configuration going over a router where port 80 was blocked - which was

the port WSUS was listening on ....
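A blocked port like the one in item b can also be spotted with a direct TCP connect test from the cluster VM. The sketch below is an illustration only: `Test-TcpPort` is a made-up helper built on the .NET TcpClient class, and the hostname `wsus01` as well as the port 8530 are example values which do not come from the test environment.

```powershell
# Hypothetical helper : returns $true if a TCP connection to the given
# host and port can be opened within the timeout, otherwise $false.
function Test-TcpPort {
    param(
        [Parameter(Mandatory = $true)] [string] $HostName,
        [Parameter(Mandatory = $true)] [int]    $Port,
        [int] $TimeoutMs = 3000
    )
    $client = New-Object System.Net.Sockets.TcpClient
    try {
        $task = $client.ConnectAsync($HostName, $Port)
        # Wait() returns $false on timeout and throws if the connect failed
        if (-not $task.Wait($TimeoutMs)) { return $false }
        return $client.Connected
    }
    catch {
        return $false
    }
    finally {
        $client.Close()
    }
}

# Made-up WSUS hostname; port 8530 is only an example for an alternative port
foreach ($port in 80, 8530) {
    "port $port reachable : " + (Test-TcpPort -HostName 'wsus01' -Port $port -TimeoutMs 1000)
}
```

Such a check only shows whether the port is reachable. tracert is still the tool which reveals where on the route the traffic gets lost.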

Figure 48 : instead of messing around with direct registry entries to configure the VM for usage of a

local WSUS server MMC was called to do it via a local policy. So first start mmc from

Start->Run ... Then the Console Root window should come up as seen on the screenshot.

Choose File->Add/Remove Snap-in

Figure 49 : in the selection list which comes up next double-click on "Group Policy ...."

Figure 50 : make sure that the group policy object says "Local Computer" and click on "Finish".

Afterwards you will be back on the Add or Remove Snap-Ins window. There click on

the Ok-button

Figure 51 : then expand the tree-view on the left as seen on the screenshot and select

"Windows Update"

Figure 52 : now a list of options comes up where several settings for the WSUS server can be

configured

Figure 53 : one important option "Configure Automatic Updates" has to be set to a value which

leaves the control to the Orchestrator runbook instead of installing the patches

automatically by WSUS. In the test environment it was set to '2'

Figure 54 : "Specify intranet Microsoft update service location" is the option where one will define

the hostname of the server where WSUS is installed/configured

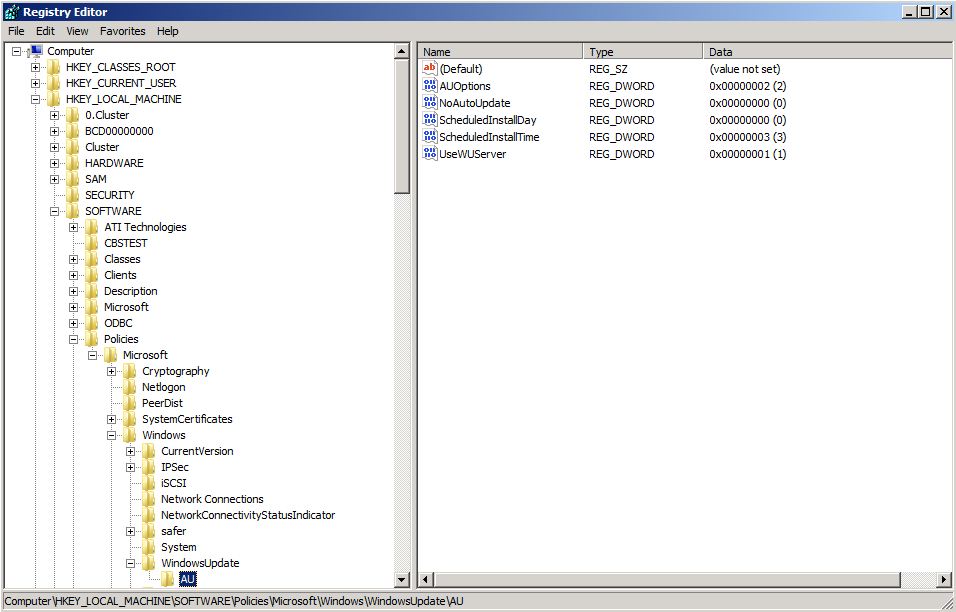

Figure 55 : after leaving the mmc one will find the entries which were made in the registry under :

HKLM\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate

Figure 56 : additional values related to the WSUS setting can be found under :

HKLM\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate\AU
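The values one would expect under these two keys can be written down as a small Powershell sketch, e.g. to compare them with what the local policy actually produced. `Get-ExpectedWsusPolicy` is a made-up helper, `wsus01` and port 8530 are example values, and the value names ( WUServer, WUStatusServer, UseWUServer, AUOptions ) are the standard Windows Update policy value names rather than something taken from the screenshots. AUOptions = 2 corresponds to the setting '2' chosen in the test environment.

```powershell
# Hypothetical helper : returns the registry values a WSUS client policy
# is expected to contain, grouped by the two keys shown above.
function Get-ExpectedWsusPolicy {
    param(
        [Parameter(Mandatory = $true)] [string] $WsusHost,
        [int] $Port     = 80,
        [int] $AuOption = 2    # 2 = notify only, nothing is installed automatically
    )
    $url = "http://${WsusHost}:$Port"
    @{
        'WindowsUpdate'    = @{ WUServer = $url; WUStatusServer = $url }
        'WindowsUpdate\AU' = @{ UseWUServer = 1; AUOptions = $AuOption }
    }
}

# Example values only
$expected = Get-ExpectedWsusPolicy -WsusHost 'wsus01' -Port 8530
$expected['WindowsUpdate'].WUServer
$expected['WindowsUpdate\AU'].AUOptions

# On a cluster VM the actual values could be read for comparison with :
# Get-ItemProperty 'HKLM:\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate'
# Get-ItemProperty 'HKLM:\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate\AU'
```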

Figure 57 : back to the WSUS server. As explained in the intro of this section there was an issue due

to the network configuration and an unexpected hop over a router which blocked port 80.

So despite the fact that the firewalls were turned off the VMs of the cluster couldn't

connect to the WSUS server. Finally using "tracert" showed the issue.

Question was then how to change the port on which the WSUS server would listen.

Well - first start the IIS manager on the WSUS server machine. Under

<Computer>->Sites->Default Web Site->Selfupdate one will find the relevant WSUS

pages

Figure 58 : but the port cannot be changed just for WSUS. It has to be changed via "Edit Bindings"

for all default pages. Right-click on "Default Web Site" and select "Edit Bindings".

Figure 59 : now select the "http" line and choose "Edit". A dialog will come up which allows changing the port number

4. Powershell test scripts

Both of the following Powershell scripts which were used for the sample are just test scripts. The

purpose is to give some ideas how things can be accomplished. Focus was NOT on optimal

Powershell programming style or error handling. The scripts are NOT meant to be used in this

form in a production environment.

PS Script 1

the first Powershell script reads a file where the WMI query described before stored the info

about the cluster nodes. The script extracts just the node names and inserts them into the

Orchestrator database. The virtual cluster name as well as the file name are handed over

as parameters when calling the script from Orchestrator. There is no check right now if the

rows already exist in the database.

    # parameters handed over by the Orchestrator runbook
    $clustername            = $args[0]
    $cluster_node_name_file = $args[1]

    # collect the node names from the "Name = <node>" lines of the WMI output
    $clusternodes = New-Object System.Collections.ArrayList
    Get-Content $cluster_node_name_file | ForEach-Object {
        if ( $_ -like "Name*" ) {
            # [void] suppresses the index which ArrayList.Add() returns
            [void]$clusternodes.Add([string]$_.Split("=")[1].Trim())
        }
    }

    $SQLServerConnection = New-Object System.Data.SqlClient.SqlConnection
    $SQLServerConnection.ConnectionString = "Server=wsiv5100;Database=Orchestrator;Integrated Security=SSPI"

    try {
        $SQLServerConnection.Open()
        $SQLCommand = New-Object System.Data.SqlClient.SqlCommand
        $SQLCommand.Connection = $SQLServerConnection
        # parameters instead of string concatenation avoid quoting problems
        $SQLCommand.CommandText = "INSERT INTO [dbo].[autopatch_cluster_nodes] values ( @cluster, @node_a, @node_b )"

        # row 1 : cluster, node 1, node 2 - row 2 : cluster, node 2, node 1
        foreach ( $order in (0,1),(1,0) ) {
            $SQLCommand.Parameters.Clear()
            [void]$SQLCommand.Parameters.AddWithValue("@cluster", $clustername)
            [void]$SQLCommand.Parameters.AddWithValue("@node_a", $clusternodes[$order[0]])
            [void]$SQLCommand.Parameters.AddWithValue("@node_b", $clusternodes[$order[1]])
            [void]$SQLCommand.ExecuteNonQuery()
        }
    }
    catch [System.Data.SqlClient.SqlException] {
        "Error during DB Insert : " >> C:\patchtest\sqlins_error_log.txt
        $_.Exception.Message >> C:\patchtest\sqlins_error_log.txt
    }
    catch {
        "Error during DB access/insert" >> C:\patchtest\sqlins_error_log.txt
    }
    finally {
        # close the connection in any case
        $SQLServerConnection.Close()
    }

PS Script 2

the second script controls the OS patch process itself. But it only includes a dummy instead of real commands to install a patch. In the sections before a sample screenshot showed what a patch install using Easycloud from connmove could look like. And a blog mentioned before shows how it could work using SCCM. There are many options how the process could be extended and improved or adapted to special individual needs. Again - it's NOT a ready-to-use patch solution but more a test script to give ideas.

As a prerequisite the script was put on both VMs of the failover cluster. It would also be

possible to keep a master copy of it on a share and move it to the VMs for every patch

process. Easycloud from connmove was also installed on both VMs. It also could be

installed "on-the-fly" from a share similar to the installation of a SAP dialog instance

described in the last blog of the series.

The main steps are pretty simple :

- use an Easycloud command to save the cluster state of the current node

- use an Easycloud command to go through the existing SAP instances

- try to identify a dialog instance assuming that these are not part of the failover

cluster (depending on the SAP setup it's probably a better idea to identify via

the instance number )

- shut down SAP dialog instances which are online and keep the instance nr

- loop until the SAP dialog instance is really down

- then go through all the cluster groups and check which ones have the current

node as the owner node

- move the relevant cluster groups to the other node

- patch dummy

- restore the current node regarding cluster groups

- restart all SAP dialog instances which were stopped before

- for each dialog instance wait until it's really up

    # parameters : node to patch ( local node ) and node which takes over
    $source_node = $args[0]
    $target_node = $args[1]

    # instance numbers of the dialog instances which get stopped further down
    $sapinstancesstopped = @()
    $log_file = ".\prepare_Wpatch_log"

    import-module failoverclusters
    add-pssnapin CmCmdlets

    "Clusternode 1 - source / localhost : " + $source_node > $log_file
    "Clusternode 2 - target : " + $target_node >> $log_file
    "" >> $log_file

    # <test user> and <password> are placeholders for a SAP test user
    $connsaphost = connect-cmsaphost -hostname $source_node -username <test user> -password <password>

    save-CmCluster -ConfigFileName "c:\temp\cmclusterconfig"

    # stop all running dialog instances on this node
    list-cmsapinstance -sapcontrolconnect $connsaphost | foreach-object {
        $sapinststate = test-cmsapinstance -SapControlConnect $connsaphost -Sapinstancenumber $_.instanceNr

        if ( $_.features -eq "ABAP|GATEWAY|ICMAN|IGS" -And $sapinststate -eq "SAPControlGREEN" ) {
            "check SAP instance feature set : " + $_.features + " : this looks like a DI - let's shut it down" >> $log_file
            $sapinstancesstopped += @($_.instanceNr)
            stop-cmsapinstance -sapinstancenumber $_.instanceNr -sapcontrolconnect $connsaphost
            "" >> $log_file
            "loop to check DI status after stop" >> $log_file
            "" >> $log_file

            # poll every 3 seconds until the instance reports GRAY ( stopped )
            do {
                disconnect-CmSAPHost -SapControlConnect $connsaphost
                $connsaphost = connect-cmsaphost -hostname $source_node -username <test user> -password <password>
                $sapinststate = test-cmsapinstance -SapControlConnect $connsaphost -Sapinstancenumber $_.instanceNr
                start-sleep -s 3
                "SAP instance nr : " + $_.instanceNr + " state : " + $sapinststate >> $log_file
            } while ( $sapinststate -ne "SAPControlGRAY" )
        }
        else {
            "" >> $log_file
            "no shutdown : SAP instance feature set - " + $_.features + " state : " + $sapinststate >> $log_file
        }
    }

    "" >> $log_file
    "Clustergroups before move" >> $log_file
    "" >> $log_file

    # note : the logged state is the state of the owner node, not of the group
    get-clustergroup | foreach-object {
        "Clustergroup : " + $_.Name + " Ownernode : " + $_.OwnerNode.Name + " State : " + $_.OwnerNode.State >> $log_file
    }
    "" >> $log_file

    # move every group which is online on the node to be patched
    get-clustergroup | foreach-object {
        if ( $_.State -eq "Online" -And $_.OwnerNode.Name -eq $source_node ) {
            move-clustergroup -Name $_.Name -Node $target_node
        }
        else {
            "no move of cluster group : " + $_.Name + " Ownernode : " + $_.OwnerNode.Name + " State : " + $_.OwnerNode.State >> $log_file
        }
    }

    "" >> $log_file
    "Clustergroups after move" >> $log_file
    "" >> $log_file

    get-clustergroup | foreach-object {
        "Clustergroup : " + $_.Name + " Ownernode : " + $_.OwnerNode.Name + " State : " + $_.OwnerNode.State >> $log_file
    }
    "" >> $log_file

    # patch dummy : the real patch installation would go here
    "do patching" >> $log_file
    "" >> $log_file

    # bring the cluster groups back to where they were before
    restore-cmcluster -ConfigFileName "c:\temp\cmclusterconfig" -TargetNode $source_node

    "Clustergroups after cluster restore" >> $log_file
    "" >> $log_file

    get-clustergroup | foreach-object {
        "Clustergroup : " + $_.Name + " Ownernode : " + $_.OwnerNode.Name + " State : " + $_.OwnerNode.State >> $log_file
    }
    "" >> $log_file

    # restart the dialog instances which were stopped at the beginning
    if ( $sapinstancesstopped.Count -gt 0 ) {
        "number of SAP instances to start : " + $sapinstancesstopped.Count >> $log_file
        "" >> $log_file

        foreach ( $sapinstance in $sapinstancesstopped ) {
            "start SAP instance nr : " + $sapinstance >> $log_file
            start-cmsapinstance -sapcontrolconnect $connsaphost -SapInstancenumber $sapinstance
            "" >> $log_file
            "loop to check DI status after start" >> $log_file
            "" >> $log_file

            # poll every 3 seconds until the instance reports GREEN ( running )
            do {
                disconnect-CmSAPHost -SapControlConnect $connsaphost
                $connsaphost = connect-cmsaphost -hostname $source_node -username <test user> -password <password>
                $sapinststate = test-cmsapinstance -SapControlConnect $connsaphost -Sapinstancenumber $sapinstance
                start-sleep -s 3
                "SAP instance nr : " + $sapinstance + " state : " + $sapinststate >> $log_file
            } while ( $sapinststate -ne "SAPControlGREEN" )
        }
    } else { "no SAP instances need to be started" >> $log_file }
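The two polling loops in the script wait without an upper bound, so a dialog instance which never reaches the expected state would block the runbook forever. One possible improvement is a polling helper with a timeout. The sketch below is not part of the sample: `Wait-ForState` is a made-up name and the probe is a stand-in script block, so the logic can be tried without a SAP system.

```powershell
# Hypothetical helper : calls the probe until it returns the desired
# state ( $true ) or the timeout is reached ( $false ).
function Wait-ForState {
    param(
        [Parameter(Mandatory = $true)] [scriptblock] $Probe,
        [Parameter(Mandatory = $true)] [string]      $DesiredState,
        [int] $TimeoutSeconds  = 600,
        [int] $IntervalSeconds = 3
    )
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while ($true) {
        $state = "$(& $Probe)"
        if ($state -eq $DesiredState) { return $true }
        if ((Get-Date) -ge $deadline)  { return $false }
        Start-Sleep -Seconds $IntervalSeconds
    }
}

# Stand-in probe : reports GREEN three times, then GRAY
$counter   = @{ Calls = 0 }
$fakeProbe = {
    $counter.Calls++
    if ($counter.Calls -gt 3) { 'SAPControlGRAY' } else { 'SAPControlGREEN' }
}
Wait-ForState -Probe $fakeProbe -DesiredState 'SAPControlGRAY' -TimeoutSeconds 30 -IntervalSeconds 1

# In the patch script the probe could wrap the Easycloud status check, e.g.
# { test-cmsapinstance -SapControlConnect $connsaphost -Sapinstancenumber $sapinstance }
```

A return value of $false would then be the place to write an error to the log file and stop the patch process for this node instead of continuing blindly.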