Resource Management in the Concurrency Runtime – Part 1

In my previous blog post, I gave an introduction to the native concurrency runtime in Visual Studio 2010. For a general picture of the native concurrency runtime, and high level roles of each of its components please refer to this post. Today, I will talk about the lowest level in that component stack: the Resource Manager (RM).

Resource Manager Responsibilities

In short, there are two main responsibilities of the Resource Manager:

1- Initial Allocation: Allocating resources to schedulers when schedulers are created.

2- Dynamic Migration: Constantly monitoring utilization of resources by schedulers, and dynamically migrating resources between schedulers.

This post will focus on the initial allocation and the dynamic migration will be detailed in my next blog post.

What is a Resource Anyway?

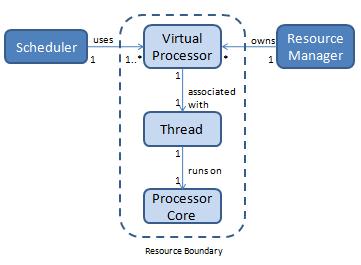

Before going into details about the “Resource Manager” for the Concurrency Runtime, let’s define what is meant by a resource in this context. A resource is the unit of the processing power of the machine which is owned by the RM and used by schedulers. A resource is abstracted in the form of a “Virtual Processor” in Concurrency Runtime. At a given time each virtual processor is associated with a thread running on a processor core (see below - Resource Boundary). The RM creates virtual processors and hands them to schedulers; meanwhile, scheduler instances use them to execute tasks in parallel.

Why do we need a Resource Manager?

Clearly, we wouldn’t need a Resource Manager if it was possible for a single scheduler to fit all the needs of parallel tasks. However, there are a couple of reasons why it is feasible for an app to use multiple schedulers:

· The hotter the cache, the faster the processing: Virtual processors have affinity to group of processor cores; the sockets or nodes. The more they do related work, the more likely they are to find that the data they need is already in the cache.

o Assume that your app uses a bunch of libraries. It is highly probable that work performed by a given library is related. Having a scheduler per library will keep the cache warm and enable faster processing.

· Not all schedulers are same: A scheduler instance is policy driven. This means different schedulers might have different characteristics.

o Assume that a game engine is to be implemented. As an example, it could have an image rendering component, a sound rendering component and an object state update component. All these will execute in parallel; however, we might want state update to have a higher thread priority and would like to decouple sound processing tasks from image rendering tasks. Having multiple schedulers will enable us to customize scheduler behavior and separate task execution.

Now given that there can be multiple schedulers in a process for the reasons mentions above, let’s talk about if a resource manager was not present what would happen:

1- Schedulers would be free to use any resource

2- Schedulers would implement a way of sharing resources

Apparently the first one would end up with a non-optimum utilization of resources; some processors cores would be over utilized and some would be underutilized due to no communication between schedulers. On the other hand, the second one would end up with a complex design contradicting with design patterns like ‘Low Coupling’ or ‘High Cohesion’ (https://en.wikipedia.org/wiki/GRASP_(Object_Oriented_Design) ). Thanks to RM that it helps resources to be utilized evenly meanwhile still keeping the design of a scheduler simpler. By the way it is important to keep the design of the scheduler simpler because users can also implement a custom scheduler on top of RM (see below for more on this).

Additionally, the scheduler implemented as part of the concurrency runtime is a general purpose scheduler; for specialty scenarios, it may be possible to implement a scheduler tuned for a particular purpose, and it’s important that such a scheduler be usable in conjunction with other work in the same process and without oversubscribing on resources. Thanks to RM again that by using its APIs custom schedulers can be built meanwhile respecting the resource usage of other schedulers in the process.

The Initial Allocation of Resources

Now we can describe initial allocation, one of the core functions of the Resource Manager.

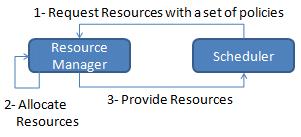

As mentioned in the Resource Manager Responsibilities paragraph, initial allocation is about providing the resources to a scheduler at its creation time. A diagram of initial allocation steps can be found below.

A scheduler instance will place its request for resources by providing a set of policies to the resource manager. The resource manager will do the allocation depending on the policies and taking into account the other schedulers in the process. Eventually the resources will be provided to the scheduler in need.

The policy values given in step-1 play an important role in how many virtual processors the scheduler will have. Let’s go through those policies that effect initial allocation:

MinConcurrency and MaxConcurrency: These two policies specify the concurrency level of the scheduler. The number of virtual processors allocated to the scheduler by the RM will be bounded by these policy values. Depending on the existence and policy of the other schedulers, any number of resources can be allocated in between.

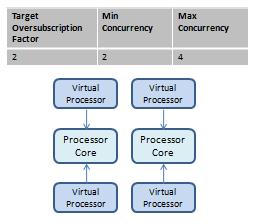

TargetOversubscriptionFactor: Oversubscription is defined as the number of threads associated with a particular processor core. RM will try to allocate resources up to MaxConcurrency by oversubscribing each core with the given TargetOversubscriptionFactor.

Here is an example where the scheduler actually asks for minimum of 1 processor core and maximum of 2 processor cores both oversubscribed by a factor of 2. If there are no other schedulers, on a 2 core machine the allocation will be as shown below.

ResourceAllocationPriority: RM will try to satisfy the requests of the schedulers with higher priority at the cost of lower priority schedulers.

Now let’s take a look at a general overview of the rules of initial allocation and some examples of it in order to understand the usage of the policy values.

Rules of Initial Allocation

Initial allocation has a set of rules that defines its behavior. I will mention those rules and then in the next paragraph will try to give examples to explain in more detail.

1) RM will never allocate less resources then MinConcurrency and more resources than MaxConcurrency

2) RM will share cores within schedulers as minimum as possible

3) The resources will be allocated as close as possible. The closeness criteria here is that the processor cores in a NUMA node / processor socket are closer with respect to processor cores in other NUMA nodes / processor sockets.

4) The resources will be proportionally distributed with respect to MaxConcurrency within the schedulers of equal priority if MaxConcurrency cannot be met.

5) Until MaxConcurrency resources are provided to a higher priority scheduler, lower priority schedulers will be reduced to their MinConcurrency

Examples of Initial Allocation

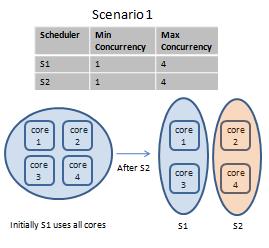

We have mentioned that multiple schedulers may reside in a process. What if there is an existing Scheduler (S1) when a Scheduler (S2) with the same ResourceAllocationPriority requests resources?

RM will try to avoid sharing of processor cores between S1 and S2 as much as possible.

Scenario1: If there are no resources available to satisfy S2 then a proportional allocation will be performed between all schedulers that have the same ResourceAllocationPriority (S1 and S2). Allocation is proportional to each scheduler’s policy value of MaxConcurrency.

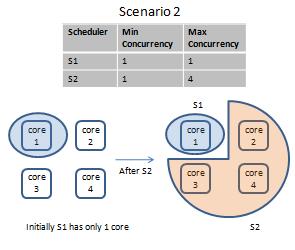

Scenraio2: If there are N available resources such that MinConcurrency of S2 <= N <= MaxConcurrency of S2, N resources are allocated to S2, and S1 is left untouched.

Please note here that S1’s number of allocated cores is reduced from 4 to 2 when a scheduler with equal ResourceAllocationPriority is created.

Resources of S1 is not touched and S2 gets only 3 of its requested resources when there are at least MinConcurrency number of resources available for S2.

What if ResourceAllocationPriority of S2 is greater than S1?

In this case RM will lower S1 to MinConcurrency, and try to allocate N resources where MinConcurrency of S2 <= N <= MaxConcurrency to S2. If N is less than the minimum that S2 requires, RM will share processors cores with S1, to satisfy S2’s minimum request.

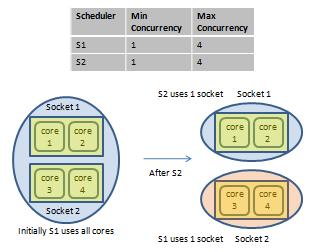

What if the machine supports NUMA architecture? Is locality taken into account?

Yes, RM will try to localize the allocation as much as possible. For example, on a machine with 2 sockets and 2 processor cores in each socket, the allocations will be made within socket boundaries.

What if both S1 and S2 has MinConcurrency=MaxConcurrency =Number of processor cores of the machine?

Since RM can’t allocate less than MinConcurrency and both schedulers have requested all cores, all the cores will be shared between S1 and S2. In general, RM will share cores in the final step of initial allocation algorithm, and share as little as possible.

What if one of S1 shutdowns? Are the resources made available to S2?

If S2 doesn’t have MaxConcurrency allocated at the time S1 shutdowns, dynamic migration will increase the number of resources of S2. However dynamic resource management is a whole topic unto itself, and I will write about it in my next blog.

Comments

Anonymous

March 11, 2009

Thank you for submitting this cool story - Trackback from DotNetShoutoutAnonymous

May 25, 2009

I have a question regarding the resource manager. Is there just one resource manager for the whole system or one for each process? If each process has its own resource manager how it tackles the situation when two or more threads in different processes compete for a CPU?Anonymous

May 25, 2009

As of Dev10 the resource manager is per process and it will load balance the schedulers within a process. We are investigating a single resource manager for the whole system for Dev11. Thank you for your feedback.Anonymous

August 06, 2009

Good posting! I have a question regarding the creation of the physical resource's topology: are you able to the tell the locality of a given NUMA node, with regard to the remaining nodes (that is, tell which nodes are closer and which are further, in terms of latency or any other metric)? Thank you, DuarteAnonymous

August 07, 2009

Thank you Duarte. As of Dev10 our distance function is: 0, node1 == node2 distance(node1,node2) = 1, node1 != node2 We are targeting Dev11 together with OS support to enhance the distance function.Anonymous

August 03, 2010

I want the program to allocate the resources .. do you help me? please..Anonymous

August 03, 2010

Hi Archana. Do you mean that you would like to implement your own resource manager? I.e., your own resoruce manager decides which schedulers get what resources and how recources are reallocated dynamically between multiple schedulers. If that was your question, Concurrency Runtime does not support this feature. I would be interested in your scenario in that case to share it with my team. What functionality of resource manager you want to be different or what functionality you want to be added, so that you dont need to implement your own resource manager? Let me know if you meant something else with the question. Thank you, Atilla