An Introduction to Native Concurrency in Visual Studio 2010

In this post I give an introduction to the native concurrency support in Visual Studio 2010. My motivation is that an architectural understanding of the features will help readers make the most of the underlying infrastructure. In future posts, I will go deeper into each subtopic.

The Goal

The ultimate goal of the native concurrency support is to give developers tools for introducing scalable and maintainable parallelism into their applications.

One side of the goal is to make adding efficient parallelism as easy as writing the following:

parallel_for(0, 10, [=](int i){ foo(i); } );

where foo(i) will be evaluated in parallel by the runtime for each i from 0 to 9 (the upper bound is exclusive). The other side is to make developers productive through the programming models provided.
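To make the semantics of that one-liner concrete, here is a rough sketch written against the C++ standard library only. It is not how the runtime implements parallel_for; `naive_parallel_for` and `sum_of_squares` are hypothetical names invented for illustration, and the one-thread-per-chunk strategy is an assumption chosen for simplicity.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical helper, not the PPL implementation: runs body(i) for every
// i in the half-open range [first, last), split into chunks that each run
// on their own thread.
void naive_parallel_for(int first, int last,
                        const std::function<void(int)>& body,
                        unsigned workers = 4) {
    assert(workers > 0);
    if (first >= last) return;
    const int total = last - first;
    const int count = static_cast<int>(workers);
    const int chunk = (total + count - 1) / count;  // ceiling division
    std::vector<std::thread> threads;
    for (int begin = first; begin < last; begin += chunk) {
        const int end = std::min(begin + chunk, last);
        threads.emplace_back([begin, end, &body] {
            for (int i = begin; i < end; ++i) body(i);
        });
    }
    // Return only after every iteration has run.
    for (auto& t : threads) t.join();
}

// Example use: sums i*i for i in [0, n) through an atomic accumulator.
int sum_of_squares(int n) {
    std::atomic<int> sum{0};
    naive_parallel_for(0, n, [&sum](int i) { sum += i * i; });
    return sum.load();
}
```

The point of the sketch is the contract rather than the mechanics: iterations may run in any order and on any thread, so the body must not depend on ordering, and the call does not return until all iterations are done.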

The Architecture of Native Concurrency in Visual Studio 2010

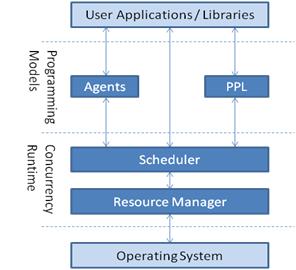

Here’s a picture of the major components in the native concurrency development stack. I’d like to go through each of them and the interactions between them.

Resource Manager (RM)

As its name suggests, the main responsibility of the RM is to manage resources, which here means the processor cores of the system. Schedulers request these resources to execute parallel work, and the RM distributes them among the schedulers.

To make this distribution, the RM captures the machine topology (i.e. the number of processor cores and their closeness to each other) when it is created. As schedulers are created, it takes various factors into account when making the allocation, including:

i- Minimum and maximum number of cores requested by each scheduler

ii- Scheduler priority

iii- Utilization of allocated processor cores per scheduler

iv- Closeness of the allocated cores of each scheduler

(i.e. on a NUMA architecture the RM will try to allocate cores that are as close to each other as possible. In other words, the RM will spread each scheduler's allocation over as few NUMA nodes as possible.)

The RM not only allocates resources when a scheduler is created but also continues to monitor all schedulers to maximize resource utilization, taking cores from schedulers that underuse them and giving them to schedulers that need more.
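As a way to picture how the first two factors might interact, here is a toy allocation model. It is only a sketch under stated assumptions: the `SchedulerRequest` struct, the `allocate_cores` function and the "minimums first, then one core per round in priority order" policy are all invented for illustration and are not the RM's actual algorithm. Utilization and NUMA closeness are left out entirely.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical request record; the field names are illustrative only.
struct SchedulerRequest {
    int min_cores;
    int max_cores;
    int priority;   // larger value = served first
    int allocated;  // filled in by allocate_cores
};

// Toy policy: satisfy every minimum first, then hand out the remaining
// cores one per scheduler per round, visiting schedulers in priority order,
// until every scheduler is at its maximum or no cores are left.
void allocate_cores(std::vector<SchedulerRequest>& requests, int total_cores) {
    int remaining = total_cores;
    for (auto& r : requests) {
        assert(r.min_cores >= 0 && r.min_cores <= r.max_cores);
        r.allocated = r.min_cores;
        remaining -= r.min_cores;
    }
    assert(remaining >= 0);  // this sketch assumes all minimums fit

    std::vector<SchedulerRequest*> order;
    for (auto& r : requests) order.push_back(&r);
    std::stable_sort(order.begin(), order.end(),
                     [](const SchedulerRequest* a, const SchedulerRequest* b) {
                         return a->priority > b->priority;
                     });

    bool progress = true;
    while (remaining > 0 && progress) {
        progress = false;
        for (auto* r : order) {
            if (remaining > 0 && r->allocated < r->max_cores) {
                ++r->allocated;
                --remaining;
                progress = true;
            }
        }
    }
}
```

With 8 cores and two requests, {min 1, max 4, priority 2} and {min 2, max 8, priority 1}, this toy policy ends with 4 cores each: the higher-priority scheduler reaches its maximum and the other one receives what is left.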

Users who want to implement their own scheduler will mostly use this interface of the runtime.


Scheduler

Each parallel application consists of multiple tasks to be executed. In the code given above, for example, foo(0), foo(1), ..., foo(9) can be considered tasks to be executed in parallel. It is critical to have a component that distributes this work efficiently across the processor cores available for execution. Within the Concurrency Runtime, this is the responsibility of the Scheduler.

The Scheduler has a predefined default behavior but can be customized through a set of policies, such as the number of resources it will use, the number of OS threads mapped to its allocated resources, its resource allocation priority, and whether it should favor cache hits or fairness across tasks when choosing which task to execute next.

An important aspect of the Scheduler is that it is cooperative: it will not preempt a running task. A task runs until it finishes or cooperatively yields its execution to other tasks. This is particularly important because it avoids the context switches and cache thrashing caused by the randomization that preemption introduces.

Users who want to implement their own concurrency library will mostly target this interface of the runtime.
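The cooperative behavior described above can be modeled with a tiny single-core simulation. This is a sketch under assumptions, not the Concurrency Runtime's scheduler: `CooperativeTask`, `run_cooperatively` and `make_task` are hypothetical names, and a task is modeled as a step function whose return marks a voluntary yield point.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// A task is modeled as a step function: each call runs until the task's next
// voluntary yield point and returns true if more work remains.
using CooperativeTask = std::function<bool()>;

// Runs tasks on a single simulated core. The loop never interrupts a step;
// control changes hands only when a step returns (the task yielded or ended).
// Returns the order in which task names were given the core.
std::vector<std::string> run_cooperatively(
        std::deque<std::pair<std::string, CooperativeTask>> ready) {
    std::vector<std::string> trace;
    while (!ready.empty()) {
        auto current = std::move(ready.front());
        ready.pop_front();
        trace.push_back(current.first);
        const bool has_more_work = current.second();
        if (has_more_work) ready.push_back(std::move(current));  // requeue after a yield
    }
    return trace;
}

// Hypothetical helper: builds a task that needs `steps` turns on the core,
// yielding after each turn except the last.
CooperativeTask make_task(int steps) {
    assert(steps > 0);
    return [steps]() mutable { return --steps > 0; };
}
```

Queuing a two-step task "A" followed by a one-step task "B" yields the run order A, B, A: task B only gets the core because A gave it up, never because the scheduler took it away.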

PPL

PPL stands for the Parallel Patterns Library and is meant to provide a convenient interface for executing work in parallel. By using the PPL, you can introduce parallelism without even having to manage a scheduler. Here are the common patterns available:

Task execution patterns:

parallel_invoke: To execute from 2 to 10 tasks in parallel

parallel_for: To execute tasks in parallel over a range of integers

parallel_for_each: To execute tasks in parallel over a collection of items in an STL container
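For readers who want a feel for what the other two task execution patterns promise, here is a standard-library approximation. The helpers `naive_parallel_invoke` and `naive_parallel_for_each` are hypothetical stand-ins invented for this sketch; they use `std::async` rather than the runtime's scheduler, and the invoke helper handles only two tasks.

```cpp
#include <cassert>
#include <future>
#include <vector>

// Hypothetical stand-in for parallel_invoke with two tasks: runs both and
// returns only after both have finished.
template <typename F, typename G>
void naive_parallel_invoke(F first, G second) {
    auto pending = std::async(std::launch::async, first);
    second();       // run the second task on the calling thread
    pending.get();  // wait for the first task
}

// Hypothetical stand-in for parallel_for_each: one asynchronous task per
// element of the container, all joined before returning.
template <typename Container, typename Body>
void naive_parallel_for_each(Container& items, Body body) {
    std::vector<std::future<void>> pending;
    for (auto& item : items)
        pending.push_back(
            std::async(std::launch::async, [&item, body] { body(item); }));
    for (auto& p : pending) p.get();
}

// Example use of both helpers; returns the transformed container.
std::vector<int> example_usage() {
    int a = 0, b = 0;
    naive_parallel_invoke([&a] { a = 1; }, [&b] { b = 2; });
    assert(a == 1 && b == 2);  // both tasks have completed at this point

    std::vector<int> values = {1, 2, 3};
    naive_parallel_for_each(values, [](int& v) { v *= 10; });
    return values;
}
```

In both cases the tasks touch disjoint data, which is what makes them safe to run in parallel without any locking.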

Synchronization patterns:

reader_writer_lock: A cooperative reader-writer lock that yields to other tasks instead of preempting.

critical_section: A cooperative mutual exclusion primitive that yields to other tasks instead of preempting.


Data sharing pattern:

combinable: A scalable object that keeps a local copy for each thread. Processing is done lock-free on the local copies, which are combined once the parallel processing is done.
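The idea behind combinable can be sketched with plain threads: each worker accumulates into its own slot, and the slots are merged at the end. This is an illustration of the pattern only, assuming a fixed number of workers; `sum_with_local_copies` is a hypothetical name, and the real class manages the per-thread copies for you.

```cpp
#include <cassert>
#include <numeric>
#include <thread>
#include <vector>

// Sums the integers in [0, n) using one private partial sum per worker.
// Workers never touch each other's slot, so the accumulation needs no lock;
// the partial results are combined once, after every worker has finished.
long long sum_with_local_copies(int n, unsigned workers = 4) {
    assert(n >= 0 && workers > 0);
    std::vector<long long> local(workers, 0);  // one "local copy" per worker
    std::vector<std::thread> threads;
    for (unsigned w = 0; w < workers; ++w) {
        threads.emplace_back([w, n, workers, &local] {
            long long mine = 0;  // accumulate privately, publish once
            for (int i = static_cast<int>(w); i < n;
                 i += static_cast<int>(workers)) {
                mine += i;
            }
            local[w] = mine;
        });
    }
    for (auto& t : threads) t.join();
    // The "combine" step runs on a single thread after the parallel phase.
    return std::accumulate(local.begin(), local.end(), 0LL);
}
```

Compared with a single shared counter protected by a lock, the workers here never contend with each other; the only sequential cost is the final merge.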

Application developers will mostly use this interface to inject parallelism.

Agents

We all know that building software is not trivial. Building software that has to manage concurrent access to shared state is even harder. Agents are a model for decomposing parallel applications to make them easier to design, build and maintain. With Agents, you can implement the main components of the software in isolation, communicate between them by message passing (i.e. via messaging blocks), and introduce concurrent computation within each Agent using finer-grained constructs like the PPL. For more information about using Agents and messaging blocks, please refer to this blog or this Channel9 video.
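The message-passing style can be illustrated with a minimal standard-library sketch. It does not use the Agents library itself: `MessageBuffer` is a hypothetical stand-in for a messaging block, `run_pipeline` is an invented example, and the two threads play the role of two isolated agents.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical minimal message buffer, standing in for a messaging block.
template <typename T>
class MessageBuffer {
public:
    void send(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push(std::move(value));
        }
        ready_.notify_one();
    }

    T receive() {  // blocks until a message is available
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !queue_.empty(); });
        T value = std::move(queue_.front());
        queue_.pop();
        return value;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> queue_;
};

// Two "agents": a producer that sends 1..count followed by a sentinel, and a
// consumer that sums what it receives. They communicate only through the
// buffer; the consumer's result is read after both threads have been joined.
int run_pipeline(int count) {
    assert(count >= 0);
    const int kDone = -1;  // sentinel marking the end of the stream
    MessageBuffer<int> channel;
    int total = 0;
    std::thread producer([&channel, count, kDone] {
        for (int i = 1; i <= count; ++i) channel.send(i);
        channel.send(kDone);
    });
    std::thread consumer([&channel, &total, kDone] {
        for (int m = channel.receive(); m != kDone; m = channel.receive())
            total += m;
    });
    producer.join();
    consumer.join();
    return total;
}
```

Because neither agent reaches into the other's state, each one can be reasoned about, tested and replaced on its own; all coordination is visible in the messages that cross the buffer.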


What next?

I hope this post gave you a better understanding of what the Concurrency Runtime is about. I will continue to detail each component and give more concrete examples as I dig into each feature. Your comments are very valuable to me, so please feel free to provide feedback.
