Extended eye tracking in native engine

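Extended eye tracking is a new capability in HoloLens 2. It's a superset of standard eye tracking, which only provides combined eye gaze data. Extended eye tracking also provides individual eye gaze data and lets applications set different frame rates for the gaze data, such as 30, 60, and 90 fps. Other features, such as eye openness and eye vergence, aren't currently supported by HoloLens 2.

The Extended Eye Tracking SDK enables applications to access the data and features of extended eye tracking. It can be used together with WinRT APIs or OpenXR APIs.

This article covers how to use the Extended Eye Tracking SDK in a native engine (C# or C++/WinRT) together with WinRT APIs.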

Project setup

-

使用 Visual Studio 2019 或更新版本建立

Holographic DirectX 11 App (Universal Windows)或Holographic DirectX 11 App (Universal Windows) (C++/WinRT)專案,或開啟現有的全像攝影 Visual Studio 專案。 - 將延伸眼球追蹤 SDK 匯入專案中。

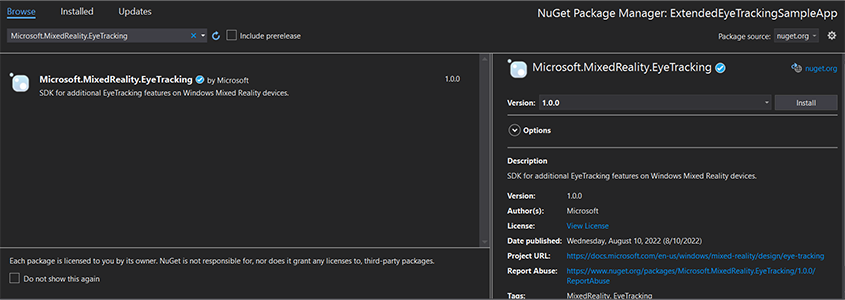

- 在 Visual Studio 方案總管中,以滑鼠右鍵按一下您的專案 - > 管理 NuGet 套件...

- 請確定右上角的套件來源指向 nuget.org: https://api.nuget.org/v3/index.json

- 按一下 [瀏覽器] 索引標籤,然後搜尋

Microsoft.MixedReality.EyeTracking。 - 按一下 [安裝] 按鈕以安裝最新版本的 SDK。

- 設定注視輸入功能

- 按兩下 方案總管 中的 Package.appxmanifest 檔案。

- 按一下 [ 功能] 索引標籤,然後檢查 [注視輸入]。

- 包含前端檔案並使用命名空間。

- 針對 C# 專案:

using Microsoft.MixedReality.EyeTracking;- 針對 C++/WinRT 專案:

#include <winrt/Microsoft.MixedReality.EyeTracking.h> using namespace winrt::Microsoft::MixedReality::EyeTracking; - 取用延伸眼球追蹤 SDK API 並實作您的邏輯。

- 建置並部署至 HoloLens。

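Overview of the steps to get gaze data

Getting eye gaze data through the Extended Eye Tracking SDK APIs requires the following steps:

- Get access to the eye tracking feature from the user.
- Watch for eye gaze tracker connections and disconnections.
- Open an eye gaze tracker, then query its capabilities.
- Repeatedly read gaze data from the eye gaze tracker.
- Transfer gaze data to other SpatialCoordinateSystems.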

Getting access to the eye tracking feature

To use any eye-related information, the application must first request the user's consent.

C#:

    var status = await Windows.Perception.People.EyesPose.RequestAccessAsync();
    bool useGaze = (status == Windows.UI.Input.GazeInputAccessStatus.Allowed);

C++/WinRT:

    // co_await already yields the GazeInputAccessStatus value, so no .get() is needed
    auto accessStatus = co_await winrt::Windows::Perception::People::EyesPose::RequestAccessAsync();
    bool useGaze = (accessStatus == winrt::Windows::UI::Input::GazeInputAccessStatus::Allowed);
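The consent check boils down to a single comparison: anything other than an explicit "allowed" means no gaze. The portable C++ sketch below mirrors that rule so it can be exercised without a device. `GazeAccess` is a hypothetical stand-in whose values are assumed to mirror `Windows.UI.Input.GazeInputAccessStatus` (Unspecified, Allowed, DeniedByUser, DeniedBySystem), and `ShouldUseGaze` is an illustrative helper, not an SDK function.

```cpp
#include <cassert>

// Hypothetical stand-in assumed to mirror Windows.UI.Input.GazeInputAccessStatus.
enum class GazeAccess { Unspecified, Allowed, DeniedByUser, DeniedBySystem };

// Gaze data may be used only when access was explicitly allowed; every other
// status, including Unspecified, is treated as "do not use gaze".
bool ShouldUseGaze(GazeAccess status)
{
    return status == GazeAccess::Allowed;
}
```

Treating `Unspecified` as a denial keeps the application on the safe side if the request didn't complete normally.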

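Detecting eye gaze trackers

Eye gaze tracker detection is done through the EyeGazeTrackerWatcher class.

The EyeGazeTrackerAdded and EyeGazeTrackerRemoved events are raised when an eye gaze tracker is detected or disconnected, respectively.

The watcher must be started explicitly with the StartAsync() method. This method completes asynchronously once the trackers that are already connected have been signaled through the EyeGazeTrackerAdded event.

When an eye gaze tracker is detected, an EyeGazeTracker instance is passed to the application in the EyeGazeTrackerAdded event parameters. Conversely, when a tracker is disconnected, the corresponding EyeGazeTracker instance is passed to the EyeGazeTrackerRemoved event.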

C#:

    EyeGazeTrackerWatcher watcher = new EyeGazeTrackerWatcher();
    watcher.EyeGazeTrackerAdded += _watcher_EyeGazeTrackerAdded;
    watcher.EyeGazeTrackerRemoved += _watcher_EyeGazeTrackerRemoved;
    await watcher.StartAsync();
    ...

    private async void _watcher_EyeGazeTrackerAdded(object sender, EyeGazeTracker e)
    {
        // Implementation is in the next section
    }

    private void _watcher_EyeGazeTrackerRemoved(object sender, EyeGazeTracker e)
    {
        ...
    }

C++/WinRT:

    using namespace std::placeholders; // _1 and _2 for std::bind (declared in <functional>)

    EyeGazeTrackerWatcher watcher;
    watcher.EyeGazeTrackerAdded(std::bind(&SampleEyeTrackingNugetClientAppMain::OnEyeGazeTrackerAdded, this, _1, _2));
    watcher.EyeGazeTrackerRemoved(std::bind(&SampleEyeTrackingNugetClientAppMain::OnEyeGazeTrackerRemoved, this, _1, _2));
    co_await watcher.StartAsync();
    ...

    winrt::Windows::Foundation::IAsyncAction SampleEyeTrackingNugetClientAppMain::OnEyeGazeTrackerAdded(const EyeGazeTrackerWatcher& sender, const EyeGazeTracker& tracker)
    {
        // Implementation is in the next section
        co_return;
    }

    void SampleEyeTrackingNugetClientAppMain::OnEyeGazeTrackerRemoved(const EyeGazeTrackerWatcher& sender, const EyeGazeTracker& tracker)
    {
        ...
    }
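The removal handler is where an application usually has to forget a tracker it stored earlier. The following self-contained C++ sketch models the watcher pattern with hypothetical `MockWatcher`, `MockTracker`, and `App` types. None of them are SDK types. It shows one reasonable policy: keep the first tracker reported, and clear it only when that same tracker is removed. This assumes the removed instance compares equal to the one received in the added event, which is what "the corresponding EyeGazeTracker instance" suggests. `Start()` raises "added" for trackers that are already connected before returning, mirroring how `StartAsync()` completes.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for EyeGazeTracker: only an identity is modeled.
struct MockTracker
{
    std::string id;
};
using TrackerPtr = std::shared_ptr<MockTracker>;

// Hypothetical stand-in for EyeGazeTrackerWatcher.
class MockWatcher
{
public:
    std::function<void(const TrackerPtr&)> onAdded;
    std::function<void(const TrackerPtr&)> onRemoved;

    explicit MockWatcher(std::vector<TrackerPtr> connected) : m_connected(std::move(connected)) {}

    // Signals every already-connected tracker before returning.
    void Start()
    {
        m_started = true;
        for (const auto& t : m_connected)
            if (onAdded) onAdded(t);
    }

    // Simulates a tracker being connected after Start().
    void Connect(TrackerPtr t)
    {
        m_connected.push_back(t);
        if (m_started && onAdded) onAdded(t);
    }

    // Simulates a tracker being disconnected; unknown trackers are ignored.
    void Disconnect(TrackerPtr t)
    {
        const auto it = std::find(m_connected.begin(), m_connected.end(), t);
        if (it == m_connected.end()) return;
        m_connected.erase(it);
        if (m_started && onRemoved) onRemoved(t);
    }

private:
    std::vector<TrackerPtr> m_connected;
    bool m_started = false;
};

// Application state: one active tracker, cleared only when that tracker goes away.
struct App
{
    TrackerPtr active;

    void OnAdded(const TrackerPtr& t)
    {
        if (!active) active = t;
    }

    void OnRemoved(const TrackerPtr& t)
    {
        if (active == t) active.reset();
    }
};
```

Removing a second tracker leaves the active one untouched. Only the removal of the active tracker resets the application state.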

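Opening an eye gaze tracker

When the application receives an EyeGazeTracker instance, it must first open it by calling the OpenAsync() method. It can then query the tracker capabilities if needed. The OpenAsync() method takes a Boolean parameter. This parameter indicates whether the application needs access to features that aren't part of standard eye tracking, such as individual eye gaze vectors or changing the tracker's frame rate.

Combined gaze is a mandatory feature supported by all eye gaze trackers. Other features, such as access to individual gazes, are optional, and their availability depends on the tracker and its driver. For each optional feature, the EyeGazeTracker class exposes a property indicating whether the feature is supported. For example, the AreLeftAndRightGazesSupported property indicates whether the device supports individual eye gaze information.

All spatial information exposed by the eye gaze tracker is expressed relative to the tracker itself, which is identified by a dynamic node ID. Use that node ID with WinRT APIs to locate the tracker and transform the gaze data coordinates into another coordinate system.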

C#:

    private async void _watcher_EyeGazeTrackerAdded(object sender, EyeGazeTracker e)
    {
        try
        {
            // Try to open the tracker with access to restricted features
            await e.OpenAsync(true);

            // If it has succeeded, store it for future use
            _tracker = e;

            // Check support for individual eye gaze
            bool supportsIndividualEyeGaze = _tracker.AreLeftAndRightGazesSupported;

            // Get a spatial locator for the tracker. This will be used to transfer the gaze data to other coordinate systems later
            var trackerNodeId = e.TrackerSpaceLocatorNodeId;
            _trackerLocator = Windows.Perception.Spatial.Preview.SpatialGraphInteropPreview.CreateLocatorForNode(trackerNodeId);
        }
        catch (Exception ex)
        {
            // Unable to open the tracker
        }
    }

C++/WinRT:

    winrt::Windows::Foundation::IAsyncAction SampleEyeTrackingNugetClientAppMain::OnEyeGazeTrackerAdded(const EyeGazeTrackerWatcher&, const EyeGazeTracker& tracker)
    {
        // Copy the tracker before the first co_await: a reference parameter
        // isn't guaranteed to stay valid after the coroutine suspends
        auto newTracker = tracker;

        try
        {
            // Try to open the tracker with access to restricted features
            co_await newTracker.OpenAsync(true);

            // If it has succeeded, store it for future use
            m_gazeTracker = newTracker;

            // Check support for individual eye gaze
            const bool supportsIndividualEyeGaze = m_gazeTracker.AreLeftAndRightGazesSupported();

            // Get a spatial locator for the tracker. This will be used to transfer the gaze data to other coordinate systems later
            const auto trackerNodeId = m_gazeTracker.TrackerSpaceLocatorNodeId();
            m_trackerLocator = winrt::Windows::Perception::Spatial::Preview::SpatialGraphInteropPreview::CreateLocatorForNode(trackerNodeId);
        }
        catch (const winrt::hresult_error& e)
        {
            // Unable to open the tracker
        }
    }
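After a tracker is open, the application still has to decide which data to rely on. Combined gaze is always available, but the extended features depend on both the open mode and the capability flags. The portable C++ sketch below encodes one possible policy as a pure function. `TrackerCaps`, `GazePlan`, and `PlanGazeFeatures` are hypothetical names, not SDK types. `TrackerCaps` is assumed to be filled from `AreLeftAndRightGazesSupported` and the size of `SupportedTargetFrameRates`.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical snapshot of what the application reads from an opened tracker.
struct TrackerCaps
{
    bool leftAndRightGazesSupported;     // from AreLeftAndRightGazesSupported
    std::size_t supportedFrameRateCount; // from SupportedTargetFrameRates
};

// The features the application decides to use with this tracker.
struct GazePlan
{
    bool useCombinedGaze;
    bool useIndividualGazes;
    bool canChangeFrameRate;
};

// Combined gaze is mandatory, so it is always used. Individual gazes and frame
// rate changes are extended features: they are used only when the tracker was
// opened with OpenAsync(true) and reports support for them.
GazePlan PlanGazeFeatures(const TrackerCaps& caps, bool openedWithRestrictedAccess)
{
    GazePlan plan{};
    plan.useCombinedGaze = true;
    plan.useIndividualGazes = openedWithRestrictedAccess && caps.leftAndRightGazesSupported;
    plan.canChangeFrameRate = openedWithRestrictedAccess && caps.supportedFrameRateCount > 0;
    return plan;
}
```

Suppose an application retries with `OpenAsync(false)` after `OpenAsync(true)` fails, an approach the sample above does not show. Passing `false` then limits it to combined gaze.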

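Setting the eye gaze tracker frame rate

The EyeGazeTracker.SupportedTargetFrameRates property returns the list of target frame rates supported by the tracker. HoloLens 2 supports 30, 60, and 90 fps.

Use the EyeGazeTracker.SetTargetFrameRate() method to set the target frame rate.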

C#:

    // On HoloLens 2, this returns the supported frame rates 30, 60, and 90 fps, in that order
    var supportedFrameRates = _tracker.SupportedTargetFrameRates;

    // Set the tracker to the highest supported frame rate (90 fps).
    // Changing the frame rate requires the tracker to have been opened with OpenAsync(true).
    var newFrameRate = supportedFrameRates[supportedFrameRates.Count - 1];
    _tracker.SetTargetFrameRate(newFrameRate);
    uint newFramesPerSecond = newFrameRate.FramesPerSecond;

C++/WinRT:

    // On HoloLens 2, this returns the supported frame rates 30, 60, and 90 fps, in that order
    const auto supportedFrameRates = m_gazeTracker.SupportedTargetFrameRates();

    // Set the tracker to the highest supported frame rate (90 fps).
    // Changing the frame rate requires the tracker to have been opened with OpenAsync(true).
    const auto newFrameRate = supportedFrameRates.GetAt(supportedFrameRates.Size() - 1);
    m_gazeTracker.SetTargetFrameRate(newFrameRate);
    const uint32_t newFramesPerSecond = newFrameRate.FramesPerSecond();
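The sample above takes the last element, which relies on the list being sorted in ascending order, as it is on HoloLens 2. If you'd rather not depend on that ordering, or want a specific rate, you can search the list instead. The following portable C++ sketch shows that approach. `FrameRate` is a hypothetical stand-in for `EyeGazeTrackerFrameRate`, and `PickClosestFrameRate` is an illustrative helper, not part of the SDK.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical stand-in for EyeGazeTrackerFrameRate: only FramesPerSecond is modeled.
struct FrameRate
{
    std::uint32_t framesPerSecond;
};

// Returns the supported rate closest to desiredFps (ties go to the higher rate),
// or std::nullopt when the list is empty. The result doesn't depend on list order.
std::optional<FrameRate> PickClosestFrameRate(const std::vector<FrameRate>& supported, std::uint32_t desiredFps)
{
    std::optional<FrameRate> best;
    std::uint32_t bestDistance = 0;
    for (const FrameRate& rate : supported)
    {
        const std::uint32_t distance = rate.framesPerSecond > desiredFps
            ? rate.framesPerSecond - desiredFps
            : desiredFps - rate.framesPerSecond;
        const bool better = !best
            || distance < bestDistance
            || (distance == bestDistance && rate.framesPerSecond > best->framesPerSecond);
        if (better)
        {
            best = rate;
            bestDistance = distance;
        }
    }
    return best;
}
```

Passing a very large `desiredFps` selects the highest rate, which matches what the sample does. The selected entry would then be passed to `SetTargetFrameRate()`.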

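Reading gaze data from the eye gaze tracker

The eye gaze tracker periodically publishes its state in a circular buffer. This lets the application read the tracker state at any time within a small time window. For example, the application can retrieve the most recent state of the tracker, or its state at the time of some event, such as a user gesture.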

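Methods that retrieve the tracker state as an EyeGazeTrackerReading instance:

- The TryGetReadingAtTimestamp() and TryGetReadingAtSystemRelativeTime() methods return the EyeGazeTrackerReading closest to the time passed by the application. The tracker controls the publishing schedule, so the returned reading may be slightly older or newer than the requested time. The EyeGazeTrackerReading.Timestamp and EyeGazeTrackerReading.SystemRelativeTime properties let the application know the exact time of the published state.
- The TryGetReadingAfterTimestamp() and TryGetReadingAfterSystemRelativeTime() methods return the first EyeGazeTrackerReading whose timestamp is strictly greater than the time passed as a parameter. This lets the application read all the states published by the tracker, in sequence.

Note that all these methods query the existing buffer and return immediately. If no state is available, they return null. In other words, they don't make the application wait for a state to be published.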

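In addition to timestamps, an EyeGazeTrackerReading instance has an IsCalibrationValid property, which indicates whether the eye tracker calibration is valid.

Finally, gaze data can be retrieved through a set of methods such as TryGetCombinedEyeGazeInTrackerSpace() or TryGetLeftEyeGazeInTrackerSpace(). All these methods return a Boolean indicating success. A failure to get some data can mean either that the data isn't supported (EyeGazeTracker has properties to detect this case) or that the tracker couldn't get the data (for example, because of an invalid calibration or hidden eyes).

For example, if the application wants to display a cursor corresponding to the combined gaze, it can query the tracker using the timestamp of the prediction for the frame being prepared, as follows.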

C#:

    var holographicFrame = holographicSpace.CreateNextFrame();
    var prediction = holographicFrame.CurrentPrediction;
    var predictionTimestamp = prediction.Timestamp;
    var reading = _tracker.TryGetReadingAtTimestamp(predictionTimestamp.TargetTime.DateTime);
    if (reading != null)
    {
        // Vector3 needs the System.Numerics namespace
        if (reading.TryGetCombinedEyeGazeInTrackerSpace(out Vector3 gazeOrigin, out Vector3 gazeDirection))
        {
            // Use gazeOrigin and gazeDirection to display the cursor
        }
    }

C++/WinRT:

    auto holographicFrame = m_holographicSpace.CreateNextFrame();
    auto prediction = holographicFrame.CurrentPrediction();
    auto predictionTimestamp = prediction.Timestamp();
    const auto reading = m_gazeTracker.TryGetReadingAtTimestamp(predictionTimestamp.TargetTime());
    if (reading)
    {
        // float3 is winrt::Windows::Foundation::Numerics::float3
        float3 gazeOrigin;
        float3 gazeDirection;
        if (reading.TryGetCombinedEyeGazeInTrackerSpace(gazeOrigin, gazeDirection))
        {
            // Use gazeOrigin and gazeDirection to display the cursor
        }
    }
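The cursor sample above simply skips the frame when the combined gaze can't be obtained. An application may want a more explicit policy. The following portable C++ sketch encodes one such policy, which is an assumption and not SDK behavior. It ignores readings whose calibration is invalid, prefers the mandatory combined gaze, and falls back to a single eye only when the combined gaze is missing. `MockReading` is hypothetical. Each `std::optional` field models the out parameters of a `Try...InTrackerSpace()` call that returned true.

```cpp
#include <cassert>
#include <optional>

struct Vec3 { float x, y, z; };
struct GazeRay { Vec3 origin; Vec3 direction; };

// Hypothetical stand-in for one EyeGazeTrackerReading.
struct MockReading
{
    bool isCalibrationValid;        // models IsCalibrationValid
    std::optional<GazeRay> combined; // models TryGetCombinedEyeGazeInTrackerSpace()
    std::optional<GazeRay> left;     // models TryGetLeftEyeGazeInTrackerSpace()
    std::optional<GazeRay> right;    // models TryGetRightEyeGazeInTrackerSpace()
};

enum class GazeSource { None, Combined, Left, Right };

// One possible policy: no gaze without a valid calibration, otherwise the
// combined gaze first, then the left eye, then the right eye.
GazeSource ChooseGazeSource(const MockReading& reading)
{
    if (!reading.isCalibrationValid) return GazeSource::None;
    if (reading.combined) return GazeSource::Combined;
    if (reading.left) return GazeSource::Left;
    if (reading.right) return GazeSource::Right;
    return GazeSource::None;
}
```

Individual eye gazes are only present when the tracker supports them and was opened for restricted features. On a tracker without that support, this policy reduces to "combined gaze or nothing".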

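Transforming gaze data to another SpatialCoordinateSystem

WinRT APIs that return spatial data, such as a position, always require both a PerceptionTimestamp and a SpatialCoordinateSystem. For example, to retrieve the HoloLens 2 combined gaze with the WinRT API, SpatialPointerPose.TryGetAtTimestamp() requires two parameters: a SpatialCoordinateSystem and a PerceptionTimestamp. When the combined gaze is then accessed through SpatialPointerPose.Eyes.Gaze, its origin and direction are expressed in the SpatialCoordinateSystem that was passed in.

The Extended Eye Tracking SDK APIs don't take a SpatialCoordinateSystem, and gaze data is always expressed in the tracker's coordinate system. However, you can transform this gaze data into another coordinate system by using the pose of the tracker relative to that coordinate system.

- As mentioned in the "Opening an eye gaze tracker" section above, to get a SpatialLocator for the eye gaze tracker, call Windows.Perception.Spatial.Preview.SpatialGraphInteropPreview.CreateLocatorForNode() with the EyeGazeTracker.TrackerSpaceLocatorNodeId property.
- Gaze origins and directions retrieved through EyeGazeTrackerReading are relative to the eye gaze tracker.
- SpatialLocator.TryLocateAtTimestamp() returns the full 6DoF location of the eye gaze tracker at the given PerceptionTimestamp, relative to the given SpatialCoordinateSystem. This location can be used to construct a Matrix4x4 transformation matrix.
- Use the constructed Matrix4x4 transformation matrix to transfer gaze origins and directions to the other SpatialCoordinateSystem. Points such as the gaze origin take the full transform (for example, Vector3.Transform). Directions should only be rotated (for example, Vector3.TransformNormal) so that the tracker's translation isn't applied to them.

The following code sample shows how to compute the position of a cube placed along the combined gaze direction, two meters in front of the gaze origin: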

C#:

    var predictionTimestamp = prediction.Timestamp;
    var stationaryCS = stationaryReferenceFrame.CoordinateSystem;
    var trackerLocation = _trackerLocator.TryLocateAtTimestamp(predictionTimestamp, stationaryCS);
    if (trackerLocation != null)
    {
        var trackerToStationaryMatrix = Matrix4x4.CreateFromQuaternion(trackerLocation.Orientation) * Matrix4x4.CreateTranslation(trackerLocation.Position);
        var reading = _tracker.TryGetReadingAtTimestamp(predictionTimestamp.TargetTime.DateTime);
        if (reading != null)
        {
            if (reading.TryGetCombinedEyeGazeInTrackerSpace(out Vector3 gazeOriginInTrackerSpace, out Vector3 gazeDirectionInTrackerSpace))
            {
                var cubePositionInTrackerSpace = gazeOriginInTrackerSpace + 2.0f * gazeDirectionInTrackerSpace;
                var cubePositionInStationaryCS = Vector3.Transform(cubePositionInTrackerSpace, trackerToStationaryMatrix);
            }
        }
    }

C++/WinRT:

    auto predictionTimestamp = prediction.Timestamp();
    auto stationaryCS = m_stationaryReferenceFrame.CoordinateSystem();
    auto trackerLocation = m_trackerLocator.TryLocateAtTimestamp(predictionTimestamp, stationaryCS);
    if (trackerLocation)
    {
        auto trackerOrientation = trackerLocation.Orientation();
        auto trackerPosition = trackerLocation.Position();
        auto trackerToStationaryMatrix = DirectX::XMMatrixRotationQuaternion(DirectX::XMLoadFloat4(reinterpret_cast<const DirectX::XMFLOAT4*>(&trackerOrientation))) * DirectX::XMMatrixTranslationFromVector(DirectX::XMLoadFloat3(&trackerPosition));
        const auto reading = m_gazeTracker.TryGetReadingAtTimestamp(predictionTimestamp.TargetTime());
        if (reading)
        {
            float3 gazeOriginInTrackerSpace;
            float3 gazeDirectionInTrackerSpace;
            if (reading.TryGetCombinedEyeGazeInTrackerSpace(gazeOriginInTrackerSpace, gazeDirectionInTrackerSpace))
            {
                auto cubePositionInTrackerSpace = gazeOriginInTrackerSpace + 2.0f * gazeDirectionInTrackerSpace;
                float3 cubePositionInStationaryCS;
                DirectX::XMStoreFloat3(&cubePositionInStationaryCS, DirectX::XMVector3TransformCoord(DirectX::XMLoadFloat3(&cubePositionInTrackerSpace), trackerToStationaryMatrix));
            }
        }
    }
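The samples above rely on a rigid transform: rotate by the tracker's orientation, then add its position. The dependency-free C++ sketch below spells that math out. Its `Vec3`, `Quat`, and `Pose` types are hypothetical stand-ins for System.Numerics or DirectXMath types, not SDK types. It makes explicit that points (the gaze origin and the cube) get the full transform, while a gaze direction only gets the rotation.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; }; // unit quaternion, x/y/z/w layout

Vec3 Add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 Scale(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
Vec3 Cross(Vec3 a, Vec3 b) { return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x}; }

// Rotates v by unit quaternion q: t = 2 (u x v), v' = v + w t + u x t, with u = (q.x, q.y, q.z).
Vec3 Rotate(Quat q, Vec3 v)
{
    const Vec3 u{q.x, q.y, q.z};
    const Vec3 t = Scale(Cross(u, v), 2.0f);
    return Add(Add(v, Scale(t, q.w)), Cross(u, t));
}

// Tracker pose in the target coordinate system, as an Orientation + Position pair
// like the one SpatialLocator.TryLocateAtTimestamp() provides.
struct Pose { Quat orientation; Vec3 position; };

// Points: rotate, then translate (same order as CreateFromQuaternion * CreateTranslation).
Vec3 TransformPoint(const Pose& pose, Vec3 p) { return Add(Rotate(pose.orientation, p), pose.position); }

// Directions: rotate only; adding the tracker position to a direction would be a bug.
Vec3 TransformDirection(const Pose& pose, Vec3 d) { return Rotate(pose.orientation, d); }
```

Consider a tracker rotated 90° about +Y and located at (1, 0, 0), with a gaze from the tracker origin along its -Z axis. The cube two meters ahead then lands at (-1, 0, 0), and the transformed gaze direction is (-1, 0, 0).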

API reference for the Extended Eye Tracking SDK

    namespace Microsoft.MixedReality.EyeTracking
    {
        /// <summary>
        /// Allow discovery of Eye Gaze Trackers connected to the system
        /// This is the only class from Extended Eye Tracking SDK that the application will instantiate,
        /// other classes' instances will be returned by method calls or properties.
        /// </summary>
        public class EyeGazeTrackerWatcher
        {
            /// <summary>
            /// Constructs an instance of the watcher
            /// </summary>
            public EyeGazeTrackerWatcher();

            /// <summary>
            /// Starts trackers enumeration.
            /// </summary>
            /// <returns>Task representing async action; completes when the initial enumeration is completed</returns>
            public System.Threading.Tasks.Task StartAsync();

            /// <summary>
            /// Stop listening to trackers additions and removal
            /// </summary>
            public void Stop();

            /// <summary>
            /// Raised when an Eye Gaze tracker is connected
            /// </summary>
            public event System.EventHandler<EyeGazeTracker> EyeGazeTrackerAdded;

            /// <summary>
            /// Raised when an Eye Gaze tracker is disconnected
            /// </summary>
            public event System.EventHandler<EyeGazeTracker> EyeGazeTrackerRemoved;
        }

        /// <summary>
        /// Represents an Eye Tracker device
        /// </summary>
        public class EyeGazeTracker
        {
            /// <summary>
            /// True if Restricted mode is supported, which means the driver supports providing individual
            /// eye gaze vectors and changing the frame rate
            /// </summary>
            public bool IsRestrictedModeSupported;

            /// <summary>
            /// True if Vergence Distance is supported by tracker
            /// </summary>
            public bool IsVergenceDistanceSupported;

            /// <summary>
            /// True if Eye Openness is supported by the driver
            /// </summary>
            public bool IsEyeOpennessSupported;

            /// <summary>
            /// True if individual gazes are supported
            /// </summary>
            public bool AreLeftAndRightGazesSupported;

            /// <summary>
            /// Get the supported target frame rates of the tracker
            /// </summary>
            public System.Collections.Generic.IReadOnlyList<EyeGazeTrackerFrameRate> SupportedTargetFrameRates;

            /// <summary>
            /// NodeId of the tracker, used to retrieve a SpatialLocator or SpatialGraphNode to locate the tracker in the scene
            /// for Perception API, use SpatialGraphInteropPreview.CreateLocatorForNode
            /// for Mixed Reality OpenXR API, use SpatialGraphNode.FromDynamicNodeId
            /// </summary>
            public Guid TrackerSpaceLocatorNodeId;

            /// <summary>
            /// Opens the tracker
            /// </summary>
            /// <param name="restrictedMode">True if restricted mode active</param>
            /// <returns>Task representing async action; completes when the tracker is opened</returns>
            public System.Threading.Tasks.Task OpenAsync(bool restrictedMode);

            /// <summary>
            /// Closes the tracker
            /// </summary>
            public void Close();

            /// <summary>
            /// Changes the target frame rate of the tracker
            /// </summary>
            /// <param name="newFrameRate">Target frame rate</param>
            public void SetTargetFrameRate(EyeGazeTrackerFrameRate newFrameRate);

            /// <summary>
            /// Try to get tracker state at a given timestamp
            /// </summary>
            /// <param name="timestamp">timestamp</param>
            /// <returns>State if available, null otherwise</returns>
            public EyeGazeTrackerReading TryGetReadingAtTimestamp(DateTime timestamp);

            /// <summary>
            /// Try to get tracker state at a system relative time
            /// </summary>
            /// <param name="time">time</param>
            /// <returns>State if available, null otherwise</returns>
            public EyeGazeTrackerReading TryGetReadingAtSystemRelativeTime(TimeSpan time);

            /// <summary>
            /// Try to get the first tracker state after a given timestamp
            /// </summary>
            /// <param name="timestamp">timestamp</param>
            /// <returns>State if available, null otherwise</returns>
            public EyeGazeTrackerReading TryGetReadingAfterTimestamp(DateTime timestamp);

            /// <summary>
            /// Try to get the first tracker state after a system relative time
            /// </summary>
            /// <param name="time">time</param>
            /// <returns>State if available, null otherwise</returns>
            public EyeGazeTrackerReading TryGetReadingAfterSystemRelativeTime(TimeSpan time);
        }

        /// <summary>
        /// Represents a Frame Rate supported by an Eye Tracker
        /// </summary>
        public class EyeGazeTrackerFrameRate
        {
            /// <summary>
            /// Frames per second of the frame rate
            /// </summary>
            public UInt32 FramesPerSecond;
        }

        /// <summary>
        /// Snapshot of Gaze Tracker state
        /// </summary>
        public class EyeGazeTrackerReading
        {
            /// <summary>
            /// Timestamp of state
            /// </summary>
            public DateTime Timestamp;

            /// <summary>
            /// Timestamp of state as system relative time
            /// Its SystemRelativeTime.Ticks could provide the QPC time to locate tracker pose
            /// </summary>
            public TimeSpan SystemRelativeTime;

            /// <summary>
            /// Indicates user calibration is valid
            /// </summary>
            public bool IsCalibrationValid;

            /// <summary>
            /// Tries to get a vector representing the combined gaze related to the tracker's node
            /// </summary>
            /// <param name="origin">Origin of the gaze vector</param>
            /// <param name="direction">Direction of the gaze vector</param>
            /// <returns>True if the gaze vector is valid</returns>
            public bool TryGetCombinedEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

            /// <summary>
            /// Tries to get a vector representing the left eye gaze related to the tracker's node
            /// </summary>
            /// <param name="origin">Origin of the gaze vector</param>
            /// <param name="direction">Direction of the gaze vector</param>
            /// <returns>True if the gaze vector is valid</returns>
            public bool TryGetLeftEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

            /// <summary>
            /// Tries to get a vector representing the right eye gaze related to the tracker's node position
            /// </summary>
            /// <param name="origin">Origin of the gaze vector</param>
            /// <param name="direction">Direction of the gaze vector</param>
            /// <returns>True if the gaze vector is valid</returns>
            public bool TryGetRightEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

            /// <summary>
            /// Tries to read vergence distance
            /// </summary>
            /// <param name="value">Vergence distance if available</param>
            /// <returns>True if the value is valid</returns>
            public bool TryGetVergenceDistance(out float value);

            /// <summary>
            /// Tries to get left Eye openness information
            /// </summary>
            /// <param name="value">Eye Openness if valid</param>
            /// <returns>True if the value is valid</returns>
            public bool TryGetLeftEyeOpenness(out float value);

            /// <summary>
            /// Tries to get right Eye openness information
            /// </summary>
            /// <param name="value">Eye Openness if valid</param>
            /// <returns>True if the value is valid</returns>
            public bool TryGetRightEyeOpenness(out float value);
        }
    }