Test your agents

Because an agent is made up of multiple topics, it's important to ensure that each topic works as intended and responds appropriately when users interact with it. For example, to make sure that your Store Hours topic is triggered when someone asks about store hours, you can test your agent and confirm that it responds appropriately.

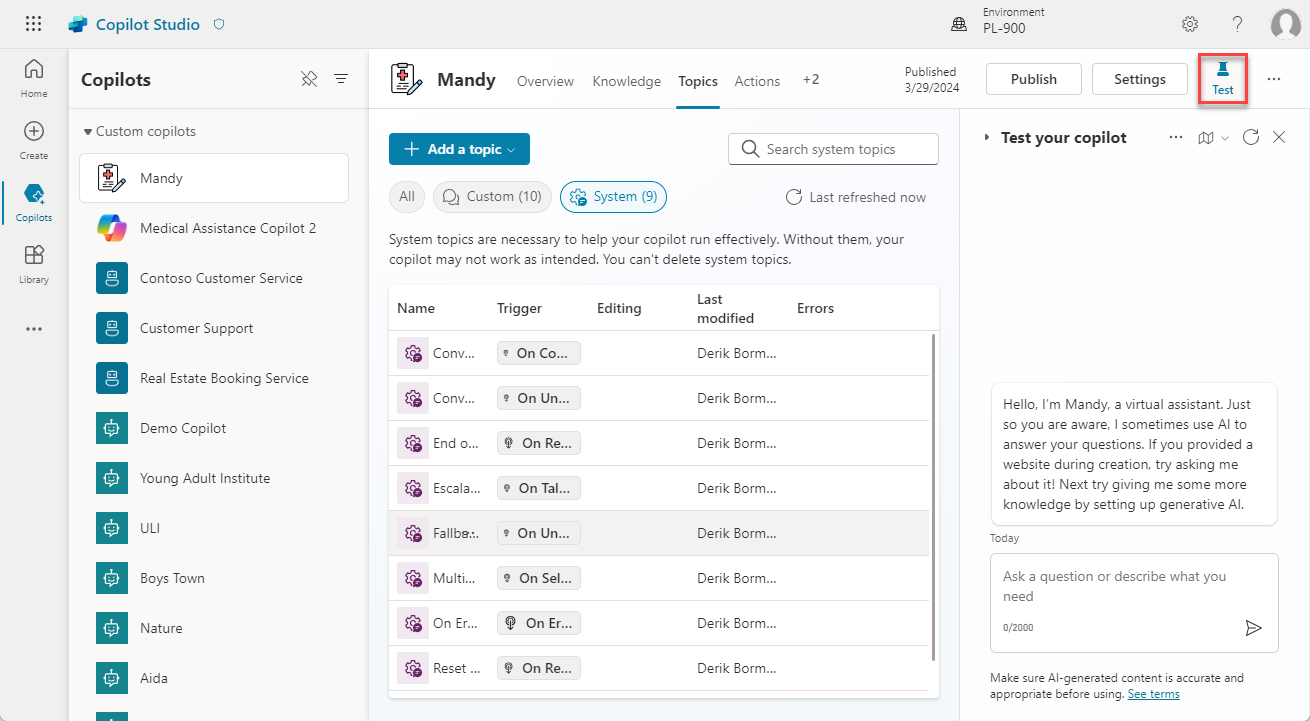

You can test your agent in real time by using the test pane, which you open by selecting Test in the upper-right corner of the application. To hide the test pane, select Test again.

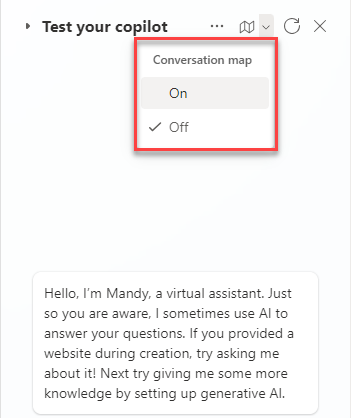

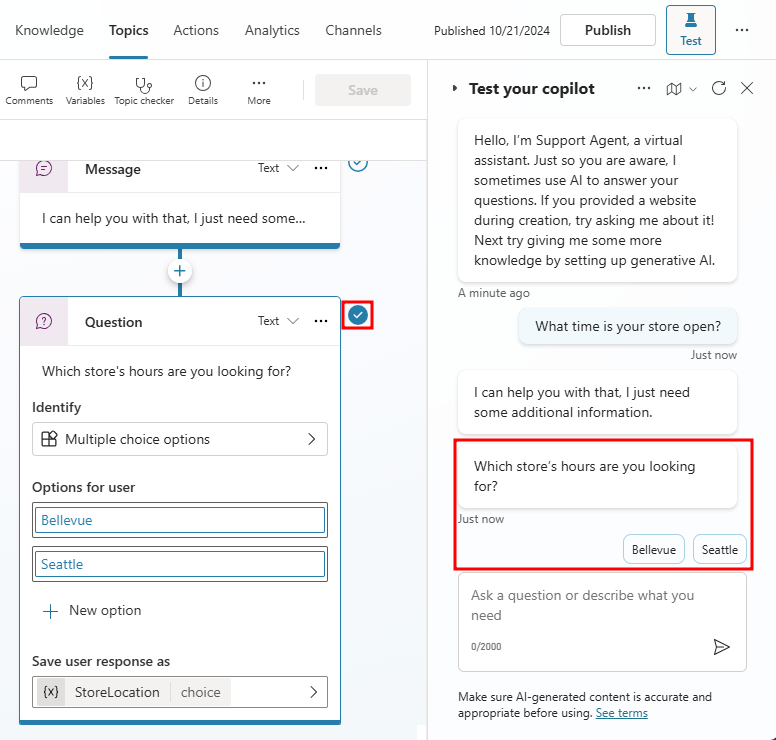

The Test your agent window interacts with your agent's topics just as a user would. As you enter text in the test pane, responses are presented exactly as a user would see them. Because your agent likely contains multiple topics, it can be handy to have the application take you to the topic you're engaging with. To do this, set Track between topics to On at the top of the test pane. The application then follows along as the agent moves between topics. For example, typing "hello" triggers the Greeting topic, and the application opens the Greeting topic and displays its conversation path. If you then type "What time is your store open?", the application switches to the Store Hours topic. As each topic is displayed, you can observe how the conversation path progresses, which helps you evaluate how well your topics are working.

The following image shows that the "What time is your store open?" message was sent to the agent. Notice that you're automatically taken to the Store Hours topic, and the conversation path is highlighted in green. The agent is now waiting for your response and offers two suggestion buttons. These buttons reflect the Seattle and Bellevue user options that were defined when the topic was created. In the test pane, you can select either button to continue.

As you select an option, you continue down the conversation path until you reach the end. The chat stops when you reach the bottom of this branch.

By testing your agents often throughout the creation process, you can ensure that the conversation flows as anticipated. If the dialog doesn't behave as you intended, edit it and save your changes. The latest content is pushed to the test pane, where you can try it again.

Test generative answers

The agent uses generative answers as a fallback when it's unable to identify a topic that provides an acceptable answer.

When testing generative answers, ask a question that is relevant to the data sources you defined for Generative AI but that none of your topics can answer. Your agent searches the defined data sources to find an answer. Once an answer is displayed, you can ask follow-up questions. The agent remembers the context, so you don't have to repeat or clarify what you're asking about. For example, suppose an agent uses Microsoft Learn as a data source and you ask, "What is an IF statement used for in Microsoft Excel?" The agent returns details about the IF function. If you then ask, "Provide me with an example," the agent recognizes that you're still talking about Microsoft Excel and provides an example.
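The fallback order described in this section can be modeled in a short Python sketch. It is a conceptual illustration only and not how the agent actually recognizes topics. Real topic recognition is far more sophisticated than the substring matching used here. The topic table, trigger phrases, and the `match_topic`, `route`, and `fake_generative_answer` functions are all hypothetical. The sketch shows why a good test question for generative answers is one that matches no topic: it falls through to the generative answer path.

```python
from typing import Callable, Optional

# Hypothetical topics and trigger phrases, for illustration only.
# Real agents do not match topics with simple substring checks.
TOPICS: dict[str, list[str]] = {
    "Greeting": ["hello"],
    "Store Hours": ["store open", "store hours"],
}


def match_topic(message: str) -> Optional[str]:
    """Return the first topic whose trigger phrase appears in the message, or None."""
    text = message.lower()
    for topic, triggers in TOPICS.items():
        if any(trigger in text for trigger in triggers):
            return topic
    return None


def route(message: str, generative_answer: Callable[[str], str]) -> str:
    """Use a matching topic if one exists; otherwise fall back to generative answers."""
    topic = match_topic(message)
    if topic is not None:
        return f"topic:{topic}"
    return generative_answer(message)


def fake_generative_answer(message: str) -> str:
    # Hypothetical stand-in for searching the configured data sources.
    return "generative:" + message


if __name__ == "__main__":
    print(route("hello", fake_generative_answer))
    print(route("What time is your store open?", fake_generative_answer))
    print(route("What is an IF statement used for in Microsoft Excel?", fake_generative_answer))
```

In this model, "hello" and the store-hours question are handled by topics. The Excel question matches no trigger and reaches the generative answer stand-in, which mirrors the kind of question you would use when testing generative answers.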