Introduction to ingestion

Important

Some or all of this functionality is available as part of a preview release. The content and the functionality are subject to change.

Important

This document includes several references to the ISA95 semantic model. Visit the ISA95 website and store to purchase the ISA95 standards.

The ingestion layer in Manufacturing data solutions in Microsoft Fabric enables partners and customers to bring normalized data from ISV/partner pipelines into Manufacturing data solutions stores. Manufacturing data solutions triggers an ingestion process when data lands in the customer's Fabric OneLake or designated Event Hubs. The ingestion layer expects specific file formats, file names, and data schemas. It also expects data to be semantically modeled using the Manufacturing data solutions data model, which is based on ISA95 and is described further in the Functionality section. For more information, see the ISA95 standards.

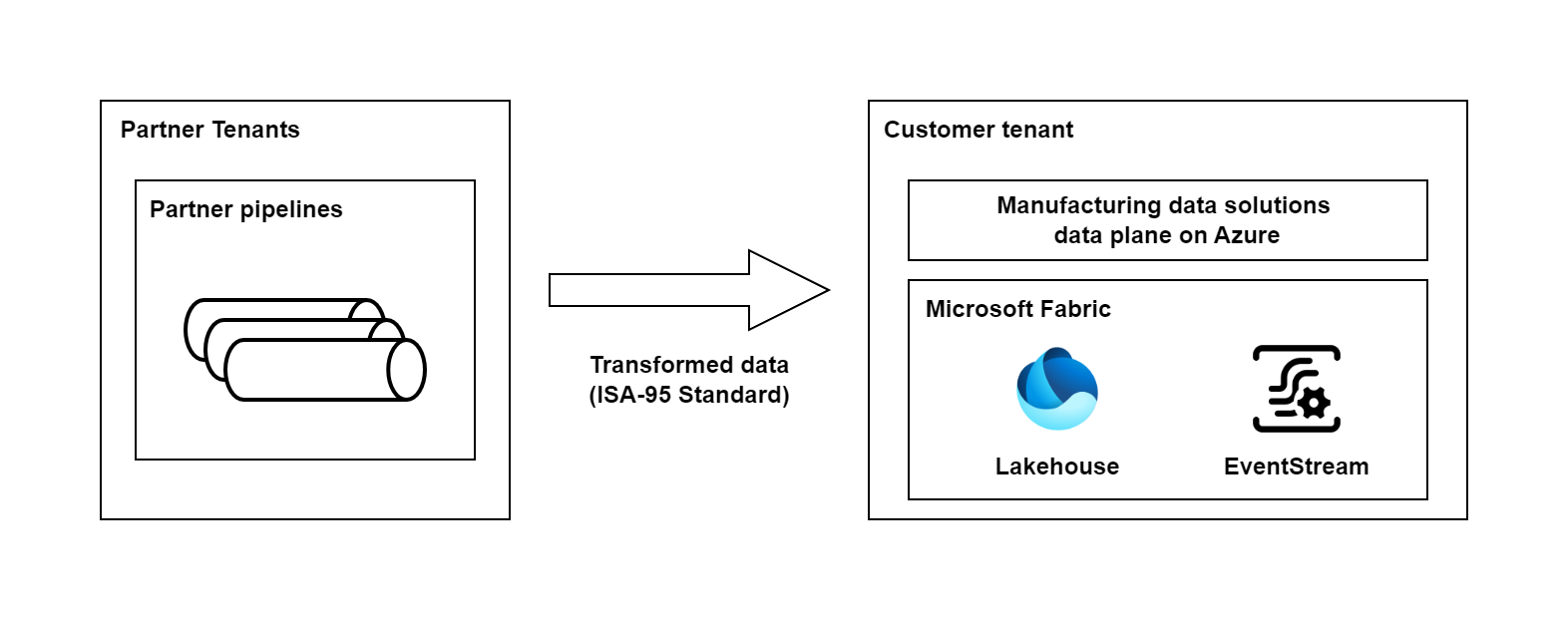

This diagram shows the ISV tenant, whose pipelines send normalized data to the customer tenant. The data is stored in the Lakehouse of Microsoft Fabric OneLake and is synchronized with the Manufacturing data solutions Data Plane on Azure. Customer data must be normalized during ingestion, and the normalization API is used for this. Normalization lets you instruct Manufacturing data solutions to map data from one form to another and store it in a standardized form for easier querying.
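The following sketch illustrates the general idea behind normalization in plain Python: source fields are mapped to standardized property names, and their values are converted along the way. It does not call the normalization API. The source field names, the `RULES` table, and `normalize_record` are hypothetical and are not part of the Manufacturing data solutions data model.

```python
from typing import Any, Callable

# Hypothetical mapping rules: source field -> (target property, converter).
# These names are illustrative only; they are NOT taken from the
# Manufacturing data solutions data model or its normalization API.
MappingRules = dict[str, tuple[str, Callable[[Any], Any]]]

RULES: MappingRules = {
    "EquipID": ("equipmentId", str),
    # Convert Fahrenheit to Celsius so all records share one unit.
    "Temp_F": ("temperatureCelsius", lambda f: round((float(f) - 32) * 5 / 9, 2)),
    "Site": ("siteName", lambda s: str(s).strip()),
}


def normalize_record(record: dict[str, Any], rules: MappingRules) -> dict[str, Any]:
    """Map one source record into a standardized shape.

    Fields without a rule are dropped, so every normalized record
    has the same predictable set of properties.
    """
    normalized: dict[str, Any] = {}
    for source_field, (target_field, convert) in rules.items():
        if source_field in record:
            normalized[target_field] = convert(record[source_field])
    return normalized


if __name__ == "__main__":
    raw = {"EquipID": 1042, "Temp_F": "212", "Site": "  Plant A ", "Extra": "x"}
    print(normalize_record(raw, RULES))
```

Keeping the mapping as data (a table of rules) rather than as code makes it easier to review and change without touching the conversion logic. This mirrors the idea of *instructing* the system how to map data, but the real API's request format may differ.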

Prerequisite knowledge

Functionality

Types of data ingestion supported

- Batch data

- Eventstream data

- OPC UA data and metadata

File format types supported

CSV - Each CSV file represents a single entity that was registered during Entity Registration. It has a header row that contains one or more columns from that entity. The file includes a set of mandatory and nonmandatory columns and can contain up to several tens of thousands of rows.

JSON - The JSON file is composed of a set of nested properties.

Parquet - Parquet is a columnar file format that provides optimizations to speed up queries. It is generally more efficient to store and query than row-oriented formats such as CSV, TXT, and JSON. Parquet is supported for ingestion alongside CSV and JSON. You can add Parquet files to a Fabric Lakehouse and ingest them using Manufacturing data solutions.
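You can pre-check a CSV file against the expectations described above before dropping it into the Lakehouse. The sketch below uses Python's standard `csv` module. It checks for a header row, confirms that every mandatory column is present, verifies that each row has as many fields as the header, and enforces a row limit. The column names and the `max_rows` value in the usage are hypothetical placeholders. The actual mandatory columns come from your registered entity, and the documentation does not state an exact row limit.

```python
import csv
import io
from typing import Iterable


def validate_entity_csv(
    text: str,
    mandatory_columns: Iterable[str],
    max_rows: int,
) -> list[str]:
    """Return a list of problems found in a CSV payload (empty if none).

    Hypothetical pre-ingestion check; not part of Manufacturing data solutions.
    """
    problems: list[str] = []
    reader = csv.reader(io.StringIO(text))
    header = next(reader, None)
    if not header:
        return ["missing header row"]

    # Every mandatory column of the registered entity must appear in the header.
    missing = [c for c in mandatory_columns if c not in header]
    if missing:
        problems.append(f"missing mandatory columns: {', '.join(missing)}")

    row_count = 0
    for row in reader:
        if not row:  # skip blank lines
            continue
        row_count += 1
        if len(row) != len(header):
            problems.append(
                f"row {row_count}: expected {len(header)} fields, got {len(row)}"
            )

    if row_count > max_rows:
        problems.append(f"{row_count} data rows exceeds limit of {max_rows}")
    return problems


if __name__ == "__main__":
    sample = "EquipmentId,Name,Description\nEQ-001,Mixer,\nEQ-002,Press\n"
    # 50_000 is an illustrative placeholder, not a documented limit.
    print(validate_entity_csv(sample, ["EquipmentId", "Name"], max_rows=50_000))
```

For the sample, the second data row is reported because it has two fields instead of three. Nonmandatory columns such as `Description` can be left empty as long as the delimiter is present.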

Data ingestion modes supported

There are two modes of data ingestion from ISV pipelines.

Batch data: Delivered as CSV files. During the batch ingestion flow, Manufacturing data solutions asynchronously pulls the CSV file from the Fabric Lakehouse, then processes and ingests it.

Stream data: Delivered as JSON message payloads. During the stream ingestion flow, Manufacturing data solutions asynchronously pulls the JSON messages from Fabric Eventstream, then processes and ingests them.
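To make "nested properties" concrete, the sketch below builds a stream message and lists each nested property by its dotted path. This can help when you check a payload against the entity properties you expect to map. The message structure and field names (`entityType`, `properties`, `status`) are hypothetical and do not represent the actual Manufacturing data solutions message schema. `flatten` is an illustrative helper that uses only the standard `json` module.

```python
import json
from typing import Any


def flatten(obj: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten nested JSON objects into dotted property paths.

    Lists and scalar values are kept as-is; only nested objects are expanded.
    """
    flat: dict[str, Any] = {}
    for key, value in obj.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


# Hypothetical stream message payload; field names are illustrative only.
message = json.dumps({
    "entityType": "Equipment",
    "properties": {
        "id": "EQ-001",
        "status": {"state": "Running", "since": "2024-01-01T08:00:00Z"},
    },
})

if __name__ == "__main__":
    for path, value in flatten(json.loads(message)).items():
        print(path, "=", value)
```

Running it prints one line per leaf property, such as `properties.status.state = Running`. This makes it easy to see which nested values a message actually carries.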

Data mapping, pipelines, and consumption

For details on mapping your data to the Manufacturing data solutions data model, see Manufacturing data solutions Mapping.

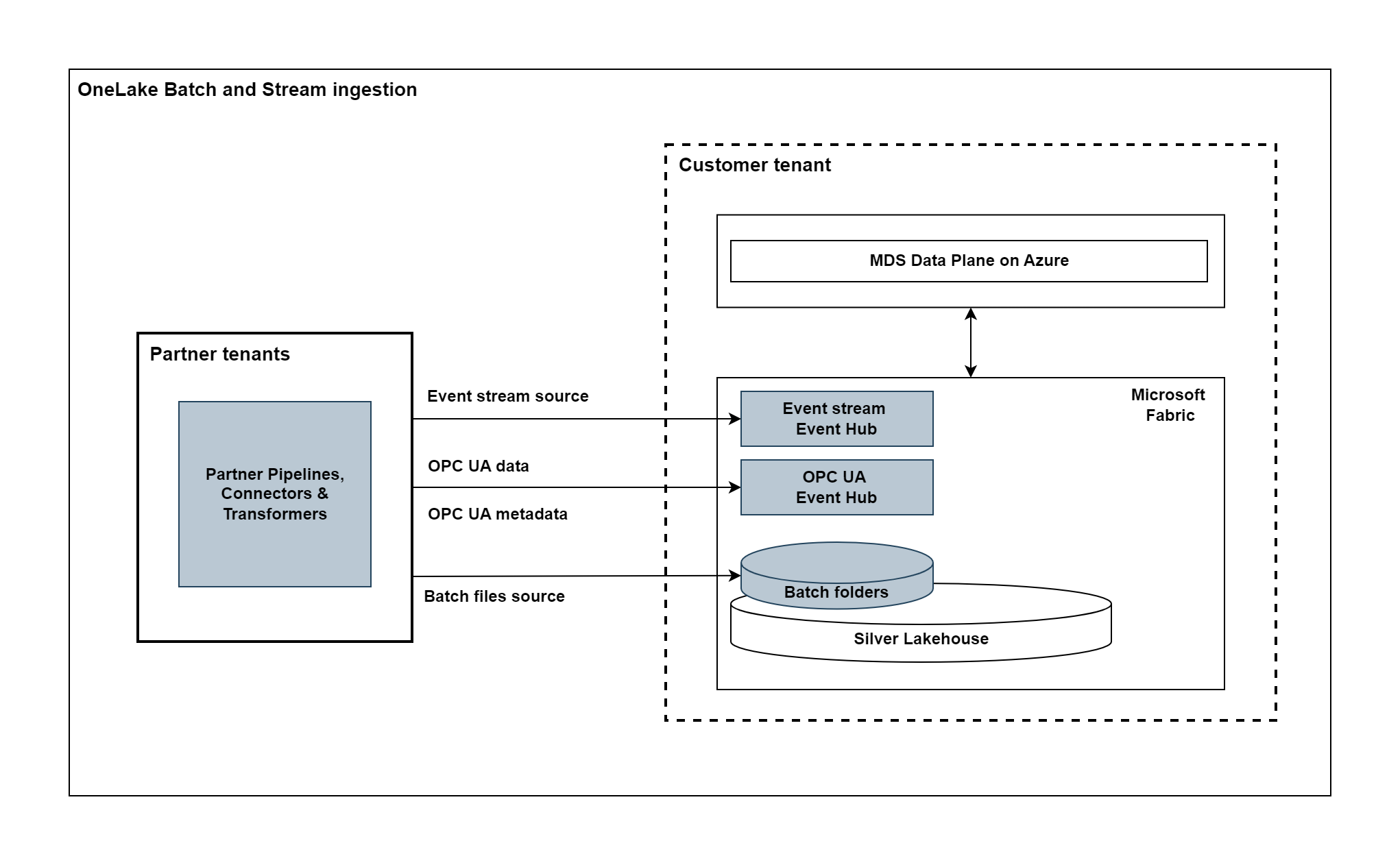

This diagram shows how partner pipelines send data to Microsoft Fabric through several channels, including Eventstream, OPC UA Stream, and Batch Files. This data is then used within the Manufacturing data solutions Data Plane on Azure.

After the data is ingested, you can call the API to retrieve the results you need.

For more information, see Use Consume Structured Queries.