使用系統表格監視作業成本和效能&

本文提供如何使用系統數據表來監視帳戶中作業的成本和效能的範例。

這些查詢只會計算在作業計算和無伺服器計算上執行的作業成本。 在 SQL 倉儲和所有用途計算上執行的作業不會計費為作業,因此會排除在成本屬性之外。

注意

這些查詢不會從您目前工作區雲端區域以外的工作區傳回記錄。 若要從您目前區域以外的工作區監視作業成本,請在部署於該區域的工作區中執行這些查詢。

要求

- 帳戶管理員必須啟用

system.lakeflow架構。請參閱 啟用系統數據表架構。 - 若要存取這些系統數據表,用戶必須:

- 同時為中繼存放區管理員和帳戶管理員,或

- 在系統架構中具有

USE和SELECT許可權。 請參閱 授與系統資料表的存取權。

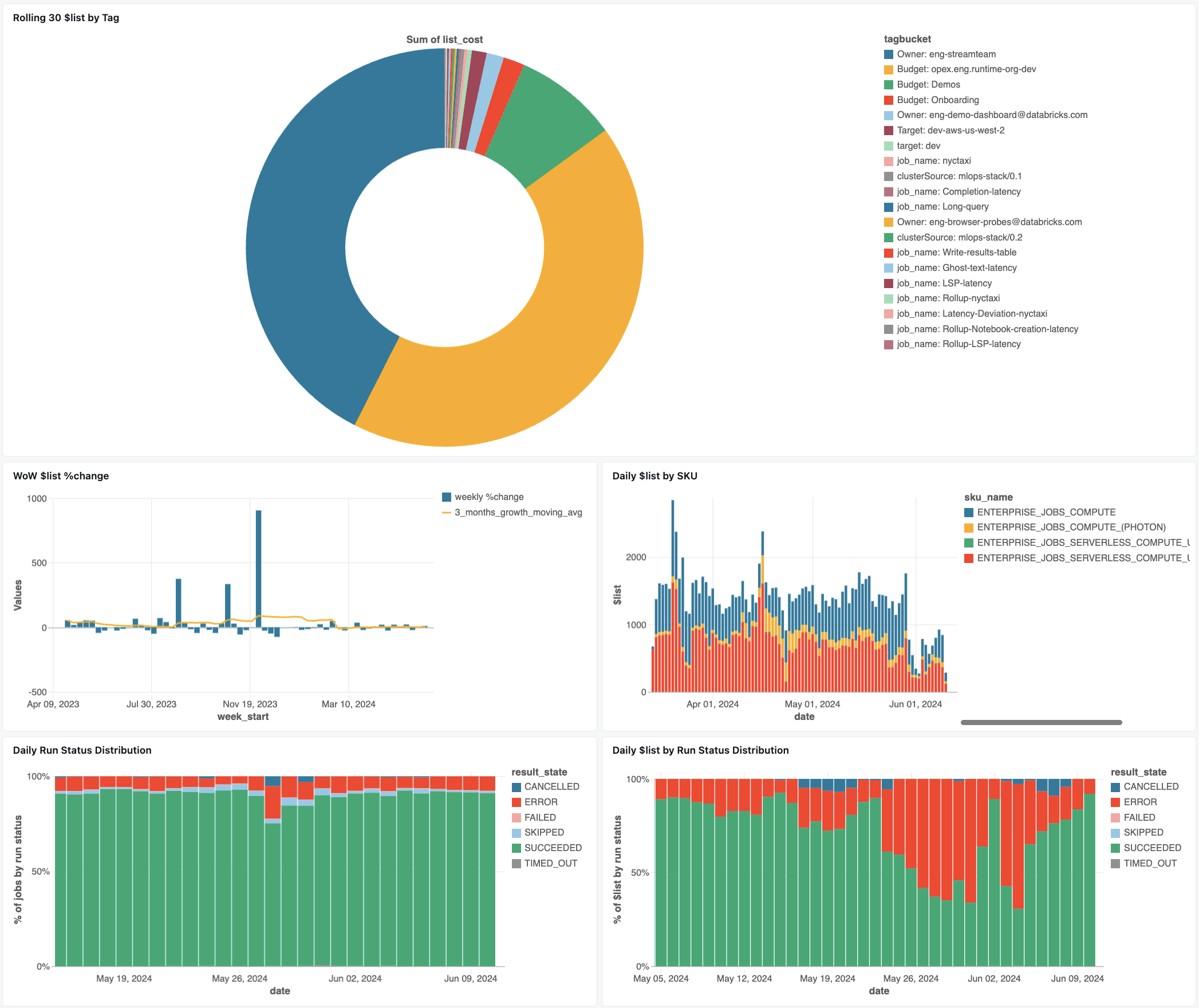

作業監視儀錶板

以下的儀錶板利用系統數據表,為您提供 Databricks 作業和運行狀況的全面監控。 其中包含常見的使用案例,例如作業效能追蹤、失敗監視和資源使用率。

匯入儀錶板

- 從 Databricks GitHub 存放庫下載儀表板 JSON 檔案。

- 將儀錶板匯入工作區。 如需匯入儀錶板的指示,請參閱 匯入儀錶板檔案。

成本可觀察性查詢

儀表板中的下列查詢展示作業成本監控的功能。

最昂貴的工作 (過去 30 天)

此查詢會識別過去 30 天內花費最高的作業。

with list_cost_per_job as (

SELECT

t1.workspace_id,

t1.usage_metadata.job_id,

COUNT(DISTINCT t1.usage_metadata.job_run_id) as runs,

SUM(t1.usage_quantity * list_prices.pricing.default) as list_cost,

first(identity_metadata.run_as, true) as run_as,

first(t1.custom_tags, true) as custom_tags,

MAX(t1.usage_end_time) as last_seen_date

FROM system.billing.usage t1

INNER JOIN system.billing.list_prices list_prices on

t1.cloud = list_prices.cloud and

t1.sku_name = list_prices.sku_name and

t1.usage_start_time >= list_prices.price_start_time and

(t1.usage_end_time <= list_prices.price_end_time or list_prices.price_end_time is null)

WHERE

t1.billing_origin_product = "JOBS"

AND t1.usage_date >= CURRENT_DATE() - INTERVAL 30 DAY

GROUP BY ALL

),

most_recent_jobs as (

SELECT

*,

ROW_NUMBER() OVER(PARTITION BY workspace_id, job_id ORDER BY change_time DESC) as rn

FROM

system.lakeflow.jobs QUALIFY rn=1

)

SELECT

t2.name,

t1.job_id,

t1.workspace_id,

t1.runs,

t1.run_as,

SUM(list_cost) as list_cost,

t1.last_seen_date

FROM list_cost_per_job t1

LEFT JOIN most_recent_jobs t2 USING (workspace_id, job_id)

GROUP BY ALL

ORDER BY list_cost DESC

最昂貴的作業執行 (過去 30 天)

此查詢會識別過去 30 天內花費最高的作業執行。

with list_cost_per_job_run as (

SELECT

t1.workspace_id,

t1.usage_metadata.job_id,

t1.usage_metadata.job_run_id as run_id,

SUM(t1.usage_quantity * list_prices.pricing.default) as list_cost,

first(identity_metadata.run_as, true) as run_as,

first(t1.custom_tags, true) as custom_tags,

MAX(t1.usage_end_time) as last_seen_date

FROM system.billing.usage t1

INNER JOIN system.billing.list_prices list_prices on

t1.cloud = list_prices.cloud and

t1.sku_name = list_prices.sku_name and

t1.usage_start_time >= list_prices.price_start_time and

(t1.usage_end_time <= list_prices.price_end_time or list_prices.price_end_time is null)

WHERE

t1.billing_origin_product = 'JOBS'

AND t1.usage_date >= CURRENT_DATE() - INTERVAL 30 DAY

GROUP BY ALL

),

most_recent_jobs as (

SELECT

*,

ROW_NUMBER() OVER(PARTITION BY workspace_id, job_id ORDER BY change_time DESC) as rn

FROM

system.lakeflow.jobs QUALIFY rn=1

)

SELECT

t1.workspace_id,

t2.name,

t1.job_id,

t1.run_id,

t1.run_as,

SUM(list_cost) as list_cost,

t1.last_seen_date

FROM list_cost_per_job_run t1

LEFT JOIN most_recent_jobs t2 USING (workspace_id, job_id)

GROUP BY ALL

ORDER BY list_cost DESC

消費趨勢分析 (7-14 天)

此查詢會識別過去 2 周清單成本支出增加最多的作業。

with job_run_timeline_with_cost as (

SELECT

t1.*,

t1.usage_metadata.job_id as job_id,

t1.identity_metadata.run_as as run_as,

t1.usage_quantity * list_prices.pricing.default AS list_cost

FROM system.billing.usage t1

INNER JOIN system.billing.list_prices list_prices

ON

t1.cloud = list_prices.cloud AND

t1.sku_name = list_prices.sku_name AND

t1.usage_start_time >= list_prices.price_start_time AND

(t1.usage_end_time <= list_prices.price_end_time or list_prices.price_end_time is NULL)

WHERE

t1.billing_origin_product = 'JOBS' AND

t1.usage_date >= CURRENT_DATE() - INTERVAL 14 DAY

),

most_recent_jobs as (

SELECT

*,

ROW_NUMBER() OVER(PARTITION BY workspace_id, job_id ORDER BY change_time DESC) as rn

FROM

system.lakeflow.jobs QUALIFY rn=1

)

SELECT

t2.name

,t1.workspace_id

,t1.job_id

,t1.sku_name

,t1.run_as

,Last7DaySpend

,Last14DaySpend

,last7DaySpend - last14DaySpend as Last7DayGrowth

,try_divide( (last7DaySpend - last14DaySpend) , last14DaySpend) * 100 AS Last7DayGrowthPct

FROM

(

SELECT

workspace_id,

job_id,

run_as,

sku_name,

SUM(list_cost) AS spend

,SUM(CASE WHEN usage_end_time BETWEEN date_add(current_date(), -8) AND date_add(current_date(), -1) THEN list_cost ELSE 0 END) AS Last7DaySpend

,SUM(CASE WHEN usage_end_time BETWEEN date_add(current_date(), -15) AND date_add(current_date(), -8) THEN list_cost ELSE 0 END) AS Last14DaySpend

FROM job_run_timeline_with_cost

GROUP BY ALL

) t1

LEFT JOIN most_recent_jobs t2 USING (workspace_id, job_id)

ORDER BY

Last7DayGrowth DESC

LIMIT 100

作業健康情況查詢

以下是此儀錶板可協助您追蹤作業效能和可靠性的一些方式。

失敗工作之分析

此查詢會傳回過去 30 天內執行大量失敗作業的相關信息。 您可以檢視執行次數、失敗次數、成功率,以及作業失敗執行的成本。

with job_run_timeline_with_cost as (

SELECT

t1.*,

t1.identity_metadata.run_as as run_as,

t2.job_id,

t2.run_id,

t2.result_state,

t1.usage_quantity * list_prices.pricing.default as list_cost

FROM system.billing.usage t1

INNER JOIN system.lakeflow.job_run_timeline t2

ON

t1.workspace_id=t2.workspace_id

AND t1.usage_metadata.job_id = t2.job_id

AND t1.usage_metadata.job_run_id = t2.run_id

AND t1.usage_start_time >= date_trunc("Hour", t2.period_start_time)

AND t1.usage_start_time < date_trunc("Hour", t2.period_end_time) + INTERVAL 1 HOUR

INNER JOIN system.billing.list_prices list_prices on

t1.cloud = list_prices.cloud and

t1.sku_name = list_prices.sku_name and

t1.usage_start_time >= list_prices.price_start_time and

(t1.usage_end_time <= list_prices.price_end_time or list_prices.price_end_time is null)

WHERE

t1.billing_origin_product = 'JOBS' AND

t1.usage_date >= CURRENT_DATE() - INTERVAL 30 DAYS

),

cumulative_run_status_cost as (

SELECT

workspace_id,

job_id,

run_id,

run_as,

result_state,

usage_end_time,

SUM(list_cost) OVER (ORDER BY workspace_id, job_id, run_id, usage_end_time ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS cumulative_cost

FROM job_run_timeline_with_cost

ORDER BY workspace_id, job_id, run_id, usage_end_time

),

cost_per_status as (

SELECT

workspace_id,

job_id,

run_id,

run_as,

result_state,

usage_end_time,

cumulative_cost - COALESCE(LAG(cumulative_cost) OVER (ORDER BY workspace_id, job_id, run_id, usage_end_time), 0) AS result_state_cost

FROM cumulative_run_status_cost

WHERE result_state IS NOT NULL

ORDER BY workspace_id, job_id, run_id, usage_end_time),

cost_per_status_agg as (

SELECT

workspace_id,

job_id,

FIRST(run_as, TRUE) as run_as,

SUM(result_state_cost) as list_cost

FROM cost_per_status

WHERE

result_state IN ('ERROR', 'FAILED', 'TIMED_OUT')

GROUP BY ALL

),

terminal_statues as (

SELECT

workspace_id,

job_id,

CASE WHEN result_state IN ('ERROR', 'FAILED', 'TIMED_OUT') THEN 1 ELSE 0 END as is_failure,

period_end_time as last_seen_date

FROM system.lakeflow.job_run_timeline

WHERE

result_state IS NOT NULL AND

period_end_time >= CURRENT_DATE() - INTERVAL 30 DAYS

),

most_recent_jobs as (

SELECT

*,

ROW_NUMBER() OVER(PARTITION BY workspace_id, job_id ORDER BY change_time DESC) as rn

FROM

system.lakeflow.jobs QUALIFY rn=1

)

SELECT

first(t2.name) as name,

t1.workspace_id,

t1.job_id,

COUNT(*) as runs,

t3.run_as,

SUM(is_failure) as failures,

(1 - COALESCE(try_divide(SUM(is_failure), COUNT(*)), 0)) * 100 as success_ratio,

first(t3.list_cost) as failure_list_cost,

MAX(t1.last_seen_date) as last_seen_date

FROM terminal_statues t1

LEFT JOIN most_recent_jobs t2 USING (workspace_id, job_id)

LEFT JOIN cost_per_status_agg t3 USING (workspace_id, job_id)

GROUP BY ALL

ORDER BY failures DESC

重試模式

此查詢會傳回過去 30 天內經常進行修復之作業的相關信息,包括修復次數、修復執行的成本,以及修復執行的累計持續時間。

with job_run_timeline_with_cost as (

SELECT

t1.*,

t2.job_id,

t2.run_id,

t1.identity_metadata.run_as as run_as,

t2.result_state,

t1.usage_quantity * list_prices.pricing.default as list_cost

FROM system.billing.usage t1

INNER JOIN system.lakeflow.job_run_timeline t2

ON

t1.workspace_id=t2.workspace_id

AND t1.usage_metadata.job_id = t2.job_id

AND t1.usage_metadata.job_run_id = t2.run_id

AND t1.usage_start_time >= date_trunc("Hour", t2.period_start_time)

AND t1.usage_start_time < date_trunc("Hour", t2.period_end_time) + INTERVAL 1 HOUR

INNER JOIN system.billing.list_prices list_prices on

t1.cloud = list_prices.cloud and

t1.sku_name = list_prices.sku_name and

t1.usage_start_time >= list_prices.price_start_time and

(t1.usage_end_time <= list_prices.price_end_time or list_prices.price_end_time is null)

WHERE

t1.billing_origin_product = 'JOBS' AND

t1.usage_date >= CURRENT_DATE() - INTERVAL 30 DAYS

),

cumulative_run_status_cost as (

SELECT

workspace_id,

job_id,

run_id,

run_as,

result_state,

usage_end_time,

SUM(list_cost) OVER (ORDER BY workspace_id, job_id, run_id, usage_end_time ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS cumulative_cost

FROM job_run_timeline_with_cost

ORDER BY workspace_id, job_id, run_id, usage_end_time

),

cost_per_status as (

SELECT

workspace_id,

job_id,

run_id,

run_as,

result_state,

usage_end_time,

cumulative_cost - COALESCE(LAG(cumulative_cost) OVER (ORDER BY workspace_id, job_id, run_id, usage_end_time), 0) AS result_state_cost

FROM cumulative_run_status_cost

WHERE result_state IS NOT NULL

ORDER BY workspace_id, job_id, run_id, usage_end_time),

cost_per_unsuccesful_status_agg as (

SELECT

workspace_id,

job_id,

run_id,

first(run_as, TRUE) as run_as,

SUM(result_state_cost) as list_cost

FROM cost_per_status

WHERE

result_state != "SUCCEEDED"

GROUP BY ALL

),

repaired_runs as (

SELECT

workspace_id, job_id, run_id, COUNT(*) as cnt

FROM system.lakeflow.job_run_timeline

WHERE result_state IS NOT NULL

GROUP BY ALL

HAVING cnt > 1

),

successful_repairs as (

SELECT t1.workspace_id, t1.job_id, t1.run_id, MAX(t1.period_end_time) as period_end_time

FROM system.lakeflow.job_run_timeline t1

JOIN repaired_runs t2

ON t1.workspace_id=t2.workspace_id AND t1.job_id=t2.job_id AND t1.run_id=t2.run_id

WHERE t1.result_state="SUCCEEDED"

GROUP BY ALL

),

combined_repairs as (

SELECT

t1.*,

t2.period_end_time,

t1.cnt as repairs

FROM repaired_runs t1

LEFT JOIN successful_repairs t2 USING (workspace_id, job_id, run_id)

),

most_recent_jobs as (

SELECT

*,

ROW_NUMBER() OVER(PARTITION BY workspace_id, job_id ORDER BY change_time DESC) as rn

FROM

system.lakeflow.jobs QUALIFY rn=1

)

SELECT

last(t3.name) as name,

t1.workspace_id,

t1.job_id,

t1.run_id,

first(t4.run_as, TRUE) as run_as,

first(t1.repairs) - 1 as repairs,

first(t4.list_cost) as repair_list_cost,

CASE WHEN t1.period_end_time IS NOT NULL THEN CAST(t1.period_end_time - MIN(t2.period_end_time) as LONG) ELSE NULL END AS repair_time_seconds

FROM combined_repairs t1

JOIN system.lakeflow.job_run_timeline t2 USING (workspace_id, job_id, run_id)

LEFT JOIN most_recent_jobs t3 USING (workspace_id, job_id)

LEFT JOIN cost_per_unsuccesful_status_agg t4 USING (workspace_id, job_id, run_id)

WHERE

t2.result_state IS NOT NULL

GROUP BY t1.workspace_id, t1.job_id, t1.run_id, t1.period_end_time

ORDER BY repairs DESC