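Query logs from Container insights

Container insights collects performance metrics, inventory data, and health state information from container hosts and containers. The data is collected every three minutes and forwarded to the Log Analytics workspace in Azure Monitor, where it's available for log queries using Log Analytics in Azure Monitor.

You can apply this data to scenarios that include migration planning, capacity analysis, discovery, and on-demand performance troubleshooting. Azure Monitor Logs can help you look for trends, diagnose bottlenecks, forecast, or correlate data so that you can determine whether the current cluster configuration is performing optimally.

For information on using these queries, see Using queries in Azure Monitor Log Analytics. For a complete tutorial on using Log Analytics to run queries and work with their results, see Log Analytics tutorial.

Important

The queries in this article depend on data collected by Container insights and stored in a Log Analytics workspace. If you've modified the default data collection settings, the queries might not return the results you expect. Most notably, if you've disabled collection of performance data because you've enabled Prometheus metrics for the cluster, any query that uses the Perf table won't return results.

See Configure data collection in Container insights using data collection rule for preset configurations, including disabling performance data collection. See Configure data collection in Container insights using ConfigMap for further data collection options.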
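A quick way to check whether the Perf-based queries in this article can return data is to count recent Kubernetes performance records. This is a minimal sketch; the one-hour window and the `Records` alias are illustrative choices, and the object names are the ones used by the queries later in this article.

```kusto
// Count recent container and node performance records.
// An empty result suggests performance data collection is disabled
// or no data has arrived in the selected window.
Perf
| where TimeGenerated > ago(1h)
| where ObjectName in ("K8SContainer", "K8SNode")
| summarize Records = count() by ObjectName
```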

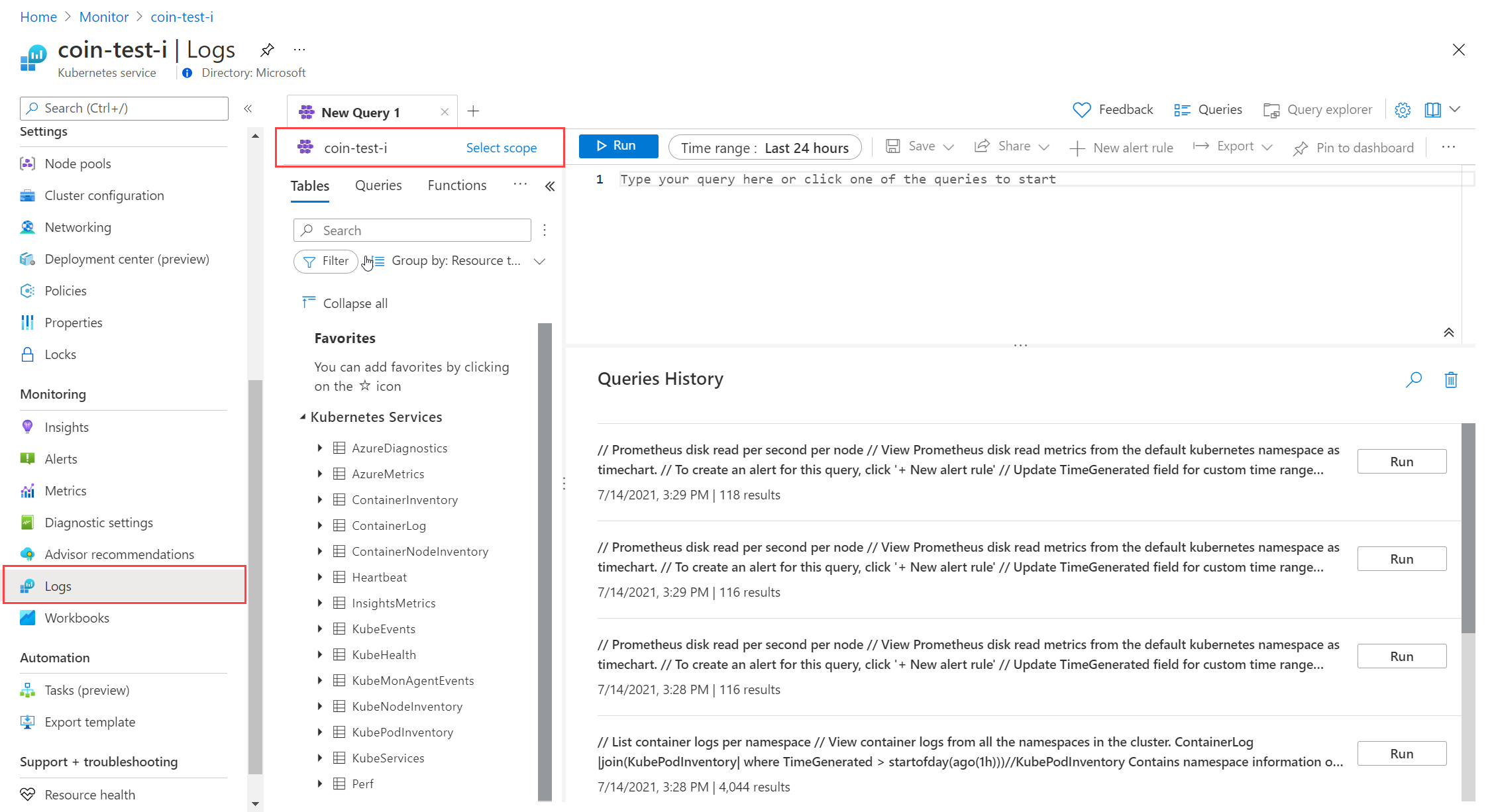

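Open Log Analytics

There are multiple options for starting Log Analytics. Each option starts with a different scope. For access to all data in the workspace, select Logs on the Monitoring menu. To limit the data to a single Kubernetes cluster, select Logs from that cluster's menu.

Existing log queries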

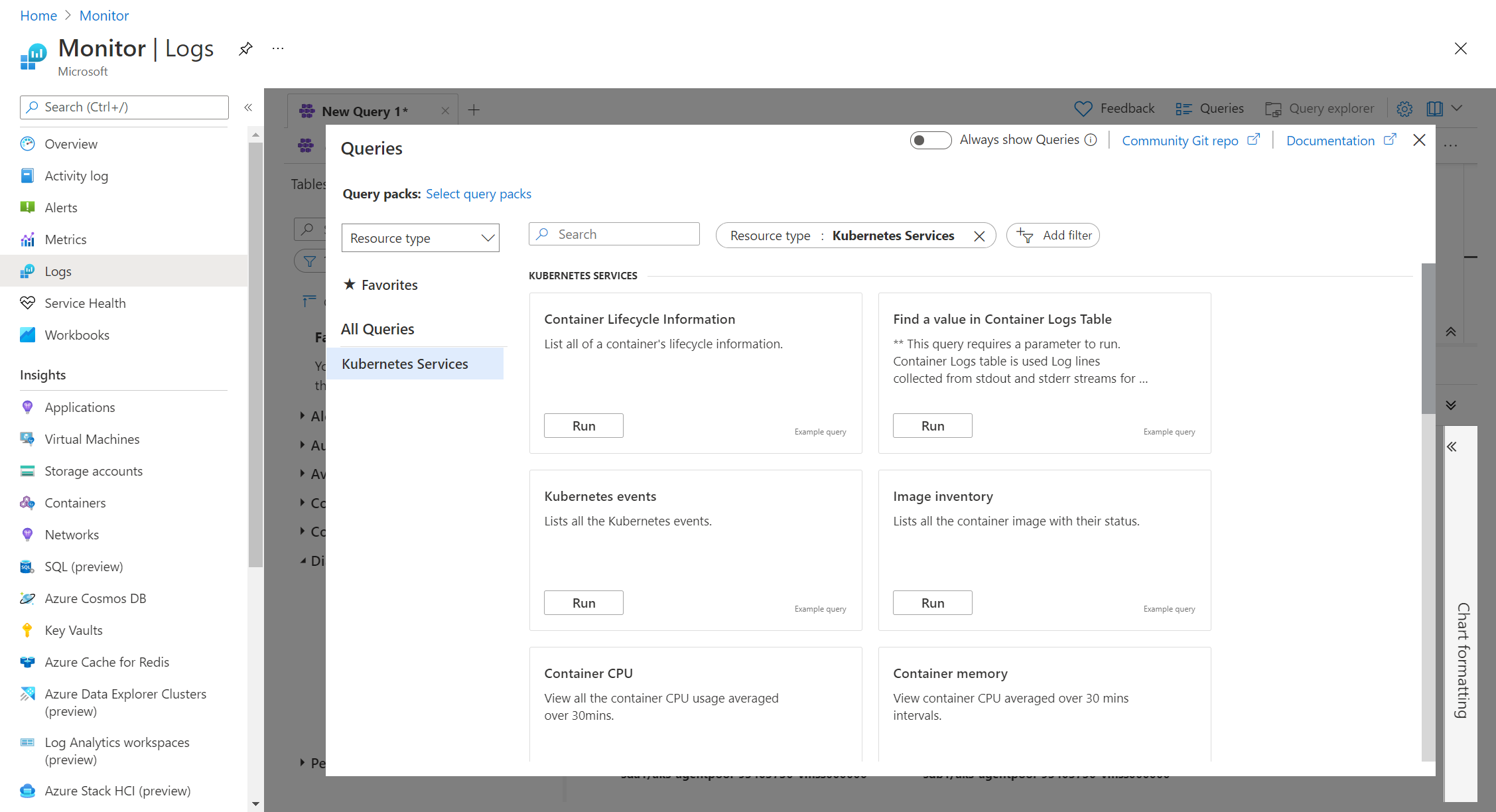

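You don't necessarily need to understand how to write a log query to use Log Analytics. You can select from multiple prebuilt queries. You can either run the queries without modification or use them as a starting point for a custom query. Select Queries at the top of the Log Analytics screen, and view queries with a resource type of Kubernetes Services.

Container tables

For a list of the tables used by Container insights and their detailed descriptions, see the Azure Monitor table reference. All these tables are available for log queries.

Example log queries

It's often useful to build queries that start with an example or two and then modify them to fit your requirements. To help build more advanced queries, you can experiment with the following sample queries.

List all of a container's lifecycle information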

ContainerInventory

| project Computer, Name, Image, ImageTag, ContainerState, CreatedTime, StartedTime, FinishedTime

| render table

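Kubernetes events

Note

By default, Normal event types aren't collected, so you won't see them when you query the KubeEvents table unless the collect_all_kube_events ConfigMap setting is enabled. If you need to collect Normal events, enable the collect_all_kube_events setting in the container-azm-ms-agentconfig ConfigMap. For information on how to configure the ConfigMap, see Configure agent data collection for Container insights.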

KubeEvents

| where not(isempty(Namespace))

| sort by TimeGenerated desc

| render table
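To see which event types are actually present in the workspace (for example, to confirm whether Normal events are being collected), the events can be grouped by type. This sketch assumes the KubeEvents table exposes the event type in a KubeEventType column; the one-hour window and the `EventCount` alias are illustrative.

```kusto
// Count recent Kubernetes events per event type.
// If Normal events aren't collected, only other types (such as Warning) appear.
KubeEvents
| where TimeGenerated > ago(1h)
| summarize EventCount = count() by KubeEventType
```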

Container CPU

Perf

| where ObjectName == "K8SContainer" and CounterName == "cpuUsageNanoCores"

| summarize AvgCPUUsageNanoCores = avg(CounterValue) by bin(TimeGenerated, 30m), InstanceName

Container memory

This query uses memoryRssBytes, which is only available for Linux nodes.

Perf

| where ObjectName == "K8SContainer" and CounterName == "memoryRssBytes"

| summarize AvgUsedRssMemoryBytes = avg(CounterValue) by bin(TimeGenerated, 30m), InstanceName
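Because memoryRssBytes is limited to Linux nodes, a working-set counter can be charted the same way where RSS isn't available. The following is a sketch that assumes the memoryWorkingSetBytes counter is being collected in your workspace; the `AvgWorkingSetBytes` alias is illustrative.

```kusto
// Same shape as the RSS query, using the working-set counter instead.
// Assumption: memoryWorkingSetBytes is collected for the K8SContainer object.
Perf
| where ObjectName == "K8SContainer" and CounterName == "memoryWorkingSetBytes"
| summarize AvgWorkingSetBytes = avg(CounterValue) by bin(TimeGenerated, 30m), InstanceName
```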

Requests per minute with custom metrics

InsightsMetrics

| where Name == "requests_count"

| summarize Val=any(Val) by TimeGenerated=bin(TimeGenerated, 1m)

| sort by TimeGenerated asc

| project RequestsPerMinute = Val - prev(Val), TimeGenerated

| render barchart
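The query above turns a cumulative counter into a per-minute rate by subtracting the previous row's value with prev(). The following self-contained sketch shows the same step on a small in-memory table; the timestamps and values are made up for illustration.

```kusto
// Hypothetical cumulative counter samples, one per minute.
datatable(TimeGenerated: datetime, Val: real)
[
    datetime(2024-01-01 00:00:00), 100.0,
    datetime(2024-01-01 00:01:00), 130.0,
    datetime(2024-01-01 00:02:00), 175.0
]
| sort by TimeGenerated asc          // prev() needs a serialized (ordered) row set
| extend RequestsPerMinute = Val - prev(Val)
// The first row has no previous row, so its RequestsPerMinute is null;
// the following rows yield 30 and 45.
```

Sorting before calling prev() matters: without a defined order, "previous row" has no meaning.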

Pods by name and namespace

let startTimestamp = ago(1h);

KubePodInventory

| where TimeGenerated > startTimestamp

| project ContainerID, PodName=Name, Namespace

| where PodName contains "name" and Namespace startswith "namespace"

| distinct ContainerID, PodName

| join

(

ContainerLog

| where TimeGenerated > startTimestamp

)

on ContainerID

// at this point before the next pipe, columns from both tables are available to be "projected". Due to both

// tables having a "Name" column, we assign an alias as PodName to one column which we actually want

| project TimeGenerated, PodName, LogEntry, LogEntrySource

| summarize by TimeGenerated, LogEntry

| order by TimeGenerated desc

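Pod scale-out (HPA)

This query returns the number of scaled-out replicas in each deployment. It calculates the scale-out percentage relative to the maximum number of replicas configured in the HPA.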

let _minthreshold = 70; // minimum threshold goes here if you want to setup as an alert

let _maxthreshold = 90; // maximum threshold goes here if you want to setup as an alert

let startDateTime = ago(60m);

KubePodInventory

| where TimeGenerated >= startDateTime

| where Namespace !in('default', 'kube-system') // List of non system namespace filter goes here.

| extend labels = todynamic(PodLabel)

| extend deployment_hpa = reverse(substring(reverse(ControllerName), indexof(reverse(ControllerName), "-") + 1))

| distinct tostring(deployment_hpa)

| join kind=inner (InsightsMetrics

| where TimeGenerated > startDateTime

| where Name == 'kube_hpa_status_current_replicas'

| extend pTags = todynamic(Tags) //parse the tags for values

| extend ns = todynamic(pTags.k8sNamespace) //parse namespace value from tags

| extend deployment_hpa = todynamic(pTags.targetName) //parse HPA target name from tags

| extend max_reps = todynamic(pTags.spec_max_replicas) // Parse maximum replica settings from HPA deployment

| extend desired_reps = todynamic(pTags.status_desired_replicas) // Parse desired replica settings from HPA deployment

| summarize arg_max(TimeGenerated, *) by tostring(ns), tostring(deployment_hpa), Cluster=toupper(tostring(split(_ResourceId, '/')[8])), toint(desired_reps), toint(max_reps), scale_out_percentage=(desired_reps * 100 / max_reps)

//| where scale_out_percentage > _minthreshold and scale_out_percentage <= _maxthreshold

)

on deployment_hpa
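The query above derives the deployment name from the controller name by removing everything from the last hyphen onward, which is where a ReplicaSet carries its generated suffix. The following self-contained sketch isolates that expression; the controller names are hypothetical.

```kusto
// Hypothetical ReplicaSet names of the form <deployment>-<suffix>.
datatable(ControllerName: string)
[
    "frontend-7d9c5b8f6d",
    "orders-api-5f6b7c9d8"
]
// Reverse the string, skip past the first "-" (the last one in the original),
// then reverse back to recover the deployment name.
| extend deployment_hpa = reverse(substring(reverse(ControllerName), indexof(reverse(ControllerName), "-") + 1))
// Yields "frontend" and "orders-api".
```

Only the final segment is removed, so deployment names that themselves contain hyphens are preserved.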

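Nodepool scale-outs

This query returns the number of active nodes in each node pool. It compares the number of active nodes with the maximum node count configured in the autoscaler settings to determine the scale-out percentage. See the commented lines in the query to use it for a number-of-results alert rule.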

let nodepoolMaxnodeCount = 10; // the maximum number of nodes in your auto scale setting goes here.

let _minthreshold = 20;

let _maxthreshold = 90;

let startDateTime = 60m;

KubeNodeInventory

| where TimeGenerated >= ago(startDateTime)

| extend nodepoolType = todynamic(Labels) //Parse the labels to get the list of node pool types

| extend nodepoolName = todynamic(nodepoolType[0].agentpool) // parse the label to get the nodepool name or set the specific nodepool name (like nodepoolName = 'agentpool')

| summarize nodeCount = count(Computer) by ClusterName, tostring(nodepoolName), TimeGenerated

//(Uncomment the below two lines to set this as a log search alert)

//| extend scaledpercent = iff(((nodeCount * 100 / nodepoolMaxnodeCount) >= _minthreshold and (nodeCount * 100 / nodepoolMaxnodeCount) < _maxthreshold), "warn", "normal")

//| where scaledpercent == 'warn'

| summarize arg_max(TimeGenerated, *) by nodeCount, ClusterName, tostring(nodepoolName)

| project ClusterName,

TotalNodeCount= strcat("Total Node Count: ", nodeCount),

ScaledOutPercentage = (nodeCount * 100 / nodepoolMaxnodeCount),

TimeGenerated,

nodepoolName
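The percentage and the commented-out alert condition in the query above reduce to simple arithmetic. The following self-contained sketch applies the same expressions to made-up node counts; the pool names and counts are hypothetical, and the thresholds match the values in the query.

```kusto
let nodepoolMaxnodeCount = 10;   // same maximum as in the query above
let _minthreshold = 20;
let _maxthreshold = 90;
// Hypothetical node counts per pool.
datatable(nodepoolName: string, nodeCount: long)
[
    "agentpool", 3,
    "userpool", 9
]
| extend ScaledOutPercentage = nodeCount * 100 / nodepoolMaxnodeCount
| extend scaledpercent = iff(ScaledOutPercentage >= _minthreshold and ScaledOutPercentage < _maxthreshold, "warn", "normal")
// agentpool: 30 percent, "warn"; userpool: 90 percent, "normal",
// because the upper bound is exclusive.
```

Note that a pool at or above the maximum threshold is reported as "normal" by this condition, so adjust the comparison if you want those pools flagged as well.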

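System containers (replicaset) availability

This query returns the system containers (replicasets) and reports the percentage that is unavailable. See the commented lines in the query to use it for a number-of-results alert rule.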

let startDateTime = 5m; // the minimum time interval goes here

let _minalertThreshold = 50; //Threshold for minimum and maximum unavailable or not running containers

let _maxalertThreshold = 70;

KubePodInventory

| where TimeGenerated >= ago(startDateTime)

| distinct ClusterName, TimeGenerated

| summarize Clustersnapshot = count() by ClusterName

| join kind=inner (

KubePodInventory

| where TimeGenerated >= ago(startDateTime)

| where Namespace in('default', 'kube-system') and ControllerKind == 'ReplicaSet' // the system namespace filter goes here

| distinct ClusterName, Computer, PodUid, TimeGenerated, PodStatus, ServiceName, PodLabel, Namespace, ContainerStatus

| summarize arg_max(TimeGenerated, *), TotalPODCount = count(), podCount = sumif(1, PodStatus == 'Running' or PodStatus != 'Running'), containerNotrunning = sumif(1, ContainerStatus != 'running')

by ClusterName, TimeGenerated, ServiceName, PodLabel, Namespace

)

on ClusterName

| project ClusterName, ServiceName, podCount, containerNotrunning, containerNotrunningPercent = (containerNotrunning * 100 / podCount), TimeGenerated, PodStatus, PodLabel, Namespace, Environment = tostring(split(ClusterName, '-')[3]), Location = tostring(split(ClusterName, '-')[4]), ContainerStatus

//Uncomment the below line to set for automated alert

//| where PodStatus == "Running" and containerNotrunningPercent > _minalertThreshold and containerNotrunningPercent < _maxalertThreshold

| summarize arg_max(TimeGenerated, *), c_entry=count() by PodLabel, ServiceName, ClusterName

//Below lines are to parse the labels to identify the impacted service/component name

| extend parseLabel = replace(@'k8s-app', @'k8sapp', PodLabel)

| extend parseLabel = replace(@'app.kubernetes.io\\/component', @'appkubernetesiocomponent', parseLabel)

| extend parseLabel = replace(@'app.kubernetes.io\\/instance', @'appkubernetesioinstance', parseLabel)

| extend tags = todynamic(parseLabel)

| extend tag01 = todynamic(tags[0].app)

| extend tag02 = todynamic(tags[0].k8sapp)

| extend tag03 = todynamic(tags[0].appkubernetesiocomponent)

| extend tag04 = todynamic(tags[0].aadpodidbinding)

| extend tag05 = todynamic(tags[0].appkubernetesioinstance)

| extend tag06 = todynamic(tags[0].component)

| project ClusterName, TimeGenerated,

ServiceName = strcat( ServiceName, tag01, tag02, tag03, tag04, tag05, tag06),

ContainerUnavailable = strcat("Unavailable Percentage: ", containerNotrunningPercent),

PodStatus = strcat("PodStatus: ", PodStatus),

ContainerStatus = strcat("Container Status: ", ContainerStatus)

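System containers (daemonsets) availability

This query returns the system containers (daemonsets) and reports the percentage that is unavailable. See the commented lines in the query to use it for a number-of-results alert rule.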

let startDateTime = 5m; // the minimum time interval goes here

let _minalertThreshold = 50; //Threshold for minimum and maximum unavailable or not running containers

let _maxalertThreshold = 70;

KubePodInventory

| where TimeGenerated >= ago(startDateTime)

| distinct ClusterName, TimeGenerated

| summarize Clustersnapshot = count() by ClusterName

| join kind=inner (

KubePodInventory

| where TimeGenerated >= ago(startDateTime)

| where Namespace in('default', 'kube-system') and ControllerKind == 'DaemonSet' // the system namespace filter goes here

| distinct ClusterName, Computer, PodUid, TimeGenerated, PodStatus, ServiceName, PodLabel, Namespace, ContainerStatus

| summarize arg_max(TimeGenerated, *), TotalPODCount = count(), podCount = sumif(1, PodStatus == 'Running' or PodStatus != 'Running'), containerNotrunning = sumif(1, ContainerStatus != 'running')

by ClusterName, TimeGenerated, ServiceName, PodLabel, Namespace

)

on ClusterName

| project ClusterName, ServiceName, podCount, containerNotrunning, containerNotrunningPercent = (containerNotrunning * 100 / podCount), TimeGenerated, PodStatus, PodLabel, Namespace, Environment = tostring(split(ClusterName, '-')[3]), Location = tostring(split(ClusterName, '-')[4]), ContainerStatus

//Uncomment the below line to set for automated alert

//| where PodStatus == "Running" and containerNotrunningPercent > _minalertThreshold and containerNotrunningPercent < _maxalertThreshold

| summarize arg_max(TimeGenerated, *), c_entry=count() by PodLabel, ServiceName, ClusterName

//Below lines are to parse the labels to identify the impacted service/component name

| extend parseLabel = replace(@'k8s-app', @'k8sapp', PodLabel)

| extend parseLabel = replace(@'app.kubernetes.io\\/component', @'appkubernetesiocomponent', parseLabel)

| extend parseLabel = replace(@'app.kubernetes.io\\/instance', @'appkubernetesioinstance', parseLabel)

| extend tags = todynamic(parseLabel)

| extend tag01 = todynamic(tags[0].app)

| extend tag02 = todynamic(tags[0].k8sapp)

| extend tag03 = todynamic(tags[0].appkubernetesiocomponent)

| extend tag04 = todynamic(tags[0].aadpodidbinding)

| extend tag05 = todynamic(tags[0].appkubernetesioinstance)

| extend tag06 = todynamic(tags[0].component)

| project ClusterName, TimeGenerated,

ServiceName = strcat( ServiceName, tag01, tag02, tag03, tag04, tag05, tag06),

ContainerUnavailable = strcat("Unavailable Percentage: ", containerNotrunningPercent),

PodStatus = strcat("PodStatus: ", PodStatus),

ContainerStatus = strcat("Container Status: ", ContainerStatus)

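Container logs

Container logs for AKS are stored in the ContainerLogV2 table. You can run the following sample queries to look for the stderr/stdout log output from target pods, deployments, or namespaces.

Container logs for a specific pod, namespace, and container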

ContainerLogV2

| where _ResourceId =~ "clusterResourceID" //update with resource ID

| where PodNamespace == "podNameSpace" //update with target namespace

| where PodName == "podName" //update with target pod

| where ContainerName == "containerName" //update with target container

| project TimeGenerated, Computer, ContainerId, LogMessage, LogSource

Container logs for a specific deployment

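let startTime = ago(1h); // assumed start of the time window; adjust as needed

let endTime = now(); // assumed end of the time window; adjust as needed

let KubePodInv = KubePodInventory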

| where _ResourceId =~ "clusterResourceID" //update with resource ID

| where Namespace == "deploymentNamespace" //update with target namespace

| where ControllerKind == "ReplicaSet"

| extend deployment = reverse(substring(reverse(ControllerName), indexof(reverse(ControllerName), "-") + 1))

| where deployment == "deploymentName" //update with target deployment

| extend ContainerId = ContainerID

| summarize arg_max(TimeGenerated, *) by deployment, ContainerId, PodStatus, ContainerStatus

| project deployment, ContainerId, PodStatus, ContainerStatus;

KubePodInv

| join

(

ContainerLogV2

| where TimeGenerated >= startTime and TimeGenerated < endTime

| where PodNamespace == "deploymentNamespace" //update with target namespace

| where PodName startswith "deploymentName" //update with target deployment

) on ContainerId

| project TimeGenerated, deployment, PodName, PodStatus, ContainerName, ContainerId, ContainerStatus, LogMessage, LogSource

Container logs for any failed pod in a specific namespace

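let startTime = ago(1h); // assumed start of the time window; adjust as needed

let endTime = now(); // assumed end of the time window; adjust as needed

let KubePodInv = KubePodInventory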

| where TimeGenerated >= startTime and TimeGenerated < endTime

| where _ResourceId =~ "clusterResourceID" //update with resource ID

| where Namespace == "podNamespace" //update with target namespace

| where PodStatus == "Failed"

| extend ContainerId = ContainerID

| summarize arg_max(TimeGenerated, *) by ContainerId, PodStatus, ContainerStatus

| project ContainerId, PodStatus, ContainerStatus;

KubePodInv

| join

(

ContainerLogV2

| where TimeGenerated >= startTime and TimeGenerated < endTime

| where PodNamespace == "podNamespace" //update with target namespace

) on ContainerId

| project TimeGenerated, PodName, PodStatus, ContainerName, ContainerId, ContainerStatus, LogMessage, LogSource

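Container insights default visualization queries

These queries are generated from the out-of-the-box visualizations in Container insights. If you've enabled custom cost optimization settings, you can use them in place of the default charts.

Node count by status

The required tables for this chart include KubeNodeInventory.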

let trendBinSize = 5m;

let maxListSize = 1000;

let clusterId = 'clusterResourceID'; //update with resource ID

let rawData = KubeNodeInventory

| where ClusterId =~ clusterId

| distinct ClusterId, TimeGenerated

| summarize ClusterSnapshotCount = count() by Timestamp = bin(TimeGenerated, trendBinSize), ClusterId

| join hint.strategy=broadcast ( KubeNodeInventory

| where ClusterId =~ clusterId

| summarize TotalCount = count(), ReadyCount = sumif(1, Status contains ('Ready')) by ClusterId, Timestamp = bin(TimeGenerated, trendBinSize)

| extend NotReadyCount = TotalCount - ReadyCount ) on ClusterId, Timestamp

| project ClusterId, Timestamp, TotalCount = todouble(TotalCount) / ClusterSnapshotCount, ReadyCount = todouble(ReadyCount) / ClusterSnapshotCount, NotReadyCount = todouble(NotReadyCount) / ClusterSnapshotCount;

rawData

| order by Timestamp asc

| summarize makelist(Timestamp, maxListSize), makelist(TotalCount, maxListSize), makelist(ReadyCount, maxListSize), makelist(NotReadyCount, maxListSize) by ClusterId

| join ( rawData

| summarize Avg_TotalCount = avg(TotalCount), Avg_ReadyCount = avg(ReadyCount), Avg_NotReadyCount = avg(NotReadyCount) by ClusterId ) on ClusterId

| project ClusterId, Avg_TotalCount, Avg_ReadyCount, Avg_NotReadyCount, list_Timestamp, list_TotalCount, list_ReadyCount, list_NotReadyCount

Pod count by status

The required tables for this chart include KubePodInventory.

let trendBinSize = 5m;

let maxListSize = 1000;

let clusterId = 'clusterResourceID'; //update with resource ID

let rawData = KubePodInventory

| where ClusterId =~ clusterId

| distinct ClusterId, TimeGenerated

| summarize ClusterSnapshotCount = count() by bin(TimeGenerated, trendBinSize), ClusterId

| join hint.strategy=broadcast ( KubePodInventory

| where ClusterId =~ clusterId

| summarize PodStatus=any(PodStatus) by TimeGenerated, PodUid, ClusterId

| summarize TotalCount = count(), PendingCount = sumif(1, PodStatus =~ 'Pending'), RunningCount = sumif(1, PodStatus =~ 'Running'), SucceededCount = sumif(1, PodStatus =~ 'Succeeded'), FailedCount = sumif(1, PodStatus =~ 'Failed'), TerminatingCount = sumif(1, PodStatus =~ 'Terminating') by ClusterId, bin(TimeGenerated, trendBinSize) ) on ClusterId, TimeGenerated

| extend UnknownCount = TotalCount - PendingCount - RunningCount - SucceededCount - FailedCount - TerminatingCount

| project ClusterId, Timestamp = TimeGenerated, TotalCount = todouble(TotalCount) / ClusterSnapshotCount, PendingCount = todouble(PendingCount) / ClusterSnapshotCount, RunningCount = todouble(RunningCount) / ClusterSnapshotCount, SucceededCount = todouble(SucceededCount) / ClusterSnapshotCount, FailedCount = todouble(FailedCount) / ClusterSnapshotCount, TerminatingCount = todouble(TerminatingCount) / ClusterSnapshotCount, UnknownCount = todouble(UnknownCount) / ClusterSnapshotCount;

let rawDataCached = rawData;

rawDataCached

| order by Timestamp asc

| summarize makelist(Timestamp, maxListSize), makelist(TotalCount, maxListSize), makelist(PendingCount, maxListSize), makelist(RunningCount, maxListSize), makelist(SucceededCount, maxListSize), makelist(FailedCount, maxListSize), makelist(TerminatingCount, maxListSize), makelist(UnknownCount, maxListSize) by ClusterId

| join ( rawDataCached

| summarize Avg_TotalCount = avg(TotalCount), Avg_PendingCount = avg(PendingCount), Avg_RunningCount = avg(RunningCount), Avg_SucceededCount = avg(SucceededCount), Avg_FailedCount = avg(FailedCount), Avg_TerminatingCount = avg(TerminatingCount), Avg_UnknownCount = avg(UnknownCount) by ClusterId ) on ClusterId

| project ClusterId, Avg_TotalCount, Avg_PendingCount, Avg_RunningCount, Avg_SucceededCount, Avg_FailedCount, Avg_TerminatingCount, Avg_UnknownCount, list_Timestamp, list_TotalCount, list_PendingCount, list_RunningCount, list_SucceededCount, list_FailedCount, list_TerminatingCount, list_UnknownCount

List of containers by status

The required tables for this chart include KubePodInventory and Perf.

let startDateTime = datetime('start time');

let endDateTime = datetime('end time');

let trendBinSize = 15m;

let maxResultCount = 10000;

let metricUsageCounterName = 'cpuUsageNanoCores';

let metricLimitCounterName = 'cpuLimitNanoCores';

let KubePodInventoryTable = KubePodInventory

| where TimeGenerated >= startDateTime

| where TimeGenerated < endDateTime

| where isnotempty(ClusterName)

| where isnotempty(Namespace)

| where isnotempty(Computer)

| project TimeGenerated, ClusterId, ClusterName, Namespace, ServiceName, ControllerName, Node = Computer, Pod = Name, ContainerInstance = ContainerName, ContainerID, ReadySinceNow = format_timespan(endDateTime - ContainerCreationTimeStamp , 'ddd.hh:mm:ss.fff'), Restarts = ContainerRestartCount, Status = ContainerStatus, ContainerStatusReason = columnifexists('ContainerStatusReason', ''), ControllerKind = ControllerKind, PodStatus;

let startRestart = KubePodInventoryTable

| summarize arg_min(TimeGenerated, *) by Node, ContainerInstance

| where ClusterId =~ 'clusterResourceID' //update with resource ID

| project Node, ContainerInstance, InstanceName = strcat(ClusterId, '/', ContainerInstance), StartRestart = Restarts;

let IdentityTable = KubePodInventoryTable

| summarize arg_max(TimeGenerated, *) by Node, ContainerInstance

| where ClusterId =~ 'clusterResourceID' //update with resource ID

| project ClusterName, Namespace, ServiceName, ControllerName, Node, Pod, ContainerInstance, InstanceName = strcat(ClusterId, '/', ContainerInstance), ContainerID, ReadySinceNow, Restarts, Status = iff(Status =~ 'running', 0, iff(Status=~'waiting', 1, iff(Status =~'terminated', 2, 3))), ContainerStatusReason, ControllerKind, Containers = 1, ContainerName = tostring(split(ContainerInstance, '/')[1]), PodStatus, LastPodInventoryTimeGenerated = TimeGenerated, ClusterId;

let CachedIdentityTable = IdentityTable;

let FilteredPerfTable = Perf

| where TimeGenerated >= startDateTime

| where TimeGenerated < endDateTime

| where ObjectName == 'K8SContainer'

| where InstanceName startswith 'clusterResourceID'

| project Node = Computer, TimeGenerated, CounterName, CounterValue, InstanceName ;

let CachedFilteredPerfTable = FilteredPerfTable;

let LimitsTable = CachedFilteredPerfTable

| where CounterName =~ metricLimitCounterName

| summarize arg_max(TimeGenerated, *) by Node, InstanceName

| project Node, InstanceName, LimitsValue = iff(CounterName =~ 'cpuLimitNanoCores', CounterValue/1000000, CounterValue), TimeGenerated;

let MetaDataTable = CachedIdentityTable

| join kind=leftouter ( LimitsTable ) on Node, InstanceName

| join kind= leftouter ( startRestart ) on Node, InstanceName

| project ClusterName, Namespace, ServiceName, ControllerName, Node, Pod, InstanceName, ContainerID, ReadySinceNow, Restarts, LimitsValue, Status, ContainerStatusReason = columnifexists('ContainerStatusReason', ''), ControllerKind, Containers, ContainerName, ContainerInstance, StartRestart, PodStatus, LastPodInventoryTimeGenerated, ClusterId;

let UsagePerfTable = CachedFilteredPerfTable

| where CounterName =~ metricUsageCounterName

| project TimeGenerated, Node, InstanceName, CounterValue = iff(CounterName =~ 'cpuUsageNanoCores', CounterValue/1000000, CounterValue);

let LastRestartPerfTable = CachedFilteredPerfTable

| where CounterName =~ 'restartTimeEpoch'

| summarize arg_max(TimeGenerated, *) by Node, InstanceName

| project Node, InstanceName, UpTime = CounterValue, LastReported = TimeGenerated;

let AggregationTable = UsagePerfTable

| summarize Aggregation = max(CounterValue) by Node, InstanceName

| project Node, InstanceName, Aggregation;

let TrendTable = UsagePerfTable

| summarize TrendAggregation = max(CounterValue) by bin(TimeGenerated, trendBinSize), Node, InstanceName

| project TrendTimeGenerated = TimeGenerated, Node, InstanceName , TrendAggregation

| summarize TrendList = makelist(pack("timestamp", TrendTimeGenerated, "value", TrendAggregation)) by Node, InstanceName;

let containerFinalTable = MetaDataTable

| join kind= leftouter( AggregationTable ) on Node, InstanceName

| join kind = leftouter (LastRestartPerfTable) on Node, InstanceName

| order by Aggregation desc, ContainerName

| join kind = leftouter ( TrendTable) on Node, InstanceName

| extend ContainerIdentity = strcat(ContainerName, ' ', Pod)

| project ContainerIdentity, Status, ContainerStatusReason = columnifexists('ContainerStatusReason', ''), Aggregation, Node, Restarts, ReadySinceNow, TrendList = iif(isempty(TrendList), parse_json('[]'), TrendList), LimitsValue, ControllerName, ControllerKind, ContainerID, Containers, UpTimeNow = datetime_diff('Millisecond', endDateTime, datetime_add('second', toint(UpTime), make_datetime(1970,1,1))), ContainerInstance, StartRestart, LastReportedDelta = datetime_diff('Millisecond', endDateTime, LastReported), PodStatus, InstanceName, Namespace, LastPodInventoryTimeGenerated, ClusterId;

containerFinalTable

| limit 200

List of controllers by status

The required tables for this chart include KubePodInventory and Perf.

let startDateTime = datetime('start time');

let endDateTime = datetime('end time');

let trendBinSize = 15m;

let metricLimitCounterName = 'cpuLimitNanoCores';

let metricUsageCounterName = 'cpuUsageNanoCores';

let primaryInventory = KubePodInventory

| where TimeGenerated >= startDateTime

| where TimeGenerated < endDateTime

| where isnotempty(ClusterName)

| where isnotempty(Namespace)

| extend Node = Computer

| where ClusterId =~ 'clusterResourceID' //update with resource ID

| project TimeGenerated, ClusterId, ClusterName, Namespace, ServiceName, Node = Computer, ControllerName, Pod = Name, ContainerInstance = ContainerName, ContainerID, InstanceName, PerfJoinKey = strcat(ClusterId, '/', ContainerName), ReadySinceNow = format_timespan(endDateTime - ContainerCreationTimeStamp, 'ddd.hh:mm:ss.fff'), Restarts = ContainerRestartCount, Status = ContainerStatus, ContainerStatusReason = columnifexists('ContainerStatusReason', ''), ControllerKind = ControllerKind, PodStatus, ControllerId = strcat(ClusterId, '/', Namespace, '/', ControllerName);

let podStatusRollup = primaryInventory

| summarize arg_max(TimeGenerated, *) by Pod

| project ControllerId, PodStatus, TimeGenerated

| summarize count() by ControllerId, PodStatus = iif(TimeGenerated < ago(30m), 'Unknown', PodStatus)

| summarize PodStatusList = makelist(pack('Status', PodStatus, 'Count', count_)) by ControllerId;

let latestContainersByController = primaryInventory

| where isnotempty(Node)

| summarize arg_max(TimeGenerated, *) by PerfJoinKey

| project ControllerId, PerfJoinKey;

let filteredPerformance = Perf

| where TimeGenerated >= startDateTime

| where TimeGenerated < endDateTime

| where ObjectName == 'K8SContainer'

| where InstanceName startswith 'clusterResourceID' //update with resource ID

| project TimeGenerated, CounterName, CounterValue, InstanceName, Node = Computer ;

let metricByController = filteredPerformance

| where CounterName =~ metricUsageCounterName

| extend PerfJoinKey = InstanceName

| summarize Value = percentile(CounterValue, 95) by PerfJoinKey, CounterName

| join (latestContainersByController) on PerfJoinKey

| summarize Value = sum(Value) by ControllerId, CounterName

| project ControllerId, CounterName, AggregationValue = iff(CounterName =~ 'cpuUsageNanoCores', Value/1000000, Value);

let containerCountByController = latestContainersByController

| summarize ContainerCount = count() by ControllerId;

let restartCountsByController = primaryInventory

| summarize Restarts = max(Restarts) by ControllerId;

let oldestRestart = primaryInventory

| summarize ReadySinceNow = min(ReadySinceNow) by ControllerId;

let trendLineByController = filteredPerformance

| where CounterName =~ metricUsageCounterName

| extend PerfJoinKey = InstanceName

| summarize Value = percentile(CounterValue, 95) by bin(TimeGenerated, trendBinSize), PerfJoinKey, CounterName

| order by TimeGenerated asc

| join kind=leftouter (latestContainersByController) on PerfJoinKey

| summarize Value=sum(Value) by ControllerId, TimeGenerated, CounterName

| project TimeGenerated, Value = iff(CounterName =~ 'cpuUsageNanoCores', Value/1000000, Value), ControllerId

| summarize TrendList = makelist(pack("timestamp", TimeGenerated, "value", Value)) by ControllerId;

let latestLimit = filteredPerformance

| where CounterName =~ metricLimitCounterName

| extend PerfJoinKey = InstanceName

| summarize arg_max(TimeGenerated, *) by PerfJoinKey

| join kind=leftouter (latestContainersByController) on PerfJoinKey

| summarize Value = sum(CounterValue) by ControllerId, CounterName

| project ControllerId, LimitValue = iff(CounterName =~ 'cpuLimitNanoCores', Value/1000000, Value);

let latestTimeGeneratedByController = primaryInventory

| summarize arg_max(TimeGenerated, *) by ControllerId

| project ControllerId, LastTimeGenerated = TimeGenerated;

primaryInventory

| distinct ControllerId, ControllerName, ControllerKind, Namespace

| join kind=leftouter (podStatusRollup) on ControllerId

| join kind=leftouter (metricByController) on ControllerId

| join kind=leftouter (containerCountByController) on ControllerId

| join kind=leftouter (restartCountsByController) on ControllerId

| join kind=leftouter (oldestRestart) on ControllerId

| join kind=leftouter (trendLineByController) on ControllerId

| join kind=leftouter (latestLimit) on ControllerId

| join kind=leftouter (latestTimeGeneratedByController) on ControllerId

| project ControllerId, ControllerName, ControllerKind, PodStatusList, AggregationValue, ContainerCount = iif(isempty(ContainerCount), 0, ContainerCount), Restarts, ReadySinceNow, Node = '-', TrendList, LimitValue, LastTimeGenerated, Namespace

| limit 250;

List of nodes by status

The required tables for this chart include KubeNodeInventory, KubePodInventory, and Perf.

let startDateTime = datetime('start time');

let endDateTime = datetime('end time');

let binSize = 15m;

let limitMetricName = 'cpuCapacityNanoCores';

let usedMetricName = 'cpuUsageNanoCores';

let materializedNodeInventory = KubeNodeInventory

| where TimeGenerated < endDateTime

| where TimeGenerated >= startDateTime

| project ClusterName, ClusterId, Node = Computer, TimeGenerated, Status, NodeName = Computer, NodeId = strcat(ClusterId, '/', Computer), Labels

| where ClusterId =~ 'clusterResourceID'; //update with resource ID

let materializedPerf = Perf

| where TimeGenerated < endDateTime

| where TimeGenerated >= startDateTime

| where ObjectName == 'K8SNode'

| extend NodeId = InstanceName;

let materializedPodInventory = KubePodInventory

| where TimeGenerated < endDateTime

| where TimeGenerated >= startDateTime

| where isnotempty(ClusterName)

| where isnotempty(Namespace)

| where ClusterId =~ 'clusterResourceID'; //update with resource ID

let inventoryOfCluster = materializedNodeInventory

| summarize arg_max(TimeGenerated, Status) by ClusterName, ClusterId, NodeName, NodeId;

let labelsByNode = materializedNodeInventory

| summarize arg_max(TimeGenerated, Labels) by ClusterName, ClusterId, NodeName, NodeId;

let countainerCountByNode = materializedPodInventory

| project ContainerName, NodeId = strcat(ClusterId, '/', Computer)

| distinct NodeId, ContainerName

| summarize ContainerCount = count() by NodeId;

let latestUptime = materializedPerf

| where CounterName == 'restartTimeEpoch'

| summarize arg_max(TimeGenerated, CounterValue) by NodeId

| extend UpTimeMs = datetime_diff('Millisecond', endDateTime, datetime_add('second', toint(CounterValue), make_datetime(1970,1,1)))

| project NodeId, UpTimeMs;

let latestLimitOfNodes = materializedPerf

| where CounterName == limitMetricName

| summarize CounterValue = max(CounterValue) by NodeId

| project NodeId, LimitValue = CounterValue;

let actualUsageAggregated = materializedPerf

| where CounterName == usedMetricName

| summarize Aggregation = percentile(CounterValue, 95) by NodeId //This line updates to the desired aggregation

| project NodeId, Aggregation;

let aggregateTrendsOverTime = materializedPerf

| where CounterName == usedMetricName

| summarize TrendAggregation = percentile(CounterValue, 95) by NodeId, bin(TimeGenerated, binSize) //This line updates to the desired aggregation

| project NodeId, TrendAggregation, TrendDateTime = TimeGenerated;

let unscheduledPods = materializedPodInventory

| where isempty(Computer)

| extend Node = Computer

| where isempty(ContainerStatus)

| where PodStatus == 'Pending'

| order by TimeGenerated desc

| take 1

| project ClusterName, NodeName = 'unscheduled', LastReceivedDateTime = TimeGenerated, Status = 'unscheduled', ContainerCount = 0, UpTimeMs = '0', Aggregation = '0', LimitValue = '0', ClusterId;

let scheduledPods = inventoryOfCluster

| join kind=leftouter (aggregateTrendsOverTime) on NodeId

| extend TrendPoint = pack("TrendTime", TrendDateTime, "TrendAggregation", TrendAggregation)

| summarize make_list(TrendPoint) by NodeId, NodeName, Status

| join kind=leftouter (labelsByNode) on NodeId

| join kind=leftouter (countainerCountByNode) on NodeId

| join kind=leftouter (latestUptime) on NodeId

| join kind=leftouter (latestLimitOfNodes) on NodeId

| join kind=leftouter (actualUsageAggregated) on NodeId

| project ClusterName, NodeName, ClusterId, list_TrendPoint, LastReceivedDateTime = TimeGenerated, Status, ContainerCount, UpTimeMs, Aggregation, LimitValue, Labels

| limit 250;

union (scheduledPods), (unscheduledPods)

| project ClusterName, NodeName, LastReceivedDateTime, Status, ContainerCount, UpTimeMs = UpTimeMs_long, Aggregation = Aggregation_real, LimitValue = LimitValue_real, list_TrendPoint, Labels, ClusterId

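Prometheus metrics

The following examples require the configuration described in Send Prometheus metrics to Log Analytics workspace with Container insights.

To view Prometheus metrics scraped by Azure Monitor and filtered by namespace, specify "prometheus". Here's a sample query to view Prometheus metrics from the default Kubernetes namespace.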

InsightsMetrics

| where Namespace contains "prometheus"

| extend tags=parse_json(Tags)

| summarize count() by Name

Prometheus data can also be queried directly by name.

InsightsMetrics

| where Namespace contains "prometheus"

| where Name contains "some_prometheus_metric"

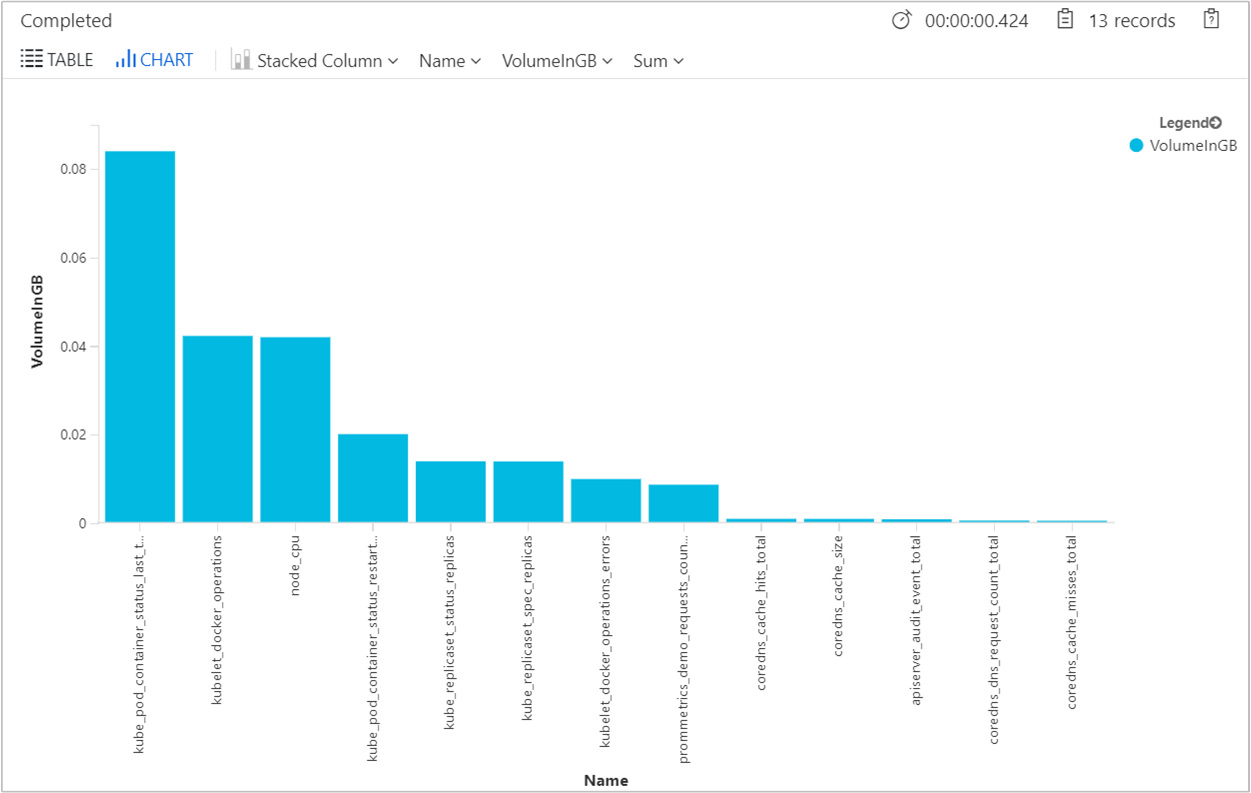

To identify the daily ingestion volume of each metric in GB, and to understand whether it's high, use the following query.

InsightsMetrics

| where Namespace contains "prometheus"

| where TimeGenerated > ago(24h)

| summarize VolumeInGB = (sum(_BilledSize) / (1024 * 1024 * 1024)) by Name

| order by VolumeInGB desc

| render barchart

The output shows results similar to the following example.

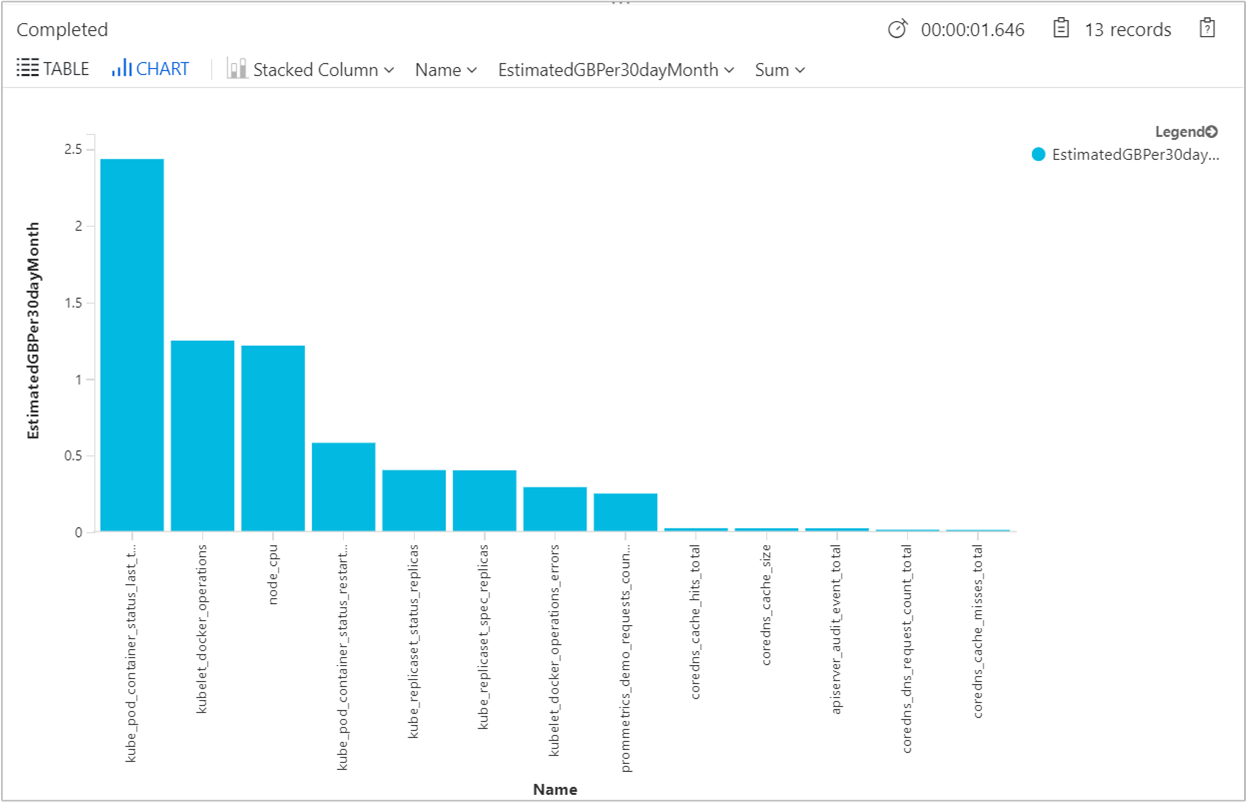

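To estimate the monthly size of each metric in GB, and to understand whether the volume of data ingested into the workspace is high, use the following query.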

InsightsMetrics

| where Namespace contains "prometheus"

| where TimeGenerated > ago(24h)

| summarize EstimatedGBPer30dayMonth = (sum(_BilledSize) / (1024 * 1024 * 1024)) * 30 by Name

| order by EstimatedGBPer30dayMonth desc

| render barchart

The output shows results similar to the following example.

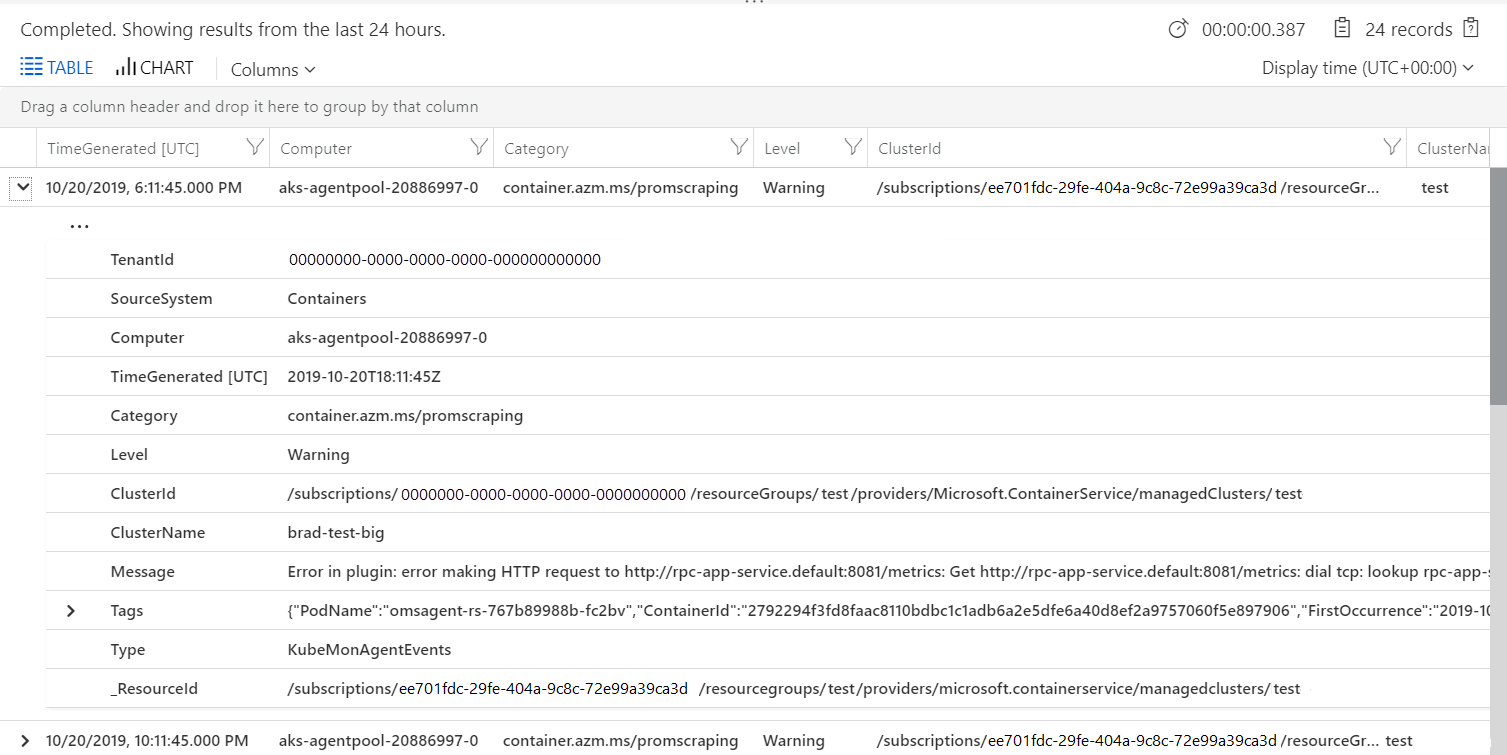
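Both volume queries convert billed bytes to GB by dividing by 1024 * 1024 * 1024, and the monthly estimate multiplies one day's volume by 30. The following self-contained sketch works through that arithmetic with a made-up byte count; `BilledBytesLast24h` is a hypothetical stand-in for sum(_BilledSize).

```kusto
// Hypothetical total of _BilledSize over the last 24 hours (2 GB expressed in bytes).
print BilledBytesLast24h = 2147483648.0
| extend VolumeInGB = BilledBytesLast24h / (1024 * 1024 * 1024)
| extend EstimatedGBPer30dayMonth = VolumeInGB * 30
// VolumeInGB is 2 and EstimatedGBPer30dayMonth is 60.
```

The monthly figure is an extrapolation from a single day, so it's only as representative as the 24-hour window it's based on.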

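Configuration or scraping errors

To investigate any configuration or scraping errors, the following example query returns all events from the KubeMonAgentEvents table other than informational ones.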

KubeMonAgentEvents | where Level != "Info"

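The output shows results similar to the following example:

Frequently asked questions

This section provides answers to common questions.

Can I view metrics collected in Grafana?

Container insights supports viewing metrics stored in your Log Analytics workspace in Grafana dashboards. We've provided a template that you can download from Grafana's dashboard repository. Use the template to get started and as a reference to help you learn how to query data from your monitored clusters and visualize it in custom Grafana dashboards.

Why are log lines larger than 16 KB split into multiple records in Log Analytics?

The agent uses the Docker JSON file logging driver to capture the stdout and stderr of containers. This logging driver splits log lines larger than 16 KB into multiple lines when they're copied from stdout or stderr to a file. Use multi-line logging to get a log record size of up to 64 KB.

Next steps

Container insights doesn't include a predefined set of alerts. To learn how to create recommended alerts for high CPU and memory utilization to support your DevOps or operational processes and procedures, see Create performance alerts with Container insights.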