Azure OpenAI 評估版 (預覽版)

大型語言模型的評估是測量不同工作和維度效能的重要步驟。 對於微調的模型來說,這特別重要,其中評估訓練的效能收益(或損失)非常重要。 徹底的評估可協助您瞭解不同版本的模型如何影響您的應用程式或案例。

Azure OpenAI 評估可讓開發人員建立評估回合,以針對預期的輸入/輸出組進行測試,評估模型在關鍵計量上的效能,例如精確度、可靠性和整體效能。

評估支援

區域可用性

- 美國東部 2

- 美國中北部

- 瑞典中部

- 瑞士西部

支援的部署類型

- 標準

- 已佈建

評估管線

測試資料

您需要組合您想要測試的基礎真相數據集。 數據集建立通常是反覆程式,可確保評估一段時間后仍與您的案例相關。 這個基礎事實數據集通常是手工製作的,並代表您模型的預期行為。 數據集也會加上標籤,並包含預期的答案。

注意

某些評估測試,例如 情感 和 有效的 JSON 或 XML ,不需要一般事實數據。

您的數據源必須是 JSONL 格式。 以下是 JSONL 評估數據集的兩個範例:

評估格式

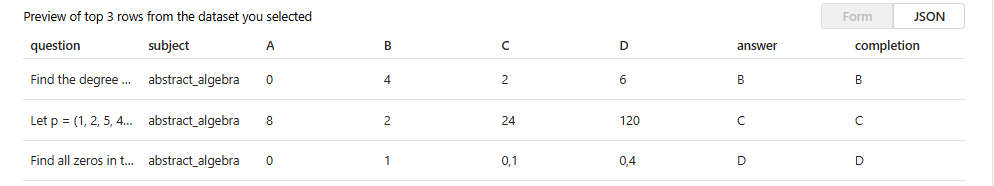

{"question": "Find the degree for the given field extension Q(sqrt(2), sqrt(3), sqrt(18)) over Q.", "subject": "abstract_algebra", "A": "0", "B": "4", "C": "2", "D": "6", "answer": "B", "completion": "B"}

{"question": "Let p = (1, 2, 5, 4)(2, 3) in S_5 . Find the index of <p> in S_5.", "subject": "abstract_algebra", "A": "8", "B": "2", "C": "24", "D": "120", "answer": "C", "completion": "C"}

{"question": "Find all zeros in the indicated finite field of the given polynomial with coefficients in that field. x^5 + 3x^3 + x^2 + 2x in Z_5", "subject": "abstract_algebra", "A": "0", "B": "1", "C": "0,1", "D": "0,4", "answer": "D", "completion": "D"}

當您上傳並選取評估檔案時,將會傳回前三行的預覽:

您可以選擇任何先前上傳的數據集,或上傳新的數據集。

產生回應 (選擇性)

您在評估中使用的提示應該符合您計劃用於生產環境的提示。 這些提示會提供要遵循之模型的指示。 與遊樂場體驗類似,您可以建立多個輸入,以在提示中包含很少拍攝的範例。 如需詳細資訊,請參閱 提示工程技術 ,以取得提示設計和提示工程中某些進階技術的詳細數據。

您可以使用 格式參考提示 {{input.column_name}} 內的輸入數據,其中column_name對應至輸入檔中的數據行名稱。

評估期間產生的輸出將會使用 {{sample.output_text}} 格式在後續步驟中參考。

注意

您必須使用雙大括弧,以確保正確參考數據。

模型部署

在建立評估時,您將挑選產生回應時要使用的模型(選擇性),以及評分具有特定測試準則的模型時要使用的模型。

在 Azure OpenAI 中,您將指派特定的模型部署,以作為評估的一部分使用。 您可以為每個模型建立個別的評估組態來比較多個部署。 這可讓您為每個評估定義特定的提示,以更好地控制不同模型所需的變化。

您可以評估基底或微調的模型部署。 清單中可用的部署取決於您在 Azure OpenAI 資源內建立的部署。 如果您找不到所需的部署,您可以從 Azure OpenAI 評估版頁面建立新的部署。

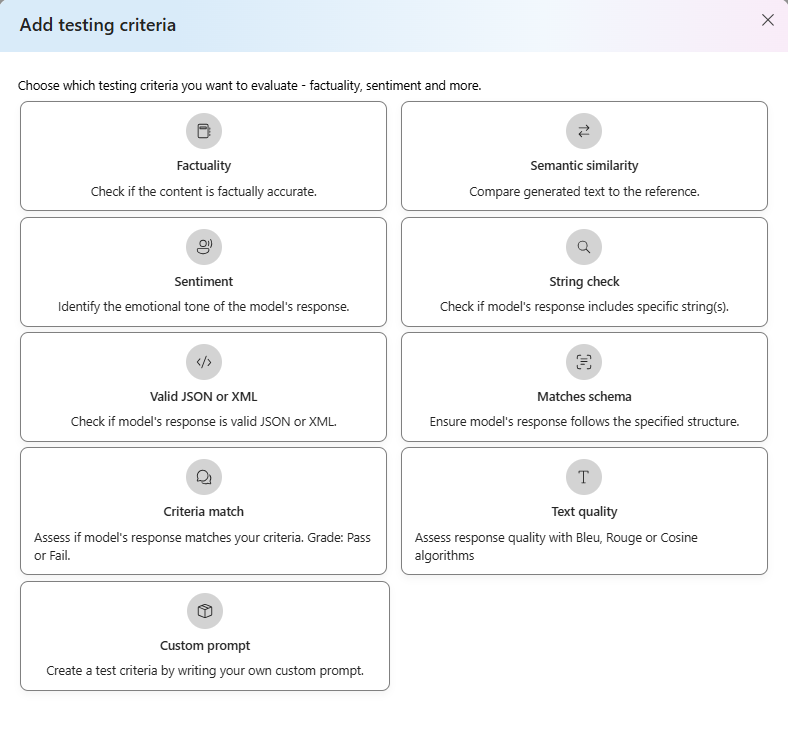

測試準則

測試準則可用來評估目標模型所產生的每個輸出的有效性。 這些測試會比較輸入數據與輸出數據,以確保一致性。 您可以彈性地設定不同的準則,以測試及測量不同層級輸出的質量和相關性。

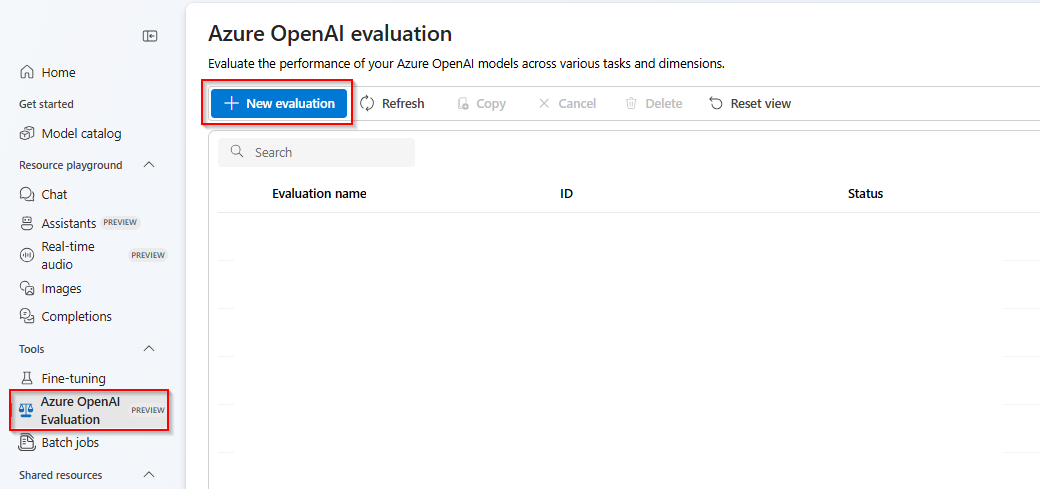

開始使用

在 Azure AI Foundry 入口網站中選取 Azure OpenAI 評估版(預覽版 )。 若要查看此檢視,可能需要先選取支援區域中的現有 Azure OpenAI 資源。

選取 [新增評估]

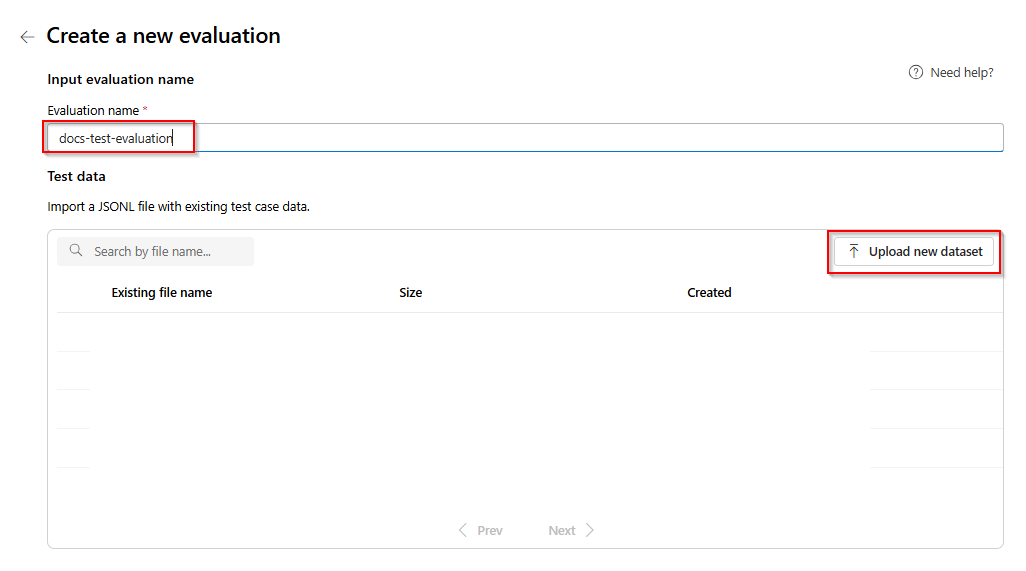

輸入評估的名稱。 除非您編輯並取代它,否則預設會自動產生隨機名稱。 > 選取 [ 上傳新數據集]。

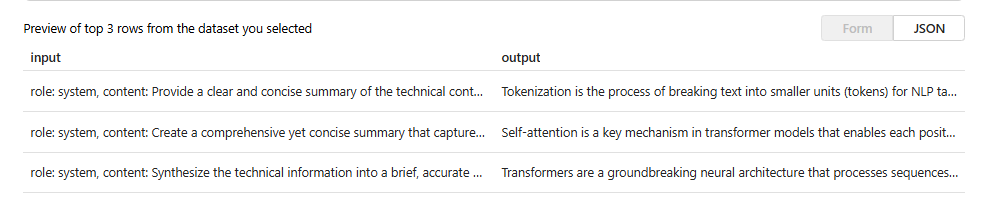

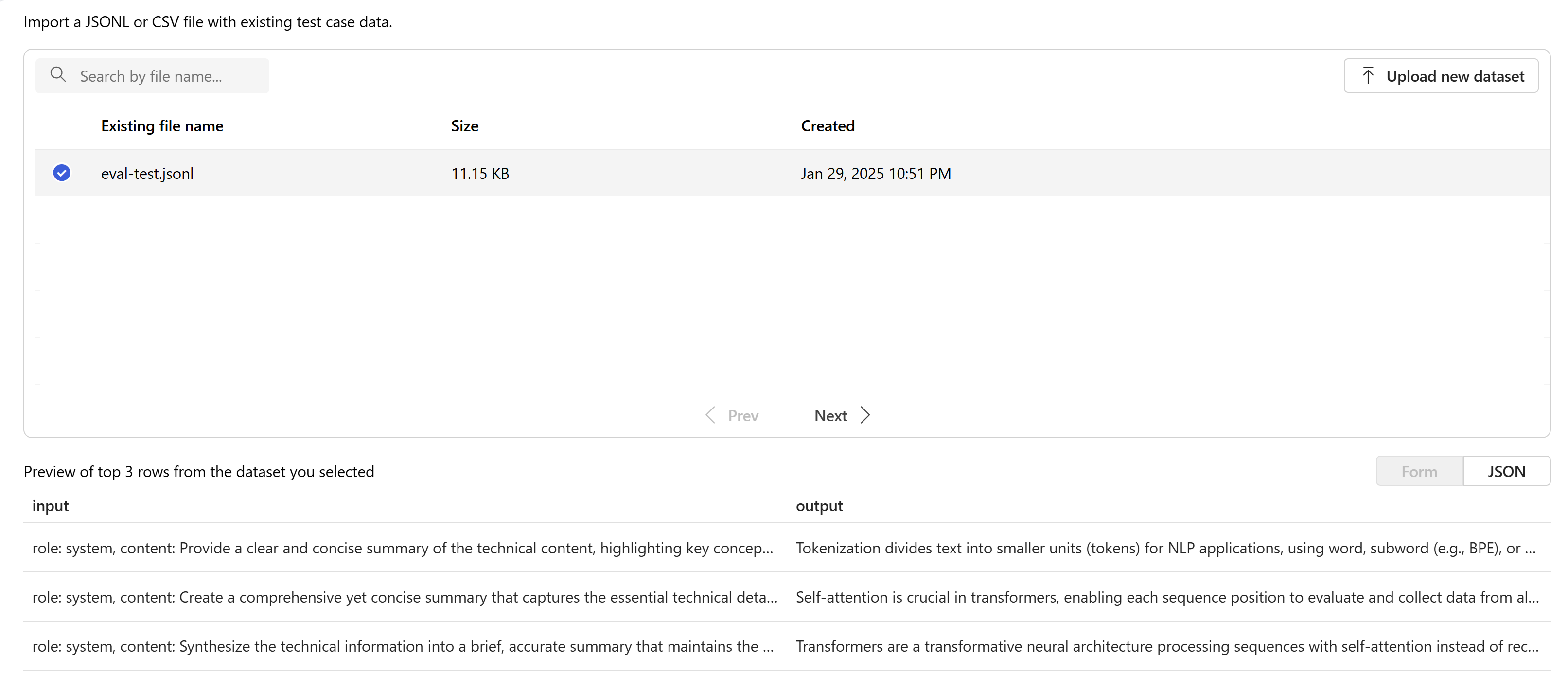

選取您的評估,其格式為

.jsonl。 如果您需要範例測試檔案,您可以將這 10 行儲存至名為 的eval-test.jsonl檔案:{"input": [{"role": "system", "content": "Provide a clear and concise summary of the technical content, highlighting key concepts and their relationships. Focus on the main ideas and practical implications."}, {"role": "user", "content": "Tokenization is a key step in preprocessing for natural language processing, involving the division of text into smaller components called tokens. These can be words, subwords, or characters, depending on the method chosen. Word tokenization divides text at word boundaries, while subword techniques like Byte Pair Encoding (BPE) or WordPiece can manage unknown words by breaking them into subunits. Character tokenization splits text into individual characters, useful for multiple languages and misspellings. The tokenization method chosen greatly affects model performance and its capacity to handle various languages and vocabularies."}], "output": "Tokenization divides text into smaller units (tokens) for NLP applications, using word, subword (e.g., BPE), or character methods. Each has unique benefits, impacting model performance and language processing capabilities."} {"input": [{"role": "system", "content": "Create a comprehensive yet concise summary that captures the essential technical details and significance of the topic."}, {"role": "user", "content": "Self-attention mechanisms are vital in modern transformer models, allowing them to evaluate the relevance of different parts of an input sequence when processing each element. In self-attention, each position in a sequence learns to focus on all other positions, forming a weighted sum of their values. Attention weights are derived using queries, keys, and values, with compatibility between a query and key determining attention to each value. This mechanism enables capturing long-range dependencies and relationships within a sequence, making it effective for tasks needing context understanding and inter-part relationships."}], "output": "Self-attention is crucial in transformers, enabling each sequence position to evaluate and collect data from all others. It uses queries, keys, and values for attention weights, effectively capturing long-range dependencies and contextual links."} {"input": [{"role": "system", "content": "Synthesize the technical information into a brief, accurate summary that maintains the core concepts and their significance."}, {"role": "user", "content": "The transformer architecture significantly changed natural language processing by introducing a new method for sequence processing. Unlike recurrent neural networks, transformers process whole sequences at once using self-attention. The architecture consists of an encoder and decoder, each with multiple layers of multi-head attention, feed-forward networks, and normalization layers. The encoder processes the input sequence, and the decoder produces the output sequence. Positional encodings are added to retain sequence order information since the model lacks inherent sequence position awareness. This architecture underlies many leading language models like BERT and GPT."}], "output": "Transformers are a transformative neural architecture processing sequences with self-attention instead of recurrence. Their encoder-decoder design, with multi-head attention and position encodings, is fundamental to modern language models like BERT and GPT."} {"input": [{"role": "system", "content": "Distill the technical content into a clear, concise summary that preserves the most important concepts and their practical applications."}, {"role": "user", "content": "Cosine similarity is a metric for measuring similarity between vectors by calculating the cosine of the angle between them. The outcome ranges from -1 to 1, with 1 indicating identical orientation, 0 orthogonality, and -1 opposite orientation. In machine learning and NLP, cosine similarity is useful for comparing document vectors, word embeddings, or any high-dimensional representations. It's often preferred over Euclidean distance because it's invariant to vector magnitude, focusing instead on orientation. This makes it particularly useful for comparing documents or embeddings of various lengths or scales."}], "output": "Cosine similarity evaluates vector similarity by computing their angle's cosine, with values from -1 to 1. It's widely used in ML and NLP for comparing document vectors and embeddings due to its magnitude-invariant nature."} {"input": [{"role": "system", "content": "Provide a succinct summary that captures the key technical concepts and their relationships while maintaining clarity and accuracy."}, {"role": "user", "content": "Neural network activation functions are crucial for introducing non-linearity to deep learning models. Commonly used activation functions include ReLU (Rectified Linear Unit), which outputs the input directly if positive and zero otherwise, sigmoid, which compresses values between 0 and 1, and tanh, which normalizes values between -1 and 1. Each function has specific benefits and applications: ReLU avoids vanishing gradients and enables sparse activation, sigmoid is useful for binary classification, and tanh is often used in hidden layers for its zero-centered output. The activation function choice greatly affects model training dynamics and ultimate performance."}], "output": "Activation functions introduce non-linearity to neural networks, with ReLU, sigmoid, and tanh being the most common. Each serves distinct purposes: ReLU prevents vanishing gradients, sigmoid is suitable for binary classification, and tanh offers zero-centered output, all affecting model performance differently."} {"input": [{"role": "system", "content": "Create a focused summary that emphasizes the main technical concepts and their practical implications while maintaining accuracy."}, {"role": "user", "content": "Batch normalization is a technique that stabilizes and accelerates deep neural network training by normalizing each layer's inputs. It normalizes activations using the mean and variance computed over the current data batch. This normalization reduces internal covariate shift, where the input distribution changes during training. Batch normalization includes learnable parameters (gamma and beta) allowing the network to reverse normalization if necessary. Benefits include enabling higher learning rates, lessening the importance of precise parameter initialization, and serving as a regularizer, potentially removing the need for dropout."}], "output": "Batch normalization stabilizes neural network training by normalizing layer inputs using batch statistics. It addresses internal covariate shift with learnable parameters, allowing higher learning rates and improved training dynamics, while offering regularization benefits."} {"input": [{"role": "system", "content": "Summarize the technical content while preserving the essential concepts and their interconnections, ensuring clarity and precision."}, {"role": "user", "content": "Gradient descent optimization algorithms are foundational to training machine learning models. The basic version updates parameters in the steepest descent direction of the loss function. Variants like Stochastic Gradient Descent (SGD) use random samples to estimate gradients, enhancing computational efficiency. Advanced optimizers like Adam combine momentum (helping overcome local minima) and RMSprop (adapting learning rates for each parameter). These optimizers maintain running averages of gradients and their squares, enabling adaptive learning rates and more efficient training. The optimizer choice can significantly influence model convergence speed and final performance."}], "output": "Gradient descent algorithms optimize ML models by updating parameters based on loss gradients. Variants like SGD use sampling for efficiency, while advanced optimizers like Adam combine momentum with adaptive learning rates for improved training performance."} {"input": [{"role": "system", "content": "Deliver a concise yet comprehensive summary that highlights the key technical aspects and their practical significance."}, {"role": "user", "content": "Cross-validation is a resampling method used to evaluate machine learning models on limited data. The most common form, k-fold cross-validation, involves dividing the dataset into k equal parts, training the model on k-1 folds while validating on the remaining fold, and rotating through all combinations. This provides a more robust model performance assessment than a single train-test split, helping detect overfitting and estimate model generalization to unseen data. Variations include stratified k-fold (maintaining class distribution), leave-one-out (k equals dataset size), and time series cross-validation for temporal data."}], "output": "Cross-validation evaluates ML models by training and testing on different data splits, typically using k-fold methodology. This approach offers better performance assessment than single splits, with variations for different data types and requirements."} {"input": [{"role": "system", "content": "Generate a clear and focused summary that captures the essential technical details while maintaining their relationships and significance."}, {"role": "user", "content": "Transfer learning is a machine learning method where a model developed for one task is reused as the starting point for a model on a second task. This approach is powerful in deep learning, where pre-trained models on large datasets (like ImageNet for computer vision or BERT for NLP) are fine-tuned on specific downstream tasks. Transfer learning reduces the need for large amounts of task-specific training data and computational resources, as the model has already learned useful features from the source domain. Common strategies include feature extraction (freezing pre-trained layers) and fine-tuning (updating all or some pre-trained weights)."}], "output": "Transfer learning reuses models trained on one task for different tasks, particularly effective in deep learning. It leverages pre-trained models through feature extraction or fine-tuning, reducing data and computational needs for new tasks."} {"input": [{"role": "system", "content": "Provide a precise and informative summary that distills the key technical concepts while maintaining their relationships and practical importance."}, {"role": "user", "content": "Ensemble methods combine multiple machine learning models to create a more robust and accurate predictor. Common techniques include bagging (training models on random data subsets), boosting (sequentially training models to correct earlier errors), and stacking (using a meta-model to combine base model predictions). Random Forests, a popular bagging method, create multiple decision trees using random feature subsets. Gradient Boosting builds trees sequentially, with each tree correcting the errors of previous ones. These methods often outperform single models by reducing overfitting and variance while capturing different data aspects."}], "output": "Ensemble methods enhance prediction accuracy by combining multiple models through techniques like bagging, boosting, and stacking. Popular implementations include Random Forests (using multiple trees with random features) and Gradient Boosting (sequential error correction), offering better performance than single models."}您將會看到檔案的前三行作為預覽:

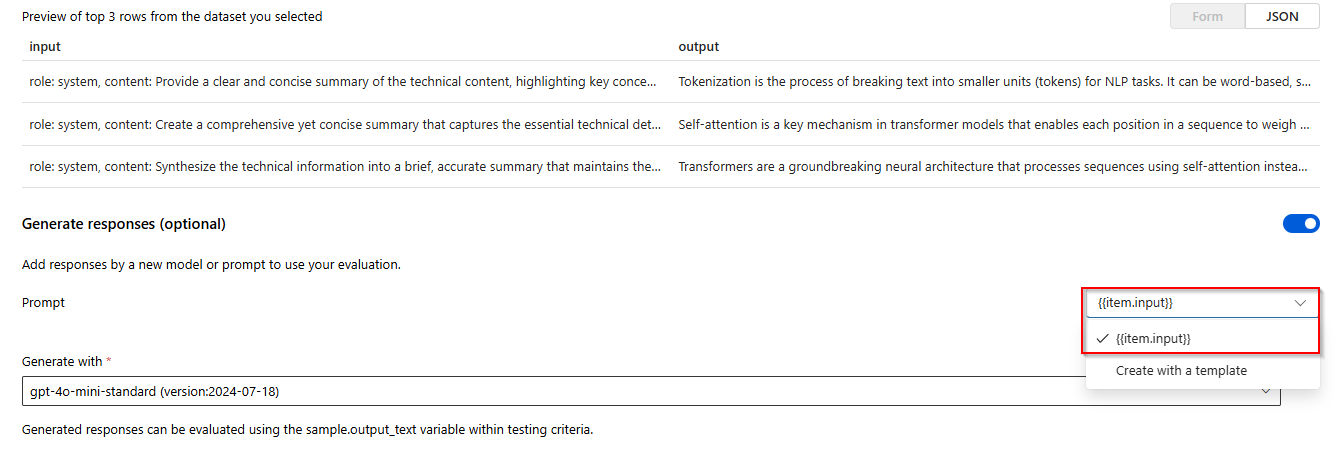

選取 [ 產生回應] 的切換。 從下拉式清單中選取

{{item.input}}。 這會將評估檔案中的輸入字段插入個別提示,以取得我們想要與評估數據集進行比較的新模型執行。 模型會接受該輸入併產生自己的唯一輸出,在此情況下,該輸出會儲存在稱為{{sample.output_text}}的變數中。 我們稍後會使用該範例輸出文字作為測試準則的一部分。 或者,您可以手動提供自己的自定義系統訊息和個別訊息範例。選取您想要根據評估產生回應的模型。 如果您沒有模型,可以建立模型。 為了此範例的目的,我們使用的標準

gpt-4o-mini部署。settings/sprocket 符號會控制傳遞至模型的基本參數。 目前僅支援下列參數:

- 溫度

- 最大長度

- 頂端 P

不論您選取的模型為何,目前長度上限為 2048。

選取 [新增測試準則 ] 選取 [新增]。

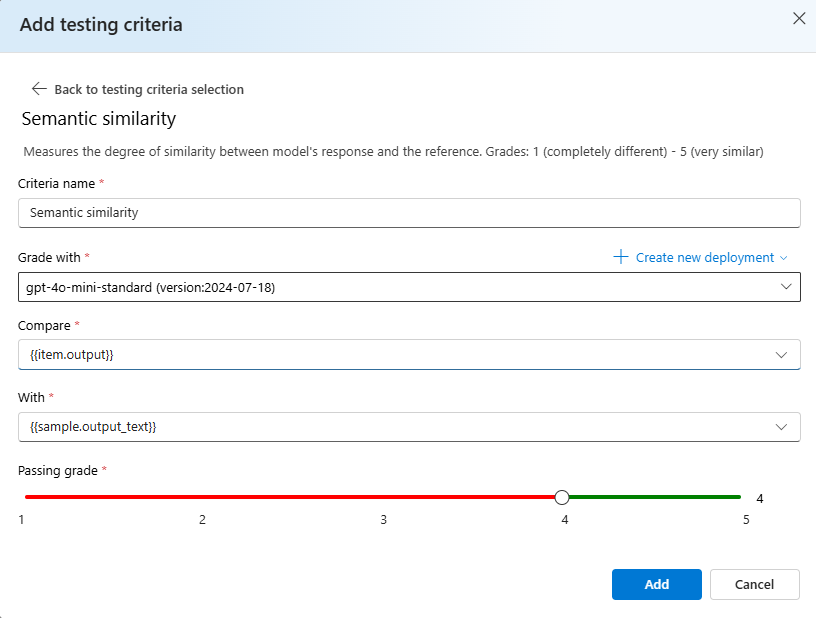

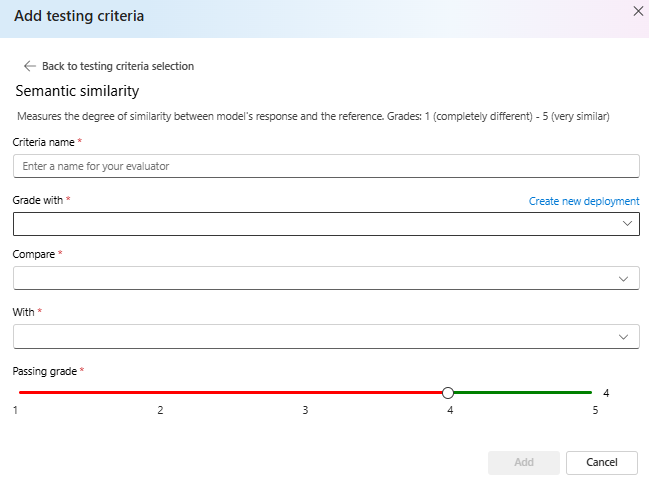

在 [比較新增] 下方的 [語意相似度>] 下選取 [新增

{{sample.output_text}}]。{{item.output}}這會取得評估.jsonl檔案的原始參考輸出,並根據 提供模型提示{{item.input}},將其與所產生的輸出進行比較。>選取 [此時新增],您可以新增其他測試準則,或選取 [建立] 來起始評估作業執行。

選取 [ 建立 ] 之後,系統會將您帶到評估作業的狀態頁面。

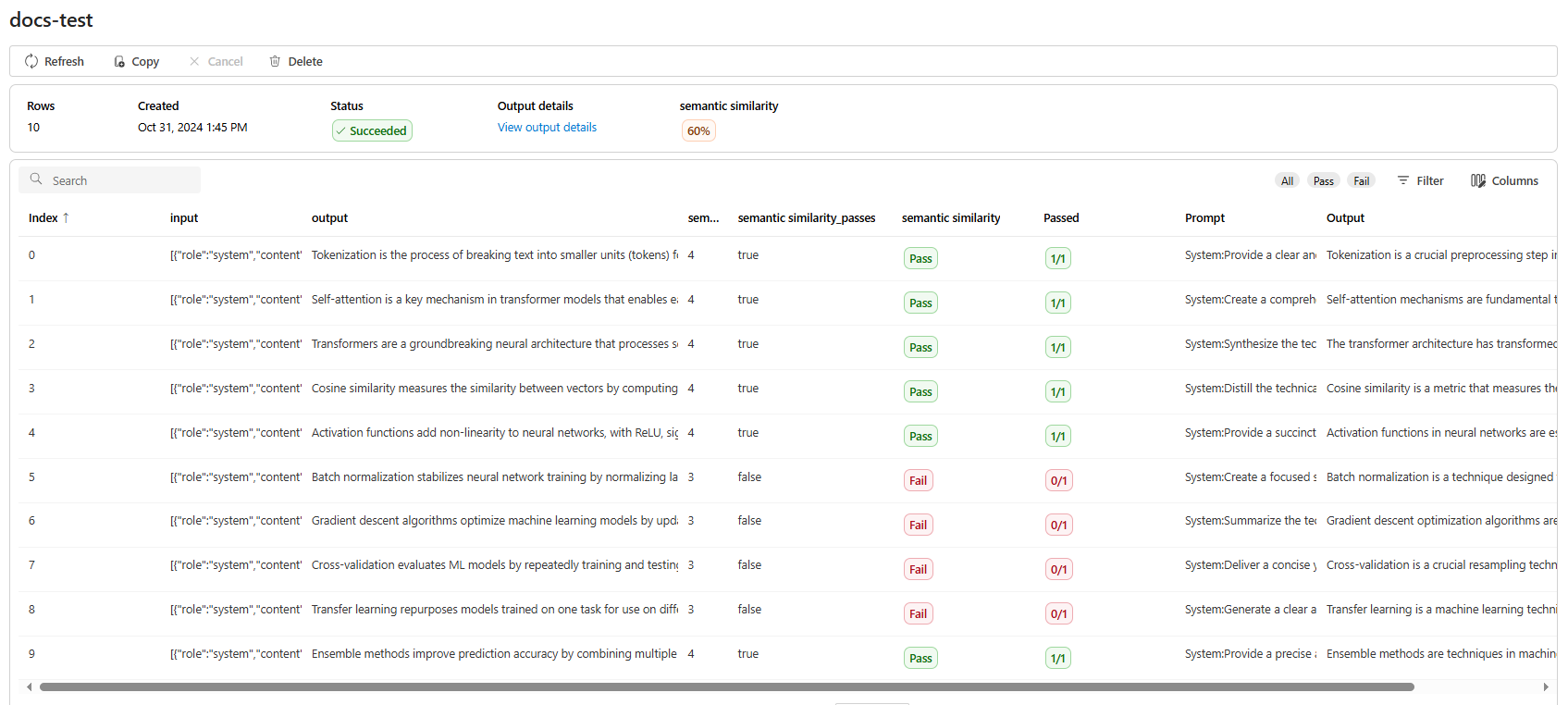

建立評估作業之後,您可以選取作業以檢視作業的完整詳細數據:

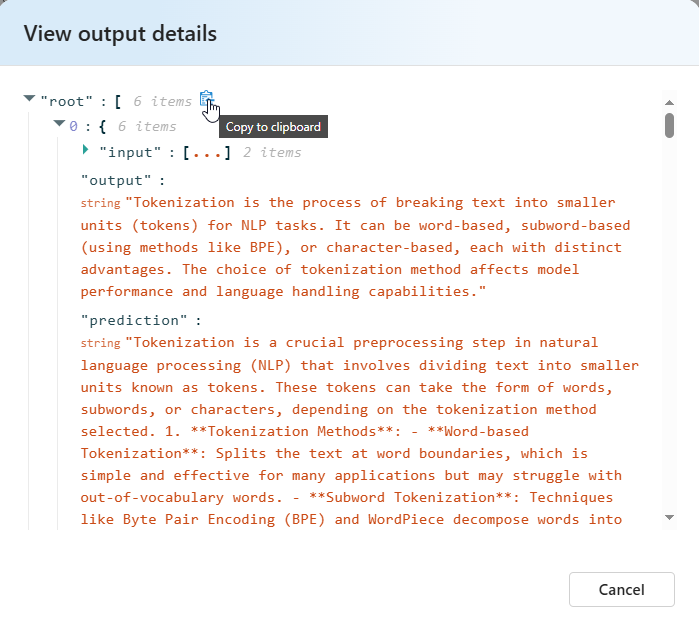

針對語意相似性 檢視輸出詳細 數據,包含 JSON 表示法,您可以複製/貼上通過的測試。

測試準則詳細數據

Azure OpenAI 評估提供多個測試準則選項。 下一節提供每個選項的其他詳細數據。

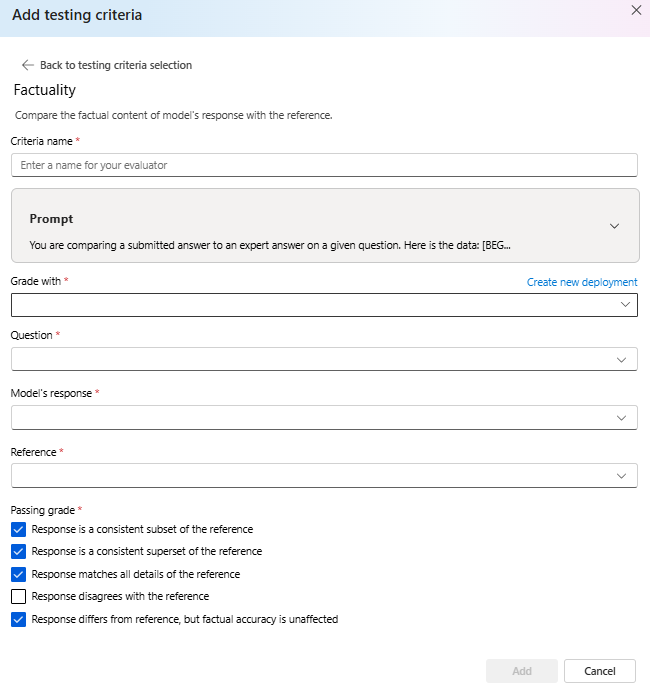

事實

藉由比較提交答案與專家答案,評估提交答案的事實正確性。

事實性會藉由比較提交答案與專家答案來評估所提交答案的事實精確度。 使用詳細的思考鏈結(CoT)提示時,評分員會判斷提交的答案是否與、子集、超集或與專家答案衝突。 它無視樣式、文法或標點符號的差異,只關注事實內容。 事實在許多案例中都很有用,包括但不限於內容驗證和教育工具,以確保 AI 提供的答案正確性。

您可以選取提示旁邊的下拉式清單,來檢視作為此測試準則一部分的提示文字。 目前的提示文字為:

Prompt

You are comparing a submitted answer to an expert answer on a given question.

Here is the data:

[BEGIN DATA]

************

[Question]: {input}

************

[Expert]: {ideal}

************

[Submission]: {completion}

************

[END DATA]

Compare the factual content of the submitted answer with the expert answer. Ignore any differences in style, grammar, or punctuation.

The submitted answer may either be a subset or superset of the expert answer, or it may conflict with it. Determine which case applies. Answer the question by selecting one of the following options:

(A) The submitted answer is a subset of the expert answer and is fully consistent with it.

(B) The submitted answer is a superset of the expert answer and is fully consistent with it.

(C) The submitted answer contains all the same details as the expert answer.

(D) There is a disagreement between the submitted answer and the expert answer.

(E) The answers differ, but these differences don't matter from the perspective of factuality.

語意相似性

測量模型回應與參考之間的相似度。 Grades: 1 (completely different) - 5 (very similar).

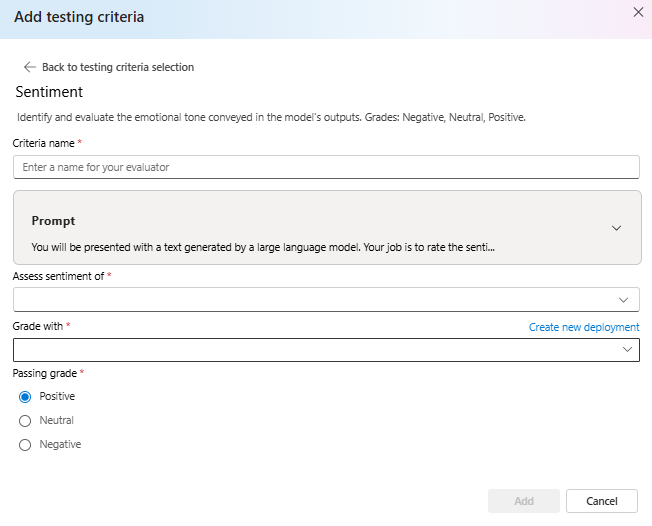

情緒

嘗試識別輸出的情感基調。

您可以選取提示旁邊的下拉式清單,來檢視作為此測試準則一部分的提示文字。 目前的提示文字為:

Prompt

You will be presented with a text generated by a large language model. Your job is to rate the sentiment of the text. Your options are:

A) Positive

B) Neutral

C) Negative

D) Unsure

[BEGIN TEXT]

***

[{text}]

***

[END TEXT]

First, write out in a step by step manner your reasoning about the answer to be sure that your conclusion is correct. Avoid simply stating the correct answers at the outset. Then print only the single character (without quotes or punctuation) on its own line corresponding to the correct answer. At the end, repeat just the letter again by itself on a new line

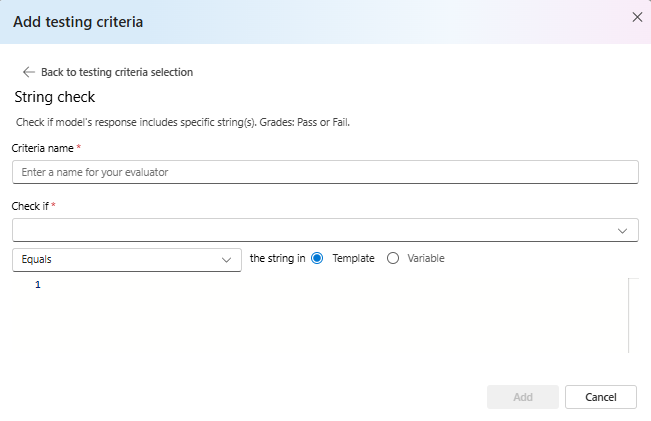

字串檢查

驗證輸出是否符合預期的字串。

字串檢查會對允許各種評估準則的兩個字元串變數執行各種二進位作業。 它有助於驗證各種字串關聯性,包括相等、內含專案和特定模式。 此評估工具允許區分大小寫或不區分大小寫的比較。 它也針對 true 或 false 結果提供指定的成績,允許根據比較結果自定義的評估結果。 以下是支援的作業類型:

equals:檢查輸出字串是否完全等於評估字串。contains:檢查評估字串是否為輸出字串的子字串。starts-with:檢查輸出字串是否以評估字串開頭。ends-with:檢查輸出字串是否以評估字串結尾。

注意

在測試準則中設定特定參數時,您可以選擇變數與範本。 如果您想要參考輸入資料中的數據行,請選取 變數 。 如果您想要提供固定字串,請選擇 範本 。

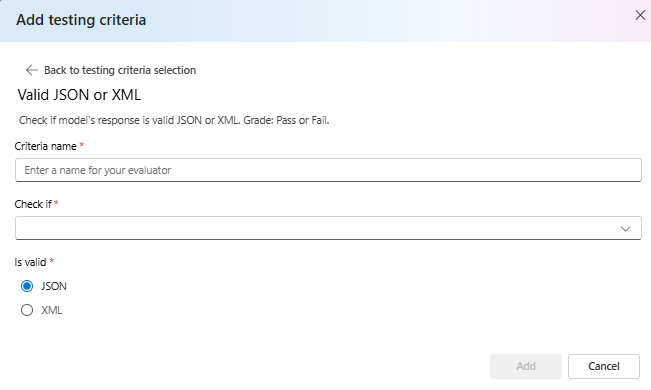

有效的 JSON 或 XML

驗證輸出是否為有效的 JSON 或 XML。

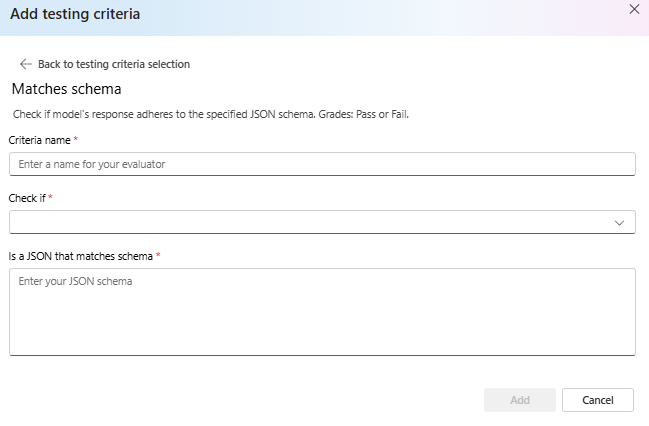

符合架構

確保輸出遵循指定的結構。

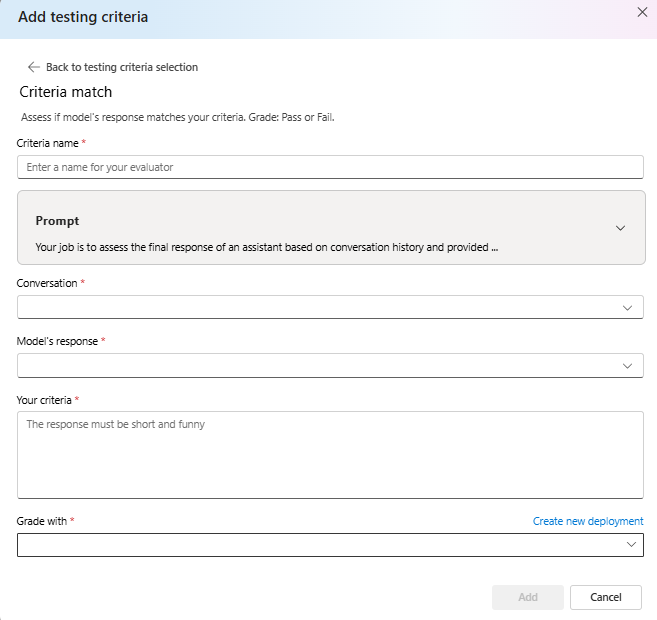

準則比對

評估模型的回應是否符合您的準則。 成績:通過或失敗。

您可以選取提示旁邊的下拉式清單,來檢視作為此測試準則一部分的提示文字。 目前的提示文字為:

Prompt

Your job is to assess the final response of an assistant based on conversation history and provided criteria for what makes a good response from the assistant. Here is the data:

[BEGIN DATA]

***

[Conversation]: {conversation}

***

[Response]: {response}

***

[Criteria]: {criteria}

***

[END DATA]

Does the response meet the criteria? First, write out in a step by step manner your reasoning about the criteria to be sure that your conclusion is correct. Avoid simply stating the correct answers at the outset. Then print only the single character "Y" or "N" (without quotes or punctuation) on its own line corresponding to the correct answer. "Y" for yes if the response meets the criteria, and "N" for no if it does not. At the end, repeat just the letter again by itself on a new line.

Reasoning:

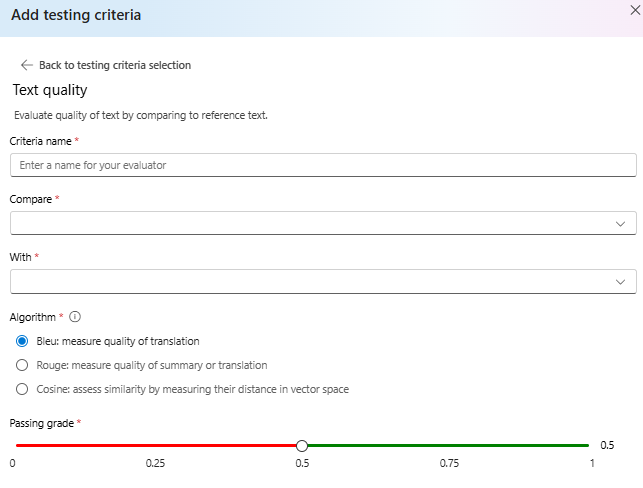

文字品質

藉由比較參考文字來評估文字的品質。

摘要:

- BLEU分數:使用BLEU分數比較文字與一或多個高品質參考翻譯,以評估產生的文字品質。

- ROUGE 分數:使用 ROUGE 分數比較文字與參考摘要,以評估產生的文字品質。

- 餘弦值:也稱為餘弦相似度測量兩個文字內嵌的方式,例如模型輸出和參考文字,在意義上對齊,有助於評估兩者之間的語意相似性。 這是藉由測量向量空間中的距離來完成。

詳細資料:

BLEU(BiLingual Evaluation Understudy)分數通常用於自然語言處理(NLP)和機器翻譯。 它廣泛使用於文字摘要和文字產生使用案例中。 它會評估產生的文字與參考文字的相符程度。 BLEU分數的範圍從 0 到 1,分數越高,表示品質更好。

ROUGE (召回率導向的摘要評估) 是一組用來評估自動摘要和機器翻譯的計量。 它會測量產生的文字與參考摘要之間的重疊。 ROUGE 著重於召回導向量值,以評估產生的文字涵蓋參考文字的方式。 ROUGE 分數提供各種計量,包括: • ROUGE-1:產生的單字與參考文字之間的單文重疊。 • ROUGE-2:產生的文字與參考文字之間的 bigrams 重疊(兩個連續單字)。 • ROUGE-3:產生的文字與參照文字之間的三克重疊(三個連續單字)。 • ROUGE-4:產生的文字與參照文字之間的四克(四個連續單字)重疊。 • ROUGE-5:產生的文字與參考文字之間的五克(五個連續單字)重疊。 • ROUGE-L:產生的文字與參考文字之間的 L-gram(L 連續單字)重疊。 文字摘要和文件比較是 ROUGE 的最佳使用案例之一,特別是在文字一致性和相關性十分重要的情況下。

餘弦相似度測量兩個文字內嵌的方式,例如模型輸出和參考文字,在意義上對齊,協助評估兩者之間的語意相似性。 與其他模型型評估工具相同,您必須使用 來提供模型部署以進行評估。

重要

此評估工具僅支援內嵌模型:

text-embedding-3-smalltext-embedding-3-largetext-embedding-ada-002

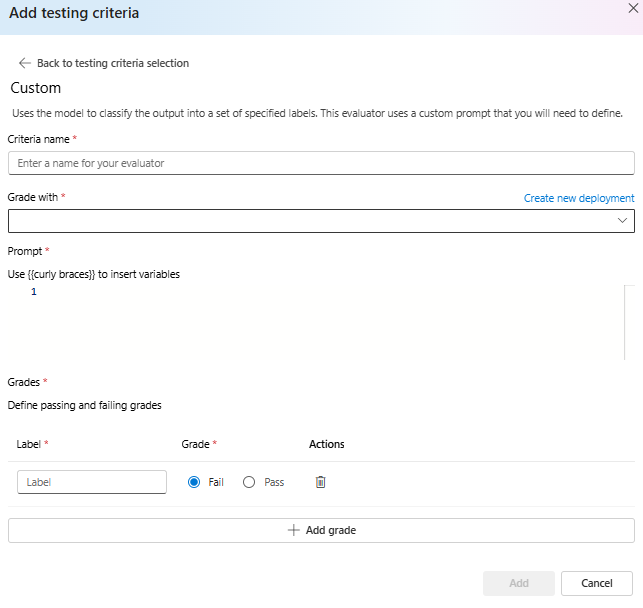

自訂提示

使用模型將輸出分類成一組指定的標籤。 此評估工具會使用您需要定義的自訂提示。