Load Balancing Your Web Server

A recent regression in the ASP.NET performance lab prompted us to make a change to our infrastructure. Our “Hello, World” scenario showed a 23% regression in throughput and a 5% decrease in %CPU. I won’t go into the source change that triggered the regression, but I will demonstrate how tuning our web server’s performance had a big impact on throughput. The change: balancing and increasing the load on the web server.

For reference, the web server has the following specs:

- 4 Intel Xeon Quad-Core processors (16 cores total)

- 4 Broadcom BCM5708C NetXtreme II GigE NICs

Each NIC on the web server has a separate IP address:

```
> nslookup webserver
Server:     dnsserver
Address:    192.168.0.1

Name:       webserver
Addresses:  192.168.0.2
            192.168.0.3
            192.168.0.4
            192.168.0.5
```

If you remember from my “Hello, World” post, we use wcat to apply load from 3 remote, physical clients. Each client uses the web server’s hostname when issuing requests, so ideally round-robin DNS rotates the clients across the four web server IPs.
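To see why round-robin DNS alone might not give each NIC the same share, here is a simplified Python model. It assumes each client resolves the hostname once and then sends all of its requests to the first address it got back; real resolvers may cache, reorder, or pick addresses differently, and `round_robin_assignments` and `requests_per_nic` are hypothetical helpers, not part of wcat. Under that assumption, 3 clients can cover at most 3 of the 4 NICs:

```python
# The four web server addresses from the nslookup output above.
WEB_SERVER_IPS = ["192.168.0.2", "192.168.0.3", "192.168.0.4", "192.168.0.5"]


def round_robin_assignments(num_clients, ips=WEB_SERVER_IPS):
    """Give each client the next address in the rotation.

    Assumption: each client resolves once and then sticks to that address.
    """
    return {f"client{i + 1}": ips[i % len(ips)] for i in range(num_clients)}


def requests_per_nic(assignments, requests_per_client, ips=WEB_SERVER_IPS):
    """Count requests landing on each NIC, assuming equal per-client load."""
    load = {ip: 0 for ip in ips}
    for ip in assignments.values():
        load[ip] += requests_per_client
    return load


print(requests_per_nic(round_robin_assignments(3), 1000))
# {'192.168.0.2': 1000, '192.168.0.3': 1000, '192.168.0.4': 1000, '192.168.0.5': 0}
print(requests_per_nic(round_robin_assignments(4), 1000))
```

With a fourth client, the model spreads requests evenly across all four NICs, which is the same reasoning behind adding a 4th client later in this post.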

Before I proceed, let me describe what happens to incoming network traffic as I understand it:

- The NIC raises a network interrupt when it receives data

- The OS dispatches an ISR (interrupt service routine) to handle the interrupt

- The NIC can’t raise another interrupt while the ISR is running

- The ISR schedules a lower-priority DPC (deferred procedure call) to handle the rest of the work

- The ISR returns quickly so that the NIC can raise more interrupts

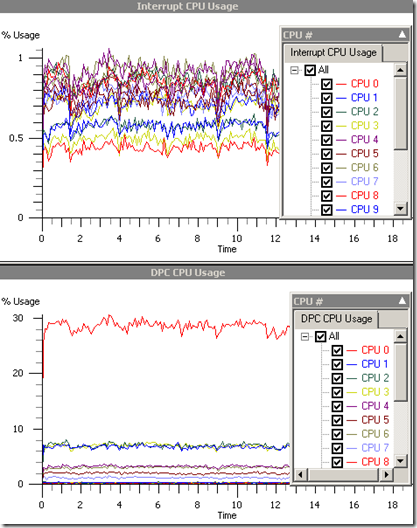
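The ISR/DPC split above can be illustrated with a toy, single-threaded Python model. It is only an analogy: real ISRs and DPCs run in kernel mode at different IRQLs, and `Core`, `isr`, and `run_dpcs` are hypothetical names. The point is that the ISR only queues work and returns, while the expensive processing happens later in the DPC:

```python
from collections import deque


class Core:
    """Toy model of one CPU core handling network receive work."""

    def __init__(self, index):
        self.index = index
        self.dpc_queue = deque()  # deferred work waiting to run
        self.isr_count = 0

    def isr(self, packet):
        # High-priority path: note the interrupt, defer the real work, return.
        self.isr_count += 1
        self.dpc_queue.append(packet)

    def run_dpcs(self):
        # Lower-priority path: drain the queue and do the per-packet work.
        done = []
        while self.dpc_queue:
            packet = self.dpc_queue.popleft()
            done.append(f"cpu{self.index}: handled {packet}")
        return done


core = Core(0)
for pkt in ["GET /a", "GET /b", "GET /c"]:
    core.isr(pkt)               # three interrupts arrive back to back
print(len(core.dpc_queue))      # 3 DPCs queued, none processed yet
print(core.run_dpcs())
```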

If the NICs are getting equal load and RSS (receive-side scaling) is enabled on the web server, the network interrupts and their corresponding DPCs should be distributed across multiple cores. We collected ETW profiles and found that while our interrupts were handled by all cores, DPCs were handled by only 8 of the 16 cores, with cpu0 doing most of the work.
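For intuition about how RSS picks a core, here is a Python sketch of the Toeplitz hash that RSS-capable NICs commonly use: hash the connection’s source/destination addresses and ports, then use the low bits of the hash to index an indirection table of CPUs. The key is the sample key from Microsoft’s RSS verification examples; the table size, the addresses in the usage lines, and the helper names are assumptions for illustration, and real NIC and driver behavior may differ.

```python
import ipaddress
import struct

# Sample 40-byte RSS secret key (from Microsoft's RSS verification examples).
# A real NIC may be configured with a different key.
RSS_KEY = bytes([
    0x6d, 0x5a, 0x56, 0xda, 0x25, 0x5b, 0x0e, 0xc2,
    0x41, 0x67, 0x25, 0x3d, 0x43, 0xa3, 0x8f, 0xb0,
    0xd0, 0xca, 0x2b, 0xcb, 0xae, 0x7b, 0x30, 0xb4,
    0x77, 0xcb, 0x2d, 0xa3, 0x80, 0x30, 0xf2, 0x0c,
    0x6a, 0x42, 0xb7, 0x3b, 0xbe, 0xac, 0x01, 0xfa,
])


def toeplitz_hash(data, key=RSS_KEY):
    """32-bit Toeplitz hash of `data` (bytes)."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    result = 0
    for i in range(len(data) * 8):
        byte_index, bit_index = divmod(i, 8)
        if data[byte_index] & (0x80 >> bit_index):
            # XOR in the 32 key bits starting at bit i (counting from the MSB).
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def rss_cpu(src_ip, dst_ip, src_port, dst_port, indirection_table):
    """Map a TCP/IPv4 connection to a CPU via hash + indirection table."""
    data = (ipaddress.IPv4Address(src_ip).packed
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!HH", src_port, dst_port))
    h = toeplitz_hash(data)
    # For a power-of-two table size this equals masking the low bits.
    return indirection_table[h % len(indirection_table)]


# Hypothetical 128-entry table spreading receive work over 16 cores.
table = [i % 16 for i in range(128)]
cpus = {rss_cpu("192.168.0.10", "192.168.0.2", port, 80, table)
        for port in range(50000, 50200)}
print(f"200 connections landed on {len(cpus)} distinct cores")
```

In this model a given connection always lands on the same CPU, and a core that never appears in the indirection table never receives work for any connection.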

This led us to wonder whether the NICs really were getting equal load from the round-robin DNS lookup. The quickest and easiest way to guarantee a balanced load was to manually edit each client’s etc\hosts file so that each client used a separate NIC. Since there were 4 NICs, we needed a 4th client, which also meant an increase in load.
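Pinning clients to NICs amounts to giving each client a hosts entry that maps the web server’s hostname to a different address. Here is a small Python sketch that generates those lines; the client names are hypothetical, and it assumes exactly one client per NIC, as in our setup:

```python
WEB_SERVER_IPS = ["192.168.0.2", "192.168.0.3", "192.168.0.4", "192.168.0.5"]
HOSTNAME = "webserver"


def hosts_entries(clients, ips=WEB_SERVER_IPS, hostname=HOSTNAME):
    """Return {client: hosts-file line} pinning each client to its own NIC."""
    if len(clients) != len(ips):
        # One client per NIC is what keeps per-NIC load equal in this setup.
        raise ValueError("expected exactly one client per NIC")
    return {client: f"{ip}\t{hostname}" for client, ip in zip(clients, ips)}


for client, line in hosts_entries(["client1", "client2", "client3", "client4"]).items():
    print(f"{client}: {line}")
```

Each generated line goes into the corresponding client’s etc\hosts file.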

The following results are for Server R2 with .NET 4. Baseline is without load balancing; Result is with load balancing.

| Test Name | Metric | Unit | Baseline | Result | Diff | Status | Baseline StdDev % | Result StdDev % |
|---|---|---|---|---|---|---|---|---|
| Helloworld.Helloworld | Processor Time | % | 95.60 | 99.16 | 3.73% | Pass | 0.68 | 0.27 |
| Helloworld.Helloworld | Throughput | req/sec | 28403.67 | 32125.67 | 13.10% | Pass | 0.85 | 0.57 |
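The Diff column is the relative change from Baseline to Result, (Result - Baseline) / Baseline × 100. A quick Python check against the table (`percent_diff` is just an illustrative helper):

```python
def percent_diff(baseline, result):
    """Relative change from Baseline to Result, in percent."""
    return (result - baseline) / baseline * 100


rows = {
    "Processor Time (%)": (95.60, 99.16),
    "Throughput (req/sec)": (28403.67, 32125.67),
}
for name, (baseline, result) in rows.items():
    print(f"{name}: {percent_diff(baseline, result):.2f}%")
```

Recomputing from the rounded table values gives 13.10% for throughput but about 3.72% for %CPU, so the table’s 3.73% presumably comes from the unrounded averages.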

Notice the improvements in both %CPU and throughput. Using Result as our new load-balanced baseline, we re-ran HelloWorld on the same build where we saw the original regression. With the CPU maxed out and the load increased, throughput is now less than 5% off from the baseline, compared with the original 23% regression.

Conclusion: When adding multiple NICs, carefully tune your web server and infrastructure to maximize performance. Profile to verify that your server is behaving as expected. I recognize that load balancing production servers is not this easy, but there should be tools available to help you do this. I don’t work on production servers, so don’t ask me which tools, but I would love to hear about them from you!

Update: To collect the ETW profiles above, I enabled the INTERRUPT and DPC kernel flags on the xperf command line:

```
xperf -on PROC_THREAD+LOADER+INTERRUPT+DPC -f <etwFile>
```
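Once you have the DPC events out of the trace, checking how they are distributed comes down to a per-CPU tally. The sketch below assumes you have already exported the DPC events into (cpu, duration in microseconds) pairs; that record format and `dpc_share_by_cpu` are hypothetical, not xperf’s output, and the sample numbers are made up for illustration, not our lab data.

```python
from collections import Counter


def dpc_share_by_cpu(dpc_records, num_cpus=16):
    """Return {cpu: fraction of total DPC time}, with idle cores as 0.0.

    dpc_records: iterable of (cpu, duration_us) pairs (hypothetical format).
    """
    totals = Counter()
    for cpu, duration_us in dpc_records:
        totals[cpu] += duration_us
    grand_total = sum(totals.values()) or 1  # avoid dividing by zero
    return {cpu: totals[cpu] / grand_total for cpu in range(num_cpus)}


samples = [(0, 500), (0, 300), (1, 100), (2, 50), (3, 50)]  # made-up data
share = dpc_share_by_cpu(samples)
active = [cpu for cpu, frac in share.items() if frac > 0]
print(f"{len(active)} of {len(share)} cores ran DPCs")  # 4 of 16 cores ran DPCs
print(f"cpu0 share: {share[0]:.0%}")                    # cpu0 share: 80%
```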