A TaskScheduler that Limits the Number of Threads

I created an application which uses a Parallel.For loop to create some data and another Parallel.For loop to make comparisons in the data set. This process is repeated a hundred times, with each iteration having a slightly bigger data set. The data is small enough that it is contained in memory and each piece of datum is independent of the others. The problem being solved is very comparable to a matrix multiplication.

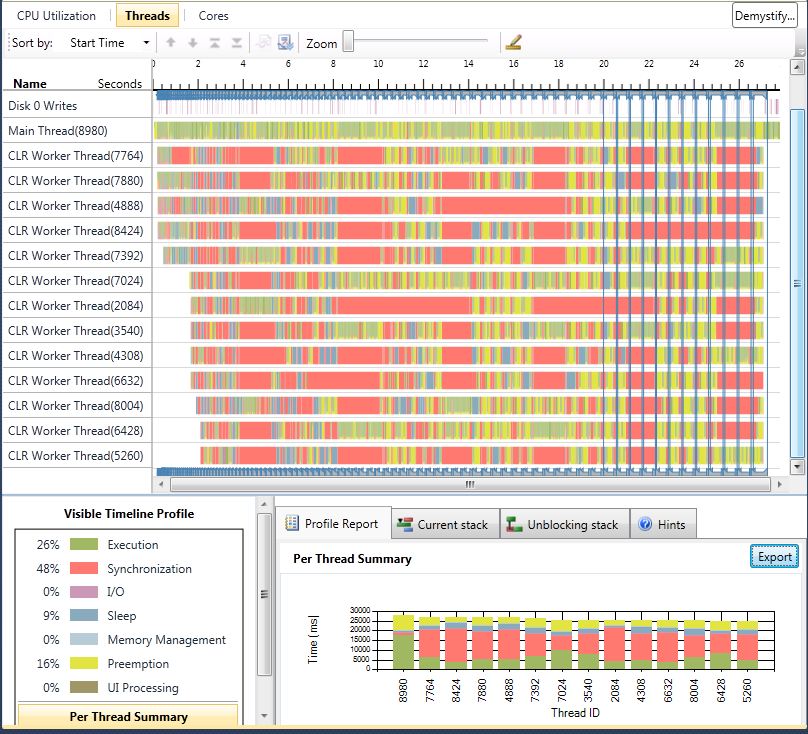

I used the Concurrency Visualizer to profile the application on my quad core workstation and was not surprised when it had more than 80% CPU utilization. Though, I was a little surprised when I looked at the Threads View and saw how many threads the ThreadPool created. I expected it to create roughly four threads since the only calls to the ThreadPool would have been through the Parallel.For’s and there are four logical cores in the computer.

Work does get assigned to each of the thread pool threads, but I felt that creating so many threads for this type of a problem could be less than ideal. If there’s only ever work for four threads to do at a time, why have more? Reading How to: Create a Task Scheduler That Limits the Degree of Concurrency, I used the provided LimitedConcurrencyLevelTaskScheduler class to see if the application would use less threads, and run faster as well. It resulted in a profile of:

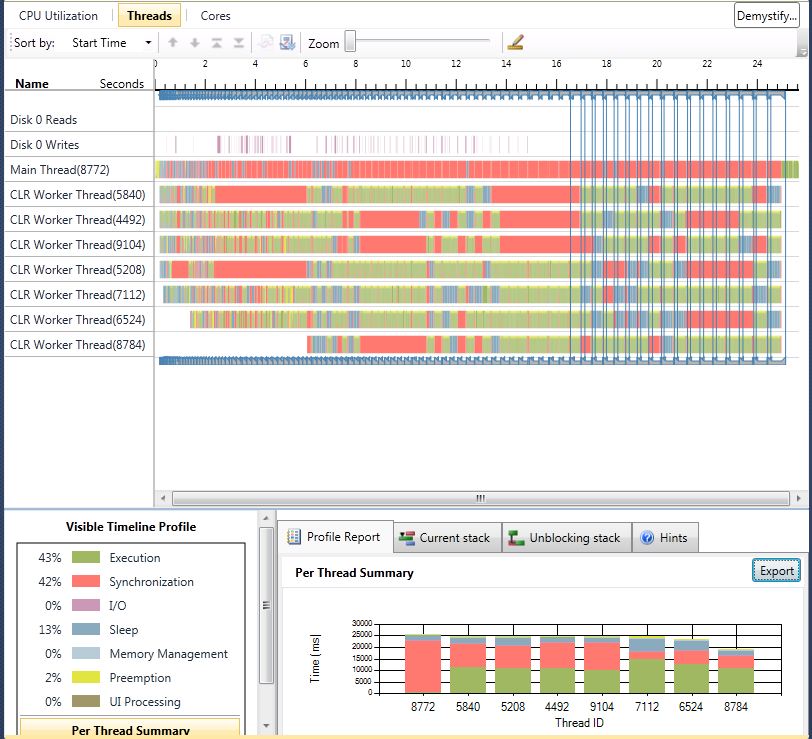

Using the LimitedConcurrencyLevelTaskScheduler with a MaxDegreeOfParallelism of Environment.ProcessorCount resulted in a faster application. It looks like the wall time of the application is two seconds faster than the default scheduler. The main thread does not appear to have been used in the comparison computations, but there definitely is a reduction in the number of threads created. As a consequence of creating fewer threads, less time was spent in Preemption and on average, a greater percentage time was spent in Execution (i.e. 43% vs. 26%). I was hoping that with the limited concurrency, only four threads would’ve been created, but there were seven.

To see if limiting the number of worker threads to the number of logical cores on the computer would result in the fastest run time I implemented my own TaskScheduler. It creates one less thread than cores on the computer. The idea being that the calling thread can be used to do part of the work in the Parallel.For as well.

/// <summary>

/// Scheduler which will use one less than the number of cores on the computer to

/// perform asynchronous tasks.

/// Do not use this scheduler if the tasks would block while new tasks would be added

/// </summary>

public class VeryLimitedScheduler : TaskScheduler, IDisposable

{

private Thread[] pool;

private BlockingCollection<Task> queue;

private CountdownEvent threadsExited;

private readonly TimeSpan TakeFrequency = TimeSpan.FromSeconds(30);

public VeryLimitedScheduler()

{

queue = new BlockingCollection<Task>();

int numberOfThreads = Environment.ProcessorCount - 1;

if (0 < numberOfThreads)

{

threadsExited = new CountdownEvent(numberOfThreads);

pool = new Thread[numberOfThreads];

ThreadStart ts = new ThreadStart(runPool);

for (int core = 0; core < pool.Length; core++)

{

pool[core] = new Thread(ts);

pool[core].Start();

}

}

}

private void runPool()

{

Thread.CurrentThread.IsBackground = true;

try

{

while (!queue.IsCompleted)

{

Task t;

if (queue.TryTake(out t, TakeFrequency))

{

base.TryExecuteTask(t);

}

}

}

finally

{

threadsExited.Signal();

}

}

public override int MaximumConcurrencyLevel

{

get

{

return pool.Length + 1;

}

}

protected override IEnumerable<Task> GetScheduledTasks()

{

return queue.ToList();

}

protected override void QueueTask(Task task)

{

queue.Add(task);

}

protected override bool TryExecuteTaskInline(Task task, bool taskWasPreviouslyQueued)

{

if (!taskWasPreviouslyQueued)

{

return base.TryExecuteTask(task);

}

return false;

}

public void Dispose()

{

if (null != queue)

{

queue.CompleteAdding();

if (null != threadsExited)

{

threadsExited.Wait(TakeFrequency);

threadsExited.Dispose();

}

queue.Dispose();

queue = null;

}

}

}

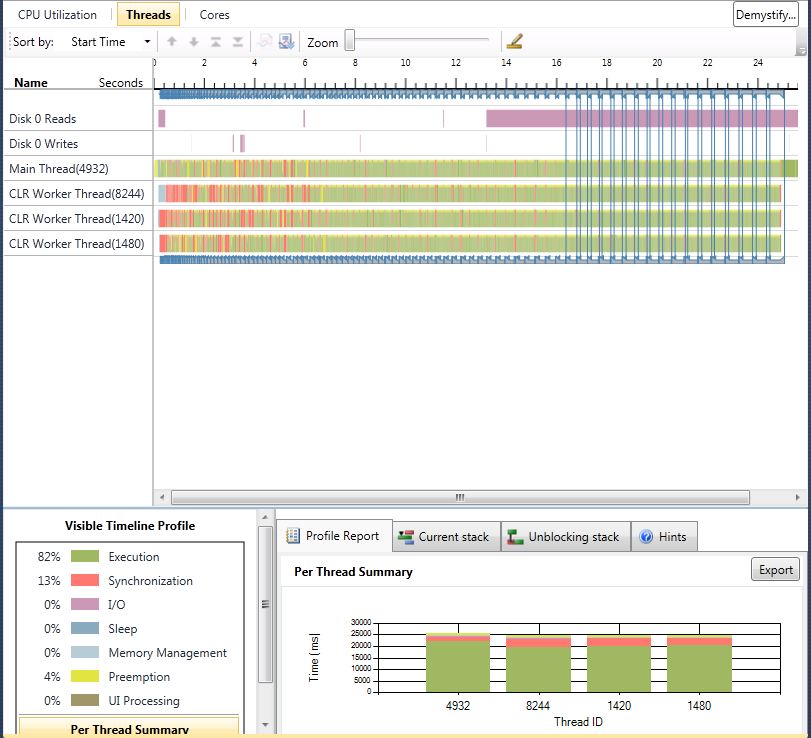

Running with the VeryLimitedScheduler resulted in:

The wall clock time with the VeryLimitedScheduler is only a hair better than it is with the LimitedConcurrencyLevelTaskScheduler. The percentage of time spent in the Execution state is wonderful compared to either of the other two schedulers (82% vs. 43% vs. 26%). Across the board, all of the threads spent more time executing than blocking. So the VeryLimitedScheduler does make the most efficient use of assigning work to threads out of the three schedulers.

With these results in mind, am I going to start using my scheduler over the default one? No. The amount of wall clock timed saved by my scheduler was pretty minimal. Plus there’s probably some bugs in my scheduler which don’t exist in the one provided by the framework. So even though the default scheduler seems to be creating too many threads for my simple application, I’m more than happy to rely on it.

Comments

- Anonymous

June 09, 2013

There is a simple API I found that performs task scheduling with limited concurrency. A task can be defined by a class that implements an IPipelineTask or by using an Action. www.avantprime.com/product/16/pipeline-net www.avantprime.com/product/api/16/pipeline-net Executing tasks can be as simple as: <code> var scheduler = new PipelineScheduler(); // var scheduler = new PipelineScheduler(5); var task = new MyCustomTask(); scheduler.Push(task); scheduler.Push(() => { ... }); </code>