Moving Schema and Data: SQL Azure Migration Wizard

By Dan Stillwell – Data Platform Solution Architect.

In my previous blog I wrote about a scenario where one of my customers wanted to utilise the flexibility of Azure for their SQL Server provisioning. The deployment started as a POC but very quickly became more than that with senior members of the organisation using the functionality. At this point we wanted to demonstrate the flexibility and elasticity of Azure by migrating the POC workload to a platform with HA, and SLA's. At this point we done a feasibility study between using SQL within a VM (IaaS) or Azure SQL DB (PaaS). We decided that Azure SQL DB was the right service offering for the requirements of the customer.

Azure strategies can vary and this is one of the great strengths within cloud technologies; whatever the customer requirements, Azure can generally offer a service to meet them. I have worked with customers who want to migrate all databases to Azure, I have worked with some customer who want to use Azure just for disaster recovery and High availability. This particular customer at present - is using Azure for development/ POC work. Once they are happy with the POC in Azure they then deploy and handover the service to their own Data Centre team.

So with this in mind we need to migrate the Schema and data from the standalone SQL Server within an Azure VM to Azure SQL DB. I will walk through the process, but as stated above I need a tool that can migrate in any 'Azure direction'. What I mean by this is the first stage will be to migrate the data from IaaS SQL Server to PaaS SQL DB, but the same method can be used to move it from PaaS to IaaS, PaaS to PaaS, on premise to Azure or Azure to on premise.

There are a few different ways when migrating data from one environment to another. You can use Generate Script Wizard, Deploy Database Wizard, SQL Server data tools, Export Data Tier, SSIS, BCP, sqlpackage.exe, AZ Copy, Backup/ Restore, Transactional Replication, HA technologies to replicate data (Log Shipping, AlwaysOn) to name a few. The tool I have used for the migration process is 'SQL Database Migration Wizard' the tool can be found from the open source site CodePlex (https://sqlazuremw.codeplex.com/). You can migrate from SQL Server 2005, 2008, 2008R2, 2012, 2014. This is a different tool to the Upgrade Advisor, there is now a SQL Server 2016 Upgrade Advisor available in preview (https://www.microsoft.com/en-us/download/details.aspx?id=48119).

One of the issues with migration is the compatibility between the source data/ instance version and the destination. For example, in Azure SQL DB at present CLR, XML, table partitions. FileStream is not supported. Full updated list of Azure SQL DB support (https://azure.microsoft.com/en-gb/documentation/articles/sql-database-transact-sql-information/#features-not-supported-in-sql-database). This tool is a great way to identify in a planned way what may not work or is not supported on the destination environment. The tool uses regular expressions to analyse the phrases, so it can be configurable by yourself. The tool can fix some issues, but some issues can only be identified and not fixed automatically. This tool can be used as an end to end process for migration (analyse, script out schema, BCP the data) or you can use parts of the tool. For example, use the tool to analyse the schema and then use another method to migration the actual data.

Once you install the tool, you will be welcomed with the following splash screen. Here you can see the different options. Please note that you can capture a profiler trace and analyse that via this tool:

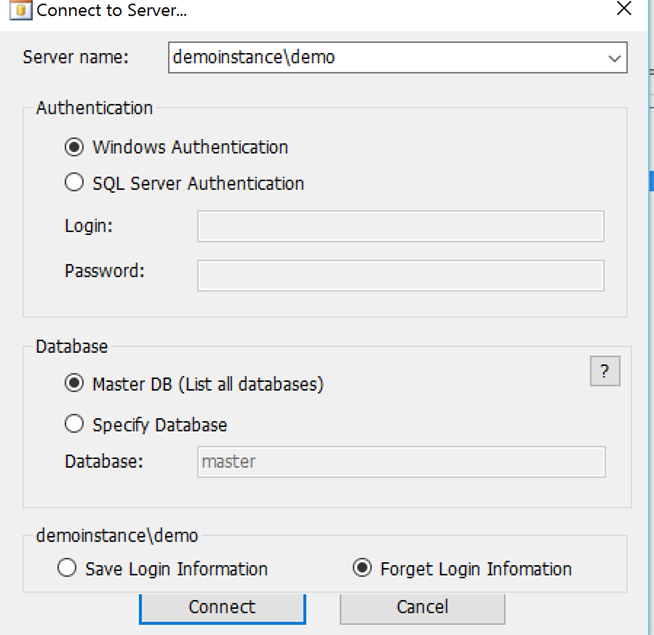

At this stage you will connect to a instance or database of SQL Server:

*You will need to create a firewall rule on Azure for the migration tool to access the Azure VM or Azure SQL DB. The IP address from the machine running the tool will require access.

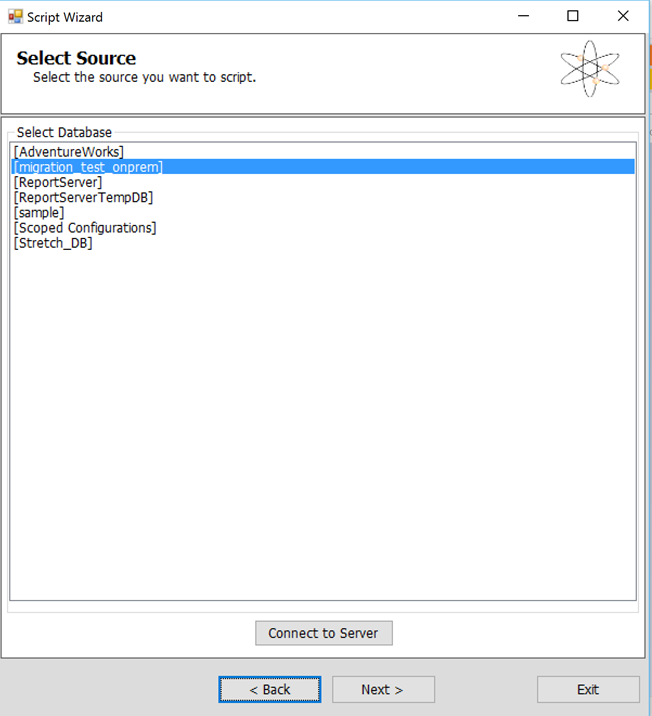

You will then need to select your source database

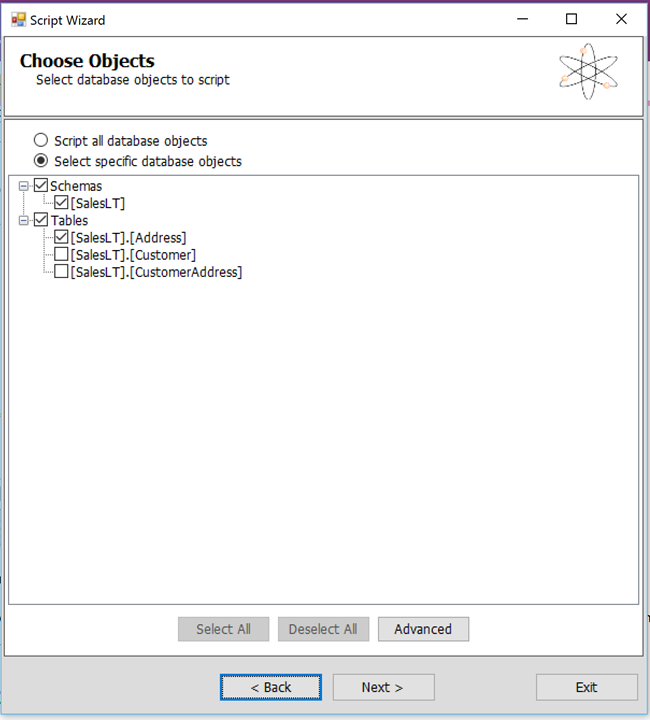

Select all objects or select individual objects.

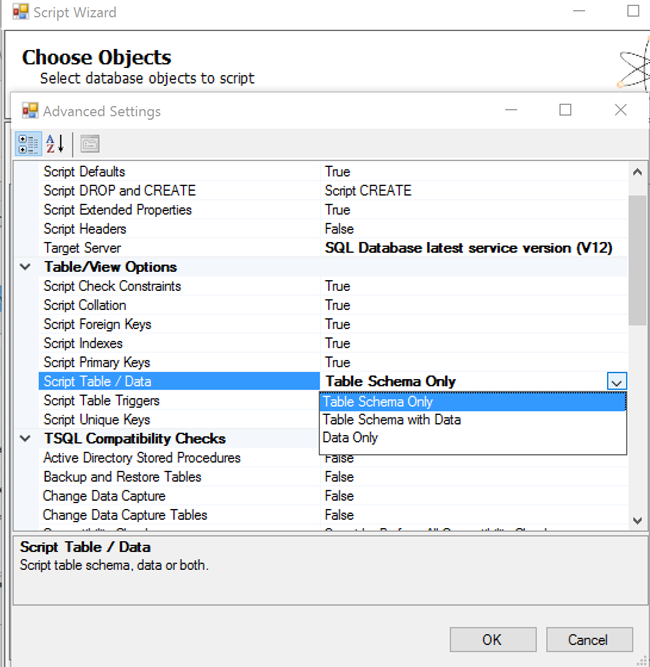

Clicking 'Advanced' gives the option to only script schema, data or both. You may wish to only run the schema script first to fix any compatibility issues.

You can also select or deselect compatibility checks at this stage.

After your selection click next, review and then choose to generate the script.

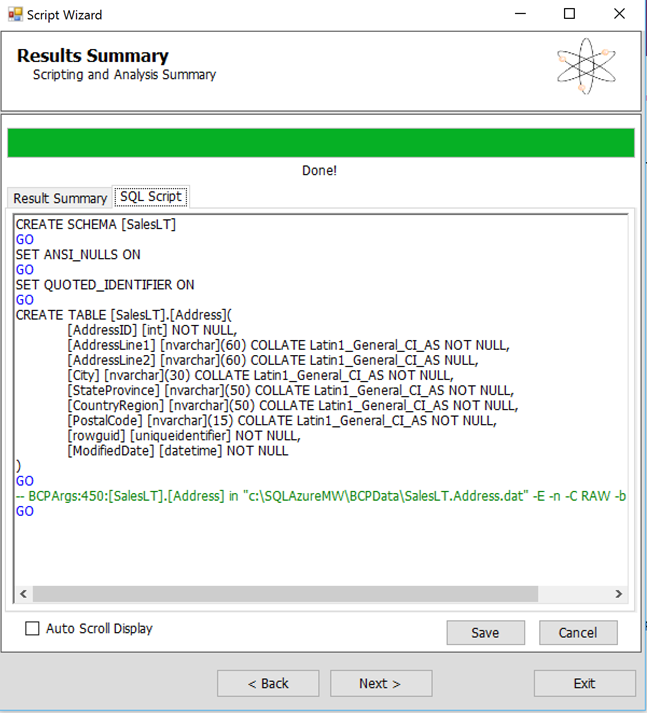

You then get presented with the script which you can then edit within the window, copy out to SSMS or save the script to run manually or edit at another time

In the 'Result Summary' tab any errors will be highlighted in red, you can also see the location of the .dat file where the data has been written to ready for the BCP process to import

Select Next and you will need to enter the destination server

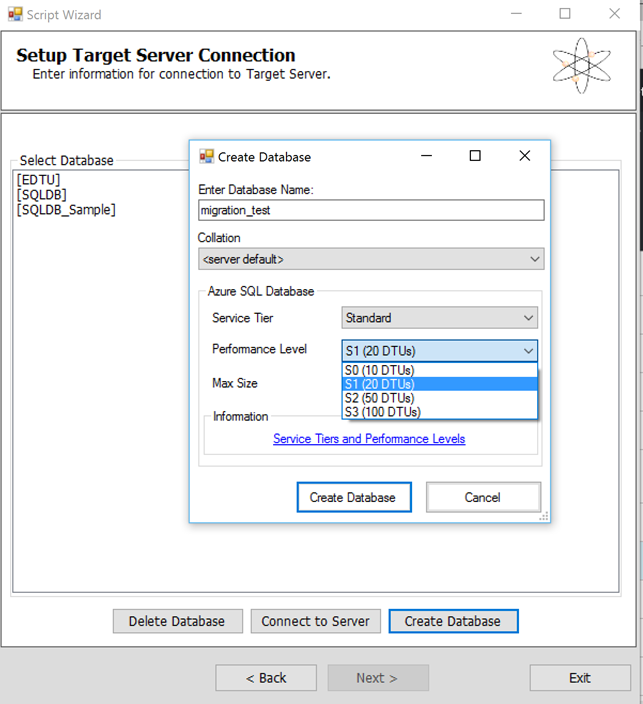

In Azure, you then have an option to select a current SQL Database or create a new one, I am creating a new one when I am selecting a S1 service tier

Once the SQL Database is created, you can then select it from the list and execute the script against it. Alternatively, you can create the database manually and then run the script that you had the option of saving earlier against the database

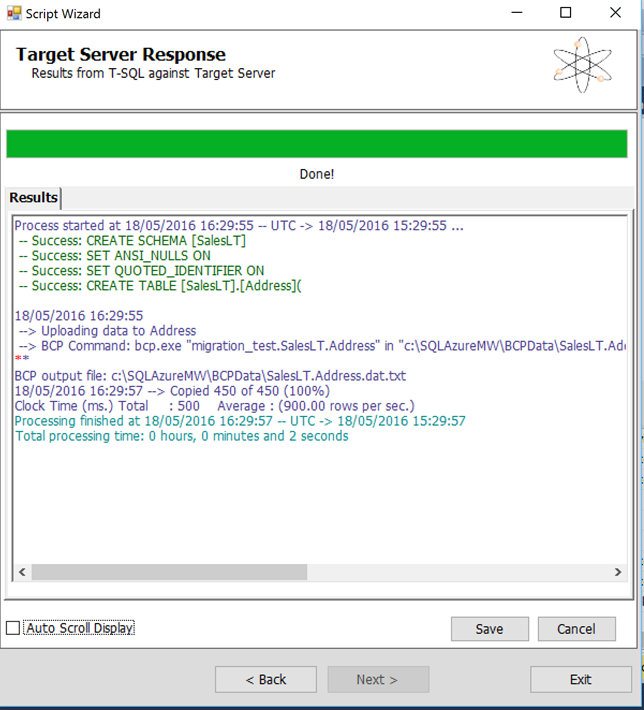

As you can see, the table has been created and the data has been migrated:

Below are some things to highlight when using the tool:

Please note that this tool is not officially supported by Microsoft. It is available on CodePlex which is Microsoft's open source site. Microsoft internal groups do monitor the feedback and discussions and will send a 'best effort' response.

The tool will fix some compatibility issues. Pay attention to the output report for what has been fixed and what needs additional fixing from you.

You can work with the script within the tool, or saving it and editing it in SQL Server Management Studio or SQL Server Data Tools (Visual Studio).

Within your script you can use 'IF Exist', this allows you to run the script multiple times. However, you may not want to script the data at this stage as it will BCP the data multiple times.

The process should be to allow the tool to fix automatically/ apply any recommendations, fix any other issues, migrate the schema, confirm migration of schema is correct, then import the data via running the BCP Data script.

If you are loading a large amount of data, you can run the script from different machines to utilise more threads and processing power.

DAT files are kept by default in 'C:\SQLAzureMW\BCPData)'.

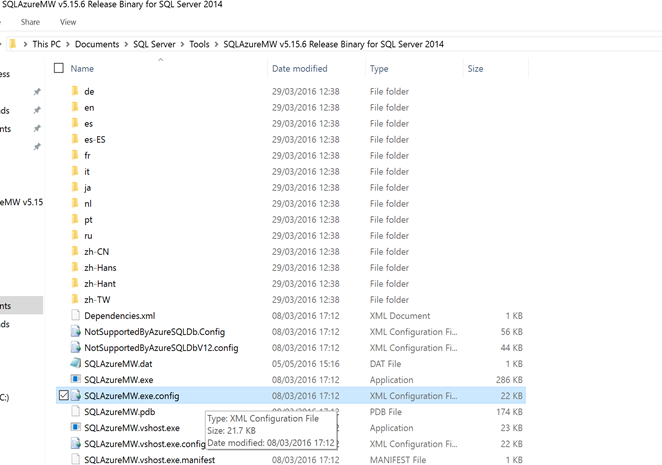

You can increase or decrease the parallel processing of the data migration by altering the 'NumberofBCPThreads' within the SQLAzureMW.exe.config file:

<add key="NumberOfBCPThreads" value="8"/>

As you can see the Migration Wizard gives great flexibility to assist in moving the schema and data to Azure whether that is IaaS or PaaS. When I have used the tool with my customers it can help solve a major barrier around complexities, time and resource in creating scripts and even a test process for ensuring compatibility to the different platform.