SQL Server Mysteries: The Case of the Not 100% RESTORE…

I recently visited a customer onsite and presented to them topics on SQL Server 2016. After the talk, I opened up the floor for the audience to ask me questions. One question I got went like this “I’ve tried to restore a database on SQL Server using the WITH STATS option. When I run the RESTORE the progress shows 100% but the restore is not complete and takes longer to actually finished. Do you know why?”. I thought about this for a second and my answer was “It is probably taking a long time to run recovery after the RESTORE but I’m not sure”. I didn’t commit to investigating this but it bugged me on my plane ride back to the great state of Texas (sorry if you haven’t figured out by now I love living in Texas).

When I got back to my office, I remembered my colleague from the Tiger Team, Ajay Jagannathan, had blogged about new extended events we introduced in SQL Server 2016 for backup and restore. You can read more about these on his blog post at https://blogs.msdn.microsoft.com/sql_server_team/new-extended-event-to-track-backup-and-restore-progress/. OK, this is very cool. We now have a new way of instrumenting the backup/restore process for any database or transaction log.

I have a problem now to solve my mystery. I don’t know the cause of the problem so I don’t know how to create a scenario to see if these new events will provide the answer.

The path to find the answer is more really to find out possible answers and draw conclusions. My thought was to setup the extended events trace as Ajay suggests in his article, run a RESTORE DATABASE … WITH STATS and then in the extended event trace analyze possible instrumentation points that occur after 100% is reported (which itself is an event) and then determine could they “take a long time”?.

First, I copied the XEvent session script that Ajay documented in his blog, executed it, and started that session.

Next, I decided to use the WideWorldImporters sample database available on github to test out the RESTORE. Here is the script I used to restore that backup I downloaded from github

restore database wideworldimporters from disk = 'c:\temp\wideworldimporters-full.bak'

with move 'wwi_primary' to 'd:\data\wideworldimporters.mdf',

move 'wwi_userdata' to 'd:\data\wideworldimporters_userdata.ndf',

move 'wwi_log' to 'd:\data\wideworldimporters.ldf',

move 'wwi_inmemory_data_1' to 'd:\data\wideworldimporters_inmemory_data_1',

stats

The resulting stats come through as Messages and looked like this in SSMS

10 percent processed.

20 percent processed.

30 percent processed.

Processed 1464 pages for database 'wideworldimporters', file 'WWI_Primary' on file 1.

Processed 53096 pages for database 'wideworldimporters', file 'WWI_UserData' on file 1.

Processed 33 pages for database 'wideworldimporters', file 'WWI_Log' on file 1.

Processed 3862 pages for database 'wideworldimporters', file 'WWI_InMemory_Data_1' on file 1.

100 percent processed.

RESTORE DATABASE successfully processed 58455 pages in 8.250 seconds (55.354 MB/sec).

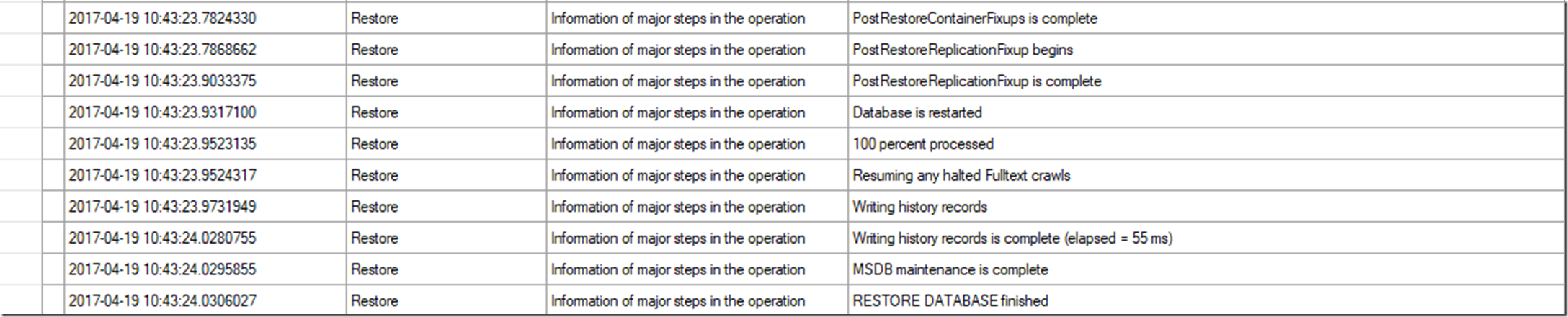

I then went to look at the result of my XEvent session file. The results grid looked something like this around the 100% progress reported message. And in my example, the RESTORE finished very quickly around 6 seconds and the 100% message was provided back to my SSMS query window just around the time the restore completed

If you look at this output, perhaps the only thing that has the potential to take time after the 100 percent processed message is writing out the backup history to msdb. Hmmmm. While that is possible, how probable is it? I’m not convinced that the writing of the history table is the only “work” to be done past posting this “100% percent processed” message.

Based on my knowledge of SQL Server and looking at these events, I then thought “What are the major phases of RESTORE and which ones could take a long time if say the database was fairly large?” I then created these scenarios and looked at the XEvent trace:

1) Create the database files - Making the assumption that most people use Instant File Initialization I decided to not go down this path

2) Copying in all the database pages from the backup – Very plausible but I tested fairly large backups of data (2Gb+) and 100% message only was displayed very close to when the backup was done. I’ll show you that in a minute.

3) Create the transaction log files – Probably not an issue but I’m running out of options so I will come back to that.

4) Copying in the transaction log blocks from the backup – This also a candidate if there were a high number of transactions during a long, large backup. Not sure how likely though this would be a huge amount of time but the messages at the end of the restore tell you how many “pages” we had to copy for the log.

5) Running Recovery – This is similar to #4 but if there were many concurrent transactions during the backup and the backup took a long time, there could be a certain amount of recovery to do, so this is a candidate.

If you look at my list of possible reasons, they all seem to be related to the transaction log. Given the needs of today’s database user, I think #3 has possibilities with larger transaction log sizes. Why? Because we can’t use Instant File Initialization for the transaction log and part of RESTORE is creating the actual transaction log file (see more on why no IFI on tlog from Paul Randal’s blog post). Another possibility here is a large number of Virtual Log Files but I thought we made advances here in our latest releases. I’ll come back to that one too later.

So any good case can be solved with some experimentation. I decided to create a scenario to test out theory #3 above: A “large” transaction log creation because I know since we can’t use IFI that can simply take some time.

I created a database with a 10Gb data file, a 5Gb transaction log, and populated the data with around 13Mb of data. I then backed it up, turned on my XEvent session, and ran RESTORE.

The output of the RESTORE hit 100% very fast and then just sat there “Executing Query” in the SSMS window for another ~2 minutes and then came back with the final message that the RESTORE had finished. So the SSMS Messages Window it looked something like this

15 percent processed.

23 percent processed.

31 percent processed.

47 percent processed.

55 percent processed.

63 percent processed.

71 percent processed.

87 percent processed.

95 percent processed.

100 percent processed.

< Delay for about 2 minutes >

Processed 1608 pages for database 'imabigdb', file 'imabigdb' on file 1.

Processed 2 pages for database 'imabigdb', file 'imabigdb_log' on file 1.

RESTORE DATABASE successfully processed 1610 pages in 143.812 seconds (0.087 MB/sec).

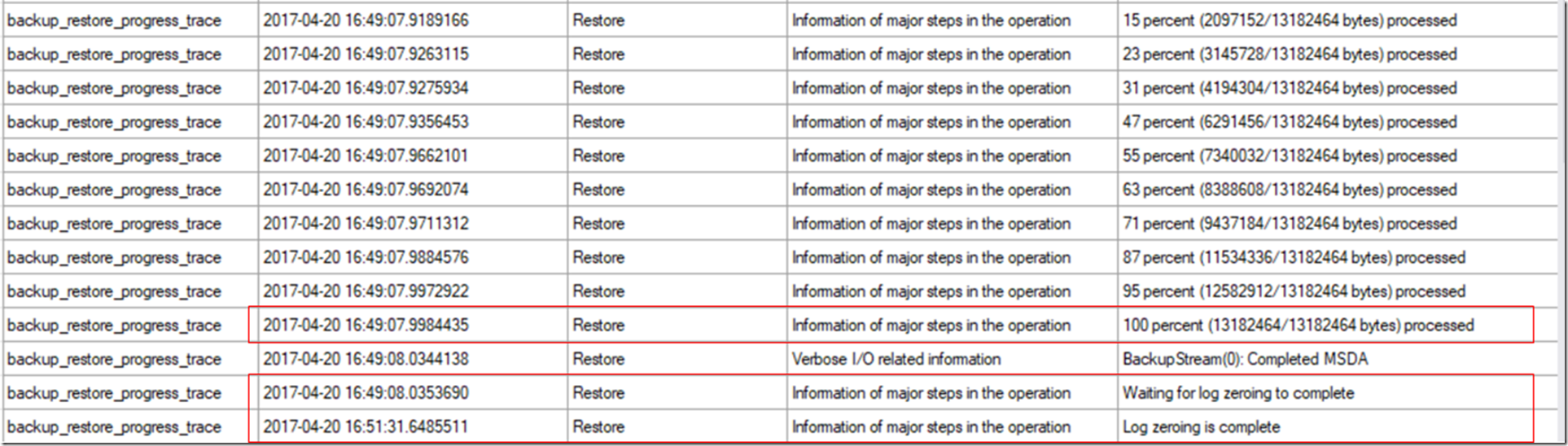

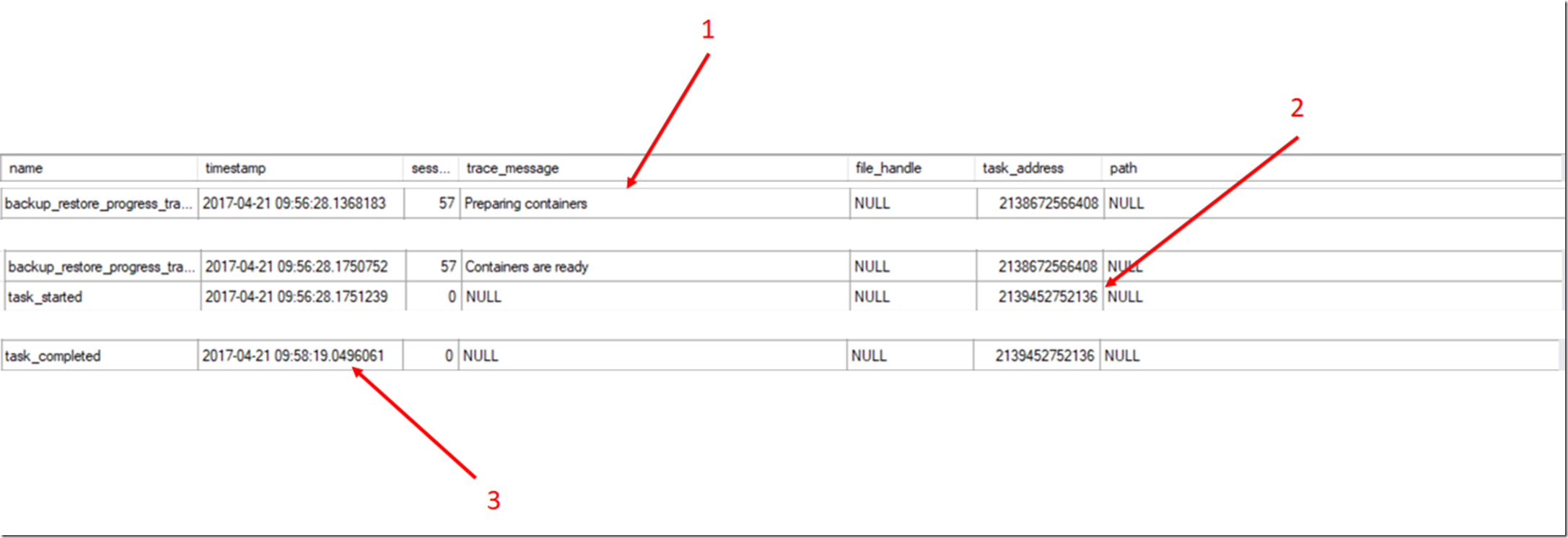

The XEvent results looked like this. I’ve highlighted a few areas

Notice the “100 percent…” message has detailed about “bytes processed”. Since my data is around 13Mb this tells me that the progress indicators are all about the data transferred step of RESTORE. Notice the time gap in the messages to “Waiting for Log zeroing…” and “Log Zeroing is complete”. That gap in time is around 2 minutes. Exactly the time it took between the the 100% Complete message in the SSMS Window and the final restore message.

From this evidence I can conclude that having a transaction log file size that takes a long time to initialize can be a possible cause of seeing the behavior of getting the 100% Complete Message quickly but the overall RESTORE takes longer to complete.

One thing that puzzled me though when I looked back at a previous RESTORE I tried where the database “had more data”. In this case the 100% message in SSMS was very close to the time I received the final RESTORE message. There was almost no delay (or at least it was not 2 minutes). But when I went back and looked at my database definition I was using a 5Gb transaction log. Huh? Why didn’t I see a big delay of 2 minutes as in the previous example. My suspicion was that the copying of data into the database from the backup is done concurrently with creation of the transaction log file. So if the time it takes to copy in your data is say around 2 minutes and the tlog takes 2.5 minutes to create and zero out that file, the delay between seeing the 100% and the final restore completion would be around 30 seconds. It makes sense if you think about it. Why should the engine wait to create the transaction log file until after copying the data. Why not create it at the same time because once the data copying is completed, we can copy in the log blocks and run recovery.

I have this new phrase I’ve been using lately. “There’s an XEvent for that”. And indeed there is to prove our theory…almost<g>. I decided to add in XEvents from the “debug” channel because I need some pretty low-level stuff to figure this out. Here is my new session definition:

CREATE EVENT SESSION [Backup_Restore_Trace] ON SERVER

ADD EVENT sqlos.async_io_completed(

ACTION(package0.event_sequence,sqlos.task_address,sqlserver.session_id)),

ADD EVENT sqlos.async_io_requested(

ACTION(package0.event_sequence,sqlos.task_address,sqlserver.session_id)),

ADD EVENT sqlos.task_completed(

ACTION(package0.event_sequence,sqlserver.session_id)),

ADD EVENT sqlos.task_started(

ACTION(package0.event_sequence,sqlserver.session_id)),

ADD EVENT sqlserver.backup_restore_progress_trace(

ACTION(package0.event_sequence,sqlos.task_address,sqlserver.session_id)),

ADD EVENT sqlserver.file_write_completed(SET collect_path=(1)

ACTION(package0.event_sequence,sqlos.task_address,sqlserver.session_id))

ADD TARGET package0.event_file(SET filename=N'Backup_Restore_Trace')

WITH (MAX_MEMORY=4096 KB,EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS,MAX_DISPATCH_LATENCY=5 SECONDS,MAX_EVENT_SIZE=0 KB,MEMORY_PARTITION_MODE=NONE,TRACK_CAUSALITY=OFF,STARTUP_STATE=OFF)

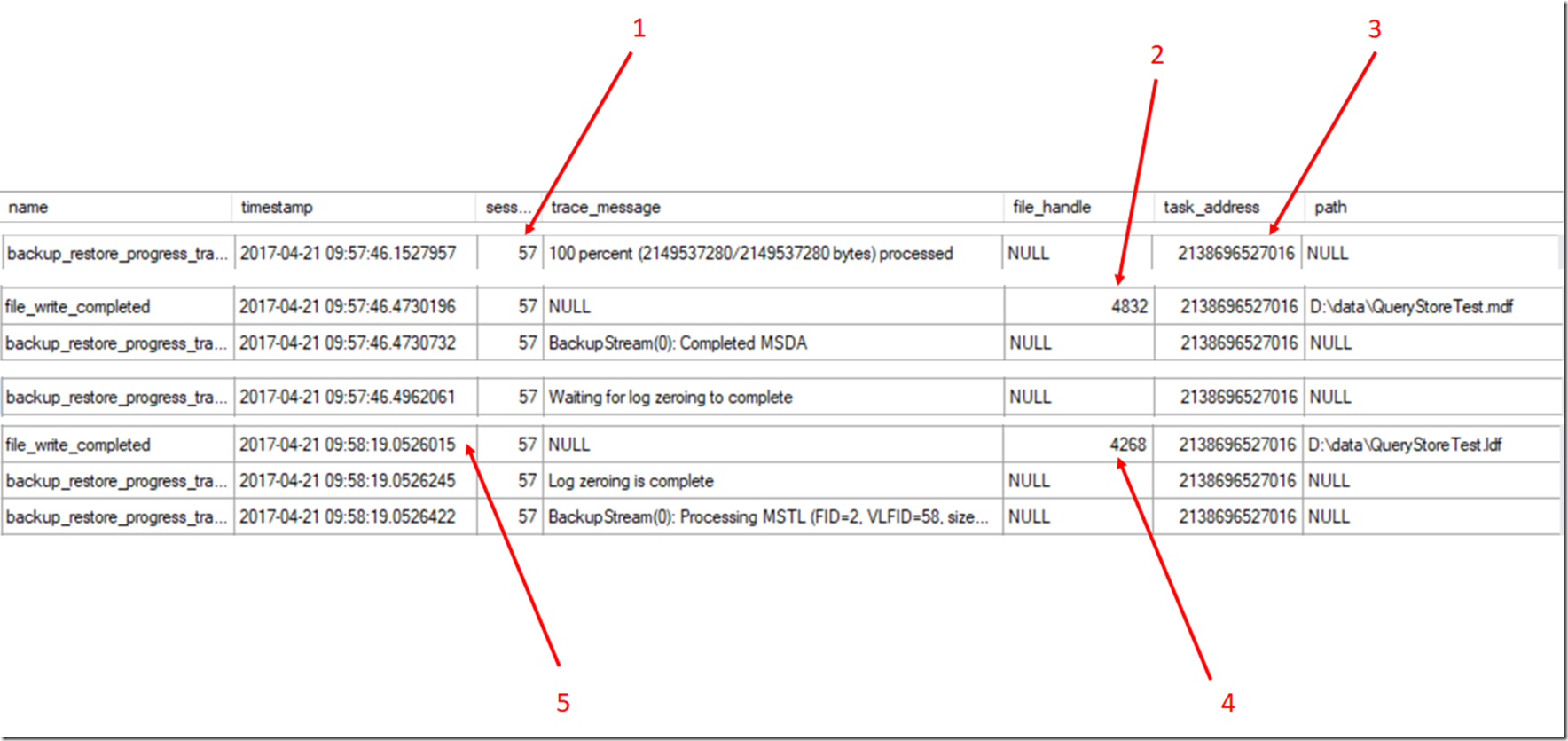

I’ve added in events for async_io, tasks, file writes, and added in actions to see session_id, event_sequence, file_handle, and task_address. I’ll show you why these are all important. Using my database where the difference was only about 30 seconds between the 100% processed and the final restore completion, I see results like this (I’m going to show only pieces of the result because it gets big).

This looks complicated so let me explain the results

1. The session_id is 57 for all of these evens so this is the RESTORE session

2. We know file_handle 4832 is for the database file

3. The task_address is the same for all of these events so we know this is one task that has finished copying the data and is waiting for the log to be zeroed out

4. We know file_handle 4268 is for the transaction log file

5. We can see the gap here in time between finishing copying the pages and the log zeroing complete which is 30 seconds. But remember this is a 5Gb tlog which I had already proved takes ~2 minutes to zero out

And it looks like my theory of this being done concurrently doesn’t fly because these events are all from the same task.

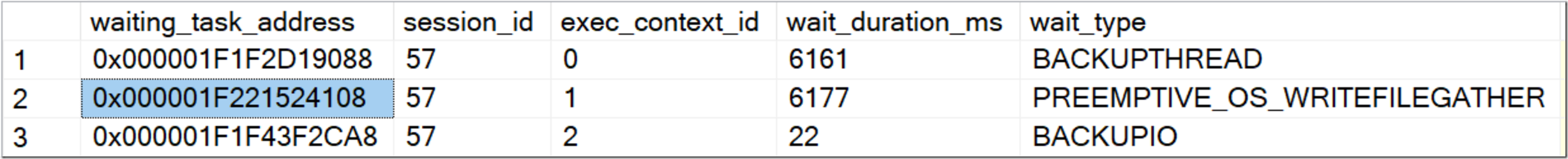

I went back and ran the RESTORE again, this time looking at sys.dm_os_waiting_tasks. I got these results

You can clearly see that three tasks are working on this same session with different wait types. But how does this help me line up the XEvents to prove my theory?

The waiting_task_address for the wait_type BACKUPIO is 2138696527016 in decimal so that matches exactly with the events I showed above. BACKUPIO is the wait type for a thread that is reading from the backup file and writing out data to the database file (and apparently also writes out something to the tlog file). Another waiting_task_address is waiting on PREEMPTIVE_OS_WRITEFILEGATHER. I’ve seen this exact same wait type for auto grow scenarios for the transaction log. So I believe this separate task is responsible for creating and zeroing out the transaction log file. Taking the waiting_task_address for this task, I searched my XEvent results and found this

Let’s look at this deeper.

1. The “Preparing Containers” task translates to the same task_address as the one waiting on BACKUPTHREAD. So you can see this is the main “coordinator” of the backup.

2. Soon after this a new task is started with a task address that matches the task waiting on PREEMPTIVE_OS_WRITEFILEGATHER. Ah. So this is the concurrent operation to zero out the transaction log. The coordinator task creates a “subprocess” to zero out the tlog. (I confirmed this further because in the task_started event is a field called entry_point. That is a number in decimal which is the actual function pointer to the code to run the task. In this specific case the name of that function pointer using symbols is sqlmin!SubprocEntrypoint()).

3. And then you can see that same task complete some ~2 minutes later, which is just about the time it takes to zero out the tlog. One thing that puzzled me is that I never saw any async_io or file_write_completed events for this task to zero the log. That is because we don’t use the “normal” routines in SQL Server that track async_io and file writes. We simply call the Windows routine WriteFileGather() in a loop and don’t use the “normal” routines for I/O in SQL Server. To ensure we don’t cause any scheduling problems, we do this in “preemptive mode”, hence the wait type PREEMPTIVE_OS_WRITEFILEGATHER. So I know that the parent coordinator task for the RESTORE created a subprocess to zero the log at the same time another subprocess task was copying in the database pages to the database files.

Wow. That was quite a bit just to show you how a RESTORE can take longer to complete “than you expect” and why 100% doesn’t necessarily mean 100% complete with the entire RESTORE process. A few closing thoughts:

1. The % process messages are only for the copying of database pages from the backup to database files. You can see their byte progress more in the XEvent trace. I suspect we do this because the copy process is a more predictable process we determine a “% complete” progress

2. The BACKUPIO subprocess task writes to the tlog as seen in the XEvent trace after the log is zeroed because that is where we copy in the log pages from the log portion of the backup. That does use “normal” I/O routines so will show up in async_io and file write events.

3. It is very possible that other scenarios I mentioned earlier regarding the transaction log (copying in the log blocks, going through VLFs, and running recovery) can make the completion take even long past the 100% mark because we wouldn’t try these operations until all the database pages are copied to the database files. Now you know though how to use XEvent to determine which part of applying the log could be taking extra time.

That is the end of this mystery. I hope you were able to understand how the combination of XEvents, DMVs, and paying attention to details of how the RESTORE executed helped turn a simple but mysterious question into case solved.

Bob Ward

Principal Architect

Microsoft Data Group

SEALS Tiger Team

Comments

- Anonymous

April 23, 2017

Very interesting, thank you! - Anonymous

April 23, 2017

This is awesome explanation Bob. Thank you so much - Anonymous

April 24, 2017

Thank you for not only showing in an understandable way how this works, but for giving a troubleshooting model that could be adapted for other situations (correlating XEs to the DMVs). - Anonymous

April 24, 2017

That is funny and good timing for us on this. We have a daily reporting database that gets restored from the previous day's Production, (we also do a checkb against it). We have a job set up that emails us the % complete of the restore at 7am so we know how long is left in the restore process. It always takes longer and when we run the query we can always see it sitting at 100% so we know it will be some time after. We configured our job to add on roughly 30 minutes to the estimate and so far it's been about right. Thanks! - Anonymous

April 26, 2017

Hello!Thank you for your article. We translated part of your article to Russian and put the link to original source (http://sqlcom.ru/helpful-and-interesting/sql-server-mysteries-restore/). - Anonymous

April 26, 2017

Great Article Bob! Thanks again for all you do.