Columnstore Index: How do I find tables that can benefit from Clustered Columnstore Index

Most of us understand that clustered columnstore index can typically provide 10x data compression and can speed up query performance up to 100x. While this sounds all so good, the question is how do I know which tables in my database could potentially benefit from CCI? For a traditional DW scenario with star schema, the FACT table is an obvious choice to consider. However, many workloads including DW have grown organically and it is not trivial to identify tables that could benefit from CCI. So the question is how can I quickly identify a subset of tables suitable for CCI in my workload?

Interestingly, the answer lies in leveraging the DMVs that collect data access patterns in each of the tables. The following DMV query provides a first order approximation to identify list of tables suitable for CCI. It queries the HEAP or the rowstore Clustered index using DMV sys.dm_db_index_operational_stats to identify the access pattern on the base rowstore table to identify tables that meet the criteria listed in the comments below:

-------------------------------------------------------

-- The queries below need to be executed per database.

-- Also, please make sure that your workload has run for

-- couple of days or its full cycle including ETL etc

-- to capture the relevant operational stats

-------------------------------------------------------

-- picking the tables that qualify CCI

-- Key logic is

-- (a) Table does not have CCI

-- (b) At least one partition has > 1 million rows and does not have unsupported types for CCI

-- (c) Range queries account for > 50% of all operations

-- (d) DML Update/Delete operations < 10% of all operations

select table_id, table_name

from (select quotename(object_schema_name(dmv_ops_stats.object_id)) + N'.' + quotename(object_name (dmv_ops_stats.object_id)) as table_name,

dmv_ops_stats.object_id as table_id,

SUM (leaf_delete_count + leaf_ghost_count + leaf_update_count) as total_DelUpd_count,

SUM (leaf_delete_count + leaf_update_count + leaf_insert_count + leaf_ghost_count) as total_DML_count,

SUM (range_scan_count + singleton_lookup_count) as total_query_count,

SUM (range_scan_count) as range_scan_count

from sys.dm_db_index_operational_stats (db_id(),

null,

null, null) as dmv_ops_stats

where (index_id = 0 or index_id = 1)

AND dmv_ops_stats.object_id in (select distinct object_id

from sys.partitions p

where data_compression <= 2 and (index_id = 0 or index_id = 1)

AND rows >= 1048576

AND object_id in (select distinct object_id

from sys.partitions p, sysobjects o

where o.type = 'u' and p.object_id = o.id))

AND dmv_ops_stats.object_id not in ( select distinct object_id

from sys.columns

where user_type_id IN (34, 35, 241)

OR ((user_type_id = 165 OR user_type_id = 167) and max_length = -1))

group by dmv_ops_stats.object_id

) summary_table

where ((total_DelUpd_count * 100.0/(total_DML_count + 1) < 10.0))

AND ((range_scan_count * 100.0/(total_query_count + 1) > 50.0))

Example: Restore database AdventureWorksDW2016CTP3 and following the following steps

- Step-1: Run the query. You will see no tables identified because there is no data access pattern available in the DMV sys.dm_db_index_operational_stats

- Step-2: Run few analytics queries such as

Select c.CalendarYear,b.SalesTerritoryRegion, FirstName + ' ' + LastName as FullName,

count(SalesOrderNumber) as NumSales,sum(SalesAmount) as TotalSalesAmt , Avg(SalesAmount) as AvgSalesAmt

,count(distinct SalesOrderNumber) as NumOrders, count(distinct ResellerKey) as NumResellers

From FactResellerSalesXL a

inner join DimSalesTerritory b on b.SalesTerritoryKey = a.SalesTerritoryKey

inner join DimEmployee d on d.Employeekey = a.EmployeeKey

inner join DimDate c on c.DateKey = a.OrderDateKey

Where c.FullDateAlternateKey between '1/1/2006' and '1/1/2010'

Group by b.SalesTerritoryRegion,d.EmployeeKey, d.FirstName,d.LastName,c.CalendarYear

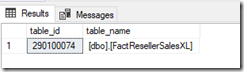

- Step-3: Run the query again and you will see the following output identifying the FACT table that can potentially benefit from CCI

Now, to find about data compression savings, unfortunately it is not yet supported in the popular stored procedure sp_estimate_data_compression_savings but you can use the following work around for approximate calculation.

- Select top 2 million rows from the source table and load into a temp table.

- Measure the size using sp_spaceused. Let us say it is X

- Now enable CCI on temp table using https://docs.microsoft.com/en-us/sql/t-sql/statements/create-columnstore-index-transact-sql

- Measure the size using sp_spaceused. Let us say it is Y

- Divide Y/X to get compression ratio. The actual compression will vary depending upon data skew.

Hope this helps you discover tables that could qualify for columnstore index. As always, please feel free to reach out to me for any questions on columnstore index

Thanks,

Sunil Agarwal

SQL Server Tiger Team

Follow us on Twitter: @mssqltiger | Team Blog: Aka.ms/sqlserverteam

Comments

- Anonymous

June 27, 2017

The comment has been removed - Anonymous

August 24, 2017

The comment has been removed- Anonymous

August 24, 2017

Your concerns are right on. CCI is not a suitable index for heavy updates. But here are some considerationsFew questions (1) what is the % of updates? how soon do you update the row after inserting? It is possible that compression delay could help(2) Is the table partitioned and your updates are limited to certain partitions?(3) How do you find rows to be updated? I suggest creating NCI to enable fast search.(4) when you update a row, do you have the value of all the columns in the rows? one technique that I have successfully deployed is to delete all the rows in the first pass and then insert the rows in second pass. Follow this by REORGLook forward to hearing from youthanksSunil

- Anonymous

- Anonymous

August 24, 2017

Great idea!! I have some version of it. I am still doing some testing to feel comfortable. Please expect a blog from me in few weeksregardsSunil