SQL Server 2016 Distributed Availability Groups for SAP

As mentioned in an earlier blog, we wanted to introduce Distributed Availability Groups in a separate article. This is one of the articles. Just to refresh what Distributed Availability Groups are, here a pointer to the documentation:

https://msdn.microsoft.com/en-US/library/mt651673.aspx

However, please realize that some important commands/steps did not make it into the documentation as of 06/04/2016 yet. These steps are referenced in this blog.

Purely from the technical side the idea is to couple independent AlwaysOn Availability Groups together by:

- Create independent Availability Group(s) in independent Windows Cluster Configurations.

- Have every one of the independent Availability Groups with their own AG Listeners.

- Create one Distributed AG over the other AGs making sure that the primary is synchronizing with the ‘other’ AG(s).

Since every one of the independent AGs runs on their own Windows Cluster configuration, quorum decisions are made independent from each other in the particular AGs. The Windows versions these different AGs are hosted on can be different. Means these concepts could be used as well for upgrading to a new Windows release the AG is running on.

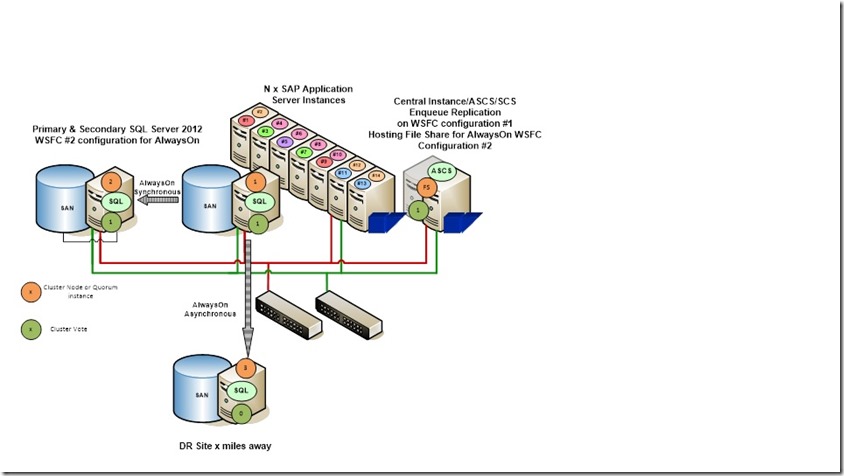

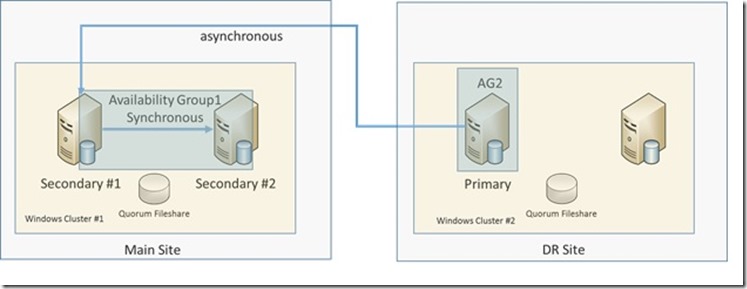

However, when you look into the graphics at the head of the article about Distributed Availability Groups, the scenario is not really what you as a customer might have asked for. All you really wanted is a single instance/replica in the DR site and not two of them. A scenario of configuration a lot of SAP customers implemented which basically looks like this:

See also this article: https://blogs.msdn.microsoft.com/saponsqlserver/2012/02/20/sql-server-2012-alwayson-part-3-sap-configuration-with-two-secondary-replicas/

No problem. We can use Distributed AGs for such a classical three replica scenario as well.

Scenario we want to cover

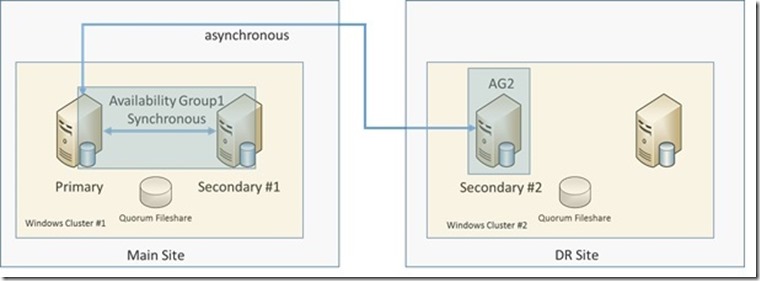

So the scenario we want to cover would need to cover one AlwaysOn AG in the main site which under normal operation conditions will run one primary and one secondary replica. Both with synchronous replication of the data. In the DR site we only want to run one single secondary replica that is getting asynchronously synchronized. The overview of the desired configuration would look like:

On the left hand side, we have the AG in the main site which usually runs the primary and the synchronous secondary replica. We will refer to that AG throughout the article as the ‘first AG’. Whereas in the DR site you have an independent AG (we will call ‘second AG’) with a single replica that is supplied out of our AG in the main site.

Motivation to use a Distributed Availability Group instead of one single Availability Group could be that Main site and DR site are far distant which causes trouble to keep one single Windows Cluster configuration function reliably for the requirement of a single AG.

So let’s go through the necessary steps to be done in order to create this scenario with Distributed Availability Groups.

Step #1: Create the AG in the Main site.

There is nothing special in this step. You can create this AG as before with support of the wizard in SSMS or manually with T-SQL commands (don’t forget to create the hadr_endpoint and the XEvent Session if you do so). We would recommend to enable database health checks with the AG as well. Also if you want to use automatic seeding, don’t forget to grant permission to create a database on the secondary node. If you want to have compression used for the automatic seeding, don’t forget to set traceflag 9567 on the primary instance (https://blogs.msdn.microsoft.com/saponsqlserver/2016/05/02/sql-server-2016-alwayson-for-sap/ ).

Step 2: Create a single node AG in the DR site

Let’s assume you got a Windows Cluster configuration setup already. This could be a setup with two nodes and a fileshare quorum as shown above or any other configuration you got in the DR site already. SQL Server 2016 is installed on one of the nodes and in SQL Server configuration, you enabled the usage of the Windows Cluster for AlwaysOn already. Creating an AG with a single replica is not possible with the wizard in SSMS. Hence we need to create the AG with a single replica by T-SQL commands. Make sure that you created the hadr_endpoint and the XEvent Session on that SQL Server instance (we usually script those from another instance and adapt the names). The command to create the single replica for the instance looks like:

CREATE AVAILABILITY GROUP [<name of second AG>]

WITH (DB_FAILOVER= ON)

FOR

REPLICA ON N'<node name of single node replica>'

WITH (ENDPOINT_URL = N'TCP://<Fully Qualified Name of single replica>:5022',

FAILOVER_MODE = MANUAL, AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT, BACKUP_PRIORITY = 50,

SECONDARY_ROLE(ALLOW_CONNECTIONS = NO), SEEDING_MODE = MANUAL);

We can highlight two new components to the command:

- The second line: with (DB_FAILOVER= ON) is a new addition that enables database health check and failover if the database is not healthy. Though the failover option is kind of irrelevant with a single replica AG, we added it to demonstrate how the syntax would look like

- Last line: SEEDING_MODE = AUTOMATIC is enabling an automatic seeding of the database. See also (https://blogs.msdn.microsoft.com/alwaysonpro/2016/05/02/sql-server-2016-alwayson-availability-group-enhancements-initial-data-synchronization-without-database-and-log-backup/ )

You also realize that there is no database named that should be part of the AG. That part will be done later through the Distributed AG.

After the AG is created, create an AG Listener on top of the second AG. After that, check whether the listener works by connecting through the listener name to the instance.

Step 3: Create Distributed AG on primary of ‘first AG’

Now we go to the last step in creating the Distributed AG. You need to issue commands on primary replica of the ‘first AG’ as well as on the ‘primary’ of the ‘second AG’. First go to the primary replica of the first AG and execute this command:

CREATE AVAILABILITY GROUP [<name of distributed AG>]

WITH (DISTRIBUTED)

AVAILABILITY GROUP ON '<name of first AG>' WITH

( LISTENER_URL = 'tcp://<name of first AG Listener>:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC ),

'<name of second AG>' WITH

( LISTENER_URL = 'tcp:// <name of second AG Listener>::5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC );

Remarks to this command:

- In line #2 you see the addition ‘WITH (DSITRIBUTED)’. This triggers the creation of a Distributed AG

- In the following two sections of the command, the two AGs are getting connected. The availability mode is set to asynchronous since we want to synchronize the DR site in an asynchronous manner. Purely from a functionality point of view synchronous replication does not work yet. However, will be enabled very soon. For our scenario with hundreds of miles distance between ‘first AG’ and ‘second AG’ asynchronous replication is anyway the way to go.

- A bit of confusion could be the information that is provided in the listener_url. It is basically two pieces of information combined. The listener name of the ‘first AG’ and the ‘second AG’ is the first piece of data and the port of the hadr_endpoint as second piece of information (not the port defined when you created the AG listener).

- We defined an automatic seeding between these AGs. As well as manual failover. Manual failover is common for the disaster recovery case.

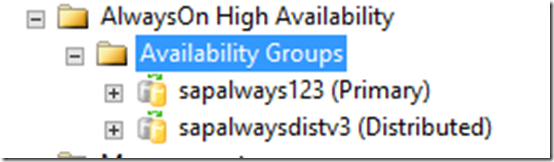

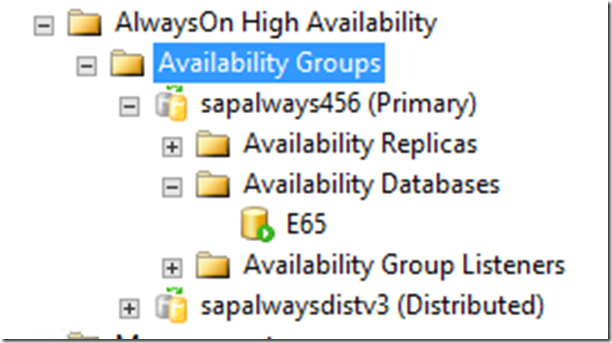

After this command executed successfully, refresh the item ‘Availability Groups’ in SSMS and you will see that there are two AGs listed as shown (in this case we called the first AG sapalways123 and the distributed AG sapalwaysdistv3) here:

However, properties or dashboard of the Distributed AG is not working yet in SSMS.

The information of the Distributed AG is stored together with the information of the ‘first AG’ in the Windows quorum.

Step 4: Join the ‘second AG’ into the Distributed AG

Now it is time to get to the ‘second AG’ in the DR site. On the primary and in our scenario only replica in the ‘second AG’, we need to join the Distributed AG. This is done with this T-SQL Command:

ALTER AVAILABILITY GROUP [<name of the distributed AG>]

join

AVAILABILITY GROUP ON

'<name of the first AG>' WITH

( LISTENER_URL = 'tcp://<name of first AG Listener>:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = MANUAL ),

'<name of the second AG>' WITH

( LISTENER_URL = 'tcp:// <name of second AG Listener>::5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = MANUAL );

Looking at the command, it has a similar structure as the command initially creating the AG. However, we ‘join’ the AG with this command (second line). Again we define the listener_url with the two information pieces of listener name and hadr_endpoint port.

After this command is executed, we expect:

- The Distributed AG showing up in SSMS Object Explorer under ‘Availability Groups’. The database being created due to our automatic seeding and replication being started.

- You can check on the primary of the ‘first AG’ on the status of the seeding by querying the DMV sys.dm_hadr_physical_seeding_stats.

- After a while the ‘secondary’ replica in the ‘second AG’ should be synchronized.

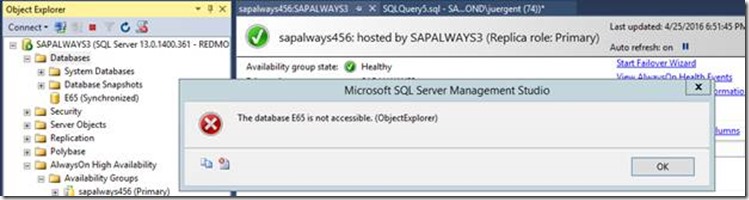

Object Explorer indeed will show the two AGs in the ‘primary’ of the ‘second AG’. However, in opposite to the primary replica of the ‘first AG’, the database of the primary of the ‘second AG’ is not accessible after a successful seeding. Means if you try to use Object Explorer and try to expand the database on this instance, you should get a message like this (we used sapalways3 as name for the primary node in the ‘second AG’ and sapalways456 as name of the ‘second AG’):

So, in the background you see the dashboard for our ‘second AG’ in the DR site, that tells that you are on the primary (and only instance). Yet since it is part of the Distributed AG, the database is not accessible since the only real accessible primary replica is active on the ‘first AG’ which represents our Main datacenter.

Step 5: Manual failover

The idea of our scenario is to have an automatic failover within the ‘first AG’ that is located in our main site. Whereas a failover to the DR site always should be a manual failover. Besides that, this is the only supported failover method we support with Distributed AGs. Hence to initiate that failover you need to go to the primary replica in the ‘SECOND AG’ running in the DR site and perform a failover command. In an emergency case we assume that your whole site that runs the ‘first AG’ and only primary replica might not be accessible anymore. Hence it does not make any sense to run a failover command from the primary replica on your ‘first AG’. In SQL Server 2016 bits as shipped initially, you could execute this failover command on the primary of the ‘first AG’. DON’T DO THAT. It will leave you with two AGs where none of the databases is accessible anymore. In future SQL Server releases we will block the failover command from being executed on the primary replica of the primary AG.

Attention!!!!!: Since we are careful folks we would perform this type of failover before we actually need to perform it in an emergency case. If you perform a failover to the ‘second AG’, the primary replica in the ‘second AG’, which was so far was not accessible and synchronized with the primary of the ‘first AG’ will open. However, the failover command will not move the primary in the ‘first AG’ into a secondary role automatically. Means that database remains accessible. By just issuing the failover command, you basically create a split brain scenario between the primary in the ‘first AG’ and the primary in the ‘second AG’. So not suitable at all for testing.

But there is a solution for testing this scenario. You can ‘demote’ the ‘first AG’ into a secondary role before executing the failover command. For this purpose, the following command needs to be run on the primary of the ‘first AG’ (Main site):

ALTER AVAILABILITY GROUP [<name od distributed AG>] SET (ROLE = SECONDARY)

If this command is executed on the primary of the ‘first AG’, the primary database closes and with it does not respond to your workload anymore.

NOW you go to the primary of the ‘second AG’ (DR site) and execute the failover command which needs to look like:

ALTER AVAILABILITY GROUP [<name of distributed AG>] FORCE_FAILOVER_ALLOW_DATA_LOSS

With this command your primary replica in the ‘second AG’ will open and will turn around the replication direction. Means you are now operating with that primary out of the DR site and replicate the data back into the Main site (think about the DR test case).

In an emergency case where you can’t connect into your main site anymore, you would run the failover command above just from the primary of the ‘second AG’. With that you certainly would create a split brain scenario. Before falling back into the main site you need to seed those databases anew from the primary replica of the ‘second AG’.

After the ‘controlled’ failover to the DR site with switching the ‘first AG’ to a secondary role, the replication flow would look like:

Step 6: Revert the failover test

As we want to revert our failover tests, we execute the commands in exactly the reverse order. This time we ‘demote’ the ‘second AG’ to a secondary role by issuing this commend on our current primary AG:

ALTER AVAILABILITY GROUP [<name od distributed AG>] SET (ROLE = SECONDARY)

And then issue the failover command on the current secondary AG:

ALTER AVAILABILITY GROUP [<name of distributed AG>] FORCE_FAILOVER_ALLOW_DATA_LOSS

With these commands, you basically fall back again to the ‘first AG’ as primary AG.

Sequence without Automatic Seeding

We showed the examples above with automatic seeding. Dependent on hardware, distances, network throughput and database volume automatic seeding might be not usable. Actually in our use case for extreme long distances and database volumes of a few TB, it might not be usable at all. So the question is how the sequence would look then.

The first change would be that the command for creating the DAG would have the SEEDING_MODE set to ‘MANUAL’ instead of ‘AUTOMATIC’. This change will prevent the automatic creation of the database(s) of the ‘first AG’ into the primary of the ‘second AG’.

This change needs to be done in the command that creates the DAG, executed on the primary of the ‘first AG’. This change also needs to be applied to the command executed on the primary of the ‘second AG’ to join the DAG.

At this stage you are left with a DAG, where the ‘first AG’ functions great. However, the ‘second AG’ included has no database in its AG. In order to get a database into the, so far, empty ‘second AG’, you need to restore the database(s) to be protected into the primary of the ‘second AG’ (in our case the only member node of the ‘second AG’). The database may not be recovered in the restore process. You also may need to apply a series of transaction log backups to the database(s), again without recovering the database(s). If you are at a nearly synchronized state (no transaction log backup executed on the primary that has not yet been restored), you execute this command:

ALTER DATABASE <name of database> SET HADR AVAILABILITY GROUP = [<name of second AG>]

This command should attach the non-recovered database to the ‘second AG’ and synchronize with the primary on the ‘first AG’ for a last catch up of the data that has not been yet backed up in transaction log backups. In order to see whether this step was successful, use Object Explorer and check the Availability Databases of the ‘second AG’ (saplaways456 in our example below).

The picture should look like this. You will not find an entry under ‘Availability Databases’ in the DAG (sapalwaysdistv3 in our example).

The order or sequence is important. The DAG needs to exist before you try to join the non-recovered database into the ‘second AG’. Otherwise you will get the error message that a database in that state can’t join the AG.

A logical question is whether we would need an AG listener for the one single node running the ‘second AG’. Answer is no. The DAG in this specific configuration with one node in the ‘second AG’ does work as well if you just refer to the node name in the SQL commands shown. Means in the statement where you create the DAG, you would supplement the name of the second AG’s listener with the node name of the primary node of the ‘second AG’. You do the same in the command to join the DAG on the primary of the ‘second AG’. From a process of DR failover, it does not really matter whether you use an AG listener or not. As you might have sensed already, you will need to change the SAP configuration anyway to adapt to either the AG listener name or the node name in case you move into the DR site (see next section).

Some considerations and some remarks

If we compare the scenario and the configurations we used so far to cover a scenario of local HA and far distanced DR site with the one described above, we note some differences.

So far we used one Windows Cluster configuration and one single AG Listener for the configuration. The Listener could cover multiple subnets. As a result, the AG listener moves with the failover of the nodes from the main site to the DR site. That again means that in the SAP connectivity configuration, we can just set the name of our AG listener and we cover the nodes and the main site and the one node in the DR site. In the SAP NetWeaver stack,

We talk about the following parameters:

- dbs/mss/server in the default.pfl or instance profile

- <SID>/DBHOST in TP_DOMAIN_<SID>.PFL

- MSSQL_SERVER in the environment of <sid>adm

- jdbc:sqlserver:// in the Secure Store

You can find more details in this blog: https://blogs.msdn.microsoft.com/saponsqlserver/2012/03/29/sql-server-2012-alwayson-part-6-building-an-alwayson-availability-group/

If you read through this article where we describe how to cover the scenario of local HA and far distanced DR site with Distributed AGs, it becomes clear that you have an AG listener for the ‘first AG’ and a separate listener for the ‘second AG’. There is no possibility to define an AG Listener for the Distributed AG. As a result, your process in case of a failover of a SAP system changes. Before you never had to change the parameters above when failing over. But now you need. If you initiate the failover process, you need to change all these parameters above to the AG Listener of the ‘second AG’. That needs to be part of the failover process.

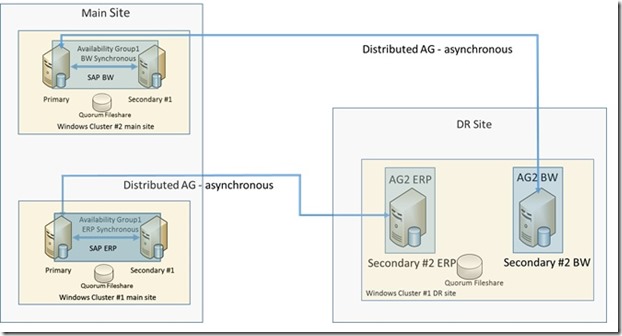

Obviously we need a Windows Cluster configuration in the DR site as well. Such a configuration usually contains multiple nodes of servers/VMs. However, in this case we only use one of those nodes for our single replica of the ‘second AG’. So what are we going to do with these other servers/VMs? Easy answer, we usually have more than one SAP system we need to protect. Means we can use the second, third, nth node of the Windows Cluster configuration in the DR site as target of other AGs that are connected with Distributed AGs to ‘first AGs’ of e.g. the SAP BW, SAP SCM, SAP GTS, etc system. So you run a Windows Cluster configuration in the DR site that runs single node AGs that act as DR target for AGs that run in the Main site. Where the AGs in the main site are connected with the single replica AGs in the DR sites with our new Distributed AGs. Means a scenario like this one:

So we are using our Windows Cluster configuration in the DR and its nodes as hosts for multiple ‘one replica’ AGs.

Another possibility that opens up for customers who have QA systems that are a mirror of the production infrastructure. In those cases, not only the production system is using AlwaysOn, but the QA system as well. In these cases, Distributed AGs allow to host the DR node in the same cluster with the QA system and eliminate the problem of embedding that node in the cluster on the main site. It also would allow you to run the ‘second AG’ on a separate SQL Server instance one of the QA database nodes and with that not even having the need to invest into a separate node.

Well, that is for this part. There are some more exiting configurations we can build with functionality in AlwaysOn of SQL Server 2016. But these will be part of another article.