SQL Checkpoint Scaling Observations

A recap on what a checkpoint does

Essentially a checkpoint operation writes dirty pages to disk. This speeds up any crash recovery. If a checkpoint did not happen then all transactions since the last persisted state of the database would need to be applied to pages in memory. With a checkpoint a much smaller portion of the transaction log is needed to move the pages in memory into the correct state.

Once a page has hardened to disk it is no longer necessary as far as constancy is concerned to keep the transaction log record. Other operations may still need to stop the transaction log from truncating such as full recovery mode prior to a transaction log backup but on a vanilla setup using the simple recovery model a checkpoint will also truncate the transaction log.

It should be noted that truncating a transaction log does not actually truncate it, just marks it as inactive. This can be seen with trace flag 2537

/*Using Simple recovery model this will show just the 3 checkpoint records*/

CHECKPOINT

SELECT * FROM fn_dblog (NULL, NULL);

/*Using trace flag 2537 shows the inactive portions of the log as well*/

DBCC TRACEON (2537);

SELECT * FROM fn_dblog (NULL, NULL);

DBCC TRACEOFF (2537);

In SQL page writes are asynchronous operations so will always happen in the background, usually by a checkpoint but also can be performed by other processes such as the lazy writer.

Scaling Observations

A checkpoint will perform a full scan of the memory and look for dirty pages to write to disk. With servers with not many pages in the buffer pool then this is a trivial operation. However, with servers increasingly being able to handle greater and greater amounts of memory with single large or multi-tenant database the impact of this can start to be seen.

To test the impact of this memory scan several servers were spun up in Azure to measure the time it takes to look though the buffer pool memory for dirty pages.

To view the timings a checkpoint was issued on a database using simple recovery model and then the transaction log viewed to see the time it took to complete the checkpoint. The buffers were then filled to see how much longer it took when the checkpoint needed to scan though the memory.

(The simple recovery model was used only so it was easier to view the results from the transaction log. it should make no real impact on the scan of the buffer pool)

The databases had no activity apart from default background processes at the point the checkpoint was issued. This was to rule out any IO or latching that may have interfered with the results.

The following code will perform a checkpoint then check the log for the timings

CHECKPOINT

SELECT [Checkpoint Begin], [Checkpoint End], * FROM fn_dblog (NULL, NULL);

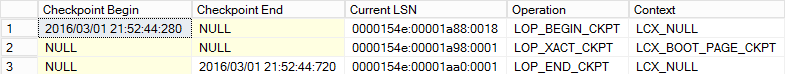

Using Azure on a D 14 with nothing in memory a checkpoint took ~40ms

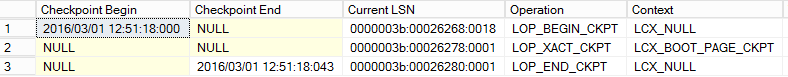

A D14 with ~100GB of cache full took around ~400ms

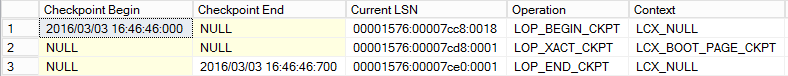

To increase the memory to see if this was a linear performance hit as the amount of memory was increased and the test repeated on a G4

With a full cache of ~200GB it took ~700ms

This suggests that scaling up to say a 2TB server a checkpoint could take anything up to nearly 8 seconds, before any other impact from checkpointing on a live box is taken into account.

However there is a way to stop SQL from performing this memory scan. In SQL 2012 indirect checkpoints were introduced. While a checkpoint generally runs every 60 seconds and scans all pages in memory an indirect checkpoint will keep track of the pages it needs to flush and flush them when it hits the time to do so. So for example if an indirect checkpoint was set to 60 seconds individual pages would be flushed around 60 seconds after they were made dirty. This will affect the nature of IO as it will move from a big hit every 60 seconds to small hits as pages change. Checkpoints will no longer be used and so the scan of all the pages in the buffer will be stopped.

On servers with a large amount of memory it makes sense to turn indirect checkpoints on, however, with any change to how SQL Server talks to its resources the impact must be tested to make sure in saving memory scans the IO is not adversely affected. If the IO subsystem is not optimized to handle the new nature of page writes then a new bottleneck could be introduced into the system.