Storage migration from Google Cloud to Azure using Azure Functions

My customer wants to move from Google Cloud to Azure. One of their challenges was the storage migration.

The problem is that they have so many files that gsutil, Google Cloud's command-line tool for Cloud Storage, didn't return a response when listing them.

To solve this problem, I wrote a queue-triggered Azure Function and a client that sends it work.

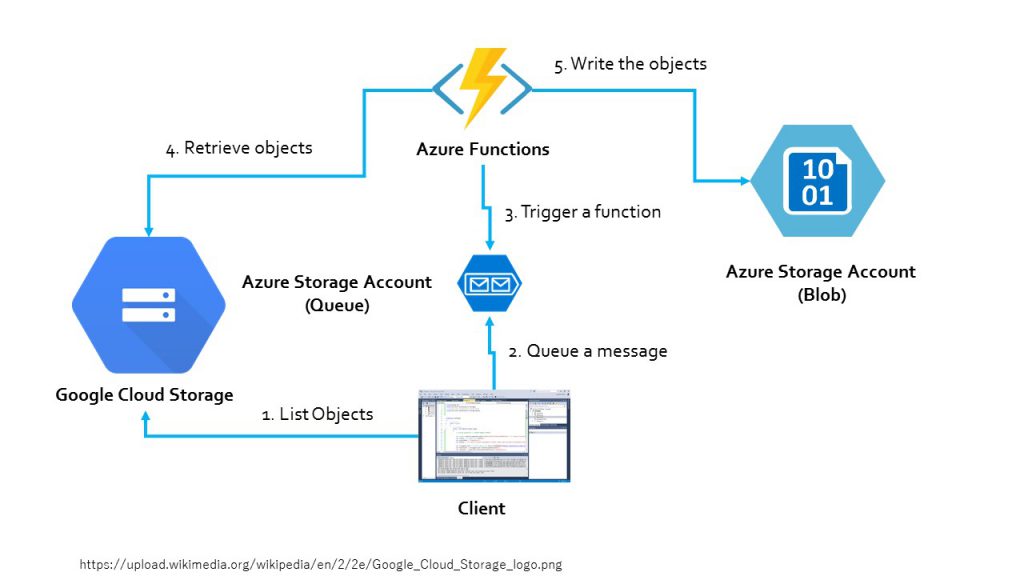

1. Architecture

You can access Google Cloud Storage objects via the Google Cloud client libraries for .NET.

https://cloud.google.com/dotnet/docs/

Cloud Storage Client Libraries

- The client gets the list of objects in the bucket.

- The client splits the list and sends one message per chunk (an object-name prefix) to an Azure Storage Account queue.

- Azure Functions detects the new message and starts a function.

- The function retrieves the objects that match the prefix and stores them in Azure Blob storage.

Because Azure Functions processes queue messages concurrently, the copy runs in parallel.

2. Getting a credential from the Google Cloud Platform console

Go to the Google Cloud Platform console, then open API Manager > Credentials and create a service account key.

When you finish, you will get a JSON file. This is the credential file your application uses to access Cloud Storage.

3. Azure Functions

NOTE: This code is a spike solution. For production, I highly recommend using async/await for concurrent programming. An async/await version of the code is linked at the end of section 3.4.

3.1. Upload a GCP credential

The problem is where to store the GCP credential file. The best answer might be Azure Key Vault; however, Azure Functions doesn't support it currently. We could use the Key Vault SDK, but then we would need to store a Key Vault credential instead. So I decided to upload the GCP credential to the function itself. You can upload the file from the Azure Functions page in your browser. I uploaded the credential as "servicecert.json".
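The function in section 3.4 opens this file from a hard-coded path, `D:\home\site\wwwroot\QueueTriggerTest\servicecert.json`. If you prefer not to hard-code the drive, a small helper can build the path. This is a sketch under assumptions: it assumes the Windows App Service layout, where the `HOME` environment variable points to `D:\home` and each function has its own folder under `site\wwwroot`; `CredentialPath.GetCredentialPath` is a hypothetical name.

```csharp
using System;
using System.IO;

public static class CredentialPath
{
    // Hypothetical helper: builds <home>/site/wwwroot/<functionName>/<fileName>.
    // When homeDirectory is null, it falls back to the HOME environment variable,
    // which (assumption) points to D:\home on Windows App Service.
    public static string GetCredentialPath(string functionName, string fileName, string homeDirectory = null)
    {
        string home = homeDirectory ?? Environment.GetEnvironmentVariable("HOME");
        if (string.IsNullOrEmpty(home))
            throw new InvalidOperationException("HOME is not set; pass homeDirectory explicitly.");
        return Path.Combine(home, "site", "wwwroot", functionName, fileName);
    }
}
```

In run.csx you could then open `CredentialPath.GetCredentialPath("QueueTriggerTest", "servicecert.json")` instead of the literal path.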

See Feature request: retrieve Azure Functions' secrets from Key Vault

3.2. Create a private Blob container in a Storage Account

3.3. Get a Blob service SAS token

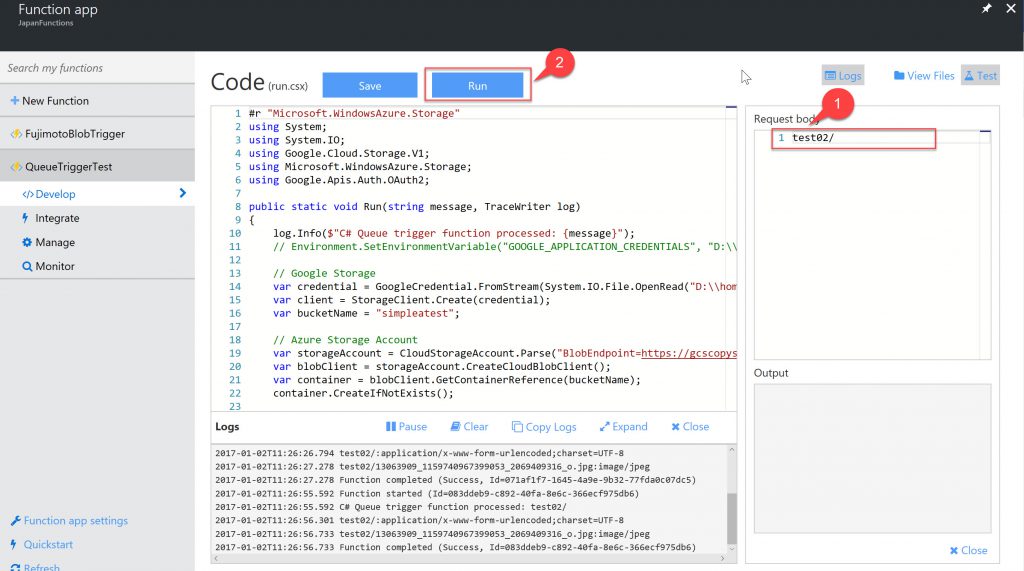
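The function passes the SAS token to `CloudStorageAccount.Parse` as a connection string. A SAS connection string pairs the service endpoint with the token, in the form `BlobEndpoint=...;SharedAccessSignature=...`. As a minimal sketch (the helper name and the sample account name are hypothetical), you can assemble it like this. Copied tokens often start with `?`, which the connection string doesn't want, so the helper strips it.

```csharp
using System;

public static class SasConnectionString
{
    // Hypothetical helper: combines a Blob service endpoint and a SAS token into
    // a connection string such as
    // BlobEndpoint=https://myaccount.blob.core.windows.net/;SharedAccessSignature=sv=...
    public static string Build(string blobEndpoint, string sasToken)
    {
        if (string.IsNullOrWhiteSpace(blobEndpoint))
            throw new ArgumentException("Endpoint is required.", nameof(blobEndpoint));
        if (string.IsNullOrWhiteSpace(sasToken))
            throw new ArgumentException("SAS token is required.", nameof(sasToken));

        // Drop a leading '?' so the token is just the query parameters.
        string token = sasToken.TrimStart('?');
        return $"BlobEndpoint={blobEndpoint};SharedAccessSignature={token}";
    }
}
```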

3.4. Write a function

I use the Queue Trigger template for C#. Since one message may copy many objects (up to a whole bucket), I don't use a blob output binding; the function writes to the container with the Storage SDK instead.

The code is quite simple. However, you can't use the latest Google Cloud library (1.0.0-beta06) with Azure Functions, because Azure Functions currently has an issue managing different versions of the same dependency. See .... The problem might be solved in the near future. For now, I downgraded the library to 1.0.0-beta05 and adapted the code to that version.


project.json

```json
{
  "frameworks": {
    "net46": {
      "dependencies": {
        "Google.Cloud.Storage.V1": "1.0.0-beta05"
      }
    }
  }
}
```

run.csx

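```csharp
#r "Microsoft.WindowsAzure.Storage"

using System;
using System.IO;
using Google.Apis.Auth.OAuth2;
using Google.Cloud.Storage.V1;
using Microsoft.WindowsAzure.Storage;

// Queue-triggered function: the queue message is used as an object-name prefix,
// and every matching GCS object is copied into an Azure Blob container.
public static void Run(string message, TraceWriter log)
{
    log.Info($"C# Queue trigger function processed: {message}");

    // Google Cloud Storage: authenticate with the uploaded service account key.
    GoogleCredential credential;
    using (var credentialStream = File.OpenRead("D:\\home\\site\\wwwroot\\QueueTriggerTest\\servicecert.json"))
    {
        credential = GoogleCredential.FromStream(credentialStream);
    }
    var client = StorageClient.Create(credential);
    var bucketName = "simpleatest";

    // Azure Storage Account: a SAS connection string for the Blob service.
    var storageAccount = CloudStorageAccount.Parse("BlobEndpoint={YOUR BLOB ENDPOINT HERE};SharedAccessSignature={YOUR BLOB SERVICE SAS TOKEN HERE}");
    var blobClient = storageAccount.CreateCloudBlobClient();

    // The container is named after the bucket.
    var container = blobClient.GetContainerReference(bucketName);
    container.CreateIfNotExists();

    // Enumerate every object whose name starts with the prefix in the message.
    foreach (var obj in client.ListObjects(bucketName, message))
    {
        if (IsDirectory(obj.Name))
        {
            // Blob storage only has virtual directories (implied by "/" in blob names),
            // so a folder placeholder needs no blob; this only builds a local reference.
            container.GetDirectoryReference(obj.Name);
        }
        else
        {
            // Stream the GCS object straight into a block blob with the same name.
            var blockBlob = container.GetBlockBlobReference(obj.Name);
            using (var stream = blockBlob.OpenWrite())
            {
                client.DownloadObject(bucketName, obj.Name, stream);
            }
        }

        log.Info($"{obj.Name}:{obj.ContentType}");
    }
}

// Folders created in the GCS console appear as placeholder objects whose names end with "/".
private static bool IsDirectory(string objectName)
{
    return objectName.EndsWith("/", StringComparison.Ordinal);
}
```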
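The function uses the GCS bucket name directly as the Azure container name, which works for `simpleatest`. GCS bucket names may also contain dots and underscores, which Azure container names don't allow: container names must be 3 to 63 characters of lowercase letters, digits, and hyphens, must start and end with a letter or digit, and can't contain consecutive hyphens. The sketch below is one possible mapping, an assumption rather than part of the original code (the class name is hypothetical). Note that two different bucket names could map to the same container name.

```csharp
using System;
using System.Text;

public static class ContainerNames
{
    // Hypothetical mapping from a GCS bucket name to a valid Azure container name:
    // lowercase, only [a-z0-9-], no leading/trailing or consecutive hyphens, 3-63 chars.
    public static string FromBucketName(string bucketName)
    {
        var sb = new StringBuilder();
        foreach (char c in bucketName.ToLowerInvariant())
        {
            bool allowed = (c >= 'a' && c <= 'z') || (c >= '0' && c <= '9');
            char next = allowed ? c : '-'; // dots, underscores and hyphens all become '-'

            // Skip a hyphen at the start or directly after another hyphen.
            if (next == '-' && (sb.Length == 0 || sb[sb.Length - 1] == '-')) continue;
            sb.Append(next);
        }

        string name = sb.ToString();
        if (name.Length > 63) name = name.Substring(0, 63);
        name = name.TrimEnd('-'); // a name must not end with a hyphen

        if (name.Length < 3)
            throw new ArgumentException($"Cannot derive a container name from '{bucketName}'.", nameof(bucketName));
        return name;
    }
}
```

In run.csx this would become `blobClient.GetContainerReference(ContainerNames.FromBucketName(bucketName))`.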

Async/Await source code: https://gist.github.com/TsuyoshiUshio/b258e20b5a4c21d24200cec222757511
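The NOTE in section 3 recommends async/await for production, and the gist above contains the author's async version. Independently of that gist, here is a minimal sketch of one common pattern: a `SemaphoreSlim` that caps how many copies run at once. The `copyOneAsync` delegate is a hypothetical placeholder for the per-object work (for example, downloading a GCS object into a blob stream); this sketch makes no Google or Azure calls.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class BoundedCopy
{
    // Runs copyOneAsync for every object name, with at most maxConcurrency in flight.
    public static async Task CopyAllAsync(
        IEnumerable<string> objectNames,
        Func<string, Task> copyOneAsync,
        int maxConcurrency)
    {
        if (maxConcurrency < 1) throw new ArgumentOutOfRangeException(nameof(maxConcurrency));

        using (var gate = new SemaphoreSlim(maxConcurrency))
        {
            IEnumerable<Task> tasks = objectNames.Select(async name =>
            {
                await gate.WaitAsync().ConfigureAwait(false); // wait for a free slot
                try
                {
                    await copyOneAsync(name).ConfigureAwait(false);
                }
                finally
                {
                    gate.Release(); // free the slot even if the copy fails
                }
            });

            // Task.WhenAll enumerates the lazy Select, which starts every task.
            await Task.WhenAll(tasks).ConfigureAwait(false);
        }
    }
}
```

Inside the function, the delegate could wrap the download-and-upload step from run.csx using the libraries' async counterparts.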

See Azure functions with NuGet packages that have different versions of the same dependency

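Once you set the test parameter (the request body, which is sent as the queue message), you can test the function from the browser.

If you specify an empty string as the request body (delete the default text), the prefix matches every object, so the whole GCS bucket is copied to the Azure container.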

4. Write the client code

The client code is also simple: you just send messages to the queue. Each message contains the prefix used to filter the storage objects.

You can split the objects any way you like.
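Because the function passes each message to `ListObjects` as a prefix, one straightforward split is one message per top-level folder. The following is a minimal sketch of that idea; the helper name is hypothetical, and it works on a list of object names you already have. Objects at the bucket root are sent as their full names. Since a prefix also matches longer names (`a.txt` matches `a.txt.bak`), some objects may be copied more than once, which just overwrites the same blob.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PrefixSplitter
{
    // Hypothetical helper: one prefix per top-level "folder" (e.g. "logs/"),
    // plus the full name of each object at the bucket root.
    public static List<string> TopLevelPrefixes(IEnumerable<string> objectNames)
    {
        return objectNames
            .Select(name =>
            {
                int slash = name.IndexOf('/');
                return slash >= 0 ? name.Substring(0, slash + 1) : name;
            })
            .Distinct(StringComparer.Ordinal)
            .OrderBy(prefix => prefix, StringComparer.Ordinal)
            .ToList();
    }
}
```

Each resulting prefix then becomes one message, e.g. `queue.AddMessage(new CloudQueueMessage(prefix));`.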

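```csharp
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Queue;

var queueStorageAccount = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName={YOUR ACCOUNT NAME HERE};AccountKey={YOUR ACCOUNT KEY HERE}");
var queueClient = queueStorageAccount.CreateCloudQueueClient();

// Must match the queue name that the function's queue trigger listens to.
var queue = queueClient.GetQueueReference("myqueue");
queue.CreateIfNotExists();

// ...

// Each message carries the prefix that the function passes to ListObjects.
var message = new CloudQueueMessage(obj.Name);
queue.AddMessage(message);

// ...

// Only needed when you consume messages yourself: a queue-triggered
// function deletes the message automatically after it succeeds.
queue.DeleteMessage(message);
```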

Get started with Azure Queue storage using .NET

Configure Azure Storage Connection Strings

Enjoy coding.