Microsoft SQL Server meets Redis and Node.js: How to follow long running batches with Windows Phone (or any browser, really) – Day 2

Last blog post was quite code heavy (https://bit.ly/1xnRn4Y). We have implemented the RESP protocol by hand in C# (well, some of it) and wrapped it in a nice SQLCRL stored procedure. We have shown how easy it to publish events from SQL Server to redis. And we captured the events with a console command. Well, the result was ugly: a black console :).

Today I will cover the second half of the pub/sub pattern showing you how easy is to create a fully fledged dynamic, scalable and cool logging analytic platform. And with very few lines of code nonetheless.

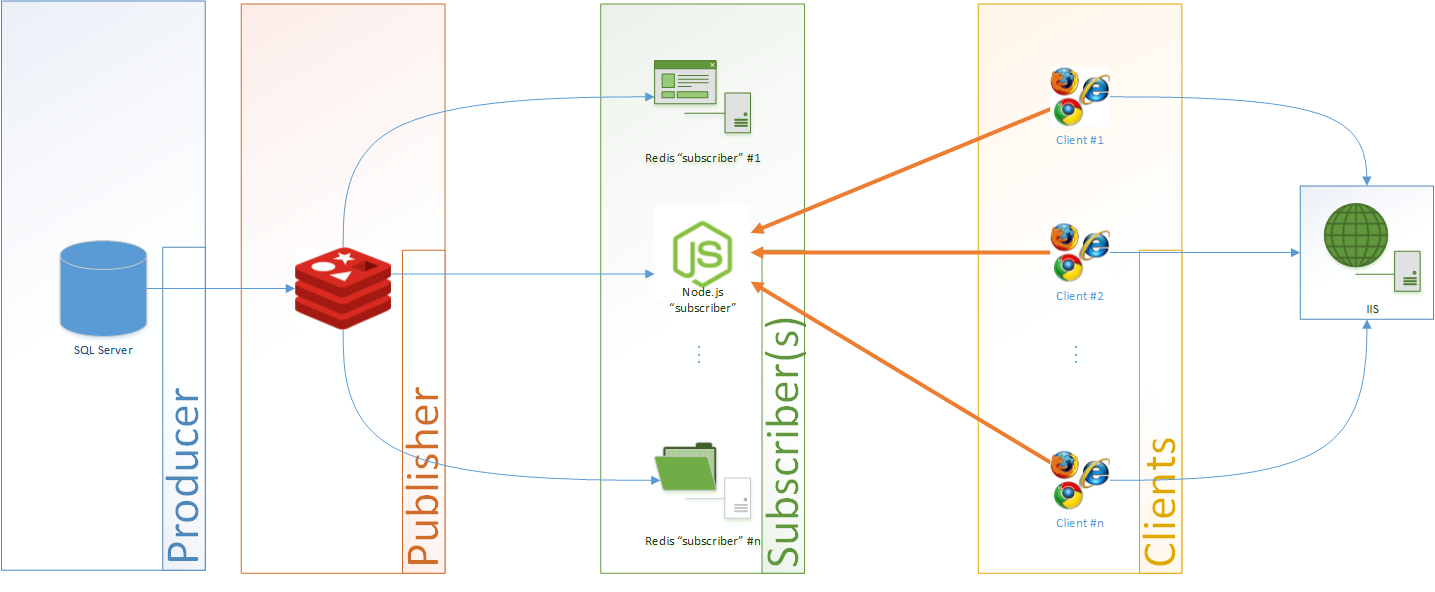

Let’s get back the previous architectural diagram:

The first subscriber type, the command line redis-cli output, was covered the last time. Now we will focus on the “globe” one. We want to show you a dynamically updating page that shows the events created by SQL Server. The main problem here is that the redis pub/sub pattern requires an active connection while the http is connectionless. Also, exposing our redis server to the clients is a bad idea. We need something in between that acts as demultiplexer. The easiest way to achieve this is using node.js: we will write a node.js application that exposes your events through a socket.io endpoint. Socket.io (more here https://socket.io/) allows you – amongst other things - to write client-server-like web applications with minimal effort. The redis node.js module covers the other side of the communication. Our revised architectural diagram is now:

Look at the big orange arrows. Our clients will download the static webpage from our webserver and will in turn look for the events from the node.js socket.io endpoint.

In other terms, we have separated our architecture in layers:

Isn’t that cool? Note that each layer effectively shields the following layers from the previous layer. For example, we could replace SQL Server with IIS or any event generator and the subscribers won’t be affected. The clients even less. This works on the other way around, too. Our SQL Server code will not change is we, say, would like to replace the web browsers with a Windows Phone App. We can also scale horizontally if needed (not for free tough).

Let’s implement the Node.js subscriber. Make sure you have the required Node.js modules installed. You can get them using the npm package manager. Just issue:

npm install socket.io

npm install redis

(note: if you have trouble with npm make sure to create the %AppData%\npm folder and try again). Our log.js code is very simple:

var http = require('http'),

io = require('socket.io'),

redis = require('redis'),

rc = redis.createClient();

server = http.createServer(function (req, res) {

// We don't want to serve standard HTTP pages here.

console.log("Direct http hit... not serviced here.");

res.writeHead(404);

res.end();

});

// Set up our server to listen on 8000 and serve socket.io

server.listen(8000);

var socketio = io.listen(server);

// Subscribe to our topic. We could subscribe to multiple topics here.

rc.on("connect", function () {

rc.subscribe("sql:event");

});

// This is the "core" of our code. Notice that since

// we are using socket.io we can easily push messages to

// clients (one-liner).

rc.on("message", function (channel, message) {

console.log("Sending: \"" + message + "\" to web clients.");

socketio.sockets.emit('message', message);

})

Notice that we subscribed to the “sql:event” topic. This string is our lose lace binding our node.js with our publisher. Also note how easy is to send events to clients. All we have to do is to call the emit method of socket.io.sockets. For more details please see https://socket.io/docs/server-api/#server#emit.

Now the webpage. It’s static – as far as IIS is concerned – because the code will run on the client (javascript + jquery magic).

Here is the code to put in the head section:

<script src="https://ajax.googleapis.com/ajax/libs/jquery/1.6.1/jquery.js"></script>

<script src="https://ubuntu-spare.pelucchi.local:8000/socket.io/socket.io.js"></script>

<script>

var socket = io.connect('ubuntu-spare.pelucchi.local:8000');

socket.on('message', function (data) {

console.log('Received a message from the server:', data);

var eMainTable = document.getElementById("maintable");

var txtNode = document.createTextNode(data);

var row = document.createElement('tr');

var cell = document.createElement('td');

cell.appendChild(txtNode);

row.appendChild(cell);

eMainTable.appendChild(row);

});

</script>

Notice the https://ubuntu-spare.pelucchi.local:8000/socket.io/socket.io.js address. You should configure it to point to your Node.js instance(s). All the magic is in the io class. It will call our code at each message received. All we have to do is to add another row to our table (called “maintable”). The table itself is in the body with this definition:

<tableid="maintable"></table>

Now let’s see it in action: start node.js with our code file, store the page in IIS and connect your browser to the page:

Now try to generate an event with SQL Server to the channel sql:event:

DECLARE @txt NVARCHAR(MAX)

SET @txt = N'This comes from ' + @@VERSION;

EXEC [MindFlavor].[Redis].[Publish] 'ubuntu-spare.pelucchi.local', 6379, 'sql:event', @txt

You should see our page react as expected:

Cool.

Now let’s use a more meaningful example. Let’s use the script of the previous blog post (the one that backups all the databases on an instance):

:setvar RedisServer N'ubuntu-spare.pelucchi.local'

:setvar RedisPort 6379

:setvar RedisPubTopic N'sql:event'

DECLARE pippo CURSOR FOR

SELECT name FROM sys.databases

OPEN pippo;

DECLARE @name NVARCHAR(255);

DECLARE @stmt NVARCHAR(4000);

DECLARE @txt NVARCHAR(MAX);

FETCH NEXT FROM pippo INTO @name;

WHILE @@FETCH_STATUS = 0

BEGIN

SET @txt = N'Starting backup of ' + @name + '. ';

EXEC [MindFlavor].[Redis].[Publish] $(RedisServer), $(RedisPort), $(RedisPubTopic), @txt;

SET @stmt = N'BACKUP DATABASE ' + QUOTENAME(@name) + N' TO DISK=''C:\temp\backup\' + @name + N'.bak'' WITH INIT, COMPRESSION';

SET @txt = N'Statement ' + @stmt + ' completed. ' + @@SERVERNAME + ', ' + USER_NAME() + ' (' + CONVERT(NVARCHAR, @@SPID) + ').';

EXEC [MindFlavor].[Redis].[Publish] $(RedisServer), $(RedisPort), $(RedisPubTopic), @txt;

EXEC sp_ExecuteSQL @stmt;

FETCH NEXT FROM pippo INTO @name;

END

CLOSE pippo;

DEALLOCATE pippo;

SET @txt = N'All backups completed!';

EXEC [MindFlavor].[Redis].[Publish] $(RedisServer), $(RedisPort), $(RedisPubTopic), @txt;

As you can see the events flow in:

Did I mention that you can follow it on your mobile phone too?

Look at these screenshots:

Happy coding,

Francesco Cogno

Comments

Anonymous

June 09, 2015

Great! Thank you! Can I find anywhere the SQLCLR proc code you used in these articles?Anonymous

June 09, 2015

Thank you Oleg! I've published the library to github. You can find it here: github.com/.../MindFlavor.RESP . Keep in mind that this is a just pre-alpha code, though :).