Multitouch Event System for Unity

On the Envisioning team I get to play with some cool toys, such as Surface Hub and other large display devices that can track up to 100 touch points simultaneously. These devices encourage multiple people to interact at the same time.

Unity, unfortunately, doesn't handle this very well. While the Input.touches array will show the touches, Unity's event handling for things like selected object, button clicks, drags, etc., treats the centroid of the points as the action point. If you have two people trying to interact on opposite sides of the screen, they are treated as one person interacting somewhere in the middle.

I need to work with gestures from one to five fingers at a time, in multiple clusters. Most of the multitouch gesture systems available for Unity do not integrate with the Unity event system. As such, it can be quite difficult to determine whether a Unity uGUI control (like a ScrollRect or Button) should handle the input, or some other element which is responding to a gesture recognizer.

So I forked the Unity.UI project to solve these issues. My modifications include:

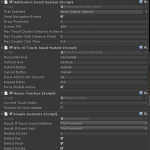

- MultiselectEventSystem: This component extends EventSystem to enable the new functionality.

- Win10TouchInputModule: This component extends StandaloneInputModule to process the touch points into clusters.

- SimpleInputGestures: This component plugs into the gesture recognition pipeline to fire UnityEvents for Pan, Pinch, and Rotate gestures. The gesture pipeline is extensible by adding similar components to the EventSystem GameObject.
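To make "processing touch points into clusters" a bit more concrete, here is a minimal sketch of one way touches could be grouped. It is not the code from Win10TouchInputModule; it assumes a simple distance threshold (single-link grouping), and the names `TouchClusteringSketch`, `Cluster`, `Centroid`, and `maxDistance` are hypothetical. It uses `System.Numerics.Vector2` rather than Unity's `Vector2` so it can run outside the editor.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Illustrative only: groups touch positions by proximity.
// The real input module may use a different strategy entirely.
public static class TouchClusteringSketch
{
    // Two touches land in the same cluster if they are connected by a chain
    // of touches that are each within maxDistance of the next one.
    public static List<List<Vector2>> Cluster(IReadOnlyList<Vector2> touches, float maxDistance)
    {
        var clusters = new List<List<Vector2>>();
        var visited = new bool[touches.Count];

        for (int i = 0; i < touches.Count; i++)
        {
            if (visited[i]) continue;

            // Start a new cluster and flood-fill outward from touch i.
            var cluster = new List<Vector2>();
            var pending = new Queue<int>();
            pending.Enqueue(i);
            visited[i] = true;

            while (pending.Count > 0)
            {
                int current = pending.Dequeue();
                cluster.Add(touches[current]);

                for (int j = 0; j < touches.Count; j++)
                {
                    if (!visited[j] &&
                        Vector2.Distance(touches[current], touches[j]) <= maxDistance)
                    {
                        visited[j] = true;
                        pending.Enqueue(j);
                    }
                }
            }

            clusters.Add(cluster);
        }

        return clusters;
    }

    // Average position of a cluster, usable as the action point for that cluster.
    public static Vector2 Centroid(IReadOnlyList<Vector2> points)
    {
        if (points.Count == 0)
            throw new ArgumentException("A cluster must contain at least one point.", nameof(points));

        var sum = Vector2.Zero;
        foreach (var p in points) sum += p;
        return sum / points.Count;
    }
}
```

With a grouping like this, two people touching opposite sides of the screen produce two clusters, each with its own centroid, instead of one centroid somewhere in the middle.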

NOTE: I now have a version for Unity 5.3 and 5.4 which uses reflection to integrate with UnityEngine.UI instead of having to build a custom version. Please see my Unity 5.5 post for this new version.

To use the Multitouch Event System, add these components to a GameObject in your scene.

Then you can use the IPanHandler, IPinchHandler, and IRotateHandler interfaces to receive these multitouch events. These events carry a MultiTouchPointerEventData object, which extends PointerEventData. It contains information about the cluster of touch points that caused the event to fire, such as the centroid position and delta, and the TouchCluster describing the points.

Here's an example of handling the OnPinch event, which applies the pinch to the local scale of an object called HandRoot:

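```csharp
// displaySize, PinchFactor, and HandRoot are assumed to be members defined elsewhere in this class.
public void OnPinch(SimpleGestures sender, MultiTouchPointerEventData eventData, Vector2 pinchDelta)
{
    // Express the pinch distance relative to the magnitude of displaySize.
    float ratio = pinchDelta.magnitude / displaySize.magnitude;
    // Use the sign of the x component as the pinch direction.
    ratio *= Mathf.Sign(pinchDelta.x);
    // Turn the signed ratio into a multiplicative factor around 1.0.
    ratio = 1.0f + (ratio * PinchFactor);

    var scale = HandRoot.transform.localScale * ratio;
    HandRoot.transform.localScale = scale;
}
```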
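To see what the arithmetic in OnPinch does with actual numbers, here is a small standalone sketch of the same calculation. It is an illustration rather than part of the event system: `PinchScaleSketch` and `ScaleFactor` are hypothetical names, and it uses `System.Numerics.Vector2` so it runs without Unity. The sign is computed with a ternary to mirror Unity's `Mathf.Sign`, which returns 1 for zero.

```csharp
using System;
using System.Numerics;

// Illustrative only: the same math as the OnPinch handler, outside Unity.
public static class PinchScaleSketch
{
    public static float ScaleFactor(Vector2 pinchDelta, Vector2 displaySize, float pinchFactor)
    {
        // Fraction of the display size covered by this pinch step.
        float ratio = pinchDelta.Length() / displaySize.Length();

        // Mirror Mathf.Sign: +1 for zero or positive x, -1 for negative x.
        float sign = pinchDelta.X >= 0f ? 1f : -1f;
        ratio *= sign;

        // Values above 1 grow the object, values below 1 shrink it.
        return 1.0f + ratio * pinchFactor;
    }
}
```

For example, a pinch delta of (3, 4) on a display size of (30, 40) with a pinch factor of 2 covers one tenth of the display magnitude, so the factor comes out at roughly 1.2. The same delta with a negative x component gives roughly 0.8.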

One piece of magic that MultiselectEventSystem performs is tracking multiple selected objects. While each cluster is being processed, currentSelectedGameObject represents the selected object for that cluster. This allows normal Unity uGUI controls to treat each cluster (even if it is a single touch point) as if its touches were the only ones happening. Thus, if you have two or more people interacting on the same screen, the normal controls will react to each of them simultaneously.
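The per-cluster selection idea can be sketched as a small bookkeeping class. This is a guess at the general shape, not the actual implementation: `PerClusterSelection`, `BeginCluster`, `EndCluster`, and the integer cluster IDs are all hypothetical, and the selected object is a generic type so the sketch runs without Unity.

```csharp
using System;
using System.Collections.Generic;

// Illustrative only: keeps one "selected object" per touch cluster and
// exposes the one belonging to whichever cluster is currently being processed.
public sealed class PerClusterSelection<TObject> where TObject : class
{
    private readonly Dictionary<int, TObject> selectedByCluster = new Dictionary<int, TObject>();
    private int? activeClusterId;

    // Call before processing the events of a cluster.
    public void BeginCluster(int clusterId)
    {
        activeClusterId = clusterId;
    }

    // Call after the cluster's events have been processed.
    public void EndCluster()
    {
        activeClusterId = null;
    }

    // Plays the role of currentSelectedGameObject: controls read and write it
    // as if there were only one selection, but it is scoped to the active cluster.
    public TObject CurrentSelected
    {
        get
        {
            if (!activeClusterId.HasValue) return null;
            TObject selected;
            return selectedByCluster.TryGetValue(activeClusterId.Value, out selected) ? selected : null;
        }
        set
        {
            if (!activeClusterId.HasValue)
                throw new InvalidOperationException("No cluster is being processed.");

            if (value == null)
                selectedByCluster.Remove(activeClusterId.Value);
            else
                selectedByCluster[activeClusterId.Value] = value;
        }
    }
}
```

An input module built along these lines would call BeginCluster, run the usual pointer processing for that cluster's touches, then call EndCluster. A control selected by one person's cluster stays selected when another person's cluster is processed next.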

The current state of my fork, https://bitbucket.org/johnseghersmsft/ui, targets Unity 5.3.x. I'm in the process of updating it to handle 5.4.x (relatively minor changes).

Later versions of the 5.5 beta include changes which allow these classes to be derived properly without having to build a custom UnityEngine.UI subsystem. A number of methods and fields needed to be protected instead of private. I will be updating my additions and publishing a new repository with only these files so that they can be used with the default 5.5 subsystems.

I will be demonstrating this system briefly as part of my talk at Unite 2016 Los Angeles, 10am November 3rd.