Enabling large disks and large sectors in Windows 8

One of the most basic services provided by an OS is the file system, and Windows has one of the most advanced file systems of any operating system used broadly. In Windows 7 we improved things substantially in terms of reliability, management, and robustness (for example, automating completely the antiquated notion of "defrag"). In Windows 8 we build on this work by focusing on scale and capacity. Bryan Matthew, a program manager on the Storage & File System team, authored this post.

--Steven

Our digital collections keep growing at an ever increasing rate – high resolution digital photography, high-definition home movies, and large music collections contribute significantly to this growth. Hard disk vendors have responded to this challenge by delivering very large capacity hard disk drives – a recent IDC market research report estimates that the maximum capacity of a single hard disk drive will increase to 8TB by 2015.

Maximum capacity growth over time for single-disk drives

(Source: IDC Study# 228266, Worldwide Hard Disk Drive 2011–2015 Forecast: Transformational Times, May 2011)

In this blog entry, I’ll discuss how Windows 8 has evolved in conjunction with offerings from industry partners to enable you to more efficiently and fully utilize these very large capacity drives.

The challenges of very large capacity hard disk drives

For context, we define “very large capacity” disk drives as those larger than 2.2TB per drive. The current architecture in Windows has some limits that make these drives somewhat tricky to deal with in some scenarios.

Even as hard disk drive vendors innovated to deliver very large capacity drives, two key challenges required focused attention:

- Ensuring that the entire available capacity is addressable, so as to enable full utilization

- Supporting the hard disk drive vendors in their effort to deliver more efficiently managed physical disks – 4K (large) sector sizes

Let’s discuss both of these in more detail.

Addressing all available capacity

To fully understand the challenges with addressing all available capacity on very large disks, we need to delve into the following concepts:

- The addressing method

- The disk partitioning scheme

- The firmware implementation in the PC – whether BIOS or UEFI

The addressing method

Initially, disks were addressed using the CHS (Cylinder-Head-Sector) method, where you could pinpoint a specific block of data on the disk by specifying which Cylinder, Head, and Sector it was on. I remember in 2001 (when I was still in junior high!) we saw the introduction of a 160GB disk, which marked the limit of the CHS method of addressing (at around 137GB), and systems needed to be redesigned to support larger disks. [Editor’s note: my first hard drive was 5MB, and was the size of a tower PC. --Steven]

The new addressing method was called Logical Block Addressing (LBA) – instead of referring to sectors using discrete geometry, a sector number (logical block address) was used to refer to a specific block of data on the disk. Windows was updated to utilize this new mechanism of addressing available capacity on hard disk drives. With the LBA scheme, each sector has a predefined size (until recently, 512 bytes per sector), and sectors are addressed in monotonically increasing order, beginning with sector 0 and going on to sector n − 1, where n is the total number of sectors:

n = (total capacity in bytes) / (sector size in bytes)
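To make the two addressing schemes concrete, here is a minimal Python sketch. The function names and the example geometry (16 heads, 63 sectors per track) are illustrative assumptions, not anything Windows exposes; the CHS-to-LBA mapping shown is the conventional formula.

```python
def sector_count(capacity_bytes: int, sector_size: int = 512) -> int:
    """Number of addressable sectors: capacity divided by sector size."""
    return capacity_bytes // sector_size


def chs_to_lba(cylinder: int, head: int, sector: int,
               heads_per_cylinder: int = 16, sectors_per_track: int = 63) -> int:
    """Conventional CHS -> LBA mapping. CHS sector numbers start at 1."""
    return (cylinder * heads_per_cylinder + head) * sectors_per_track + (sector - 1)


if __name__ == "__main__":
    n = sector_count(3 * 10**12)      # a 3TB drive with 512-byte sectors
    print(n, "sectors, numbered 0 through", n - 1)
    print(chs_to_lba(0, 0, 1))        # the first sector on the disk maps to LBA 0
```

With LBA, software only needs the single sector number; the geometry parameters disappear entirely, which is what lets the scheme scale with capacity.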

The disk partitioning scheme

While LBA addressing theoretically allows arbitrarily large capacities to be accessed, in practice, the largest value of “n” can be limited by the associated disk partitioning scheme.

The notion of disk partitioning can be traced back to the early 1980s – at the time, system implementers identified the need to divide a disk drive into several partitions (i.e. sub-portions), which could then be individually formatted with a file system, and subsequently used to store data. The Master Boot Record (MBR) partition table scheme was invented at the time, which allowed for up to 32 bits of information to represent the maximum capacity of the disk, counted in sectors. With 512-byte sectors, the largest capacity that 32 bits can address is 2^32 sectors × 512 bytes, or about 2.2TB. Of course, in the 1980s, this seemed a perfectly legitimate practical limitation to impose, considering that the largest consumer disk available then was a whopping 5MB and cost well over $1500!

As early as the late 1990s, system implementers recognized the need to enable addressing greater than the 2.2TB limit (among other requirements). A group of companies collaborated to develop a scalable partitioning scheme called the GUID Partition Table (GPT), as part of the Unified Extensible Firmware Interface (UEFI) specification. GPT allows for up to 64 bits of information to store the number that represents the maximum size of a disk, which in turn allows for a theoretical maximum of 9.4 zettabytes with 512-byte sectors (1 ZB = 1,000,000,000,000,000,000,000 bytes).
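The 2.2TB and 9.4ZB figures follow directly from the width of the sector address. A quick sketch of the arithmetic, assuming 512-byte sectors (the function name is made up for illustration):

```python
def max_addressable_bytes(address_bits: int, sector_size: int = 512) -> int:
    """Largest capacity reachable with address_bits-wide sector addresses."""
    return (2 ** address_bits) * sector_size


mbr_limit = max_addressable_bytes(32)   # MBR: 32-bit sector addresses
gpt_limit = max_addressable_bytes(64)   # GPT: 64-bit sector addresses

print(f"MBR: {mbr_limit / 10**12:.1f} TB")   # about 2.2 TB
print(f"GPT: {gpt_limit / 10**21:.1f} ZB")   # about 9.4 ZB
```

Note that the limit scales with sector size as well as address width, so the same function can be called with a different `sector_size` to explore other combinations.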

Beginning with Windows Vista 64-bit, Windows has supported the ability to boot from a GPT partitioned hard disk drive with one key requirement – the system firmware must be UEFI. We've already talked about UEFI, so you know it can be enabled as a new feature of Windows 8 PCs. This leads us to the topic of firmware.

Firmware implementation in the PC – BIOS or UEFI

PC vendors include firmware that is responsible for basic hardware initialization (among other things) before control is handed over to the operating system (Windows). The venerable BIOS (Basic Input Output System) firmware implementations have been around since the PC was invented, circa 1980. Given the very significant evolution in PCs over the decades, the UEFI specification was developed as a replacement for BIOS, and implementations have existed since the late 1990s. UEFI was designed from the ground up to work with very large capacity drives by utilizing the GUID partition table, or GPT – although some BIOS implementations have attempted to prolong their own relevance and utility by using workarounds for large capacity drives (e.g. a hybrid MBR-GPT partitioning scheme). These mechanisms can be quite fragile, and can place data at risk. Therefore, Windows has consistently required modern UEFI firmware to be used in conjunction with the GPT scheme for boot disks.

Beginning with Windows 8, multiple new capabilities within Windows will necessitate UEFI. The combination of UEFI firmware + GPT partitioning + LBA allows Windows to fully address very large capacity disks with ease.

Our partners are working hard to deliver Windows 8 based systems that use UEFI to help enable these innovative Windows 8 features and scenarios (e.g. Secure Boot, Encrypted Drive, and Fast Start-up). You can expect that when Windows 8 is released, new systems will support installing Windows 8 to, and booting from, a 3TB or bigger disk. Here’s a preview:

Windows 8 booted from a 3 TB SATA drive with a UEFI system

4KB (large) sector sizes

All hard disk drives include some form of built-in error correction information and logic – this enables hard disk drive vendors to automatically deal with the Signal-to-Noise Ratio (SNR) when reading from the disk platters. As disk capacity increases, bits on the disk get packed closer and closer together; and as they do, the SNR of reading from the disk decreases. To compensate for decreasing SNR, individual sectors on the disk need to store more Error Correction Codes (ECC) to help compensate for errors in reading the sector. Modern disks are now at the point where the current method of storing ECCs is no longer an efficient use of space – that is, a lot of the space in the current 512-byte sector is being used to store ECC information instead of being available for you to store your data. This, among other things, has led to the introduction of larger sector sizes.

Larger sector sizes – “Advanced Format” media

With a larger sector size, a different scheme can be used to encode the ECC; this is more efficient at correcting for errors, and uses less space overall. This efficiency helps to enable even larger capacities for the future. Hard disk manufacturers agreed to use a sector size of 4KB, which they call “Advanced Format (AF),” and they introduced the first AF drive to the market in late 2009. Since then, hard disk manufacturers have rapidly transitioned their product lines to AF media, with the expectation that all future storage devices will use this format.
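To get a feel for why a larger sector is more space-efficient, here is a small sketch. The post gives no overhead numbers, so the per-sector overhead values below are assumptions chosen purely for illustration; only the shape of the calculation matters.

```python
def format_efficiency(data_bytes: int, overhead_bytes: int) -> float:
    """Fraction of each on-disk sector that holds user data."""
    return data_bytes / (data_bytes + overhead_bytes)


# ASSUMED per-sector overhead (ECC plus gap/sync/address mark), for illustration only.
legacy = format_efficiency(512, 65)       # one 512-byte sector
advanced = format_efficiency(4096, 115)   # one 4KB sector

print(f"512-byte sectors: {legacy:.1%}")
print(f"4KB sectors:      {advanced:.1%}")
```

The point of the sketch is that eight 512-byte sectors each pay the overhead separately, while a single 4KB sector pays one (somewhat larger) overhead for the same amount of data.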

Read-Modify-Write

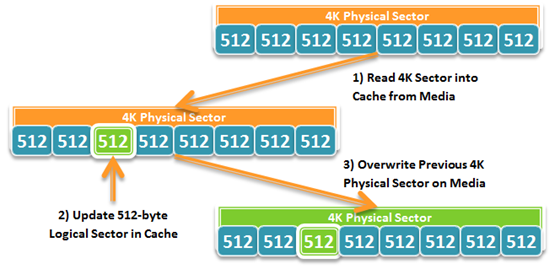

With an AF disk, the layout of data on the media is physically arranged in 4KB blocks. Updates to the media can only occur at that granularity, and so, to enable logical block addressing in smaller units, the disk needs to do some special work. Writes done in units of the physical sector size do not need this special work, so you can think of the physical sector size as the unit of atomicity for the media.

As illustrated in the accompanying figure, a 4KB physical sector can be logically addressed with 512-byte logical sectors. In order to write to a single logical sector, the disk cannot simply move the disk head over that section of the physical sector and start writing. Instead, it needs to read the entire 4KB physical sector into a cache, modify the 512-byte logical sector in the cache, and then write the entire 4KB physical sector back to the media (replacing the old block). This is called Read-Modify-Write.
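The Read-Modify-Write sequence can be sketched with a toy in-memory model. This is a simplified illustration, not how any real drive firmware is written; the class and counter names are hypothetical.

```python
PHYSICAL = 4096   # physical sector size in bytes
LOGICAL = 512     # logical (emulated) sector size in bytes


class Emulated512Disk:
    """Toy disk model: the media is only ever read or written in 4KB units."""

    def __init__(self, physical_sectors: int):
        self.media = [bytes(PHYSICAL) for _ in range(physical_sectors)]
        self.media_reads = 0
        self.media_writes = 0

    def write_logical(self, lba: int, data: bytes) -> None:
        """Write one 512-byte logical sector using Read-Modify-Write."""
        if len(data) != LOGICAL:
            raise ValueError("expected exactly one logical sector of data")
        index, slot = divmod(lba * LOGICAL, PHYSICAL)
        block = bytearray(self.media[index])   # read the whole 4KB sector
        self.media_reads += 1
        block[slot:slot + LOGICAL] = data      # modify 512 bytes in the cache
        self.media[index] = bytes(block)       # write the whole 4KB sector back
        self.media_writes += 1

    def write_physical(self, index: int, data: bytes) -> None:
        """Write a full, aligned 4KB sector: no read is needed first."""
        if len(data) != PHYSICAL:
            raise ValueError("expected exactly one physical sector of data")
        self.media[index] = bytes(data)
        self.media_writes += 1
```

Comparing `media_reads` after a series of `write_logical` calls against the same data written with `write_physical` shows the extra media access that each sub-sector write costs.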

Disks with this emulation layer to support unaligned writes are called 4K with 512-byte emulation, or “512e” for short. Disks without this emulation layer are called “4K Native.”

As a result of Read-Modify-Write, performance can potentially suffer in applications and workloads that issue large amounts of unaligned writes. To provide support for this type of media, Windows needs to ensure that applications can retrieve the physical sector size of the device, and applications (both Windows applications and 3rd party applications) need to ensure that they align I/O to the reported physical sector size.
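As a rough sketch of what aligning I/O means in practice, the helpers below check whether a request falls on physical sector boundaries and compute the smallest aligned range that encloses it. The helper names are hypothetical, and the physical sector size is simply passed in as a parameter (defaulting to 4096); how an application obtains that value from Windows is not shown here.

```python
def is_aligned(offset: int, length: int, physical_sector: int = 4096) -> bool:
    """True if the I/O starts and ends on physical sector boundaries."""
    return offset % physical_sector == 0 and length % physical_sector == 0


def align_io(offset: int, length: int, physical_sector: int = 4096) -> tuple[int, int]:
    """Widen (offset, length) to the smallest enclosing aligned range."""
    start = (offset // physical_sector) * physical_sector
    end = -(-(offset + length) // physical_sector) * physical_sector  # round up
    return start, end - start


print(is_aligned(0, 4096))    # aligned: can be written without Read-Modify-Write
print(is_aligned(512, 512))   # unaligned: touches only part of a physical sector
print(align_io(512, 512))     # the aligned range that contains the request
```

An application would normally prefer to buffer its writes so that they naturally fill whole physical sectors, rather than widen each request after the fact.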

Designing for large sector disks

Learning from some issues identified with prior versions of Windows, AF disks have been a key design point for new features and technologies in Windows 8; as a result, Windows 8 is the first OS with full support for both types of AF disks – 512e and 4K Native.

To make this happen, we identified which features and technology areas were most vulnerable to the potential issues described above, and reached out to the teams developing those features to provide guidance and help them test hardware for these scenarios.

Issues we addressed included the following:

- Introducing new APIs and enhancing existing ones to better enable applications to query for the physical sector size of a disk

- Enhancing large-sector awareness within the NTFS file system, including ensuring appropriate sector padding when performing extending writes (writing to the end of the file)

- Incorporating large-sector awareness in the new VHDx file format used by Hyper-V to fully support both types of AF disks

- Enhancing the Windows boot code to work correctly when booting from 4K native disks

This is just a small cross section of the amount of work done to enable across-the-board support for both types of AF disks in Windows 8. We are also working with other product teams within Microsoft and across the industry (e.g. database application developers) to ensure efficient and correct behavior with AF disks.

In closing

NTFS in Windows 8 fully leverages capabilities delivered by our industry partners to efficiently support very large capacity disks. You can rest assured that your large-capacity storage needs will be well handled beginning with Windows 8 and NTFS!

/Bryan

Comments

Anonymous

November 29, 2011

"Of course, in the 1980s, this seemed a perfectly legitimate practical limitation to impose, considering that the largest consumer disk available then was a whopping 5MB and cost well over $1500!" Sorry, but I'm going to call you on this. The PC/XT in 1982 supported a 5M hard disk. In 1984, IBM introduced the PC/AT with a 10M hard disk and in 1986 or so (not quite sure of the exact dates), MSFT introduced DOS 3.2 which extended support to 32M hard disks. So claiming that in the 1980s the largest available disks were 5MB in size isn't quite accurate. Moore's law applied to hard disks as well as chipsets.

Anonymous

November 29, 2011

@Larry Osterman in the {1980s}, considering that the largest CONSUMER disk available then was a whopping 5MB and cost well over $1500.

Anonymous

November 29, 2011

Apologies, it's "protogon", not "paragon". In any case, there's definitely references to it in the developer preview, so if NTFS is still the future, then what exactly is it?

Anonymous

November 29, 2011

I was hoping there would be some mention of Protogon in this article, but alas there is none. Steve, can you please shine some light on what exactly Protogon is and what it does? Otherwise, another fantastic post. Thanks, and keep 'em comin'! -Dave

Anonymous

November 29, 2011

@Dave - RE: Protogon Not sure Protogon is even a real thing. Seems more like another WinFS/New and Improved FS rumor which will go nowhere. I am hoping for a better file system, but I will not hold my breath waiting.

Anonymous

November 29, 2011

Please @Steve, you know Windows needs a new File System... NTFS had its time...

Anonymous

November 29, 2011

A new NTFS file system. Good job. @Fernando What's wrong with NTFS? The title reminds me of something which shows how much technology has and has not changed. If you load the fdisk program from an old Windows 9x boot disk, a message says "Your computer has a disk larger than 512 MB. Do you want to enable large disk support?" Fortunately, we are far beyond the days of being prompted to enable support for disks of a certain size, and these new 3+ TB disk features are being built into the file system natively with no "large disk?"/"normal disk?" options.

Anonymous

November 29, 2011

Well it is sad that it is just again a patched NTFS but what did you expect :/

Anonymous

November 29, 2011

@WindowsVista567 I think I just miss the idea of WinFS... and that it couldn't be done with NTFS...

Anonymous

November 29, 2011

@Fernando: What would you expect to see in a new general purpose filesystem that isn't already in NTFS? Remember that this will have to handle all the I/O for the system, which means it can't be tuned for specific purposes. Over the years, I've had direct experience with FAT, HPFS, NTFS and WinFS (which wasn't a filesystem, btw - it was actually implemented as a bunch of files layered on top of NTFS) and NTFS seems to do just about everything that I could think of as being reasonable in a general purpose filesystem.

Anonymous

November 29, 2011

This is really only solving the technical problem of making large hard disks visible to Windows and taking advantage of UEFI. It does nothing to solve the user problem of huge data sets, organizing and finding stuff. WinFS was a grand vision, it got scrapped, some of its ideas have made their way into Windows Search. But it's still basically the same old filesystem with no more intelligence, knowledge or metadata. The Windows team needs to take a look at tools like Everything (voidtools.com) which search using the NTFS master index and are thus lightning fast. There is no reason why Windows itself couldn't have this feature, and use the indexed database only for file content searches.

Anonymous

November 29, 2011

I'm glad we're stuck with NTFS for now - as someone who has large data sets of "end user type" information (music, video, photos, etc.) extending across several terabytes worth of hard drives, I'm glad that the file system doesn't get in the way of me organizing things the way I want them organized so that I know specifically where everything is. I don't need "help" with this task. I need the file system to let ME manage that task. I have other tools that help me with the specifics, but I got to choose them, and they work for ME. And if those same can work for me better on large hard drives, well, so much the better! Thanks MS!

Anonymous

November 29, 2011

Nice! Now is there any chance that a Drive Extender-like feature will be supported (once again) so I can ditch my zfs server?

Anonymous

November 29, 2011

protogon.sys is 95% the same as the ntfs.sys file, so obviously Protogon IS NTFS. But protogon.sys exports some interesting functions that look like some Jet database engine, and it has some references to 'MinStore', maybe related to MinWin/MinIO/MinKernel.

Anonymous

November 29, 2011

Well, looks like tvald, above, mentioned ZFS even before I had a chance. Including but not limited to, logical volume manager, end to end checksumming & more robust forms of RAID (6/7); data storage is expanding exponentially & without some form of the above managing large number of drives or ensuring data integrity/verification over time (bit rot & what not) we're (those of us unlucky enough to have large storage needs & the associated pain of knowing how to run these systems) stuck with ZFS & some form of UNIX (Solaris, FreeBSD or the zombies of OSol, namely OI). It is very unfortunate, time consuming & a pain to run an entirely different OS just for storage needs when all the systems at my home (desktops & laptops) & the vast majority at work (servers, workstations, desktops & laptops) are Windows machines. Either create a new FS with these ingredients (if possible), add them to NTFS (if creating a new FS is not possible) or the last, license ZFS & port it to Windows. Please! P.S. Anyone setting up Samba (SMB/CIFS) to share your ZFS vdev (storage pools) for all the Windows machines on the network will know what I mean; a hint, it's not as easy as click-&-share from Explorer.

Anonymous

November 29, 2011

Is there a plan for a separate post covering file / data serving improvements for Windows Server?

Anonymous

November 29, 2011

@MP: these? blogs.technet.com/.../windows-8-platform-storage-part-1.aspx blogs.technet.com/.../windows-8-platform-storage-part-2.aspx

Anonymous

November 29, 2011

Nice cleared some of my doubts. Thanks.

Anonymous

November 29, 2011

@Bob: NTFS already has transactional semantics - metadata has always been updated with transactional semantics, in Vista, support was added for transactional semantics of user data. Why would a key/value store help the filesystem? Remember that this is a general purpose filesystem - it needs to support applications that read and write data to a single data stream. The "NUL" and "PRN" semantics have nothing to do with NTFS - those special files exist for backwards compatibility and are Win32 constructs only. And what would you use to replace the ACL mechanism for access? You must provide a discretionary access control mechanism of some form that can be controlled by an administrator. And applications which make assumptions about the access control mechanisms on the filesystem must continue to work (since you're proposing a replacement for NTFS). What value would cryptographic checksums of the filesystem blocks gain? Why is adding such a beast a good thing? Oh and one final question: What scenarios would adding key/value store satisfy? How does this help customers have a better experience using Windows?

Anonymous

November 29, 2011

just wondering if the ntfs version will change from 3.1.

Anonymous

November 29, 2011

Guys. Congratulations! I just love MS!

Anonymous

November 29, 2011

Please integrate "Microsoft Camera Codec Pack" to Windows 8's Explorer so viewing RAW file formats is a breeze... Thank you.

Anonymous

November 29, 2011

what about the protogon file system???

Anonymous

November 29, 2011

Protogon: From what I think and people are indicating here, Protogon seems to be nothing more than a research driver, kind of an extended version of the current NTFS file system, that exists side-by-side the "normal" NTFS driver for testing/debugging purposes until it might supersede it in a future build. As someone pointed out, 95% of Protogon seems to be NTFS. The description of the protogon.sys reads something like "NT Protogon FS driver"... so if this would be really a new file system, why did they still put the "NT" in the name? Unfortunately, it's not possible to format a drive to Protogon in the Windows 8 Developer Preview (I tried and it didn't work for me), therefore it's not possible to compare the on-disk representations/structures of a freshly formatted NTFS/Protogon volume...

Anonymous

November 29, 2011

(@this blog, but also @Larry Osterman, @Bob, @Guest, @anonchan, @tvald) I'm also interested in comparisons to ZFS, particularly when it comes to performance. From the Windows 8 Server blog, it looks like there are many improvements coming, but it's difficult from that broad overview to determine exactly how the enhancements are implemented and the implications. For example, though NTFS in theory supports files in the exabyte territory, in reality you are currently limited to volumes of 16 TB (with the NTFS default 4K allocation unit). If you want to make a large file share of say, 128 TB, currently you'd have to span volumes, which becomes ugly/infeasible quickly. Currently, the Data Protection Manager team actually recommends not exceeding 1.5 TB for NTFS volumes for stability reasons, and I've heard practical advice not to exceed 500 GB for individual volumes on file servers due to the time CHKDSK would take if necessary. It looks like the online CHKDSK mentioned in the Windows 8 Server blog may address the CHKDSK issue and also hopefully addresses the stability issues the DPM team is seeing with > 1.5 TB volumes. However, it doesn't make clear how it is achieving these larger volume sizes--is it via larger cluster sizes? A 64 TB file share achieved via 16K allocation unit size is not really a win. From a performance standpoint, ZFS really shines in JBOD mode--you get the redundancy that traditionally required expensive and cumbersome hardware RAID, and the resulting throughput from both IOPS and bandwidth standpoints is fantastic (see blogs.sun.com/.../zfs_msft.pdf for a more direct comparison; granted this is dated material, but still relevant; "1.99 to 15" times better performance than NTFS). It also employs intelligent and transparent caching mechanisms at the filesystem level--for example, allowing you to add SSDs to your storage hardware and leverage RAM and SSD drives as a ZIL cache (write cache) and/or L2ARC (read cache). 
From the Windows 8 Server blog, it appears that there is some new magic going on for redundancy beyond traditional software RAID--that is promising, although after the Windows Home Server issues, it might be wise to wait to make sure this is ready for critical data. However, they don't mention anything about performance improvements. They mention that you can target data at different storage (SSD vs. SAS vs. SATA), but apparently there's no automatic data tiering, and nothing is mentioned about employing RAM and SSD as part of the filesystem. Obviously some RAM caching happens at the OS level in Windows, but the performance disparity between NTFS and ZFS is large, and I see nothing mentioned so far about improvements on that front. I'm still anxious to see more about replication also--DFS is not bad, but it has many limitations from both a design and a practical standpoint. I would prefer to see this functionality at the filesystem level. Don't get me wrong--NTFS is great in many ways, and blows away HFS+ for example. But when compared with a newer generation filesystem like ZFS, it currently looks dated.

Anonymous

November 29, 2011

Based on what you're saying about the 4K drives, it sounds like it would no longer make sense in any scenario to format a volume on one of these drives using an allocation unit of anything less than 4K. Is that correct? When creating a new volume on a 4K native drive, will those options no longer appear?

Anonymous

November 29, 2011

@Steven Sinofsky @Microsoft PLEASE DO REPLY I also feel that Microsoft is not being exactly innovative. In recent years most of its most hyped features originate from other places. www.xstore.co.za/wordpress blog.jayare.eu/windows-7-or-kubuntu-kde-4.html These two blog posts show what I am trying to say as does this presentation from Apple www.youtube.com/watch

- Taskbar grouping introduced in Windows XP - copied from KDE

- New start menu with search and scrollbar - copied from kickoff launcher in KDE which existed long before vista

- Desktop composition - Existed in many forms on linux systems

- Large taskbar - Flagship style of KDE

- Icon only taskbar with controls embedded - copied from mac OS X

- Windows 8 start launcher - Copied from Ubuntu Unity Dash

Anonymous

November 29, 2011

About the enterprises thing: We held a survey and gave a demo of Windows 8 to them and the feedback was not nice at all. We were hoping to migrate from XP to Win8 but it seems that it is not a good idea. The way of shutting down the system itself if played in Windows 8.

Anonymous

November 29, 2011

Great! That's using Windows without a compromise!

But a word of advice: please also pay attention to the disk management tools and the Disk Cleanup UI, to reimagine every part of Windows.

Anonymous

November 29, 2011

Larry Osterman [MSFT]: The PC/XT in 1983 shipped with a 10MB drive and shipped with DOS 2.x which used the same FAT12 as floppy disks but with 4KB clusters to support it. The PC/AT shipped with a 20MB hard drive and shipped with DOS 3.x which introduced FAT16 allowing much smaller cluster sizes. DOS however still did not support more than 65536 sectors (32MB) until Compaq DOS 3.31 and DOS 4.x which added this support.

Anonymous

November 29, 2011

It would also be very desirable to have native support for foreign file systems such as ZFS, ext, and Btrfs. This is important for the development of computer networks and for the corporate segment.

Anonymous

November 29, 2011

Will it be possible to connect SkyDrive as storage directly in My Computer? This is very convenient and in demand; some hardware manufacturers have integrated their cloud storage this way. Will SkyDrive cloud storage be connected after signing in to Windows 8 with a Live ID? Will it be possible to connect SkyDrive without signing in with a Live ID? Will it be possible to connect several SkyDrive storage accounts belonging to different users at the same time, or to quickly connect individual shared folders from other SkyDrive users? It would be great to see this functionality in the upcoming Windows 8!

Anonymous

November 29, 2011

Tell us about the new 'Protogon' file-system.

Anonymous

November 29, 2011

@Kubuntu LINUX User Please stop spamming - we respect your choices but we don't need to see the same comment in every post - it's completely off topic.

Anonymous

November 29, 2011

Make the Ribbon flat, like in Office 2010! Remove the shadows and curvature.

Anonymous

November 29, 2011

Steven, this is off topic, but I wanted to post it here, since for older blog posts, making new comments is disabled. Quote below from: Taskbar thumbnails www.winsupersite.com/.../windows-7-feature-focus-windows-taskbar “Tip: Invariably, the first time you access a full-screen preview you will then mouse off of the preview to access the actual window. That doesn't work: When you do so, the window (and the preview) disappear. Instead, you need to click on the taskbar thumbnail itself to access that window. This is, of course, a typical example of Microsoft's ham-handed UI design. I guess once you burn yourself you learn not to do it again.” I hope this will be fixed in Windows 8: "...you need to click on the taskbar thumbnail itself to access that window. " Thank you.

Anonymous

November 29, 2011

@AHS0 I don't understand what you're asking for. Do you want it so that when you hover over a thumbnail then leave that window is focused? That would be utterly terrible. Hover gives a full preview, click to actually activate it. It makes sense and is by far the most useful method. Definitely doesn't need to be 'fixed'

Anonymous

November 29, 2011

support.apple.com/.../TS2419 Can you also switch over to Base 10 instead of Base 2, for counting harddrive space? Apple did this in 10.6, you should do it as well so everyone is using the same system.

Anonymous

November 29, 2011

but but but... What about Protogon? :'(

Anonymous

November 29, 2011

When it comes to ntfs storage, I don't understand this: I have a ~300GB drive with ~200GBs free. A defrag analysis gives me this:

- some_system_restore_file: 62MB, 986 fragments

- some_other_system_file: 9.4MB, 147 fragments

- ntuser.dat.log: 56Kb, 105 fragments

- various_other_files: ~2MB, ~30 fragments

- ie_history_files: ~as above

Is it so difficult to find a contiguous 5/10/30/60MB free area to store these files? You may say that data are added to them incrementally, but wouldn't rewriting them from scratch seem neater? I can, of course, defrag the drive, but a couple of days later the same thing happens again. On a side note, I would really like you to present some more info on your storage diagnostic tools. Something like the disk defragmenter interface you used in win xp.

Anonymous

November 29, 2011

@Larry Osterman this is just a start of what NTFS can't do, not counting how slow it is. en.wikipedia.org/.../ZFS

Anonymous

November 29, 2011

And while we're talking about Unix tech, you guys should really just switch over to Unix file permissions, it'd make everything easier, it'd make it easy to port apps over to windows, and it'd just be awesome. yes, it'd make it easy for apps to be ported away potentially, but dude. everything's going to the web, so provide a good UX to keep your customers.

Anonymous

November 29, 2011

"What value would cryptographic checksums of the filesystem blocks gain? Why is adding such a beast a good thing?" I'm not even gonna lie, i'm NOT a file system guy, but if you could cryptographically verify that a file hasn't been modified, you can guarantee system security, AND you can guarantee data integrity, two VERY important features for ANY OS.

Anonymous

November 29, 2011

@Kubuntu LINUX User I know this is off topic but let me get this straight. You demo'd a developer preview version of an OS to your "enterprise users" to survey them to see if you should deploy it or not. Did you miss the fact that it is a "DEVELOPER PREVIEW"? PRO TIP: Many things could / will change before we get to an actual release. I think you are just trolling but I had to respond.

Anonymous

November 29, 2011

@Microsoft, you should just ban Kubuntu LINUX User's IP address :/

Anonymous

November 29, 2011

NTFS was a great File System but needs to die! It's too old:

- v1.0 with NT 3.1, released mid-1993

- v1.1 with NT 3.5, released fall 1994

- v1.2 with NT 3.51 (mid-1995) and NT 4 (mid-1996) (occasionally referred to as "NTFS 4.0", because OS version is 4.0)

- v3.0 from Windows 2000 ("NTFS V5.0" or "NTFS5")

- v3.1 from Windows XP (autumn 2001; "NTFS V5.1"), Windows Server 2003 (spring 2003; occasionally "NTFS V5.2"), Windows Server 2008 and Windows Vista (mid-2005) (occasionally "NTFS V6.0"), Windows Server 2008 R2 and Windows 7 (occasionally "NTFS V6.1")

18 years, 4 months, 4 weeks, 1 day. We need a new FS

Anonymous

November 30, 2011

@jader3rd: Since NT 3.1, NT has had a construct called an opportunistic lock (or oplock) which gives you exactly what you want - the AV/content indexing app, etc can take an opportunistic lock on the file and then when another application attempts to open the file, the scanning application gets notified and has an opportunity to release the file. For some applications (like content indexers), it makes sense to release the oplock, for others (like antivirus) it may not (if the file is potentially infected, you don't want people to access it until you've checked the file).

Anonymous

November 30, 2011

Denny you need to take a small lesson on how checksums work then your doubts will be cleared. Whenever a bit flips the checksum changes and it would trigger the diff and you know something changed.Anonymous
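The point about a flipped bit changing the checksum can be shown with a minimal sketch. It uses CRC-32 from Python's standard library purely as a stand-in; the helper names `flip_bit` and `is_intact` are made up for illustration and nothing here reflects how NTFS or any particular filesystem stores checksums.

```python
import zlib


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit inverted (simulated bit rot)."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


def is_intact(data: bytes, expected_checksum: int) -> bool:
    """Recompute the checksum and compare it with the one saved at write time."""
    return zlib.crc32(data) == expected_checksum


# Checksum is computed once, when the data is written.
original = b"family photo bytes" * 16
stored_checksum = zlib.crc32(original)

# Later, a single bit silently flips on the media.
corrupted = flip_bit(original, 1234)

print(is_intact(original, stored_checksum))   # unchanged data still verifies
print(is_intact(corrupted, stored_checksum))  # the flipped bit is detected
```

A checksum like this only detects the change; repairing it needs a second good copy of the data.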

November 30, 2011

@Larry Osterman Is oplock available in user land, specifically for use with Win32 MFC CFile?Anonymous

November 30, 2011

Apologies if this was addressed but I missed it on the blog, one of NTFS's most obnoxious limitations is the 64 per-volume shadow copy limit. With huge HDs (well, non-SSDs), increasing this limit considerably would be a boon to the enterprise (allowing DPM to snapshot a user's file repository every 30 minutes with 2 months of history would be fantastic for backup), as well as consumers. Previous Versions is nice, but the 64 shadow copy limit per volume really limits how frequently - and how far back - you can take snapshot backups.Anonymous
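To put numbers on the trade-off between frequency and history, here is a small back-of-the-envelope sketch. The 64-copy limit and the 30-minute interval come from the comment above; treating "2 months" as 60 days is an assumption, and the function names are hypothetical.

```python
from datetime import timedelta

MAX_SHADOW_COPIES = 64  # per-volume limit cited in the comment


def coverage(interval: timedelta, max_copies: int = MAX_SHADOW_COPIES) -> timedelta:
    """How far back the snapshots reach when one is taken every `interval`."""
    return interval * max_copies


def copies_needed(interval: timedelta, retention: timedelta) -> int:
    """Number of snapshots needed to cover `retention` at the given interval (rounded up)."""
    return -(-retention // interval)


every_30_min = timedelta(minutes=30)
two_months = timedelta(days=60)  # assumption: "2 months" taken as 60 days

print(coverage(every_30_min))                   # history reachable with 64 copies
print(copies_needed(every_30_min, two_months))  # copies the wished-for schedule needs
```

Under these assumptions, 64 copies at 30-minute intervals reach back 32 hours, while the schedule described would need 2,880 copies.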

November 30, 2011

@jamome: Read this: msdn.microsoft.com/.../aa365433(v=VS.85).aspx for more information on oplocks. The article discusses oplocks in the context of networking, but they should work on local filesystems as well.Anonymous

November 30, 2011

A few people have already brought up ZFS. As a laptop user data consistency is a key worry for me - as far as I am aware current NTFS versions do not offer any kind of real data integrity/consistency checking. One of the fundamental changes that came with ZFS was per-block checksums: files are made up of one or more blocks, each of which has a checksum computed when it is written out to disk. These block checksums are then checksummed and written to disk. Those checksum checksums might also be checksummed and written out. And so on. All the way up to the top level of the filesystem (in ZFS the uberblock). Essentially this means the filesystem can detect silent data corruption which might occur as a result of misdirected/phantom reads/writes or good old 'bit rot'. If more than one copy of the data exists (e.g. RAID 1) and only one copy is corrupt then the filesystem is able to self-heal. For those interested Jeff Bonwick has a super blog post about ZFS data integrity at blogs.oracle.com/.../zfs_end_to_end_data. It looks as though the Windows 8 Server guys have some new NTFS functionality that on the face of it would appear to pave the way for this behaviour in NTFS. See the post at blogs.technet.com/.../windows-8-platform-storage-part-2.aspx. Can Steven or Bryan provide some more input on this -- will the behaviour be available in the non-Server editions of Windows 8? Will functionality similar to ZFS 'scrub' be available so we can verify block-level checksums and detect corruption before it becomes a problem?Anonymous
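The checksum-of-checksums idea and the self-healing behaviour described above can be sketched in a few lines. This is a toy model only: SHA-256 stands in for whatever checksum a real filesystem uses, the two in-memory lists stand in for a mirrored pair of disks, and `root_checksum` and `scrub` are hypothetical names, not ZFS or NTFS APIs.

```python
import hashlib

BLOCK_SIZE = 4096  # bytes per block; an arbitrary choice for this sketch


def checksum(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def split_blocks(data: bytes, size: int = BLOCK_SIZE) -> list:
    return [data[i:i + size] for i in range(0, len(data), size)]


def root_checksum(block_sums: list) -> bytes:
    """Hash pairs of checksums repeatedly until a single top-level value remains."""
    level = list(block_sums)
    if not level:
        return checksum(b"")
    while len(level) > 1:
        level = [checksum(b"".join(level[i:i + 2])) for i in range(0, len(level), 2)]
    return level[0]


def scrub(copy_a: list, copy_b: list, block_sums: list) -> list:
    """Verify every block on both copies and repair a bad copy from the good one."""
    repaired = []
    for i, expected in enumerate(block_sums):
        ok_a = checksum(copy_a[i]) == expected
        ok_b = checksum(copy_b[i]) == expected
        if ok_a and not ok_b:
            copy_b[i] = copy_a[i]
            repaired.append(("b", i))
        elif ok_b and not ok_a:
            copy_a[i] = copy_b[i]
            repaired.append(("a", i))
        elif not ok_a and not ok_b:
            raise IOError(f"block {i} is corrupt on both copies")
    return repaired


# Write the same data to two mirrored copies and record the checksums.
data = bytes(range(256)) * 64
copy_a = split_blocks(data)
copy_b = list(copy_a)
block_sums = [checksum(b) for b in copy_a]
root = root_checksum(block_sums)

# Simulate silent corruption of one block on the first copy, then scrub.
copy_a[2] = bytes([copy_a[2][0] ^ 0x01]) + copy_a[2][1:]
repaired = scrub(copy_a, copy_b, block_sums)
print(repaired)
```

In a real design the top-level checksum is what lets the filesystem trust the per-block checksums themselves; here `root` is only computed to show the shape of the tree.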

November 30, 2011

@Larry Taking a look at the article you pointed out, it says "Opportunistic locks are of very limited use for applications. The only practical use is to test a network redirector or a server opportunistic lock handler." Whereas, since I'm writing applications, I would like something that has greater than "very limited" use. From what I gather, my application requesting an opportunistic lock doesn't help my application get a file handle when another application currently has an exclusive lock on the file. Plus opportunistic locks can be broken, which isn't what I want. I want my application to have an exclusive lock on the file for as long as it needs it. So opportunistic locks are not the solution for avoiding writing retry loops around every create/move/delete call.Anonymous

November 30, 2011

@jader3rd: I think you misunderstood my point about oplocks. Oplocks are of value for the scanning application - it allows the scanning application to know that another application wants to access the file and relinquish their hold on the file. They don't help you much if you're the "other application". But if scanning applications all universally used oplocks, it would alleviate the problem you describe.Anonymous

November 30, 2011

@sreesiv Kudos! It is indeed a relief that I am not the only one to have spotted Mr. Sinofsky's glaring mathematical errors! I take it that math is not one of his strengths. How curious that no consideration is made for the effects of flooding in Thailand. With the coming advent of Phase Change Memory, would it not be prudent to engineer improvements into Defrag in order to allow optimization of such media without degrading MTBF?Anonymous

November 30, 2011

@Danny That's a great question, and the checksums could be written to the MFT with the pointer to the file on disk.Anonymous

November 30, 2011

Come on Steven.. we waited over a week for an update, and it's about enabling large disks? Serious? Momentum is everything. Consumer appetite for Windows 8 has already fallen if you believe Forrester Research. It would help if you could do bi-weekly updates to this blog, with one of the updates being something consumers, not system admins and people who worry about how data is written to a hard drive (all 4 people), will get excited about. My 2cAnonymous

November 30, 2011

@Rajeev: Thanks for providing those links! Still one question, though - can you adjust the size of a storage space, or do you have to create new spaces to take advantage of increased pool capacity?Anonymous

November 30, 2011

@ZipZapRap This is msdn. It's a place for developers, and somewhat IT pros. So it's okay if they make posts that people who develop applications find interesting. Consumer interest momentum will be more important after Windows 8 ships.Anonymous

November 30, 2011

@jader3rd Fair point, and I agree.. hence my point about having at least one consumer oriented post per week. However, I disagree that consumer momentum will be more important after W8 ships - that game changed years ago, and it's that mentality that makes MSFT behind the curve at the moment (irrespective of how good Windows 8 is currently, they don't have a GOOD shipped product that will own the tablet space). And let's be honest, it's the consumers who will drive the growth of this platform and OS. In 2011, as Apple has shown, the consumer is king. Enterprise is important, but the consumer market is what is driving technology; there's even a term for it: The Consumerisation of IT. And therefore, as developers, you should have a keen interest in keeping Windows 8 in the public consciousness, as you will only get paid for your apps if they buy into it, and people are allegedly losing interest according to Forrester Research. I want Windows 8 to succeed.. I really really do, but if there's no consumer excitement going into 2012, well they've got their work cut out for them..Anonymous

November 30, 2011

@ZipZapRap "hence my point about having at least one consumer oriented post per week." You make some good points however considering that currently MS are only making one post a week here then your suggestion above is not feasible. Equally it can be argued that this post is part consumer oriented given that 1 and 2 TB drives are already freely available at reasonable prices through Amazon and similar sites.Anonymous

November 30, 2011

@sreesiv "I guess it should be n = ( (total capacity in bytes)/ (sector size in bytes) - 1) considering sector number is zero-ordered. Right???" ; "...The 'number of' addressable units/sectors (not byte) represented via 32 bits is 2^32 = 4Gi Sectors...." You are correct - thanks for bringing it to our attention. As you stated, n should be (total capacity in bytes)/(sector size in bytes) - 1, and of course 2^32 - 1 is the maximum LBA # that can be addressed using MBR. With a 4K logical sector size, and using a GPT partitioning scheme (64-bit addressing), the theoretical maximum addressable capacity is 75.56ZB, as you have calculated.

@Ryan "Based on what you're saying about the 4K drives, it sounds like it would no longer make sense in any scenario to format a volume on one of these drives using an allocation unit of anything less than 4K. Is that correct? When creating a new volume on a 4K native drive, will those options no longer appear?" At format time, it is enforced that the cluster size is >= the reported physical sector size. Options to format to smaller cluster sizes are not available, because we do not want a single physical sector (i.e. the unit of atomicity for the media) to contain multiple clusters. Generally speaking, however, we would recommend a cluster size of 4K for volumes under 16TB regardless of the physical sector size.

Anonymous
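The corrected arithmetic in the reply to @sreesiv can be checked with a short sketch. The 32-bit (MBR) and 64-bit (GPT) address widths and the 512-byte and 4K sector sizes come from the discussion; the function names are illustrative only.

```python
def max_lba(capacity_bytes: int, sector_bytes: int) -> int:
    """Highest sector number on a disk; sectors are numbered from zero."""
    return capacity_bytes // sector_bytes - 1


def max_capacity_bytes(address_bits: int, sector_bytes: int) -> int:
    """Largest capacity reachable when sector numbers fit in `address_bits` bits."""
    return (2 ** address_bits) * sector_bytes


# MBR: 32-bit sector addresses with 512-byte logical sectors.
mbr_512 = max_capacity_bytes(32, 512)

# GPT: 64-bit sector addresses with 4K logical sectors.
gpt_4k = max_capacity_bytes(64, 4096)

print(mbr_512 / 10**12)        # capacity in TB (decimal)
print(gpt_4k / 10**21)         # capacity in ZB (decimal)
print(max_lba(mbr_512, 512))   # equals 2**32 - 1
```

The first figure is the roughly 2.2TB boundary used in the post to define "very large capacity" drives, and the second rounds to the 75.56ZB quoted above.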

November 30, 2011

@ZipZapRap Even as I'm one of the "4 people" worrying "about how data is written to a hard drive"... :) I have to agree with you on some level as well. A post to point out that large hard drives will be supported... well, it's interesting for tech folks and good for us to understand, but even for tech folks and "almost everyone else" alike--should we expect anything less? I don't think anyone will get excited about the fact that new, inexorably larger hard drives will actually work with the latest Windows--that's the minimum expectation. We'd be upset if they didn't work, but for better or worse, I don't think anyone sees it as a major enhancement that they work, even if the engineering effort required was Herculean.Anonymous

November 30, 2011

Can you please comment on the new filesystem "ReFS"? Protogon has been removed from newer builds.Anonymous

November 30, 2011

If NTFS is still the default FS in Win8, I'll just format my HDD in ext4 if you know what I mean... ;)Anonymous

November 30, 2011

@sevenacids Yes, NTFS evolved from the NT 3.1 version, but 18 years is a lot of time. Windows 8 needs a new file system, not a patched version of NTFS. NTFS was a great filesystem but it's time to change it.Anonymous

November 30, 2011

@all idiots who want a new FS for the sake of having a new FS: NT is 18 years old, too. Should Microsoft rewrite it? Linux and OS X have UNIX roots, which is more than 40 years old. Should we stop using Linux and OS X? The Internet uses HTML, CSS and JavaScript which are more than 15 years old. Should we stop using them?Anonymous

November 30, 2011

@Magnus87 1 Dec 2011 4:04 AM: What do you hope to achieve by replacing one FS with another? Just the newness factor?Anonymous

December 01, 2011

Maybe early unreleased builds of Windows 8 contained some resurrected tid-bits of the infamously cancelled WinFS? Shame, that would have been one nice new toy to play with: en.wikipedia.org/.../WinFSAnonymous

December 01, 2011

Please remove the "create an MS-DOS startup disk" option in the format window in Explorer! It's 2011 and USB boot sticks are the norm now! Even on ARM this option would not make sense, so remove that legacy stuff!Anonymous

December 01, 2011

@Rajeev: Just as I've thought about this more... I'm very interested in seeing how something like Storage Spaces would play with some of the newer SAN solutions out there. For instance, Coraid uses the AoE protocol (ATA over Ethernet) to present disk on the network as local SCSI devices to hosts--a huge improvement over iSCSI from latency, throughput, efficiency, and simplicity perspectives. Their costs are also significantly cheaper. Of course, they offer things like hardware RAID on the controller, but it sounds like Storage Spaces may be a superior way to take advantage of that disk if it can connect in JBOD mode and handle the redundancy itself (and it sounds likely better than RAID-5, at least). The performance could/should also be significantly better, particularly for random IO. However, I'm curious how all this fits together in a clustered Hyper-V environment and from a backup/VSS perspective. Does a Windows host present the Storage Spaces to other hosts, or is this somehow a shared resource? I'm not sure how that's possible with DAS, unless the Windows host in effect becomes the storage controller for other Windows hosts. It seems like it may be possible with a solution like Coraid, though, since the disk is presented over the network. If that is the case, would multiple hosts be able to share the disk via SCSI-3 Persistent Reservations, and somehow in turn combine those disks via Storage Spaces and utilize those as Clustered Shared Volumes? And if so, would those CSVs be VSS-aware? If that is the case (or the end result), that could be a pretty big deal. Not only would that offer the potential to reduce the cost of such solutions greatly, it would immediately offer Microsoft-supported VSS support for CSVs--something many SAN vendors had (and some still have) quite a lot of trouble implementing. (I'm not trying to advertise for Coraid... just wondering how this might work.)Anonymous

December 01, 2011

I am extremely glad to see that Windows 8 will remove much of the need for additional translation layers between the OS and the disk, and will go as far as to remove some of the overhead involved in current non-aligned setups. My question is, what about 8K pages? Most SSDs on the market have already migrated away from 4K to 8K pages, most notably any that utilize Intel & Micron's 25nm NAND. Given that SSDs write in blocks but read in pages, the same issue seems to apply.Anonymous

December 01, 2011

I am puzzled by the twists and turns of this blog. Windows 8 seems aimed at the tablet and lightweight workstation. Those have small to nonexistent local storage. I fail to see why we would need to spend time working on large disk technologies.Anonymous

December 01, 2011

@out in left field 1 Dec 2011 12:52 PM. No. It is general system. They mentioned it way back... (Also reason for dual personality)Anonymous

December 01, 2011

@Microsoft Could you tell me why Windows Search is much slower than Spotlight (OS X)? Has this anything to do with the filesystem? There should not be 50 ways of indexing data and getting results. In that case, why does OS X gather results nearly instantaneously while Windows search takes a lot of time? I am just asking a question, not criticizing.Anonymous

December 01, 2011

@Jin78 1 Dec 2011 2:07: I don't know how they can respond as there is no link showing how one came to this conclusion. (Something like benchmarks done by HW sites - steps taken for a given scenario and how it was measured, on what hardware and with what software present, like AV) BTW: There might be multiple differences between both systems making comparison more difficult.Anonymous

December 01, 2011

@Jin78 If you have Offline files enabled, that'd do it.Anonymous

December 01, 2011

You are very negative, every savvy technical administrator knows that Win 7 and Win 8 are significantly better than XP, unfortunately for those finding it difficult to let go of XP – it is just a fact.Anonymous

December 01, 2011

What's to hate about Windows 7? Nothing! What's to hate about Windows 8? Keyboard & Mouse usability, it's a nightmare :(. There's some great ideas and new technologies in Windows 8; not least these new larger disk capabilities, but it's all spoilt by an unusable user interface.Anonymous

December 01, 2011

Keyboard & Mouse usability isn't complete. This is a developer preview, to get the API into the hands of developers. The UI is NOT finished and NOT polished and is STILL changing. Please stop judging Win8's UI by the incomplete UI in the pre-beta developer preview! It's just unconstructive whining at this point. If you have constructive comments, things you'd like to see, make those suggestions... but stop with the stupid insults and end-of-the-world drama!Anonymous

December 02, 2011

"It would help if you could do bi-weekly updates to this blog, with one of the updates being something consumers, not system admins and people who worry about how data is written to a hard drive (all 4 people), will get excited about." I have to agree with this. I'd love to see twice-a-week updates... one for a consumer aspect, and one for a technical aspect. I think that would keep a predictable drum-beat going foward. Once-a-week updates aren't often enough.Anonymous

December 02, 2011

Is support.microsoft.com/.../312067 fixed in Windows 8? If so I'll upgrade once it's out; if not I'll stick with Windows 7.Anonymous

December 02, 2011

In general the overall quality of drives has gone down substantially in the past few years. They may have gotten faster and bigger, but the reliability isn't that great anymore. Scary! The OS FS is very stable, but the hardware is not. What good is a stable OS if the hardware is going to die anyway? As a sys admin that supports over 700 users daily, I run into all kinds of disk-drive issues. I fear having to face a stack-o-DVDs and thumb drives while trying to salvage the data off of drives this big. This could be quite a nightmare, and in particular for Mr. and Mrs. Home-user that has many years of photos on their hard drive. What kind of backup solutions are offered with Windows 8? Getting the user to put in the effort to backup stuff is another matter, but having the tools in place is a good thing! Thanks again for the informative article.Anonymous

December 02, 2011

Please help, if possible, to download a free Windows 8 Professional, which would help with typing in a complicated language. Thanks to all.Anonymous

December 02, 2011

just download windows 8 professional to try itAnonymous

December 02, 2011

@Mark Something like this? I can't understand what Microsoft did with Windows 7 to slow it down so much. Is it because of DRM protected IO support (so windows 7 can play bluray discs)? Or the support for metadata built into explorer, or bloat-by-design to make Windows slower so they can sell more new PCs with Microsoft OS included? www.youtube.com/watch This also happens on a brand new sandy bridge computer my brother built. They said Windows 8 should use less ram and run on ARM touch tablets, so I hope they optimize disk IO performance, metadata, explorer extension and thumbnail support too.Anonymous

December 03, 2011

@DarkUltra 3 Dec 2011 7:28 AM: I'd like to know what they did to those installs as that speed of Win7 enum is too bad compared to any install I have (or had) across different HDDs. (No SSDs) AFAIK one of the main culprits would be a badly written filter driver. Also one should remember that I/O has lower priority than other user facing subsystems. (Sometimes drivers and HW could seriously impact performance of XP or when HDD had serious problems...) TL;DR: One shouldn't see too different performance between installs. If there is a big difference one needs to make sure that it is caused by the system and not by an application or drivers or by HW. Test with different file managers and some benchmarking tools (free ones do exist), and check for new drivers. Also Process Monitor can help to see file system and registry activity, if there are any programs doing extra work. (I think Mark Russinovich blogs.technet.com/.../markrussinovich or Performance team blogs.technet.com/.../askperf have some posts about such type of problems) Also DRM should have no effect until protected content is played.Anonymous

December 03, 2011

autocad 2012 - autocad jaws beta don't work on Developer previewAnonymous

December 03, 2011

@Chris Thanks for your comment, and yes, I agree with Paul of winsupersite.com about the one more click we have to do to be able to activate the window. I think it's best for Microsoft to put this function, as Paul mentioned, by default, to reduce the click fatigue. As for other users like you that don't like it to be the default function, I guess Microsoft will make an option for that, to be able to use one more click for the window to be activated/selected. So no worries here Chris. I also understand that with the keyboard shortcuts for navigating through the open thumbnail windows of an app in the taskbar, the user can hit the tab key, and using the space key, the user can activate/select the desired thumbnail/window. And considering users who just use the mouse mainly, hovering over a thumbnail preview of a window, then for the window to be selected automatically, it would be very convenient IMHO. I can also be contacted via the same username, just add 'us' at the end, and the rest is “@live.com”. Thank you.Anonymous

December 03, 2011

@Chris Thanks for your comment, and yes, I agree with Paul of winsupersite.com about the one more click we have to do to be able to activate the window. I think it’s best for Microsoft to put this function, as Paul has mentioned, by default, to reduce the click fatigue. As for the other users like you that don't like it to be the default function, I guess Microsoft will make an option for that too. So you would be able to use one more click for the window to be activated/selected. So no worries here Chris. I also understand that with the keyboard shortcuts for navigating through the open thumbnail windows of an app in the taskbar, the user can press the tab key, and using the space key, the user can activate/select the desired thumbnail/window. Considering users who just use the mouse mainly, hovering over a thumbnail preview of a window, then for the window to be selected automatically, it would be very convenient indeed IMHO. I can also be contacted via the same username, just add 'us' at the end, and the rest is “@live.com”. Thank you.Anonymous

December 04, 2011

Very glad to hear that so many improvements were made for storage. I especially liked the TechNet Platform Storage posts. But, we still can't do simple operations, like creating multiple partitions on a memory stick, or installing the OS on a memory stick. I recently needed to partition a memory stick, to create a FAT32 partition and an EXT2 partition. Disk Management options related to partitioning are grayed out, and diskpart allows me to create a partition, using half the space, then reports that the disk usage is 100% and doesn't allow me to create the second partition, on Windows Developer Preview:

DISKPART> list disk

  Disk ###  Status         Size     Free     Dyn  Gpt
  --------  -------------  -------  -------  ---  ---
  Disk 0    Online           74 GB      0 B
  Disk 1    Online          465 GB    11 MB
  Disk 2    Online          962 MB   962 MB

DISKPART> create partition primary size=400

DiskPart succeeded in creating the specified partition.

DISKPART> create partition primary size=400

No usable free extent could be found. It may be that there is insufficient free space to create a partition at the specified size and offset. Specify different size and offset values or don't specify either to create the maximum sized partition. It may be that the disk is partitioned using the MBR disk partitioning format and the disk contains either 4 primary partitions, (no more partitions may be created), or 3 primary partitions and one extended partition, (only logical drives may be created).

DISKPART> list partition

  Partition ###  Type              Size     Offset
  -------------  ----------------  -------  -------
  Partition 1    Primary            400 MB    64 KB

DISKPART> list disk

  Disk ###  Status         Size     Free     Dyn  Gpt
  --------  -------------  -------  -------  ---  ---
  Disk 0    Online           74 GB      0 B
  Disk 1    Online          465 GB    11 MB
  Disk 2    Online          962 MB      0 B

This is stupid and annoying.

And talking about bugs, when typing in the browser, Windows 8 seems to lose a lot of key presses. While typing this message, I almost gave up. It was so horrible that had to use Notepad for some parts, then I copy/pasted here. Sample text typed blindly to show the issue: ello Worldel orld ello rld ellWrld Hello World Hello World ello Wod llo rdl Actually, I typed "Hello World", correctly, eight times. I think most likely the changes related to spellchecking are the cause. The longer the text, the more key presses are lost. So I will probably have to post shorter comments :)

And, most importantly, we must have an option to use the classic start menu instead of the start screen. I used only Windows 8 on my main computer for the last two weeks, and the start screen is very annoying.

Anonymous

December 04, 2011

So, no Protogon? We still need to defrag? I think I'll use ext4 then...Anonymous

December 04, 2011

We still need to defrag??? Are you kidding me? Both, hfs+ and ext4 seem much better...Anonymous

December 04, 2011

It would be awesome if the music player tile itself had pause/play key in the start screen.....Anonymous

December 04, 2011

hfs+ or ext being better? I don't think so... (In some cases they just caught up with NTFS.)Anonymous

December 04, 2011

"Windows has one of the most advanced file systems of any operating system used broadly [...] we will define “very large capacity” disk drives as sizes > 2.2TB (per disk drive)" This is just ridiculous, now you are lying to yourself. NTFS is an OK file system, but not a state o the art file system. The problem here is that nobody else use the same definition. Others works like this: 3TB drives commercially available so we support it. I never had an issue with large drives or more than 4GB memory (o a 32bit system) under Linux. Actually I was forced to use Linux because of this. I mean I cannot format 3TB drive with Win 7. Then it is not a state of the art OS. Then it is a two years behind OSAnonymous

December 05, 2011

I like what you have done with Windows 8 so far, looking forward to a beta. Your Windows 7 tablets (HP Slate 500 or Samsung Series 7 etc) are better than the competition, but I think your marketing unit is doing a bad job. They don't seem to use online reputation management software (to listen and respond to what people are saying about MSFT and tablets or other things), and the market still thinks you don't have tablet devices. Even your CEO (MSFT) was asked by an investor why MSFT was late to tablets and he showed them the Samsung Series 7. Small suggestion: when a user right clicks on a file and clicks send to (drive F, etc), please give an option to directly target not just the drive location but a specific folder. Good work,Anonymous

December 05, 2011

@Adam Koncz 5 Dec 2011 6:26 AM:

1) Windows for desktop was limited on x86 to 4GB even with PAE, while the Server version can use PAE for more RAM. (AFAIK it wasn't that recommended unless a specific use case required it.) This was in part due to reported driver and software problems, and in part to drive adoption of x64 by limiting the licence.

2) Complaining about a two-year-old system being two years old and not being state-of-the-art is funny.

3) The 3TB limit is not a limit of NTFS. (AFAIK it is at most a limit of the partition manager.) Anyway I didn't hear about that and can't test it. (Prices jumped sooner than I got to order...) Anyway I recommend reading Windows Internals.

Anonymous

December 05, 2011

@jcitron You are quite right about the lesser reliability of newer hard drives. The problem is not limited to hard drives. NASA has researched the issues of 'tin whisker' growth associated with Pb-Free solders & has studied this in-depth. Their recommendation is to add 3% Pb content (as NASA does), yet under current legal requirements, the solution is unlikely to be implemented concerning consumer devices, especially in California or the European Union. nepp.nasa.gov/.../2011-kostic-Pb-free.pdf nepp.nasa.gov/.../index.html nepp.nasa.gov/.../index.html I note that the official USB-standard specifications recommend gold-alloy plated connectors, yet non-compliant manufacturers may use tin-plated connectors. These 'whiskers' are pervasive enough that they have been noted to 'grow' through circuit boards. Ask yourself how often you have seen tin-plated circuit boards on consumer-rated hard drives. @Mr. Sinofsky I'm wondering if the maximum file-size will be increased for Win8. If so, what will the impact be on defragmentation of the largest of files? I also note that MS-DOS start-up disk creation should be retained, as some techs are still forced to use this to assist in the creation of BIOS update media (as scary as the prospect may seem, it's not so when using well-maintained portable floppy drives & double-checked with proper SHA1-minimum hashes). I reason that this 'disk-creation' wizard would be unneeded, undesirable, & unsupported for systems utilizing UEFI &/or Safe-Boot, yet should be available to those with a BIOS. I cannot see MS releasing any certificate(s)/signatures for UEFI, nor would I advise so, IMHO, for the potential 'back-door' it could facilitate. Thank you again for continuing your excellent series of articles & your consideration.Anonymous

December 05, 2011

Hi Brian! Please ask to correct Russian version saying "when I was still in the elementary school". :) I was really surprised.Anonymous

December 05, 2011

I'm a bit lost here... can someone point to a comprehensive comparison between ZFS and NTFS? Including a comparison table like this:

FILE SYSTEM      ZFS      NTFS
MaxVolumeSize    X ZiB    Y ZiB
MaxFileSize
Feature1
Feature2

Anonymous

December 05, 2011

Alvaro, just FYI: there is a difference between NTFS limitations in general and limitations of the current ntfs.sys implementation.Anonymous

December 06, 2011

@A Win user 6 Dec 2011 3:27 AM: Are analysts any better than fortune tellers (whom nobody hopefully believes), especially when they have no prior data and trends? And IDC doesn't exactly have a stellar track record... At TechReport there are quite interesting comments on this "prediction": techreport.com/.../22111 Anyway I don't think experience on desktops is ruined either. (Mainly currently waiting for beta for better overview of changes)Anonymous

December 06, 2011

@Steven Sinofsky news.cnet.com/.../will-windows-8-be-irrelevant-to-regular-pc-users Please reply and post something, anything about improving/fixing the desktop experience for windows 8...Anonymous

December 06, 2011

@klimax Plenty of comments in the blogs about not liking the desktop experience in Windows 8 to support that.Anonymous

December 06, 2011

@Alvaro: can't vouch for the accuracy of this or how up-to-date it is, but this is a good starting point to compare various filesystems: en.wikipedia.org/.../Comparison_of_file_systems Also, as Igor points out (and is mentioned in footnote 21 of that page), the current implementation of NTFS and its theoretical limits are different.