First steps with Docker - Introduction

As you know, in the AppConsult team our focus is to help customers all around the world modernize their desktop applications: MSIX, XAML Islands, .NET Core 3.0, UWP. Anything that can help developers take their existing desktop applications to the next level.

What about the web world? I'm pretty sure that out there, especially in the enterprise world, many customers building web applications are facing the same challenges. They may have a lot of web apps that still work great and don't need a complete rewrite, but that would still benefit from supporting modern scenarios, like running in the cloud or scaling under high workloads.

With this idea in mind, I decided to step out of my comfort zone and start digging into something new: Docker and container technologies. Before we dig into this technology, let me make a premise: if your goal is to become a Docker master, feel free to look for other resources. There are plenty of great ones on the web. The goal of this series of posts is to share my experience with Docker from a newbie's point of view, so I will focus on highlighting all the challenges I've faced and how I solved them.

Are you ready? Let's go! This first post will be more theoretical, since it introduces the basic concepts you need to know to start using Docker. In the next posts we're going to be more practical and build some applications running inside a container.

Why Docker?

Docker is an open source platform based on container technology. You can think of a container as a siloed ecosystem (operating system, frameworks, etc.) which includes only the minimum setup needed to run your application. Thanks to Docker, you're able to package everything your application requires into a single unit that you can share and run on any computer.

Containers are often compared to virtual machines, but the comparison can be misleading. They serve a similar purpose, but they are very different technologies.

When you build a virtual machine, you generate an image that you can easily transfer to other computers, whether it's a server in the cloud or just another machine, much as you do with containers. However, virtual machines run on virtualized hardware and you need to assign a specific set of resources when you create them: how much RAM, how many CPU cores, how much disk space, etc.

Additionally, virtual machines take the opposite approach from containers: they provide everything offered by the platform and then, if needed, it's up to you to strip it down. As a consequence, virtual machine images are typically pretty big and slow to start up, since they need to boot a whole operating system.

A container, instead, runs from an image that is stripped down to the bare minimum, and it starts up super fast because it runs on top of the existing OS. This is another big difference from a virtual machine: containers share the host operating system (more precisely, its kernel) instead of running in a virtualized one. However, they run in a siloed way. The application doesn't have any notion of what's happening in the rest of the operating system: it isn't aware of other running processes; it can't access your file system or hardware resources; it can't directly connect to your network, but must use a bridge to the native one. On the other hand, since it's running on the bare machine, it has access to all the resources provided by the computer, unlike a virtual machine. If, for example, your container is a resource hog, by default nothing stops it from consuming all your RAM or all your CPU.

The concept of running processes in a container isn't new: Linux has provided this feature for a long time. However, thanks to Docker, these containers can now be easily packaged, deployed and distributed.

The best way to understand this behavior is to actually run one or more containers on your machine and list all the running processes. Let's say that you are running 3 SQL Server containers. If you check the list of processes running on your machine, besides the Docker service you will also find 3 instances of SQL Server, as if you were running the services directly on your host machine. This is a major difference compared to a virtual machine: if you run applications and services inside a virtual machine, they won't appear as processes running on the host. You will only see the process that runs the virtual machine itself.

As a consequence, another key difference is that a container doesn't offer a user interface. You can't connect with Remote Desktop or VNC and use your mouse to interact with the operating system running inside the container: only command line operations are supported. This impacts the kind of applications you can run inside a container. Docker is great for running console based applications or background services, like a web server, a database or a backend. It isn't made, for example, to run a WPF or Windows Forms application, because you wouldn't have any way to connect to it and interact with it.

This is why you won't find Docker images, for example, for desktop editions like Windows 10 or Windows Server with the full desktop experience. Instead, you will find images only for console based operating systems, like Linux distributions or Windows Server Core and Nano Server.

If you have ever worked with the Desktop Bridge and the Desktop App Converter, you will remember the same limitation when it comes to the kind of installers that you can convert: only silent installers are supported. This is exactly because the Desktop App Converter, under the hood, uses a Windows container to perform the installation and the packaging. The installer is executed inside a clean Windows 10 installation so that the tool can detect the changes performed by the setup. Since the container doesn't offer a user interface, you can't actually see the installer running and interact with it, so everything must happen without any user interaction.

Another big advantage of Docker is that when you create an image, you aren't deploying only your application, but also all the other dependencies (the operating system, frameworks, etc.) with the proper configuration. This means that there will be no differences between the testing environment and the production environment. Even if they are two different machines, all the relevant pieces for your application (configuration, OS version, framework version, etc.) will be exactly the same.

This is why we're seeing Docker integrated more and more into DevOps workflows. It's easy to plug Docker into a continuous integration pipeline and automatically generate a new image every time you commit new code to your web application.

Setting up Docker on your machine

Installing Docker on your Windows computer is really easy thanks to a dedicated setup, which you can download from https://www.docker.com/products/docker-desktop. The tool will take care of installing both components required to run Docker:

- The server, which is the service that handles the creation and the execution of the various containers

- The client, which is a command line tool that you can use to send commands to the server to take actions (run a container, build an image, etc.)

The two components are independent. You can use the CLI to connect to any server, including a remote one; it doesn't need to run on the same machine that hosts the Docker service.

The setup process is quite straightforward. However, at some point, you will be asked whether you want to use Windows or Linux containers. Let's see what this means.

Linux vs Windows

A container is usually a mix of operating system, frameworks and applications. As such, Docker supports multiple kinds of containers, based on the application you need to run. If you want to run a .NET Core website inside a container, you can opt in to a Linux based container, since .NET Core is cross platform. However, if you want to host IIS or an application built with the full .NET Framework, you will need a Windows container.

If you have understood how a container works under the hood, it will be easy for you to understand why you can't natively run a Linux container on Windows or the other way around: a container shares the kernel of the host operating system. It would be like trying to run a WPF or Windows Forms application on a Linux machine. It just can't work.

However, thanks to Hyper-V, Docker for Windows is able to support both kinds of containers. During the setup, you can choose which kind of containers you want to use by default. Right now, you can't mix them, but the Docker team is working closely with the Windows team to enable this. If you opt in for Windows containers, they will run directly on your Windows machine, in the same way Linux containers run on a Linux host (so in an isolated way, without direct communication with the full operating system).

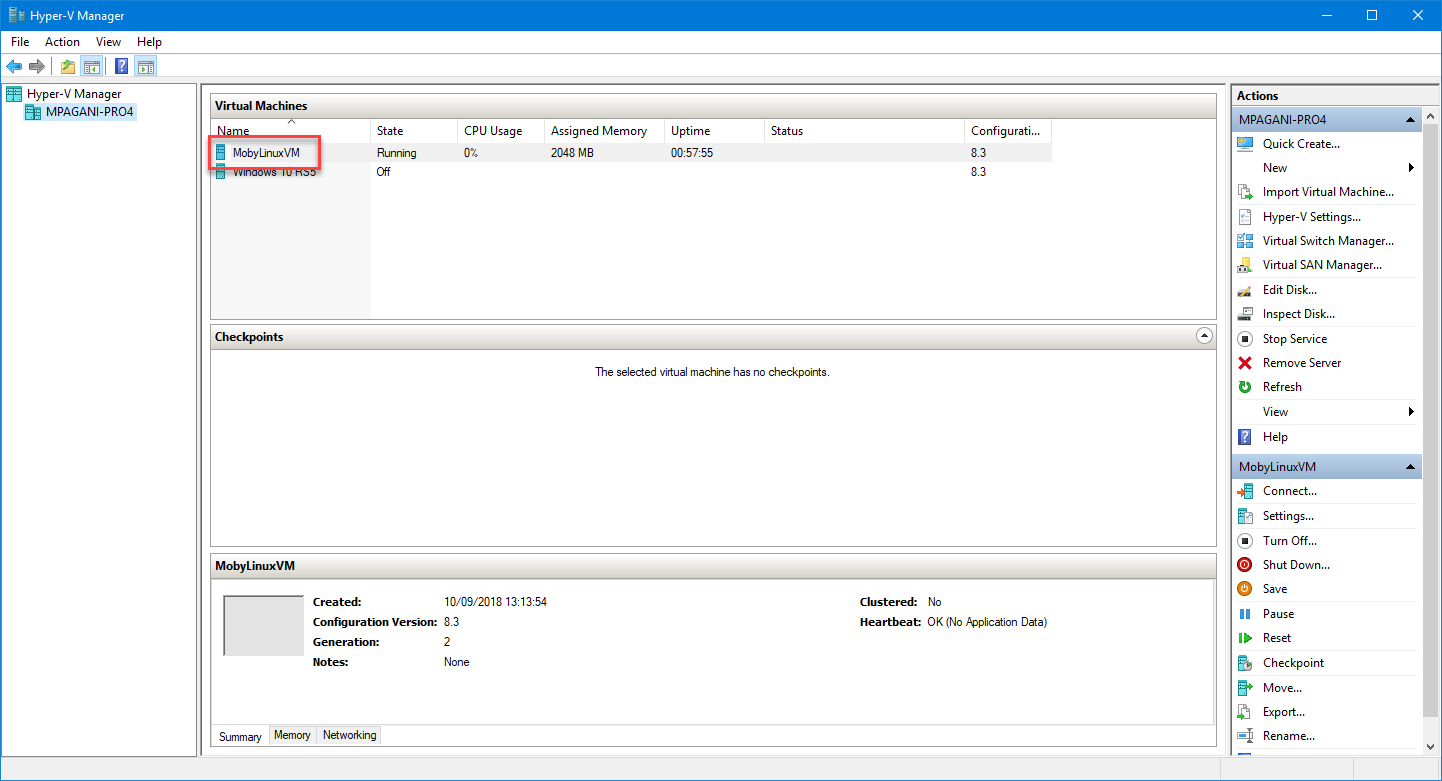

In case, instead, you opt-in for Linux containers, you will find at the end of the setup a new virtual machine in your Hyper-V console:

When you run a Docker container based on Linux, it will be executed inside this virtual machine.

As a consequence, in order to run Docker for Windows with Linux containers you need at least Windows 10 Pro or Enterprise and Hyper-V enabled in your Windows configuration.

For the samples in this series of posts, we're going to focus on Linux containers. However, all the concepts are exactly the same. The difference is only in the kind of applications that you can run.

Everything you need to know about images

A Docker image is the single unit which contains everything you need to run your application. When you run a container, you are running an instance of that image. As a result, the image is unique, while you can have multiple containers based on the same image running at the same time (for example, for scaling purposes).
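
To make the image/container relationship concrete, here is a toy model in Python. It is only an illustration under stated assumptions: `ToyEngine`, `Image` and `Container` are hypothetical names, not Docker's engine or API. The image is a frozen (immutable) template, and every "run" produces a separate container instance that merely references it.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Read-only template (toy model): frozen, so it can't change after creation."""
    name: str
    tag: str = "latest"


@dataclass
class Container:
    """One instance of an image, with its own identity."""
    container_id: int
    image: Image


class ToyEngine:
    """Hypothetical stand-in for the Docker service; not the real engine or its API."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.containers: list[Container] = []

    def run(self, image: Image) -> Container:
        # Every run creates a new container; the image itself is only read.
        container = Container(container_id=next(self._ids), image=image)
        self.containers.append(container)
        return container


# Scaling out: three containers created from one single image.
nginx = Image("nginx")
engine = ToyEngine()
replicas = [engine.run(nginx) for _ in range(3)]
print([c.container_id for c in replicas])        # [1, 2, 3]
print(all(c.image is nginx for c in replicas))   # True
```

All three containers point at the very same image object, which is the point: scaling means adding instances, not copying or changing the template.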

Typically images are built to run a single process. If your application requires multiple processes running together, you will create different images and then you will run a container for each of them. For example, let's say that you have a web application which connects to a set of REST APIs. In the Docker world, the web application and the Web API project won't run in the same container. Each of them will have a dedicated image and, if you need to deploy the full application, you will need to create an instance of both images. This is one of the reasons why containers are great for microservices-based solutions.

Docker images are hosted in a registry, which is the location from which you can pull them to your local machine so that you can start containers based on them. The most popular registry is Docker Hub, a centralized service where you can store and distribute your own images. Docker Hub also hosts a set of official images from various organizations. For example, Microsoft releases images for .NET Core or Windows Server, and you will find images for Ubuntu, Redis, MySQL, etc.

Docker also supports hosting your own registries, for example on-premises, in case you don't want to make your images available in the cloud, or in case you want to use another provider. For example, Azure offers a service called Azure Container Registry, which you can use to host your own Docker registry in your Azure account.

Every image is identified by a unique name, which is usually prefixed by the username of the user who created it: in order to publish images on Docker Hub, in fact, you need to register and choose a unique username. The only exception is official images, which can be recognized because they don't have the prefix. For example, the official sample of a minimal Docker container is named just hello-world, since it's published by the Docker team. The .NET Core image, instead, isn't coming from an official organization, so its name is microsoft/dotnet.

Please note: this doesn't mean that the .NET Core image isn't official, but just that Microsoft is treated like a regular user and not as an official organization.

Another important concept related to images is tagging. You can create different images with the same name but a different tag. This feature is mainly used to support versioning. Take a look at the official .NET Core images for Docker: the same image (called microsoft/dotnet) has multiple tags, like 2.1-sdk or 2.0-sdk. This allows you to keep multiple versions of the same image on the same machine, based on the version of the operating system / framework / application you want to use.

The tag is specified after the name of the image, separated by a colon. For example, if you want to download the 2.1 SDK for .NET Core, you use the identifier microsoft/dotnet:2.1-sdk. There's a special tag called latest, which is applied by default when you don't specify one and which, by convention, points to the most recent version of the image.
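
The naming and tagging rules above can be sketched as a small parser. This is a simplified model for Docker Hub names only: it ignores registry hostnames (like an Azure Container Registry address), port numbers and digests, and `parse_image_reference` is a hypothetical helper, not part of any Docker tool. It mirrors two behaviors visible in the pull output later in this post: a missing tag defaults to latest, and official images live under the library/ namespace.

```python
from typing import NamedTuple


class ImageReference(NamedTuple):
    namespace: str   # Docker Hub user/organization, or "library" for official images
    repository: str
    tag: str


def parse_image_reference(ref: str) -> ImageReference:
    """Split a Docker Hub reference like 'microsoft/dotnet:2.1-sdk' (simplified)."""
    name, sep, tag = ref.partition(":")
    if not sep:
        tag = "latest"  # no tag given: the default tag is applied
    if "/" in name:
        namespace, repository = name.split("/", 1)
    else:
        namespace, repository = "library", name  # official images have no prefix
    return ImageReference(namespace, repository, tag)


print(parse_image_reference("hello-world"))
# ImageReference(namespace='library', repository='hello-world', tag='latest')
print(parse_image_reference("microsoft/dotnet:2.1-sdk"))
# ImageReference(namespace='microsoft', repository='dotnet', tag='2.1-sdk')
```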

Typically an image can be used for two purposes:

- As it is, in case you just need to leverage a feature which is directly exposed by the image. This is a common scenario when the image hosts a service. For example, let's say that you want to add caching to your application and you want to leverage Redis, a very popular open source solution. Since Redis is a service, you will probably just pull the default image and use it as it is.

- As a building block for creating your own custom image. This is a very common scenario when the base image exposes a development framework or a platform. Let's take, for example, the .NET Core image from Microsoft. As it is, it's basically useless, because .NET Core itself doesn't do anything. What you want to do is to take the existing .NET Core image and create a new one on top of it, so that you can add your web application.

Throughout this series of posts we're going to see examples of both approaches.

Hello World

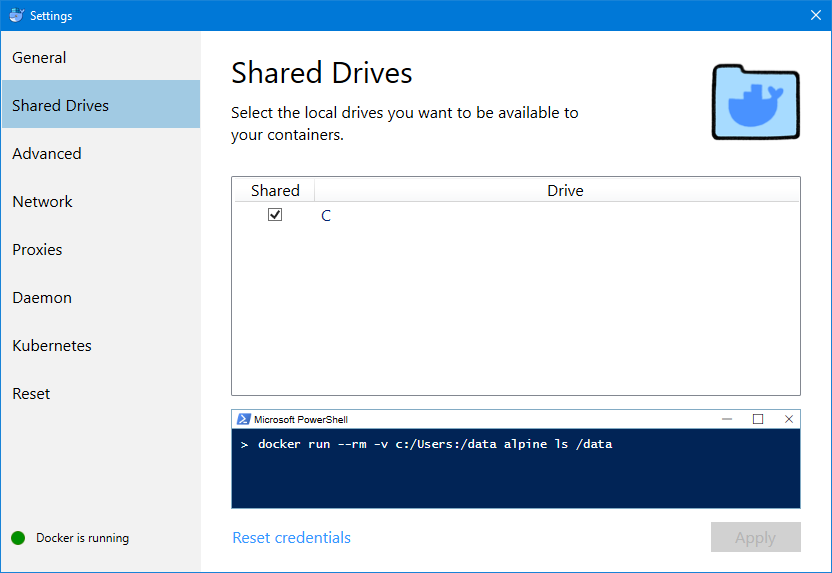

Let's see a Hello World in Docker and, to achieve this, we can leverage the official Hello World sample. First, however, we need to make our hard drive available to the Docker service, so that containers can access it to store data. Docker for Windows makes the operation very easy. If Docker is up & running, you can see a whale icon (the Docker logo) in your system tray. Right click on it, choose Settings, then move to Shared Drives.

You will find a list of all the drives on your computer:

Select the drive you want to share and press Apply. You will be asked for the credentials of a user who has admin access to the selected drive.

Another interesting section you may want to look at is Advanced, which allows you to configure the Hyper-V virtual machine that hosts your Linux containers. Thanks to this option, you can limit or increase the resources (CPU, RAM, hard disk size) available to your containers.

Once you have verified that everything is up and running, we can start using the Docker CLI. When you install Docker for Windows, the CLI is available in every console you have in Windows, so feel free to choose the one you prefer: a regular command prompt or a PowerShell prompt.

You can check that everything is working as expected by typing docker version:

```
PS C:\Users\mpagani> docker version
Client:
 Version:           18.06.1-ce
 API version:       1.38
 Go version:        go1.10.3
 Git commit:        e68fc7a
 Built:             Tue Aug 21 17:21:34 2018
 OS/Arch:           windows/amd64
 Experimental:      false

Server:
 Engine:
  Version:          18.06.1-ce
  API version:      1.38 (minimum version 1.12)
  Go version:       go1.10.3
  Git commit:       e68fc7a
  Built:            Tue Aug 21 17:29:02 2018
  OS/Arch:          linux/amd64
  Experimental:     false
```

The output should be similar to the one above. We can see that both the client and the server are installed on our machine. The client is built for Windows, while the server is running on Linux. This is expected, because during the setup we chose to use Linux containers.

Now we can pull the image from Docker Hub by using the pull command:

```
PS C:\Users\mpagani> docker pull hello-world
Using default tag: latest
latest: Pulling from library/hello-world
d1725b59e92d: Pull complete
Digest: sha256:0add3ace90ecb4adbf7777e9aacf18357296e799f81cabc9fde470971e499788
Status: Downloaded newer image for hello-world:latest
```

Docker has found an image called hello-world on Docker Hub. Since we haven't specified a tag, the tool automatically applies the default latest tag. After the download has completed, you can use the docker image list command to see it:

```
hello-world latest 4ab4c602aa5e 2 days ago 1.84kB
```

The output shows the name of the image, the tag, a unique identifier, when the image was created and its size.

However, with this command we have just pulled the image. We haven't executed it and turned it into a container. We can check this by running the docker ps command, which lists all the running containers:

```
PS C:\Users\mpagani> docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
PS C:\Users\mpagani>
```

As expected, the list is empty. So, let's run it! The command to take an image and execute it inside a container is docker run, followed by the name of the image.

```
PS C:\Users\mpagani> docker run hello-world

Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/

For more examples and ideas, visit:
 https://docs.docker.com/get-started/
```

Our container has been launched and it has executed the application contained in the image. In this case, the application just displays the text output you can see above. Now let's try to run the docker ps command again:

```
PS C:\Users\mpagani> docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
PS C:\Users\mpagani>
```

The list is still empty! The reason is that what we have launched isn't a service or a daemon, like a web server or a DBMS, but a console application. Once it has completed its task (in this case, displaying the message), the process is terminated and, as such, the container is stopped.

Please notice: the pull operation we performed is optional. Docker, in fact, is smart enough to download an image first if, when we execute the docker run command, the requested image isn't available locally. As such, if we had directly executed the docker run hello-world command, Docker would have downloaded the hello-world image first and then we would have seen the text message.
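
The two behaviors just described (docker run pulls a missing image first, and the container stops as soon as its main process exits) can be modeled with a small in-memory sketch. Everything here is hypothetical: a dict plays the role of Docker Hub, another plays the local image cache, and a Python callable stands in for the process the image runs. The "Unable to find image" message mimics the CLI output that appears later in this post; this is not how the Docker daemon is implemented.

```python
from typing import Callable

# Hypothetical stand-ins: a "remote registry" and the local image cache.
# Each image is reduced to the main process it runs (a Python callable).
REGISTRY: dict[str, Callable[[], str]] = {
    "hello-world:latest": lambda: "Hello from Docker!",
}
local_images: dict[str, Callable[[], str]] = {}


def pull(ref: str) -> None:
    """Copy an image from the registry into the local cache (KeyError if unknown)."""
    local_images[ref] = REGISTRY[ref]


def run(ref: str) -> tuple[str, str]:
    """Toy 'docker run': returns (output, final container status)."""
    if ref not in local_images:
        print(f"Unable to find image '{ref}' locally")
        pull(ref)  # implicit pull, as docker run does for missing images
    main_process = local_images[ref]
    output = main_process()  # the container lives only while this process runs...
    return output, "exited"  # ...and is stopped as soon as it returns


output, status = run("hello-world:latest")
print(output)  # Hello from Docker!
print(status)  # exited
```

A console application like hello-world returns immediately, so its container ends up stopped; a service such as a web server keeps its main process alive, which is what the next example shows.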

Running a service in a container

Let's now see a more meaningful example, with a container that actually stays alive and can be leveraged by other applications. Let's start by pulling a new image called nginx:

```
PS C:\Users\mpagani> docker pull nginx
Using default tag: latest
latest: Pulling from library/nginx
802b00ed6f79: Already exists
e9d0e0ea682b: Pull complete
d8b7092b9221: Pull complete
Digest: sha256:24a0c4b4a4c0eb97a1aabb8e29f18e917d05abfe1b7a7c07857230879ce7d3d3
Status: Downloaded newer image for nginx:latest
```

NGINX is a lightweight and powerful web server, which we can use to host and deliver our web applications.

Now that we have the image, let's spin up a container from it:

```
PS C:\Users\mpagani> docker run -d -p 8080:80 nginx
6d986b5dc78f17e2bc6f7fd77557fa685fe7c3de0cd6c087fbdc0564e28188e1
```

As you can see, the command we have issued is a little bit different. We have added a couple of parameters:

- -d means "detached". This is the best option when you need to run a background service, like in this case. The container is started and then control is returned to the console.

- -p 8080:80 maps a port inside the container to a port on your host machine. In this case, we are telling Docker to expose port 80 of the container as port 8080 on our machine. NGINX, like every web server, listens on port 80 by default. However, since the container is isolated, we need to forward the port to the host machine in order to reach it.
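
To see how a -p value relates to the PORTS column that docker ps shows a bit later (0.0.0.0:8080->80/tcp), here is a simplified parser. It's a sketch, not Docker's own code: it only handles the `[ip:]hostPort:containerPort[/protocol]` form, and it assumes Docker's defaults of binding to all host interfaces (0.0.0.0) and using TCP when those parts are omitted. `parse_publish` and `describe` are hypothetical names.

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host_ip: str
    host_port: int
    container_port: int
    protocol: str


def parse_publish(value: str) -> PortMapping:
    """Parse a simplified -p value: [ip:]hostPort:containerPort[/protocol]."""
    ports, _, protocol = value.partition("/")
    parts = ports.split(":")
    if len(parts) == 2:
        host_ip = "0.0.0.0"  # assumed default: listen on all host interfaces
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {value!r}")
    return PortMapping(host_ip, int(host_port), int(container_port), protocol or "tcp")


def describe(mapping: PortMapping) -> str:
    """Format a mapping like the PORTS column of docker ps."""
    return f"{mapping.host_ip}:{mapping.host_port}->{mapping.container_port}/{mapping.protocol}"


print(describe(parse_publish("8080:80")))  # 0.0.0.0:8080->80/tcp
```

Reading the result from left to right follows the traffic: a request to port 8080 on the host is forwarded to port 80 inside the container.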

Now run the docker ps command again. This time, you will see something different:

```
docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
d3a25eb94817 nginx "nginx -g 'daemon of…" 5 seconds ago Up 3 seconds 0.0.0.0:8080->80/tcp wizardly_curran
```

Now we have a container up & running! We can see some information about it, like its identifier, the image used to create it, the port forwarding, etc.

Since the container is exposed to our machine, we can test it just by opening a browser and hitting the URL http://localhost:8080. You should see the default NGINX page.

Pretty cool, isn't it? We are running a web server without having actually installed anything on our machine. This is a nice example of how Docker can be helpful beyond web development: you can also use it to test services and frameworks without messing up your machine. Once you no longer need the NGINX server, you can simply run the docker stop command followed by the id of the container:

```
docker stop d3a25eb94817
d3a25eb94817
```

And if you want to completely get rid of the web server, you can delete its image by using the docker rmi nginx command.

From this point of view, this approach isn't very different from what we did with desktop applications using the Desktop Bridge. When we package an application, in fact, many operations are isolated, like writing to the registry. This way, when we uninstall the app, there are no leftovers and there is no impact on the machine.

Stopping a container vs deleting a container

If you try to remove the nginx image with the docker rmi command, you may get an error like the following one:

```
PS C:\Users\mpagani> docker rmi nginx
Error response from daemon: conflict: unable to remove repository reference "nginx" (must force) - container 302e8bd6aa6b is using its referenced image 06144b287844
```

The reason is that, by default, a container isn't removed when you stop it. You won't see it with the docker ps command, because it lists only the running containers, so you need to add the -a parameter:

```
PS C:\Users\mpagani> docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
7baadb044e9d nginx "nginx -g 'daemon of…" About a minute ago Exited (0) 7 seconds ago blissful_wing
```

This is helpful when you want to maintain the state across multiple executions of a container. However, in some cases, you want to automatically destroy a container once its task has been completed. For example, in our case we just need to host a simple web application, so we don't need its state to be kept over time.

We can achieve this goal by adding the --rm parameter to our docker run command:

```
PS C:\Users\mpagani> docker run --rm -d -p 8080:80 nginx
a627c79af9cf968074f92f71838af5cd5abc4ffcacb37f6faa82845668be1dcf
```

If you now stop this container and run docker ps -a again, you will notice that it no longer appears in the list.
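
The difference between stopping and removing, and why docker rmi complains, can be summarized as a toy state model. This is a hypothetical in-memory sketch (`ToyDocker` and its short container IDs are made up), not Docker's implementation: stopped containers stay around and only show up with the "all" flag, like docker ps -a; containers started with the --rm equivalent are deleted as soon as they stop; and removing an image fails while any container, running or stopped, still references it.

```python
class ToyDocker:
    """Hypothetical in-memory model of the container lifecycle; not Docker's real API."""

    def __init__(self) -> None:
        self.images = {"nginx"}  # pretend nginx has already been pulled
        # container id -> {"image": ..., "status": ..., "auto_remove": ...}
        self.containers: dict[str, dict] = {}
        self._counter = 0

    def run(self, image: str, rm: bool = False) -> str:
        self._counter += 1
        cid = f"c{self._counter}"
        self.containers[cid] = {"image": image, "status": "running", "auto_remove": rm}
        return cid

    def stop(self, cid: str) -> None:
        if self.containers[cid]["auto_remove"]:
            del self.containers[cid]                   # like --rm: deleted on stop
        else:
            self.containers[cid]["status"] = "exited"  # kept, visible with ps -a

    def ps(self, show_all: bool = False) -> list[str]:
        return [cid for cid, c in self.containers.items()
                if show_all or c["status"] == "running"]

    def rm(self, cid: str) -> None:
        if self.containers[cid]["status"] == "running":
            raise RuntimeError(f"cannot remove running container {cid}; stop it first")
        del self.containers[cid]

    def rmi(self, image: str) -> None:
        # Any container referencing the image, even a stopped one, blocks removal.
        users = [cid for cid, c in self.containers.items() if c["image"] == image]
        if users:
            raise RuntimeError(f"conflict: container {users[0]} is using image {image}")
        self.images.discard(image)


d = ToyDocker()
first = d.run("nginx")
d.stop(first)
print(d.ps(), d.ps(show_all=True))  # [] ['c1']
try:
    d.rmi("nginx")
except RuntimeError as err:
    print(err)                      # conflict: container c1 is using image nginx
d.rm(first)
second = d.run("nginx", rm=True)
d.stop(second)
print(d.ps(show_all=True))          # []
d.rmi("nginx")
print(d.images)                     # set()
```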

Running an interactive container

The last example I want to show you is about running a container in interactive mode, which means that you can connect to it and run commands inside it. Let's see an example with a Linux distribution like Ubuntu.

To start the interactive mode you need to pass the -it parameter (a combination of -i, which keeps the standard input open, and -t, which allocates a terminal), like in the following sample:

```
PS C:\Users\mpagani> docker run -it ubuntu
Unable to find image 'ubuntu:latest' locally
latest: Pulling from library/ubuntu
124c757242f8: Pull complete
9d866f8bde2a: Pull complete
fa3f2f277e67: Pull complete
398d32b153e8: Pull complete
afde35469481: Pull complete
Digest: sha256:de774a3145f7ca4f0bd144c7d4ffb2931e06634f11529653b23eba85aef8e378
Status: Downloaded newer image for ubuntu:latest
root@d1f4c9bd79ee:/#
```

As you can see from the previous snippet, after executing the docker run command we aren't returned to PowerShell; instead, we are connected to a terminal inside the container. Since we are using Ubuntu, we can issue any Linux command, like ls:

```
root@d1f4c9bd79ee:/# ls
bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var
```

As you can see, we can explore the full content of the operating system. By using this image, we can also experience firsthand how containers are based on a stripped down version of the OS, in order to be as lightweight as possible. For example, try to run the nano or vi commands, which launch two very popular text editors. Both commands will return a command not found error, because neither tool is installed. If you want to add nano, you will need to execute apt-get update (the image ships without the package lists) followed by apt-get install nano. In case you have never used it, apt-get is the package manager of Debian-based distributions like Ubuntu.

However, remember that when you perform these commands, you are working with a container, not with the image. If you run a new instance of the ubuntu image, the nano editor will still be missing. If you want a Ubuntu image with nano preinstalled, you will have to create your own custom image using the Ubuntu one as a starting point. But this is a topic for the next blog post 😃
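
Why does nano disappear in a new container? Docker stacks a thin writable layer on top of the image's read-only layers, and every change made inside a container lands in that container's own layer. The toy model below sketches the idea with Python's `collections.ChainMap`, where writes always go to the first mapping and lookups fall through to the ones behind it. The file paths and contents are made-up illustrations; this is an analogy, not how Docker actually stores files.

```python
from collections import ChainMap
from types import MappingProxyType

# Illustrative read-only image layer: file path -> contents (made-up values).
image_layer = MappingProxyType({
    "/bin/bash": "<bash binary>",
    "/etc/os-release": 'NAME="Ubuntu"',
})


def new_container() -> ChainMap:
    """Each container gets its own empty writable layer on top of the shared image."""
    return ChainMap({}, image_layer)


first = new_container()
first["/usr/bin/nano"] = "<nano binary>"  # stands in for 'apt-get install nano'

second = new_container()                   # a fresh container from the same image
print("/usr/bin/nano" in first)            # True
print("/usr/bin/nano" in second)           # False
print("/usr/bin/nano" in image_layer)      # False: the image was never modified
print(second["/etc/os-release"])           # NAME="Ubuntu"
```

Baking nano into the image itself means producing a new image with an extra layer, which is exactly what building a custom image does.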

Wrapping up

Docker is, without any doubt, one of the most successful open source projects in tech history. Despite being fairly new (it was released in 2013), it quickly became a standard in the industry.

In this post we have seen an introduction to this platform, which allows you to run your applications inside a container.

Thanks to this approach, you can deploy an application being sure that it's configured exactly in the right way and with all the right dependencies. When you create a Docker image, in fact, you aren't just sharing the application, but the whole required stack including the operating system and frameworks. This approach helps to be more confident in the deployment phase, because there will be no differences between the testing and the production environment.

Additionally, since containers represent a single unit of work, it's easy to scale and to support heavy workloads, since you can spin up additional containers from the same image very quickly.

In the next post we'll put into practice what we have learned and we'll start creating a web application, which will be hosted in a Docker container.

Happy deploying!