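Composition swapchain code examples

These code examples use the Windows Implementation Library (WIL). A convenient way to install WIL is to open your project in Visual Studio, click Project > Manage NuGet Packages... > Browse, type or paste Microsoft.Windows.ImplementationLibrary into the search box, select the item in the search results, and then click Install to install the package for that project.

Example 1 - Create a presentation manager on a system that supports the composition swapchain API

As mentioned previously, the composition swapchain API requires a supporting driver to function. The following example shows how an application can create a presentation manager if the system supports the API. This is a TryCreate-style function that returns a presentation manager only if the API is supported. It also shows how to correctly create the Direct3D device that backs the presentation manager.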

C++ example

```cpp
bool TryCreatePresentationManager(
    _In_ bool requestDirectPresentation,
    _Out_ ID3D11Device** ppD3DDevice,
    _Outptr_opt_result_maybenull_ IPresentationManager** ppPresentationManager)
{
    // Null the presentation manager and Direct3D device initially.
    *ppD3DDevice = nullptr;
    *ppPresentationManager = nullptr;

    // Direct3D device creation flags. The composition swapchain API requires that applications
    // disable internal driver threading optimizations, as these optimizations are incompatible
    // with the composition swapchain API. If this flag is not present, then the API will fail the
    // call to create the presentation factory.
    UINT deviceCreationFlags =
        D3D11_CREATE_DEVICE_BGRA_SUPPORT |
        D3D11_CREATE_DEVICE_SINGLETHREADED |
        D3D11_CREATE_DEVICE_PREVENT_INTERNAL_THREADING_OPTIMIZATIONS;

    // Create the Direct3D device.
    com_ptr_failfast<ID3D11DeviceContext> d3dDeviceContext;
    FAIL_FAST_IF_FAILED(D3D11CreateDevice(
        nullptr,                   // No adapter
        D3D_DRIVER_TYPE_HARDWARE,  // Hardware device
        nullptr,                   // No module
        deviceCreationFlags,       // Device creation flags
        nullptr, 0,                // Highest available feature level
        D3D11_SDK_VERSION,         // API version
        ppD3DDevice,               // Resulting interface pointer
        nullptr,                   // Actual feature level
        &d3dDeviceContext));       // Device context

    // Call the composition swapchain API export to create the presentation factory.
    com_ptr_failfast<IPresentationFactory> presentationFactory;
    FAIL_FAST_IF_FAILED(CreatePresentationFactory(
        (*ppD3DDevice),
        IID_PPV_ARGS(&presentationFactory)));

    // Determine whether the system is capable of supporting the composition swapchain API based
    // on the capability that's reported by the presentation factory. If your application
    // wants direct presentation (that is, presentation without the need for DWM to
    // compose, using MPO or iflip), then we query for direct presentation support.
    bool isSupportedOnSystem;
    if (requestDirectPresentation)
    {
        isSupportedOnSystem = presentationFactory->IsPresentationSupportedWithIndependentFlip();
    }
    else
    {
        isSupportedOnSystem = presentationFactory->IsPresentationSupported();
    }

    // Create the presentation manager if it is supported on the current system.
    if (isSupportedOnSystem)
    {
        FAIL_FAST_IF_FAILED(presentationFactory->CreatePresentationManager(ppPresentationManager));
    }

    return isSupportedOnSystem;
}
```

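Example 2 - Create a presentation manager and a presentation surface

The following example shows how an application creates a presentation manager and a presentation surface to bind to a visual in a visual tree. Subsequent examples show how to bind the presentation surface to DirectComposition and Windows.UI.Composition visual trees.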

C++ example

```cpp
bool MakePresentationManagerAndPresentationSurface(
    _Out_ ID3D11Device** ppD3dDevice,
    _Out_ IPresentationManager** ppPresentationManager,
    _Out_ IPresentationSurface** ppPresentationSurface,
    _Out_ unique_handle& compositionSurfaceHandle)
{
    // Null the output pointers initially.
    *ppD3dDevice = nullptr;
    *ppPresentationManager = nullptr;
    *ppPresentationSurface = nullptr;
    compositionSurfaceHandle.reset();

    com_ptr_failfast<IPresentationManager> presentationManager;
    com_ptr_failfast<IPresentationSurface> presentationSurface;
    com_ptr_failfast<ID3D11Device> d3d11Device;

    // Call the function we defined previously to create a Direct3D device and presentation manager, if
    // the system supports it.
    if (TryCreatePresentationManager(
            true, // Request presentation with independent flip.
            &d3d11Device,
            &presentationManager) == false)
    {
        // Return 'false' out of the call if the composition swapchain API is unsupported. Assume the caller
        // will handle this somehow, such as by falling back to DXGI.
        return false;
    }

    // Use DirectComposition to create a composition surface handle.
    FAIL_FAST_IF_FAILED(DCompositionCreateSurfaceHandle(
        COMPOSITIONOBJECT_ALL_ACCESS,
        nullptr,
        compositionSurfaceHandle.addressof()));

    // Create presentation surface bound to the composition surface handle.
    FAIL_FAST_IF_FAILED(presentationManager->CreatePresentationSurface(
        compositionSurfaceHandle.get(),
        presentationSurface.addressof()));

    // Return the Direct3D device, presentation manager, and presentation surface to the caller for future
    // use.
    *ppD3dDevice = d3d11Device.detach();
    *ppPresentationManager = presentationManager.detach();
    *ppPresentationSurface = presentationSurface.detach();

    // Return 'true' out of the call if the composition swapchain API is supported and we were able to
    // create a presentation manager.
    return true;
}
```

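Example 3 - Bind a presentation surface to a Windows.UI.Composition surface brush

The following example shows how an application binds the composition surface handle that's bound to a presentation surface (as created in the previous example) to a Windows.UI.Composition (WinComp) surface brush, which can then be bound to a sprite visual in the application's visual tree.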

C++ example

```cpp
void BindPresentationSurfaceHandleToWinCompTree(
    _In_ ICompositor* pCompositor,
    _In_ ISpriteVisual* pVisualToBindTo, // The sprite visual we want to bind to.
    _In_ unique_handle& compositionSurfaceHandle)
{
    // QI an interop compositor from the passed compositor.
    com_ptr_failfast<ICompositorInterop> compositorInterop;
    FAIL_FAST_IF_FAILED(pCompositor->QueryInterface(IID_PPV_ARGS(&compositorInterop)));

    // Create a composition surface for the presentation surface's composition surface handle.
    com_ptr_failfast<ICompositionSurface> compositionSurface;
    FAIL_FAST_IF_FAILED(compositorInterop->CreateCompositionSurfaceForHandle(
        compositionSurfaceHandle.get(),
        &compositionSurface));

    // Create a composition surface brush, and bind the surface to it.
    com_ptr_failfast<ICompositionSurfaceBrush> surfaceBrush;
    FAIL_FAST_IF_FAILED(pCompositor->CreateSurfaceBrush(&surfaceBrush));
    FAIL_FAST_IF_FAILED(surfaceBrush->put_Surface(compositionSurface.get()));

    // Bind the brush to the visual.
    auto brush = surfaceBrush.query<ICompositionBrush>();
    FAIL_FAST_IF_FAILED(pVisualToBindTo->put_Brush(brush.get()));
}
```

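Example 4 - Bind a presentation surface to a DirectComposition visual

The following example shows how an application binds a presentation surface to a DirectComposition (DComp) visual in a visual tree.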

C++ example

```cpp
void BindPresentationSurfaceHandleToDCompTree(
    _In_ IDCompositionDevice* pDCompDevice,
    _In_ IDCompositionVisual* pVisualToBindTo,
    _In_ unique_handle& compositionSurfaceHandle) // The composition surface handle that was
                                                  // passed to CreatePresentationSurface.
{
    // Create a DComp surface to wrap the presentation surface.
    com_ptr_failfast<IUnknown> dcompSurface;
    FAIL_FAST_IF_FAILED(pDCompDevice->CreateSurfaceFromHandle(
        compositionSurfaceHandle.get(),
        &dcompSurface));

    // Bind the presentation surface to the visual.
    FAIL_FAST_IF_FAILED(pVisualToBindTo->SetContent(dcompSurface.get()));
}
```

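Example 5 - Set the alpha mode and color space on a presentation surface

The following example shows how an application sets the alpha mode and color space on a presentation surface. The alpha mode describes whether and how the alpha channel in the texture is interpreted. The color space describes the color space that the texture's pixels refer to.

All property updates like these take effect as part of the application's next present, atomically along with any buffer updates that are part of that present. If the application needs to, it can also issue a present that updates no buffers and carries only property updates. Any presentation surface whose buffer isn't updated in a given present stays bound to whatever buffer it was bound to before that present.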
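The commit semantics described above can be pictured with a small model. The following is a hypothetical sketch in portable C++ with no Windows dependencies: `SurfaceModel` and `ManagerModel` are illustrative names, not composition swapchain API types. Setters only stage changes, and `Present()` applies every surface's staged buffer and property changes in one step; a surface without a staged buffer keeps its previous binding.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// What the compositor is currently showing for one presentation surface.
struct SurfaceState {
    int boundBuffer = -1;              // -1 means "no buffer bound"
    std::string alphaMode = "IGNORE";  // illustrative stand-in for a DXGI_ALPHA_MODE value
};

// Hypothetical model of one presentation surface: staged changes vs. committed state.
struct SurfaceModel {
    SurfaceState committed;
    std::optional<int> pendingBuffer;         // staged by SetBuffer (last writer wins)
    std::optional<std::string> pendingAlpha;  // staged by SetAlphaMode

    void SetBuffer(int buffer) { pendingBuffer = buffer; }
    void SetAlphaMode(std::string mode) { pendingAlpha = std::move(mode); }
};

// Hypothetical model of a presentation manager that owns several surfaces.
struct ManagerModel {
    std::vector<SurfaceModel> surfaces;

    // Applies the staged changes of every surface in one step. A surface with no staged
    // buffer keeps the buffer it was bound to before this present.
    void Present() {
        for (auto& surface : surfaces) {
            if (surface.pendingBuffer) surface.committed.boundBuffer = *surface.pendingBuffer;
            if (surface.pendingAlpha) surface.committed.alphaMode = *surface.pendingAlpha;
            surface.pendingBuffer.reset();
            surface.pendingAlpha.reset();
        }
    }
};
```

In the real API this staging lives inside the presentation surface and presentation manager; the model only illustrates why a present that updates no buffers still commits property changes.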

C++ example

```cpp
void SetAlphaModeAndColorSpace(
    _In_ IPresentationSurface* pPresentationSurface)
{
    // Set the alpha mode: ignore the alpha channel and treat the content as opaque.
    FAIL_FAST_IF_FAILED(pPresentationSurface->SetAlphaMode(DXGI_ALPHA_MODE_IGNORE));

    // Set the color space to full-range RGB with gamma 2.2 and BT.709 primaries (sRGB).
    FAIL_FAST_IF_FAILED(pPresentationSurface->SetColorSpace(DXGI_COLOR_SPACE_RGB_FULL_G22_NONE_P709));
}
```

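Example 6 - Set letterboxing margins on a presentation surface

The following example shows how an application specifies letterboxing margins on a presentation surface. Letterboxing fills a specified area outside the surface content itself to account for differing aspect ratios between the content and the display device. A good example is the black bars usually shown above and below a movie formatted for widescreen theaters when it's watched on a PC. The composition swapchain API lets you specify the margins in which letterboxing is rendered in such cases.

Currently, the letterboxing area is always filled with opaque black.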
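For the aspect-ratio case, the margins are simple arithmetic. The sketch below is a hypothetical helper (`FitWithLetterbox` and `LetterboxFit` are not part of the API) that scales content uniformly to fit a display area and splits the leftover space evenly between opposite margins. It returns them in left, top, right, bottom order, the order in which the SetContentLayoutAndFill example passes margins to `SetLetterboxingMargins`.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical result: uniform scale for the content plus margins in display pixels.
struct LetterboxFit {
    float scale;
    float left, top, right, bottom;
};

// Fits a contentW x contentH image into a displayW x displayH area without distortion.
// The leftover space on each axis is split evenly between the two opposite margins.
LetterboxFit FitWithLetterbox(float contentW, float contentH, float displayW, float displayH) {
    assert(contentW > 0 && contentH > 0 && displayW > 0 && displayH > 0);
    const float scale = std::min(displayW / contentW, displayH / contentH);
    const float marginX = (displayW - contentW * scale) / 2.0f;
    const float marginY = (displayH - contentH * scale) / 2.0f;
    return { scale, marginX, marginY, marginX, marginY };
}
```

For a 1920x800 film on a 1920x1080 display this yields a scale of 1 and 140-pixel top and bottom margins; content narrower than the display produces left and right margins instead.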

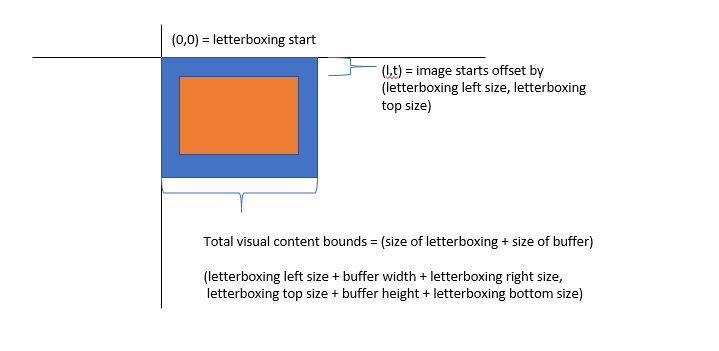

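As visual tree content, a presentation surface exists in the coordinate space of its host visual. When letterboxing is present, the origin of the visual's coordinate space corresponds to the top-left corner of the letterboxing. That is, the buffer itself sits at an offset (the left and top margins) within the visual's coordinate space. The bounds are computed as the size of the buffer plus the size of the letterboxing.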

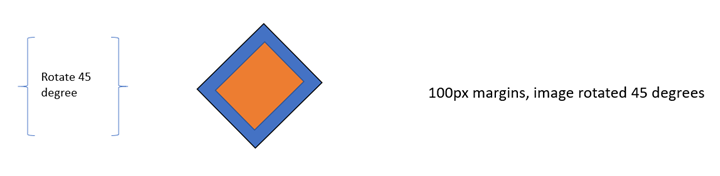

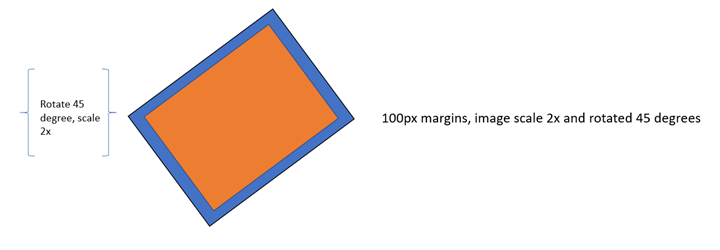

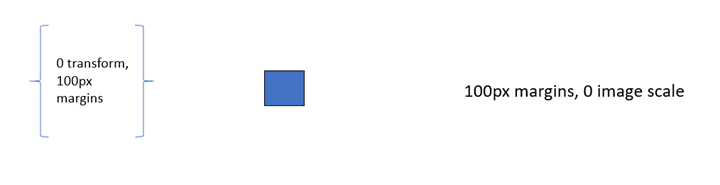

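These figures illustrate where the buffer and the letterboxing sit in the visual's coordinate space, and how the bounds are computed.

Finally, if the transform applied to the presentation surface includes an offset, the area not covered by the buffer or the letterboxing is considered outside the content bounds and is treated as transparent, just as any other visual tree content is handled outside its content bounds.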
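The layout rule above can be written out directly. This hypothetical sketch (plain C++, illustrative names) places the buffer at an offset of the left and top margins within the host visual's coordinate space, whose origin is the letterboxing's top-left corner, and computes the bounds as the buffer size plus the margins. It deliberately ignores the presentation surface's transform.

```cpp
#include <cassert>

struct RectF { float left, top, right, bottom; };

// Hypothetical result: positions in the host visual's coordinate space, before the
// presentation surface's transform is applied.
struct LetterboxLayout {
    RectF bufferRect;  // area covered by the buffer content
    RectF bounds;      // buffer plus letterboxing; origin is the letterboxing's top-left
};

LetterboxLayout ComputeLayout(float bufferW, float bufferH,
                              float left, float top, float right, float bottom) {
    assert(bufferW >= 0 && bufferH >= 0);
    LetterboxLayout layout{};
    layout.bufferRect = { left, top, left + bufferW, top + bufferH };
    layout.bounds = { 0.0f, 0.0f, left + bufferW + right, top + bufferH + bottom };
    return layout;
}
```

With a 100x100 buffer and 100-pixel top and bottom margins, the buffer occupies y = 100 to 200 and the bounds span 100x300.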

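Letterboxing and transform interaction

To keep letterboxing margins a consistent size regardless of the scale the application applies to the buffer to fill some content area, DWM tries to render the margins at a consistent size, while still taking into account the result of the transform applied to the presentation surface.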

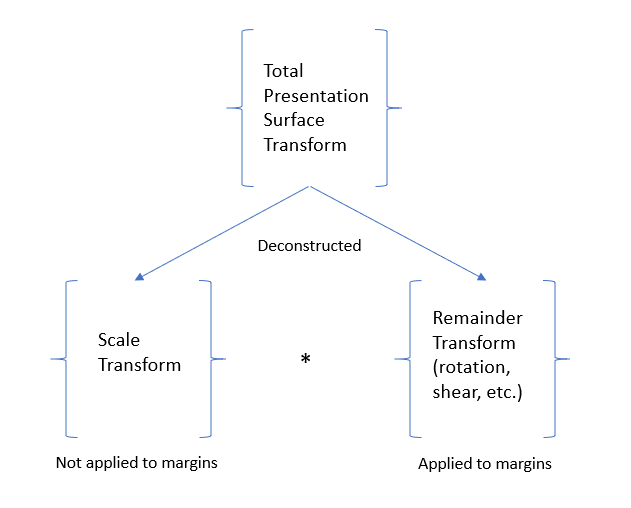

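In other words, letterboxing margins are technically applied before the presentation surface's transform, but they compensate for any scale that may be part of that transform. That is, the presentation surface's transform is decomposed into two components: the scale portion of the transform, and the rest of the transform.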

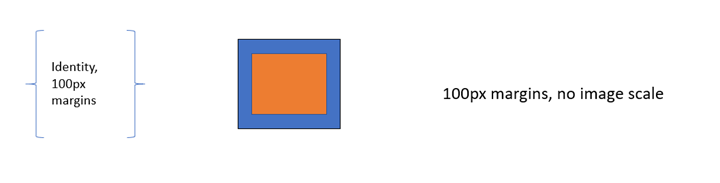

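For example, with 100px letterboxing margins and no transform applied to the presentation surface, the buffer is rendered without scaling, and the letterboxing margins are 100px wide.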

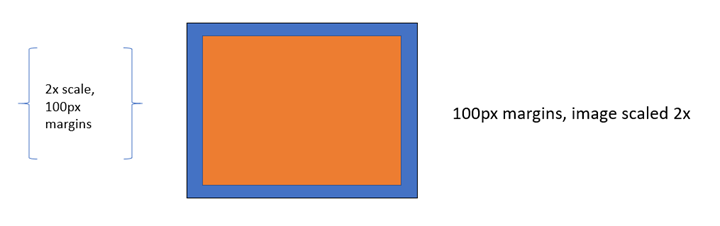

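As another example, with 100px letterboxing margins and a 2x scale transform applied to the presentation surface, the buffer is rendered at 2x scale, while the letterboxing margins seen on screen are still 100px.

With a 45-degree rotation transform, the letterboxing margins appear 100px wide and are rotated along with the buffer.

With a combined 2x scale and 45-degree rotation transform, the image is rotated and scaled, while the letterboxing margins are still 100px wide and are rotated along with the buffer.

If a scale can't be unambiguously extracted from the transform the application applies to the presentation surface (for example, if the resulting X or Y scale is 0), the letterboxing margins are rendered with the entire transform applied, and no attempt is made to compensate for scale.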
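One way to model the compensation described above is sketched below. It is an illustration under stated assumptions, not DWM's implementation. It measures the per-axis scale of a matrix laid out like `PresentationTransform` in the SetContentLayoutAndFill example (M11/M12/M21/M22 for the linear part, M31/M32 for the offset), divides the requested margins by that scale before the full transform would be applied, and skips compensation when either scale is 0. `Affine2D`, `Margins`, and `CompensateMargins` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Row-vector convention, matching M31 as the X offset in the example code:
// x' = x*M11 + y*M21 + M31, y' = x*M12 + y*M22 + M32.
struct Affine2D { float M11, M12, M21, M22, M31, M32; };

struct Margins { float left, top, right, bottom; };

// Hypothetical: margins to use in pre-transform space so that, after the full transform,
// they measure the requested size on screen.
Margins CompensateMargins(const Affine2D& t, const Margins& requested) {
    const float scaleX = std::hypot(t.M11, t.M12);  // length of the transformed x axis
    const float scaleY = std::hypot(t.M21, t.M22);  // length of the transformed y axis
    if (scaleX == 0.0f || scaleY == 0.0f) {
        // Scale can't be extracted: margins are simply transformed with everything else.
        return requested;
    }
    return { requested.left / scaleX, requested.top / scaleY,
             requested.right / scaleX, requested.bottom / scaleY };
}
```

With a 2x scale the margins become 50 units before the transform, which scales them back to 100 on screen. A pure 45-degree rotation has a scale of 1, so the margins stay 100 and rotate with the buffer.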

C++ example

```cpp
void SetContentLayoutAndFill(
    _In_ IPresentationSurface* pPresentationSurface)
{
    // Set layout properties. Each layout property is described below.

    // The source RECT describes the area from the bound buffer that will be sampled from. The
    // RECT is in source texture coordinates. Below we indicate that we'll sample from a
    // 100x100 area on the source texture.
    RECT sourceRect;
    sourceRect.left = 0;
    sourceRect.top = 0;
    sourceRect.right = 100;
    sourceRect.bottom = 100;
    FAIL_FAST_IF_FAILED(pPresentationSurface->SetSourceRect(&sourceRect));

    // The presentation transform defines how the source rect will be transformed when
    // rendering the buffer. In this case, we indicate we want to scale it 2x in both
    // width and height, and we also want to offset it 50 pixels to the right.
    PresentationTransform transform = { 0 };
    transform.M11 = 2.0f;  // X scale 2x
    transform.M22 = 2.0f;  // Y scale 2x
    transform.M31 = 50.0f; // X offset 50px
    FAIL_FAST_IF_FAILED(pPresentationSurface->SetTransform(&transform));

    // The letterboxing parameters describe how to letterbox the content. Letterboxing
    // is commonly used to fill area not covered by video/surface content when scaling to
    // an aspect ratio that doesn't match the aspect ratio of the surface itself. For
    // example, when viewing content formatted for the theater on a 1080p home screen, one
    // can typically see black "bars" on the top and bottom of the video, covering the space
    // on screen that wasn't covered by the video due to the differing aspect ratios of the
    // content and the display device. The composition swapchain API allows the application to
    // specify letterboxing margins, which describe the number of pixels to surround the surface
    // content with on screen. In this case, surround the top and bottom of our content with
    // 100 pixel tall letterboxing.
    FAIL_FAST_IF_FAILED(pPresentationSurface->SetLetterboxingMargins(
        0.0f,     // Left
        100.0f,   // Top
        0.0f,     // Right
        100.0f)); // Bottom
}
```

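Example 7 - Set content restrictions on a presentation surface

The following example shows how an application can, for content-protection purposes, prevent other applications from reading back the contents of a presentation surface (through technologies such as PrintScreen, DDA, and the Capture APIs). It also shows how to restrict display of a presentation surface to a single DXGI output.

If content readback is disabled, the captured image contains opaque black in place of the presentation surface when an application tries to read it back. If display of the presentation surface is restricted to an IDXGIOutput, the surface appears as opaque black on all other outputs.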

C++ example

```cpp
void SetContentRestrictions(
    _In_ IPresentationSurface* pPresentationSurface,
    _In_ IDXGIOutput* pOutputToRestrictTo)
{
    // Disable readback of the surface via printscreen, bitblt, etc.
    FAIL_FAST_IF_FAILED(pPresentationSurface->SetDisableReadback(true));

    // Restrict display of surface to only the passed output.
    FAIL_FAST_IF_FAILED(pPresentationSurface->RestrictToOutput(pOutputToRestrictTo));
}
```

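Example 8 - Add presentation buffers to a presentation manager

The following example shows how an application allocates a set of Direct3D textures and adds them to a presentation manager as presentation buffers, which can then be presented to a presentation surface.

As mentioned previously, there's no limit to the number of presentation managers a texture can be registered with. In most ordinary usage, however, a texture is registered with only a single presentation manager.

Note also that the API takes a shared buffer handle as the currency for registering a texture as a presentation buffer in a presentation manager, and DXGI can create multiple shared handles for a single texture2D. Technically, then, an application can create several shared handles for one texture and register all of them with a presentation manager, which effectively adds the same buffer multiple times. This isn't recommended, because it breaks the synchronization mechanisms the presentation manager provides: two separately tracked presentation buffers would actually correspond to the same texture. Because this is an advanced API, and because this situation is hard for the implementation to detect, the API doesn't attempt to validate against it.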
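Because the API doesn't detect this case, an application that registers textures from several places may want its own guard. The following hypothetical helper (not part of WIL or the composition swapchain API) records each texture's identity so that the same texture isn't added to a presentation manager twice through different shared handles. For COM objects, the identity pointer should be the one returned by QueryInterface for IUnknown, since COM guarantees only that pointer is the same for a given object.

```cpp
#include <cassert>
#include <unordered_set>

// Hypothetical app-side guard against registering one texture twice with the same
// presentation manager.
class RegisteredTextureSet {
public:
    // Returns true if the texture wasn't registered yet, and records it.
    bool TryMarkRegistered(const void* textureIdentity) {
        assert(textureIdentity != nullptr);
        return registered_.insert(textureIdentity).second;
    }

    // Call once the corresponding presentation buffer has been released.
    void Unregister(const void* textureIdentity) { registered_.erase(textureIdentity); }

private:
    std::unordered_set<const void*> registered_;
};
```

An application would keep one such set per presentation manager and call AddBufferFromSharedHandle only when TryMarkRegistered returns true.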

C++ example

```cpp
// Helpers defined later in this example.
void AddNewPresentationBuffer(
    _In_ ID3D11Device* pD3D11Device,
    _In_ IPresentationManager* pPresentationManager,
    _In_ UINT bufferWidth,
    _In_ UINT bufferHeight,
    _Out_ ID3D11Texture2D** ppTexture2D,
    _Out_ IPresentationBuffer** ppPresentationBuffer);

void MakeD3D11Texture(
    _In_ ID3D11Device* pD3D11Device,
    _In_ UINT textureWidth,
    _In_ UINT textureHeight,
    _Out_ ID3D11Texture2D** ppTexture2D,
    _Out_ HANDLE* sharedResourceHandle);

void AddBuffersToPresentationManager(
    _In_ ID3D11Device* pD3D11Device,                   // The backing Direct3D device
    _In_ IPresentationManager* pPresentationManager,   // Previously-made presentation manager
    _In_ UINT bufferWidth,                             // The width of the buffers to add
    _In_ UINT bufferHeight,                            // The height of the buffers to add
    _In_ UINT numberOfBuffersToAdd,                    // The number of buffers to add to the presentation manager
    _Out_ vector<com_ptr_failfast<ID3D11Texture2D>>& textures,                // Array of textures returned
    _Out_ vector<com_ptr_failfast<IPresentationBuffer>>& presentationBuffers) // Array of presentation buffers returned
{
    // Clear the returned vectors initially.
    textures.clear();
    presentationBuffers.clear();

    // Add the desired buffers to the presentation manager.
    for (UINT i = 0; i < numberOfBuffersToAdd; i++)
    {
        com_ptr_failfast<ID3D11Texture2D> texture;
        com_ptr_failfast<IPresentationBuffer> presentationBuffer;

        // Call our helper to make a new buffer of the desired type.
        AddNewPresentationBuffer(
            pD3D11Device,
            pPresentationManager,
            bufferWidth,
            bufferHeight,
            &texture,
            &presentationBuffer);

        // Track our buffers in our own set of vectors.
        textures.push_back(texture);
        presentationBuffers.push_back(presentationBuffer);
    }
}

void AddNewPresentationBuffer(
    _In_ ID3D11Device* pD3D11Device,
    _In_ IPresentationManager* pPresentationManager,
    _In_ UINT bufferWidth,
    _In_ UINT bufferHeight,
    _Out_ ID3D11Texture2D** ppTexture2D,
    _Out_ IPresentationBuffer** ppPresentationBuffer)
{
    com_ptr_failfast<ID3D11Texture2D> texture2D;
    unique_handle sharedResourceHandle;

    // Create a shared Direct3D texture and handle with the passed attributes.
    MakeD3D11Texture(
        pD3D11Device,
        bufferWidth,
        bufferHeight,
        &texture2D,
        out_param(sharedResourceHandle));

    // Add the texture2D to the presentation manager, and get back a presentation buffer.
    com_ptr_failfast<IPresentationBuffer> presentationBuffer;
    FAIL_FAST_IF_FAILED(pPresentationManager->AddBufferFromSharedHandle(
        sharedResourceHandle.get(),
        &presentationBuffer));

    // Return the texture and the presentation buffer to the caller.
    *ppTexture2D = texture2D.detach();
    *ppPresentationBuffer = presentationBuffer.detach();
}

void MakeD3D11Texture(
    _In_ ID3D11Device* pD3D11Device,
    _In_ UINT textureWidth,
    _In_ UINT textureHeight,
    _Out_ ID3D11Texture2D** ppTexture2D,
    _Out_ HANDLE* sharedResourceHandle)
{
    D3D11_TEXTURE2D_DESC textureDesc = {};

    // Width and height can be anything within max texture size of the adapter backing the Direct3D
    // device.
    textureDesc.Width = textureWidth;
    textureDesc.Height = textureHeight;

    // MipLevels and ArraySize must be 1.
    textureDesc.MipLevels = 1;
    textureDesc.ArraySize = 1;

    // Format can be one of the following:
    //   DXGI_FORMAT_B8G8R8A8_UNORM
    //   DXGI_FORMAT_R8G8B8A8_UNORM
    //   DXGI_FORMAT_R16G16B16A16_FLOAT
    //   DXGI_FORMAT_R10G10B10A2_UNORM
    //   DXGI_FORMAT_NV12
    //   DXGI_FORMAT_YUY2
    //   DXGI_FORMAT_420_OPAQUE
    // This example uses DXGI_FORMAT_B8G8R8A8_UNORM.
    textureDesc.Format = DXGI_FORMAT_B8G8R8A8_UNORM;

    // SampleDesc count and quality must be 1 and 0 respectively.
    textureDesc.SampleDesc.Count = 1;
    textureDesc.SampleDesc.Quality = 0;

    // Usage must be D3D11_USAGE_DEFAULT.
    textureDesc.Usage = D3D11_USAGE_DEFAULT;

    // BindFlags must include D3D11_BIND_SHADER_RESOURCE for RGB textures, and D3D11_BIND_DECODER
    // for YUV textures. For RGB textures, it is likely your application will want to specify
    // D3D11_BIND_RENDER_TARGET in order to render to it.
    textureDesc.BindFlags =
        D3D11_BIND_SHADER_RESOURCE |
        D3D11_BIND_RENDER_TARGET;

    // MiscFlags should include D3D11_RESOURCE_MISC_SHARED and D3D11_RESOURCE_MISC_SHARED_NTHANDLE,
    // and might also include D3D11_RESOURCE_MISC_SHARED_DISPLAYABLE if your application wishes to
    // qualify for MPO and iflip. If D3D11_RESOURCE_MISC_SHARED_DISPLAYABLE is not provided, then the
    // content will not qualify for MPO or iflip, but can still be composed by DWM.
    textureDesc.MiscFlags =
        D3D11_RESOURCE_MISC_SHARED |
        D3D11_RESOURCE_MISC_SHARED_NTHANDLE |
        D3D11_RESOURCE_MISC_SHARED_DISPLAYABLE;

    // CPUAccessFlags must be 0.
    textureDesc.CPUAccessFlags = 0;

    // Use Direct3D to create a texture 2D matching the desired attributes.
    com_ptr_failfast<ID3D11Texture2D> texture2D;
    FAIL_FAST_IF_FAILED(pD3D11Device->CreateTexture2D(&textureDesc, nullptr, &texture2D));

    // Create a shared handle for the texture2D.
    unique_handle sharedBufferHandle;
    auto dxgiResource = texture2D.query<IDXGIResource1>();
    FAIL_FAST_IF_FAILED(dxgiResource->CreateSharedHandle(
        nullptr,
        GENERIC_ALL,
        nullptr,
        &sharedBufferHandle));

    // Return the texture and its shared handle to the caller.
    *ppTexture2D = texture2D.detach();
    *sharedResourceHandle = sharedBufferHandle.release();
}
```

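Example 9 - Remove presentation buffers from a presentation manager, and object lifetime

Removing a presentation buffer from a presentation manager is as simple as releasing the IPresentationBuffer object until its refcount reaches 0. However, that doesn't necessarily mean the presentation buffer can be freed right away. In some cases the buffer may still be in use, for example if it's visible on screen, or if outstanding presents reference it.

The presentation manager tracks how each buffer is used by the application, both directly and indirectly. It tracks which buffers are displayed and which outstanding presents reference which buffers, and it keeps all of this state internally to ensure that a buffer isn't actually released or unregistered until it's truly no longer in use.

A good way to think about buffer lifetime is that when an application releases an IPresentationBuffer, it tells the API that it won't use that buffer in any future call. The composition swapchain API tracks this, along with every other way the buffer is in use, and fully releases the buffer only once it's completely safe to do so.

In short, an application should release an IPresentationBuffer when it's finished with it, knowing that the composition swapchain API will fully release the buffer once all other uses have also finished.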

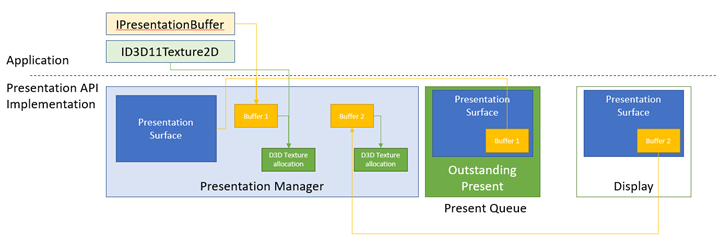

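The figure above illustrates these concepts.

In this example, a presentation manager has two presentation buffers. Buffer 1 has three references keeping it alive:

- The application holds an IPresentationBuffer that references it.

- Inside the API, it's stored as the presentation surface's bound buffer, because it's the last buffer the application bound to the surface before presenting.

- There's also an outstanding present in the present queue that intends to show buffer 1 on the presentation surface.

Buffer 1 also holds a reference on its corresponding Direct3D texture allocation, and the application holds an ID3D11Texture2D that references that allocation too.

Buffer 2 has no outstanding references from the application side, and neither does the texture allocation it references, but buffer 2 stays alive because the presentation surface is currently displaying it on screen. When Present 1 is processed and buffer 1 is displayed, buffer 2 has no more references and is released, which in turn releases its reference on its Direct3D texture2D allocation.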
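The reference relationships in the figure can be modeled with `std::shared_ptr`. In the hypothetical sketch below (plain C++, not how the API is implemented internally), the surface's bound buffer, each outstanding present, and the display each hold a strong reference, alongside whatever references the application keeps. A buffer, and with it its texture reference, is destroyed only when the last of them is dropped.

```cpp
#include <cassert>
#include <deque>
#include <memory>
#include <utility>

struct Texture {};                                    // stands in for a Direct3D texture allocation
struct Buffer { std::shared_ptr<Texture> texture; };  // a presentation buffer references its texture

// Hypothetical model of the strong references that keep a presentation buffer alive.
struct SurfaceLifetimeModel {
    std::shared_ptr<Buffer> bound;              // last buffer passed to SetBuffer; persists
    std::deque<std::shared_ptr<Buffer>> queue;  // outstanding presents, oldest first
    std::shared_ptr<Buffer> displayed;          // buffer currently on screen

    void SetBuffer(std::shared_ptr<Buffer> buffer) { bound = std::move(buffer); }

    // An outstanding present keeps a reference to the buffer it will show.
    void Present() { queue.push_back(bound); }

    // Processing the oldest present puts its buffer on screen; the previously displayed
    // buffer loses the reference held by the display.
    void ProcessOldestPresent() {
        assert(!queue.empty());
        displayed = std::move(queue.front());
        queue.pop_front();
    }
};
```

Replaying the figure in this model, buffer 2 survives the application's release because the display still holds it, and it is destroyed, releasing its texture, only when Present 1 is processed.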

C++ example

```cpp
void ReleasePresentationManagerBuffersExample(
    _In_ ID3D11Device* pD3D11Device,
    _In_ IPresentationManager* pPresentationManager)
{
    com_ptr_failfast<ID3D11Texture2D> texture2D;
    com_ptr_failfast<IPresentationBuffer> newBuffer;

    // Create a new texture/presentation buffer.
    AddNewPresentationBuffer(
        pD3D11Device,
        pPresentationManager,
        200, // Buffer width
        200, // Buffer height
        &texture2D,
        &newBuffer);

    // Release the IPresentationBuffer. This indicates that we have no more intention to use it
    // from the application side. However, if we have any presents outstanding that reference
    // the buffer, it will be kept alive internally and released when all outstanding presents
    // have been retired.
    newBuffer.reset();

    // When the presentation buffer is truly released internally, it will in turn release its
    // reference on the texture2D which it corresponds to. Your application must also release
    // its own references to the texture2D for it to be released.
    texture2D.reset();
}
```

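Example 10 - Resize buffers in a presentation manager

The following example shows how an application can resize its presentation buffers. For example, if a movie streaming service is streaming at 480p but decides to switch to 1080p because more network bandwidth has become available, it would want to reallocate its buffers from 480p to 1080p so that it can start presenting 1080p content.

In DXGI, this is called a resize operation. DXGI reallocates all buffers atomically, which is expensive and can cause glitches on screen. The example here shows how to perform an atomic, DXGI-style resize with the composition swapchain API. A later example shows how to resize in a staggered fashion, spreading the reallocation across multiple presents for better performance than an atomic swapchain resize.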

C++ example

```cpp
void ResizePresentationManagerBuffersExample(
    _In_ ID3D11Device* pD3D11Device,
    _In_ IPresentationManager* pPresentationManager) // Previously-made presentation manager.
{
    // Vectors representing the IPresentationBuffer and ID3D11Texture2D collections.
    vector<com_ptr_failfast<ID3D11Texture2D>> textures;
    vector<com_ptr_failfast<IPresentationBuffer>> presentationBuffers;

    // Add 6 50x50 buffers to the presentation manager. See previous example for definition of
    // this function.
    AddBuffersToPresentationManager(
        pD3D11Device,
        pPresentationManager,
        50, // Buffer width
        50, // Buffer height
        6,  // Number of buffers
        textures,
        presentationBuffers);

    // Release all the buffers we just added. The presentation buffers internally will be released
    // when any other references are also removed (outstanding presents, display references, etc.).
    textures.clear();
    presentationBuffers.clear();

    // Add 6 new 100x100 buffers to the presentation manager to replace the
    // old ones we just removed.
    AddBuffersToPresentationManager(
        pD3D11Device,
        pPresentationManager,
        100, // Buffer width
        100, // Buffer height
        6,   // Number of buffers
        textures,
        presentationBuffers);
}
```

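Example 11 - Synchronize presents with buffer available events, and handle presentation manager lost events

If an application is going to issue a present that uses a presentation buffer from a previous present, it must make sure the previous present has completed before rendering to that presentation buffer again. The composition swapchain API provides several mechanisms to facilitate this synchronization. The simplest is the presentation buffer's available event: an NT event object that is signaled when the buffer is available for use (that is, when all previous presents have entered the retiring or retired state) and unsignaled otherwise. This example shows how to use buffer available events to pick an available buffer to present. It also shows how to listen for lost errors, which are the moral equivalent of DXGI device lost errors and require the presentation manager to be re-created.

Presentation manager lost errors

A presentation manager can be lost if it encounters an unrecoverable error internally. The application must destroy a lost presentation manager and create a new one to use instead. When a presentation manager is lost, it stops accepting further presents: any attempt to call Present on a lost presentation manager returns PRESENTATION_ERROR_LOST. All other methods, however, function normally. This ensures that an application needs to check for PRESENTATION_ERROR_LOST only on Present calls, rather than anticipating and handling lost errors on every API call. If an application wants to check for lost errors outside of Present calls, or be notified of them, it can use the lost event returned by IPresentationManager::GetLostEvent.

Present queue and timing

Presents issued by a presentation manager can specify a target time. The target time is the ideal time at which the system tries to show the present. Because the screen updates at a finite cadence, a present is unlikely to be shown at exactly the specified time, but it is shown as close to that time as possible.

The target time is in system-relative time, that is, the time the system has been running since boot, in 100-nanosecond units. An easy way to compute the current time is to query the current QueryPerformanceCounter (QPC) value, divide it by the system's QPC frequency, and multiply by 10,000,000. Rounding and precision limitations aside, this gives the current time in the applicable units. An application can also get the current time by calling MFGetSystemTime or QueryInterruptTimePrecise.

Once it knows the current time, an application typically issues presents at increasing offsets from it.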
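The following sketch shows the QPC conversion described above and a schedule of target times at increasing offsets. `QpcTo100ns` and `ScheduleTargets` are hypothetical helper names; the counter value and frequency would come from `QueryPerformanceCounter` and `QueryPerformanceFrequency`. Splitting the counter into whole seconds and a remainder keeps sub-second precision without overflowing for realistic counter frequencies.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts a QPC reading to 100 ns units. Dividing first would drop sub-second precision,
// and multiplying the raw counter by 10,000,000 first could overflow.
uint64_t QpcTo100ns(uint64_t qpcValue, uint64_t qpcFrequency) {
    assert(qpcFrequency != 0);
    const uint64_t seconds = qpcValue / qpcFrequency;
    const uint64_t remainder = qpcValue % qpcFrequency;
    return seconds * 10'000'000ull + remainder * 10'000'000ull / qpcFrequency;
}

// Target times at a fixed interval after "now" (for example, 1'000'000 units = 0.1 s).
std::vector<uint64_t> ScheduleTargets(uint64_t now100ns, uint64_t interval100ns, size_t count) {
    std::vector<uint64_t> targets;
    targets.reserve(count);
    for (size_t i = 1; i <= count; ++i) {
        targets.push_back(now100ns + interval100ns * i);
    }
    return targets;
}
```

Each value from ScheduleTargets would be passed to SetTargetTime before the corresponding Present call.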

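Regardless of target time, presents are always processed in queue order. Even if a present targets an earlier time than the previous present, it isn't processed until the previous present has been processed. This essentially means that any present that doesn't target a later time than the previous one overrides the previous present. It also means that if an application wants to issue a present as early as possible, it can simply not set a target time (in which case the target time stays at that of the last present), or set the target time to 0. Both have the same effect. If the application doesn't want to wait for previous presents to complete before its new present is processed, it needs to cancel the previous presents. A later example shows how to do this.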

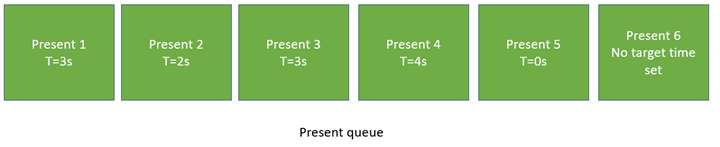

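T=3s: presents 1, 2, and 3 are all ready; 3 is the last of them and wins. T=4s: presents 4, 5, and 6 are all ready; 6 is the last of them and wins.

The figure above shows an example of outstanding presents in the present queue. Present 1 targets 3s, so no present takes effect before 3s. Present 2, however, targets an earlier time of 2s, and present 3 also targets 3s. So at 3s, presents 1, 2, and 3 are all completed, and present 3 is the one that actually takes effect. After that, the next present, present 4, is satisfied at 4s, but it's immediately overridden by present 5, which targets 0s, and by present 6, which has no target time set. Both 5 and 6 take effect as early as possible.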
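The queue-order rule can be checked with a small simulation. The hypothetical `PresentQueueModel` below (portable C++, not an API type) processes presents strictly in order whenever the front present's target time has passed, and a present without a target time inherits the previous one, as described above. Replaying the figure's presents (targets 3, 2, 3, 4, 0, and unset) shows present 3 on screen at T=3 and present 6 at T=4.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <optional>

// Hypothetical model of the in-order present queue.
class PresentQueueModel {
public:
    // A present without a target time keeps the previous target, like a persistent setting.
    void Present(int id, std::optional<uint64_t> targetTime) {
        if (targetTime) lastTarget_ = *targetTime;
        queue_.push_back({ id, lastTarget_ });
    }

    // Processes presents in queue order up to time `now`, stopping at the first present whose
    // target is still in the future even if later presents target earlier times. Returns the
    // id left on screen (the last one processed), or -1 if nothing was processed.
    int Tick(uint64_t now) {
        int shown = -1;
        while (!queue_.empty() && queue_.front().target <= now) {
            shown = queue_.front().id;
            queue_.pop_front();
        }
        return shown;
    }

private:
    struct Entry { int id; uint64_t target; };
    std::deque<Entry> queue_;
    uint64_t lastTarget_ = 0;
};
```

Present 2's earlier target doesn't let it jump ahead of present 1, which is why nothing is shown before T=3.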

C++ example

```cpp
// DrawColorToSurface is defined after this function.
void DrawColorToSurface(
    _In_ ID3D11Device* pD3D11Device,
    _In_ const com_ptr_failfast<ID3D11Texture2D>& texture2D,
    _In_ float redValue,
    _In_ float greenValue,
    _In_ float blueValue);

bool SimpleEventSynchronizationExample(
    _In_ ID3D11Device* pD3D11Device,
    _In_ IPresentationManager* pPresentationManager,
    _In_ IPresentationSurface* pPresentationSurface,
    _In_ vector<com_ptr_failfast<ID3D11Texture2D>>& textures,
    _In_ vector<com_ptr_failfast<IPresentationBuffer>>& presentationBuffers)
{
    // Track a time we'll be presenting to below. Default to the current time, then increment by
    // 1/10th of a second every present.
    SystemInterruptTime presentTime;
    QueryInterruptTimePrecise(&presentTime.value);

    // Build an array of events that we can wait on to perform various actions in our work loop.
    // The unique_event objects own the event handles.
    vector<unique_event> waitEvents;

    // The lost event will be the first event in our list. This is an event that signifies that
    // something went wrong in the system (due to extreme conditions such as memory pressure, or
    // driver issues) that indicate that the presentation manager has been lost, and should no
    // longer be used, and instead should be recreated.
    unique_event lostEvent;
    FAIL_FAST_IF_FAILED(pPresentationManager->GetLostEvent(&lostEvent));
    waitEvents.emplace_back(std::move(lostEvent));

    // Add each buffer's available event to the list of events we will be waiting on.
    for (size_t bufferIndex = 0; bufferIndex < presentationBuffers.size(); bufferIndex++)
    {
        unique_event availableEvent;
        FAIL_FAST_IF_FAILED(presentationBuffers[bufferIndex]->GetAvailableEvent(&availableEvent));
        waitEvents.emplace_back(std::move(availableEvent));
    }

    // WaitForMultipleObjects takes a plain array of at most MAXIMUM_WAIT_OBJECTS handles, so
    // copy the raw handles out instead of reinterpreting the unique_event array.
    FAIL_FAST_IF(waitEvents.size() > MAXIMUM_WAIT_OBJECTS);
    vector<HANDLE> waitHandles;
    for (const auto& waitEvent : waitEvents)
    {
        waitHandles.push_back(waitEvent.get());
    }

    // Iterate for 120 presents.
    constexpr UINT numberOfPresents = 120;
    for (UINT onPresent = 0; onPresent < numberOfPresents; onPresent++)
    {
        // Advance our present time 1/10th of a second in the future. Note the API accepts
        // time in 100ns units, or 1/1e7 of a second, meaning that 1 million units correspond to
        // 1/10th of a second.
        presentTime.value += 1'000'000;

        // Wait for the lost event or an available buffer. Since WaitForMultipleObjects prioritizes
        // lower-indexed events, it is recommended to put any higher importance events (like the
        // lost event) first, and then follow up with buffer available events.
        DWORD waitResult = WaitForMultipleObjects(
            static_cast<DWORD>(waitHandles.size()),
            waitHandles.data(),
            FALSE,
            INFINITE);

        // Failfast if the wait hit an error.
        FAIL_FAST_IF((waitResult - WAIT_OBJECT_0) >= waitHandles.size());

        // Our lost event was the first event in the array. If this is signaled, the caller
        // should recreate the presentation manager. This is very similar to how Direct3D devices
        // can be lost. Assume our caller knows to handle this return value appropriately.
        if (waitResult == WAIT_OBJECT_0)
        {
            return false;
        }

        // Otherwise, compute the buffer corresponding to the available event that was signaled.
        UINT bufferIndex = waitResult - (WAIT_OBJECT_0 + 1);

        // Draw red to that buffer.
        DrawColorToSurface(
            pD3D11Device,
            textures[bufferIndex],
            1.0f,  // red
            0.0f,  // green
            0.0f); // blue

        // Bind the presentation buffer to the presentation surface. Changes in this binding will take
        // effect on the next present, and the binding persists across presents. That is, any number
        // of subsequent presents will imply this binding until it is changed. It is completely fine
        // to only update buffers for a subset of the presentation surfaces owned by a presentation
        // manager on a given present - the implication is that it simply didn't update.
        //
        // Similarly, note that if your application were to call SetBuffer on the same presentation
        // surface multiple times without calling present, this is fine. The policy is last writer
        // wins.
        //
        // Your application may present without first binding a presentation surface to a buffer.
        // The result will be that presentation surface will simply have no content on screen,
        // similar to how DComp and WinComp surfaces appear in a tree before they are rendered to.
        // In that case system content will show through where the buffer would have been.
        //
        // Your application may also set a 'null' buffer binding after previously having bound a
        // buffer and present - the end result is the same as if your application had presented
        // without ever having set the content.
        FAIL_FAST_IF_FAILED(pPresentationSurface->SetBuffer(presentationBuffers[bufferIndex].get()));

        // Present at the targeted time. Note that a present can target only a single time. If an
        // application wants to update two buffers at two different times, then it must present
        // two times.
        //
        // Presents are always processed in queue order. A present will not take effect before any
        // previous present in the queue, even if it targets an earlier time. In such a case, when
        // the previous present is processed, the next present will also be processed immediately,
        // and override that previous present.
        //
        // For this reason, if your application wishes to present "now" or "as early as possible",
        // then it can simply present without setting a new target time (the previous target time is
        // kept), or set a target time of 0. Either way, the new present is processed as soon as the
        // previous present is, and overrides it.
        //
        // If your application wants to present truly "now", and not wait for previous presents in the
        // queue to be processed, then it will need to cancel previous presents. A future example
        // demonstrates how to do this.
        //
        // Your application will receive PRESENTATION_ERROR_LOST if it attempts to Present a lost
        // presentation manager. This is the only call that will return such an error. A lost
        // presentation manager functions normally in every other case, so applications need only
        // to handle this error at the time they call Present.
        FAIL_FAST_IF_FAILED(pPresentationManager->SetTargetTime(presentTime));
        HRESULT hrPresent = pPresentationManager->Present();
        if (hrPresent == PRESENTATION_ERROR_LOST)
        {
            // Our presentation manager has been lost. Return 'false' to the caller to indicate that
            // the presentation manager should be recreated.
            return false;
        }
        else
        {
            FAIL_FAST_IF_FAILED(hrPresent);
        }
    }

    return true;
}

void DrawColorToSurface(
    _In_ ID3D11Device* pD3D11Device,
    _In_ const com_ptr_failfast<ID3D11Texture2D>& texture2D,
    _In_ float redValue,
    _In_ float greenValue,
    _In_ float blueValue)
{
    com_ptr_failfast<ID3D11DeviceContext> D3DDeviceContext;
    com_ptr_failfast<ID3D11RenderTargetView> renderTargetView;

    // Get the immediate context from the D3D11 device.
    pD3D11Device->GetImmediateContext(&D3DDeviceContext);

    // Create a render target view of the passed texture.
    auto resource = texture2D.query<ID3D11Resource>();
    FAIL_FAST_IF_FAILED(pD3D11Device->CreateRenderTargetView(
        resource.get(),
        nullptr,
        renderTargetView.addressof()));

    // Clear the texture with the specified color.
    float clearColor[4] = { redValue, greenValue, blueValue, 1.0f }; // red, green, blue, alpha
    D3DDeviceContext->ClearRenderTargetView(renderTargetView.get(), clearColor);
}
```

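Example 12 - Advanced synchronization - Use the present synchronization fence to throttle the workflow to the size of the outstanding present queue, and handle presentation manager lost events

The following example shows how an application can submit a large number of presents for the future, and then sleep until the number of still-outstanding presents drops to a specific number. Frameworks such as Windows Media Foundation throttle this way to minimize the number of CPU wakeups while still making sure the present queue doesn't run dry (which would prevent smooth playback and cause glitches). This minimizes CPU power consumption in the presentation workflow. The application queues up the maximum number of presents (based on the number of presentation buffers it has allocated), then sleeps until the present queue has drained to the desired depth, and refills it.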
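The fence arithmetic used in the following example can be isolated into a few helpers. These are hypothetical names in portable C++. Their inputs correspond to `IPresentationManager::GetNextPresentId` and the retiring fence's `GetCompletedValue` in the example code, where present IDs start at 1 and increase by 1 per present.

```cpp
#include <cassert>
#include <cstdint>

// Mirrors the fill-loop condition: keep issuing presents while fewer than `bufferCount`
// IDs separate the next present from the retiring fence's completed value.
bool CanIssuePresent(uint64_t nextPresentId, uint64_t retiringFenceValue, uint64_t bufferCount) {
    assert(nextPresentId > retiringFenceValue && bufferCount != 0);
    return nextPresentId - retiringFenceValue < bufferCount;
}

// Cyclic buffer choice used by the example: next present ID modulo the buffer count.
uint64_t BufferIndexForPresent(uint64_t presentId, uint64_t bufferCount) {
    assert(bufferCount != 0);
    return presentId % bufferCount;
}

// Fence value to wait for so the thread wakes when `wakeQueueDepth` presents remain queued.
uint64_t WakeFenceValue(uint64_t nextPresentId, uint64_t wakeQueueDepth) {
    const uint64_t lastPresentId = nextPresentId - 1;
    assert(lastPresentId >= wakeQueueDepth);
    return lastPresentId - wakeQueueDepth;
}
```

For example, if the last issued present has ID 10 and the desired wake depth is 2, the thread waits for the fence to reach 8, leaving presents 9 and 10 still queued.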

C++ 示例

bool FenceSynchronizationExample(

_In_ ID3D11Device* pD3D11Device,

_In_ IPresentationManager* pPresentationManager,

_In_ IPresentationSurface* pPresentationSurface,

_In_ vector<com_ptr_failfast<ID3D11Texture2D>>& textures,

_In_ vector<com_ptr_failfast<IPresentationBuffer>>& presentationBuffers)

{

// Track a time we'll be presenting to below. Default to the current time, then increment by

// 1/10th of a second every present.

SystemInterruptTime presentTime;

QueryInterruptTimePrecise(&presentTime.value);

// Get present retiring fence.

com_ptr_failfast<ID3D11Fence> presentRetiringFence;

FAIL_FAST_IF_FAILED(pPresentationManager->GetPresentRetiringFence(

IID_PPV_ARGS(&presentRetiringFence)));

// Get the lost event to query before presentation.

unique_event lostEvent;

pPresentationManager->GetLostEvent(&lostEvent);

// Create an event to synchronize to our queue depth with. We'll use Direct3D to signal this event

// when our synchronization fence indicates reaching a specific present.

unique_event presentQueueSyncEvent;

presentQueueSyncEvent.create(EventOptions::ManualReset);

// Cycle the present queue 10 times.

constexpr UINT numberOfPresentRefillCycles = 10;

for (UINT onRefillCycle = 0; onRefillCycle < numberOfPresentRefillCycles; onRefillCycle++)

{

// Fill up presents for all presentation buffers. We compare the presentation manager's

// next present ID to the present confirmed fence's value to figure out how

// far ahead we are. We stop when we've issued presents for all buffers.

while ((pPresentationManager->GetNextPresentId() -

presentRetiringFence->GetCompletedValue()) < presentationBuffers.size())

{

// Present buffers in cyclical pattern. We can figure out the current buffer to

// present by taking the modulo of the next present ID by the number of buffers. Note that the

// first present of a presentation manager always has a present ID of 1 and increments by 1 on

// each subsequent present. A present ID of 0 is conceptually meant to indicate that "no

// presents have taken place yet".

UINT bufferIndex = static_cast<UINT>(

pPresentationManager->GetNextPresentId() % presentationBuffers.size());

// Assert that the passed buffer is tracked as available for presentation. Because we throttle

// based on the total number of buffers, this should always be true.

NT_ASSERT(presentationBuffers[bufferIndex]->IsAvailable());

// Advance our present time 1/10th of a second in the future.

presentTime.value += 1'000'000;

// Draw red to the texture.

DrawColorToSurface(

pD3D11Device,

textures[bufferIndex],

1.0f, // red

0.0f, // green

0.0f); // blue

// Bind the presentation buffer to the presentation surface.

pPresentationSurface->SetBuffer(presentationBuffers[bufferIndex].get());

// Present at the targeted time.

pPresentationManager->SetTargetTime(presentTime);

HRESULT hrPresent = pPresentationManager->Present();

if (hrPresent == PRESENTATION_ERROR_LOST)

{

// Our presentation manager has been lost. Return 'false' to the caller to indicate that

// the presentation manager should be recreated.

return false;

}

else

{

FAIL_FAST_IF_FAILED(hrPresent);

}

};

// Now that the buffer is full, go to sleep until the present queue has been drained to

// the desired queue depth. To figure out the appropriate present to wake on, we subtract

// the desired wake queue depth from the presentation manager's last present ID. We

// use Direct3D's SetEventOnCompletion to signal our wait event when that particular present

// is retiring, and then wait on that event. Note that the semantic of SetEventOnCompletion

// is such that even if we happen to call it after the fence has already reached the

// requested value, the event will be set immediately.

constexpr UINT wakeOnQueueDepth = 2;

presentQueueSyncEvent.ResetEvent();

FAIL_FAST_IF_FAILED(presentRetiringFence->SetEventOnCompletion(

pPresentationManager->GetNextPresentId() - 1 - wakeOnQueueDepth,

presentQueueSyncEvent.get()));

HANDLE waitHandles[] = { lostEvent.get(), presentQueueSyncEvent.get() };

DWORD waitResult = WaitForMultipleObjects(

ARRAYSIZE(waitHandles),

waitHandles,

FALSE,

INFINITE);

// Failfast if we hit an error during our wait.

FAIL_FAST_IF((waitResult - WAIT_OBJECT_0) >= ARRAYSIZE(waitHandles));

if (waitResult == WAIT_OBJECT_0)

{

// The lost event was signaled - return 'false' to the caller to indicate that

// the presentation manager was lost.

return false;

}

// Iterate into another refill cycle.

}

return true;

}
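The present-ID arithmetic in the example above can be checked in isolation. The following is a minimal sketch in standard C++ that does not call the composition swapchain API. The helper names TicksForFraction, BufferIndexForPresent, and FenceValueToWakeOn are hypothetical. It assumes, as the comments above state, that present IDs start at 1 and increase by 1, and that interrupt time (as returned by QueryInterruptTimePrecise) is measured in 100-nanosecond units.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Interrupt time is counted in 100-nanosecond units, so one second is 10,000,000 ticks
// and the examples' step of 1'000'000 ticks is 1/10th of a second.
constexpr std::uint64_t kTicksPerSecond = 10'000'000;

// Hypothetical helper: number of ticks in numerator/denominator seconds.
constexpr std::uint64_t TicksForFraction(std::uint64_t numerator, std::uint64_t denominator)
{
    return kTicksPerSecond * numerator / denominator;
}

// Hypothetical helper: buffer used for a present. Because present IDs start at 1 and
// increase by 1, taking the modulo by the buffer count cycles through the buffers in order.
inline std::size_t BufferIndexForPresent(std::uint64_t presentId, std::size_t bufferCount)
{
    assert(bufferCount > 0);
    return static_cast<std::size_t>(presentId % bufferCount);
}

// Hypothetical helper: fence value the example waits on. nextPresentId - 1 is the ID of
// the most recently issued present; we wait on the present issued queueDepth before it.
inline std::uint64_t FenceValueToWakeOn(std::uint64_t nextPresentId, std::uint64_t queueDepth)
{
    assert(nextPresentId >= 1 + queueDepth);
    return nextPresentId - 1 - queueDepth;
}
```

For example, with 3 buffers, present 1 uses buffer 1 and present 3 wraps around to buffer 0. After presents 1 through 10 have been issued (next present ID 11) with a wake depth of 2, the example waits on fence value 8.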

Example 13 - VSync interrupts and hardware flip queue support

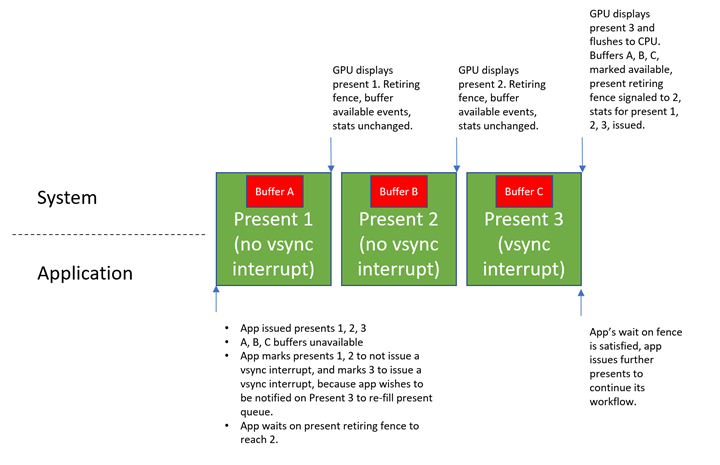

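A new form of flip queue management, called hardware flip queue, was introduced alongside this API. It essentially allows the GPU hardware to manage presentation entirely independently of the CPU. The main benefit is power efficiency: when the CPU doesn't need to participate in the presentation process, less power is consumed.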

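The downside of having the GPU handle presentation independently is that CPU state can no longer immediately reflect when a present is displayed. Composition swapchain API concepts such as buffer available events, synchronization fences, and present statistics are not updated immediately. Instead, the GPU only periodically updates CPU state to reflect what has been presented. This means that feedback to the application about its presentation state arrives with a delay.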

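An application typically cares about when certain presents are displayed, but much less about others. For example, if an application issues 10 presents, it might decide that it wants to know when the 8th present is displayed, so that it can start filling the present queue again. In that case, the only present it really wants feedback for is the 8th. It doesn't intend to do anything when presents 1-7 or 9 are displayed.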

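Whether displaying a present updates CPU state depends on whether the GPU hardware is configured to generate a VSync interrupt when that present is displayed. This VSync interrupt wakes the CPU if it isn't already awake. The CPU then runs special kernel-level code that brings itself up to date with the presents that have occurred on the GPU since the last check, and in turn updates feedback mechanisms such as buffer available events, the present retiring fence, and present statistics.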

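To let applications explicitly indicate which presents should issue a VSync interrupt, the presentation manager exposes an IPresentationManager::ForceVSyncInterrupt method, which specifies whether subsequent presents should issue a VSync interrupt. Much like IPresentationManager::SetTargetTime and IPresentationManager::SetPreferredPresentDuration, this setting applies to all future presents until it is changed.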

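If this setting is enabled for a given present, the hardware notifies the CPU as soon as that present is displayed. This uses more power, but it ensures that the CPU learns about the present right away, so that the application can respond as quickly as possible. If the setting is disabled for a given present, the system is allowed to defer updating the CPU when the present is displayed, which saves power but delays feedback.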

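Applications typically don't force a VSync interrupt on any present other than the ones they want to synchronize to. In the scenario above, because the application wants to wake up when the 8th present is displayed in order to refill its present queue, it requests a VSync interrupt for present 8, but not for presents 1-7 or present 9.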

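By default, if the application doesn't configure this setting, the presentation manager always issues a VSync interrupt when each present is displayed. Applications that don't care about power usage, or that aren't aware of hardware flip queue support, can simply never call ForceVSyncInterrupt and are thereby guaranteed to synchronize correctly. Applications that are aware of hardware flip queue support can control this setting explicitly to improve power efficiency.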
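The sticky behavior of this setting, and the default of forcing an interrupt on every present, can be modeled in ordinary C++. This is a hypothetical model only: VSyncInterruptModel and PlanInterrupts are illustrative names, not part of the API, and the model merely records which presents would be issued with a forced VSync interrupt. PlanInterrupts(10, 8) corresponds to the scenario above, in which only present 8 requests an interrupt.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical model of the sticky ForceVSyncInterrupt setting: the value set before a
// present applies to that present and to every later one until it is changed again.
// If the setting is never configured, every present forces a VSync interrupt.
class VSyncInterruptModel
{
public:
    void ForceVSyncInterrupt(bool force) { m_force = force; }

    // Records a present and returns whether it was issued with a forced VSync interrupt.
    bool Present()
    {
        m_history.push_back(m_force);
        return m_force;
    }

    const std::vector<bool>& History() const { return m_history; }

private:
    bool m_force = true;          // default: interrupt on every present
    std::vector<bool> m_history;  // m_history[i] describes present ID i + 1
};

// Issues `count` presents, forcing an interrupt only on `interruptPresentId`.
inline std::vector<bool> PlanInterrupts(std::uint64_t count, std::uint64_t interruptPresentId)
{
    assert(interruptPresentId >= 1 && interruptPresentId <= count);
    VSyncInterruptModel model;
    for (std::uint64_t presentId = 1; presentId <= count; ++presentId)
    {
        // Reconfigure before every present, as the C++ example below does.
        model.ForceVSyncInterrupt(presentId == interruptPresentId);
        model.Present();
    }
    return model.History();
}
```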

The diagram at the start of this example depicts how the API behaves with respect to the VSync interrupt setting.

C++ example

bool ForceVSyncInterruptPresentsExample(

_In_ ID3D11Device* pD3D11Device,

_In_ IPresentationManager* pPresentationManager,

_In_ IPresentationSurface* pPresentationSurface,

_In_ vector<com_ptr_failfast<ID3D11Texture2D>>& textures,

_In_ vector<com_ptr_failfast<IPresentationBuffer>>& presentationBuffers)

{

// Track a time we'll be presenting to below. Default to the current time, then increment by

// 1/10th of a second every present.

SystemInterruptTime presentTime;

QueryInterruptTimePrecise(&presentTime.value);

// Get present retiring fence.

com_ptr_failfast<ID3D11Fence> presentRetiringFence;

FAIL_FAST_IF_FAILED(pPresentationManager->GetPresentRetiringFence(

IID_PPV_ARGS(&presentRetiringFence)));

// Get the lost event to query before presentation.

unique_event lostEvent;

FAIL_FAST_IF_FAILED(pPresentationManager->GetLostEvent(&lostEvent));

// Create an event to synchronize to our queue depth with. We will use Direct3D to signal this event

// when our synchronization fence indicates reaching a specific present.

unique_event presentQueueSyncEvent;

presentQueueSyncEvent.create(EventOptions::ManualReset);

// Issue 10 presents, and wake when the present queue is 2 entries deep (which happens when

// present 7 is retiring).

constexpr UINT wakeOnQueueDepth = 2;

constexpr UINT numberOfPresents = 10;

const UINT presentIdToWakeOn = numberOfPresents - 1 - wakeOnQueueDepth;

while (pPresentationManager->GetNextPresentId() <= numberOfPresents)

{

UINT bufferIndex = static_cast<UINT>(

pPresentationManager->GetNextPresentId() % presentationBuffers.size());

// Advance our present time 1/10th of a second in the future.

presentTime.value += 1'000'000;

// Draw red to the texture.

DrawColorToSurface(

pD3D11Device,

textures[bufferIndex],

1.0f, // red

0.0f, // green

0.0f); // blue

// Bind the presentation buffer to the presentation surface.

pPresentationSurface->SetBuffer(presentationBuffers[bufferIndex].get());

// Present at the targeted time.

pPresentationManager->SetTargetTime(presentTime);

// If this present is not going to retire the present that we want to wake on when it is shown, then

// we don't need immediate updates to buffer available events, present retiring fence, or present

// statistics. As such, we can mark it as not requiring a VSync interrupt, to allow for greater

// power efficiency on machines with hardware flip queue support.

bool forceVSyncInterrupt = (pPresentationManager->GetNextPresentId() == (presentIdToWakeOn + 1));

pPresentationManager->ForceVSyncInterrupt(forceVSyncInterrupt);

HRESULT hrPresent = pPresentationManager->Present();

if (hrPresent == PRESENTATION_ERROR_LOST)

{

// Our presentation manager has been lost. Return 'false' to the caller to indicate that

// the presentation manager should be recreated.

return false;

}

else

{

FAIL_FAST_IF_FAILED(hrPresent);

}

}

// Now that the present queue is full, go to sleep until presentIdToWakeOn has begun retiring. We

// configured the subsequent present to force a VSync interrupt when it is shown, which ensures

// that this wait completes as soon as that present is displayed.

presentQueueSyncEvent.ResetEvent();

FAIL_FAST_IF_FAILED(presentRetiringFence->SetEventOnCompletion(

presentIdToWakeOn,

presentQueueSyncEvent.get()));

HANDLE waitHandles[] = { lostEvent.get(), presentQueueSyncEvent.get() };

DWORD waitResult = WaitForMultipleObjects(

ARRAYSIZE(waitHandles),

waitHandles,

FALSE,

INFINITE);

// Failfast if we hit an error during our wait.

FAIL_FAST_IF((waitResult - WAIT_OBJECT_0) >= ARRAYSIZE(waitHandles));

if (waitResult == WAIT_OBJECT_0)

{

// The lost event was signaled - return 'false' to the caller to indicate that

// the presentation manager was lost.

return false;

}

return true;

}

Example 14 - Canceling presents scheduled for the future

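A media application that queues presents deep into the future might decide to cancel presents it previously issued. This can happen, for example, if the application is playing a video, has issued a large number of future frames, and the user decides to pause playback. In that case, the application wants to hold on the current frame and cancel any future frames that haven't entered the queue yet. It can also happen if the media application decides to move playback to a different point in the video. In that case, the application wants to cancel all presents for the old position in the video that haven't been queued yet, and replace them with presents for the new position. After canceling the previous presents, the application can issue new future presents corresponding to the new point in the video.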

C++ example

void PresentCancelExample(

_In_ IPresentationManager* pPresentationManager,

_In_ UINT64 firstPresentIDToCancelFrom)

{

// Assume we've issued a number of presents in the future. Something happened in the app, and

// we want to cancel the issued presents that occur after a specified time or present ID. This

// may happen, for example, when the user pauses playback from inside a media application. The

// application will want to cancel all presents posted targeting beyond the pause time. The

// cancel will apply to all previously posted presents whose present IDs are at least

// 'firstPresentIDToCancelFrom'. Note that Present IDs are always unique, and never recycled,

// so even if a present is canceled, no subsequent present will ever reuse its present ID.

//

// Also note that if some presents we attempt to cancel can't be canceled because they've

// already started queueing, then no error will be returned, they simply won't be canceled as

// requested. Cancelation takes a "best effort" approach.

FAIL_FAST_IF_FAILED(pPresentationManager->CancelPresentsFrom(firstPresentIDToCancelFrom));

// In the case where the media application scrubbed to a different position in the video, it may now

// choose to issue new presents to replace the ones canceled. This is not illustrated here, but

// previous examples that demonstrate presentation show how this may be achieved.

}
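The best-effort semantics described in the comments above (cancel every present whose ID is at or after the given one and that hasn't started queueing, report no error for the rest, and never reuse present IDs) can be sketched as a small model. The types and functions below (ModelPresent, CancelPresentsFromModel, NextPresentIdModel) are hypothetical stand-ins; the real bookkeeping happens inside the presentation manager.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical states for a previously issued present.
enum class ModelStatus { Pending, Queued, Canceled };

struct ModelPresent
{
    std::uint64_t id;
    ModelStatus status;
};

// Best-effort cancel: every present whose ID is >= firstIdToCancel and that has not yet
// started queueing is canceled. Presents that are already queued are left untouched and
// no error is reported. Returns the number of presents actually canceled.
inline std::size_t CancelPresentsFromModel(std::vector<ModelPresent>& presents, std::uint64_t firstIdToCancel)
{
    std::size_t canceled = 0;
    for (auto& present : presents)
    {
        if (present.id >= firstIdToCancel && present.status == ModelStatus::Pending)
        {
            present.status = ModelStatus::Canceled;
            ++canceled;
        }
    }
    return canceled;
}

// IDs are never recycled: the next ID continues after the highest one ever issued,
// including canceled presents.
inline std::uint64_t NextPresentIdModel(const std::vector<ModelPresent>& presents)
{
    std::uint64_t highest = 0;
    for (const auto& present : presents)
    {
        if (present.id > highest)
        {
            highest = present.id;
        }
    }
    return highest + 1;
}
```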

Example 15 - Staggering buffer resizes for better performance

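This example shows how an application can stagger buffer resizes to achieve better performance than with DXGI. Recall our earlier resize example, in which a movie-streaming client wants to change the playback resolution from 720p to 1080p. In DXGI, the application would perform a resize operation on the DXGI swapchain, which atomically discards all previous buffers and reallocates all of the new 1080p buffers at once, adding them to the swapchain. This kind of atomic buffer resize is very expensive, can take a long time, and can cause glitches. The new API offers finer-grained control over individual presentation buffers. As a result, buffers can be reallocated and replaced one at a time across multiple presents, spreading the workload over time. Each individual present is affected less, and glitches are less likely. In essence, for a presentation manager with n presentation buffers, the application can, on each of n presents, remove one old presentation buffer at the old size, allocate a new presentation buffer at the new size, and present. After n presents, all buffers will be at the new size.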

C++ example

bool StaggeredResizeExample(

_In_ ID3D11Device* pD3D11Device,

_In_ IPresentationManager* pPresentationManager,

_In_ IPresentationSurface* pPresentationSurface,

_Inout_ vector<com_ptr_failfast<ID3D11Texture2D>>& textures,

_Inout_ vector<com_ptr_failfast<IPresentationBuffer>>& presentationBuffers)

{

// Track a time we'll be presenting to below. Default to the current time, then increment by

// 1/10th of a second every present.

SystemInterruptTime presentTime;

QueryInterruptTimePrecise(&presentTime.value);

// Assume textures/presentationBuffers vector contains 10 100x100 buffers, and we want to resize

// our swapchain to 200x200. Instead of reallocating 10 200x200 buffers all at once,

// like DXGI does today, we can stagger the reallocation across multiple presents. For

// each present, we can allocate one buffer at the new size, and replace one old buffer

// at the old size with the new one at the new size. After 10 presents, we will have

// reallocated all our buffers, and we will have done so in a manner that's much less

// likely to produce delays or glitches.

constexpr UINT numberOfBuffers = 10;

for (UINT bufferIndex = 0; bufferIndex < numberOfBuffers; bufferIndex++)

{

// Advance our present time 1/10th of a second in the future.

presentTime.value += 1'000'000;

// Release the old texture/presentation buffer at the presented index.

auto& replacedTexture = textures[bufferIndex];

auto& replacedPresentationBuffer = presentationBuffers[bufferIndex];

replacedTexture.reset();

replacedPresentationBuffer.reset();

// Create a new texture/presentation buffer in its place.

AddNewPresentationBuffer(

pD3D11Device,

pPresentationManager,

200, // Buffer width

200, // Buffer height

&replacedTexture,

&replacedPresentationBuffer);

// Draw red to the new texture.

DrawColorToSurface(

pD3D11Device,

replacedTexture,

1.0f, // red

0.0f, // green

0.0f); // blue

// Bind the presentation buffer to the presentation surface.

pPresentationSurface->SetBuffer(replacedPresentationBuffer.get());

// Present at the targeted time.

pPresentationManager->SetTargetTime(presentTime);

HRESULT hrPresent = pPresentationManager->Present();

if (hrPresent == PRESENTATION_ERROR_LOST)

{

// Our presentation manager has been lost. Return 'false' to the caller to indicate that

// the presentation manager should be recreated.

return false;

}

else

{

FAIL_FAST_IF_FAILED(hrPresent);

}

}

return true;

}
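The effect of the staggered approach, in which exactly one buffer is reallocated per present, can be illustrated without any graphics API. The following hypothetical sketch (BufferSize and StaggeredResizeSchedule are illustrative names) replays the loop above on plain size records and reports how many buffers have the new size after each present.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical size record standing in for a texture/presentation buffer pair.
struct BufferSize
{
    unsigned width;
    unsigned height;
};

// Starting from bufferCount buffers of oldSize, present k (0-based) replaces buffer k with a
// buffer of newSize. Returns how many buffers have the new size after each present.
inline std::vector<std::size_t> StaggeredResizeSchedule(std::size_t bufferCount, BufferSize oldSize, BufferSize newSize)
{
    assert(bufferCount > 0);
    std::vector<BufferSize> buffers(bufferCount, oldSize);
    std::vector<std::size_t> newSizedAfterPresent;
    for (std::size_t bufferIndex = 0; bufferIndex < bufferCount; ++bufferIndex)
    {
        // Release one old buffer and allocate one new buffer in its place.
        buffers[bufferIndex] = newSize;

        std::size_t newSized = 0;
        for (const BufferSize& buffer : buffers)
        {
            if (buffer.width == newSize.width && buffer.height == newSize.height)
            {
                ++newSized;
            }
        }
        newSizedAfterPresent.push_back(newSized);
    }
    return newSizedAfterPresent;
}
```

With 10 buffers resized from 100x100 to 200x200, the count grows from 1 to 10, one per present, so each present pays for a single allocation rather than all ten at once.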

Example 16 - Reading and processing present statistics

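The API reports statistics for every present that is submitted. At a high level, present statistics are a feedback mechanism that describes how the system processed or displayed a particular present. Applications can register to receive different kinds of statistics, and the statistics infrastructure in the API is itself extensible, so more kinds of statistics can be added in the future. This example shows how to read back statistics, and describes the currently defined kinds of statistics and what they convey at a high level.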
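As one illustration of acting on statistics: the PresentStatisticsKind_PresentStatus kind reports whether each present was queued, skipped, or canceled, and a large share of skipped presents suggests that the application is presenting more frames than the system can display. The sketch below uses a local enumeration (ModelPresentStatus) in place of the real PresentStatus values, and the halving policy and 50% threshold in the hypothetical AdjustPresentRate function are arbitrary assumptions, not recommendations from the API.

```cpp
#include <cstddef>
#include <vector>

// Local stand-in for the PresentStatus values (Queued, Skipped, Canceled).
enum class ModelPresentStatus { Queued, Skipped, Canceled };

// Fraction of presents, ignoring canceled ones, that the system skipped.
inline double SkippedFraction(const std::vector<ModelPresentStatus>& statuses)
{
    std::size_t considered = 0;
    std::size_t skipped = 0;
    for (ModelPresentStatus status : statuses)
    {
        if (status == ModelPresentStatus::Canceled)
        {
            continue;  // canceled presents say nothing about display throughput
        }
        ++considered;
        if (status == ModelPresentStatus::Skipped)
        {
            ++skipped;
        }
    }
    return considered == 0 ? 0.0 : static_cast<double>(skipped) / static_cast<double>(considered);
}

// Hypothetical policy: halve the present rate when more than `threshold` of presents are skipped.
inline double AdjustPresentRate(double currentRateHz, double skippedFraction, double threshold = 0.5)
{
    return skippedFraction > threshold ? currentRateHz / 2.0 : currentRateHz;
}
```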

C++ example

// This is an identifier we'll assign to our presentation surface that will be used to reference that

// presentation surface in statistics. This is to avoid referring to a presentation surface by pointer

// in a statistics structure, which has unclear refcounting and lifetime semantics.

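static constexpr UINT_PTR myPresentedContentTag = 12345;

// Forward declaration of the statistics-processing helper, which is defined after StatisticsExample.

void StatisticsExample_ProcessStatistics(

_In_ IPresentationManager* pPresentationManager);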

bool StatisticsExample(

_In_ ID3D11Device* pD3D11Device,

_In_ IPresentationManager* pPresentationManager,

_In_ IPresentationSurface* pPresentationSurface,

_In_ vector<com_ptr_failfast<ID3D11Texture2D>>& textures,

_In_ vector<com_ptr_failfast<IPresentationBuffer>>& presentationBuffers)

{

// Track a time we'll be presenting to below. Default to the current time, then increment by

// 1/10th of a second every present.

SystemInterruptTime presentTime;

QueryInterruptTimePrecise(&presentTime.value);

// Register to receive 3 types of statistics.

FAIL_FAST_IF_FAILED(pPresentationManager->EnablePresentStatisticsKind(

PresentStatisticsKind_CompositionFrame,

true));

FAIL_FAST_IF_FAILED(pPresentationManager->EnablePresentStatisticsKind(

PresentStatisticsKind_PresentStatus,

true));

FAIL_FAST_IF_FAILED(pPresentationManager->EnablePresentStatisticsKind(

PresentStatisticsKind_IndependentFlipFrame,

true));

// Stats come back referencing specific presentation surfaces. We assign 'tags' to presentation

// surfaces in the API that statistics will use to reference the presentation surface in a

// statistic.

pPresentationSurface->SetTag(myPresentedContentTag);

// Build an array of events that we can wait on.

vector<unique_event> waitEvents;

// The lost event will be the first event in our list. This is an event that signifies that

// something went wrong in the system (due to extreme conditions like memory pressure, or

// driver issues) that indicate that the presentation manager has been lost, and should no

// longer be used, and instead should be recreated.

unique_event lostEvent;

FAIL_FAST_IF_FAILED(pPresentationManager->GetLostEvent(&lostEvent));

waitEvents.emplace_back(std::move(lostEvent));

// The statistics event will be the second event in our list. This event will be signaled

// by the presentation manager when there are statistics to read back.

unique_event statisticsEvent;

FAIL_FAST_IF_FAILED(pPresentationManager->GetPresentStatisticsAvailableEvent(&statisticsEvent));

waitEvents.emplace_back(std::move(statisticsEvent));

// Add each buffer's available event to the list of events we will be waiting on.

for (UINT bufferIndex = 0; bufferIndex < presentationBuffers.size(); bufferIndex++)

{

unique_event availableEvent;

FAIL_FAST_IF_FAILED(presentationBuffers[bufferIndex]->GetAvailableEvent(&availableEvent));

waitEvents.emplace_back(std::move(availableEvent));

}

// Iterate our workflow 120 times.

constexpr UINT iterationCount = 120;

for (UINT i = 0; i < iterationCount; i++)

{

// Wait for an event to be signaled.

DWORD waitResult = WaitForMultipleObjects(

static_cast<UINT>(waitEvents.size()),

reinterpret_cast<HANDLE*>(waitEvents.data()),

FALSE,

INFINITE);

// Failfast if the wait hit an error.

FAIL_FAST_IF((waitResult - WAIT_OBJECT_0) >= waitEvents.size());

// Our lost event was the first event in the array. If this is signaled, then the caller

// should recreate the presentation manager. This is very similar to how Direct3D devices

// can be lost. Assume our caller knows to handle this return value appropriately.

if (waitResult == WAIT_OBJECT_0)

{

return false;

}

// The second event in the array is the statistics event. If this event is signaled,

// read and process our statistics.

if (waitResult == (WAIT_OBJECT_0 + 1))

{

StatisticsExample_ProcessStatistics(pPresentationManager);

}

// Otherwise, the event corresponds to a buffer available event that is signaled.

// Compute the buffer for the available event that was signaled and present a

// frame.

else

{

DWORD bufferIndex = waitResult - (WAIT_OBJECT_0 + 2);

// Draw red to the texture.

DrawColorToSurface(

pD3D11Device,

textures[bufferIndex],

1.0f, // red

0.0f, // green

0.0f); // blue

// Bind the texture to the presentation surface.

pPresentationSurface->SetBuffer(presentationBuffers[bufferIndex].get());

// Advance our present time 1/10th of a second in the future.

presentTime.value += 1'000'000;

// Present at the targeted time.

pPresentationManager->SetTargetTime(presentTime);

HRESULT hrPresent = pPresentationManager->Present();

if (hrPresent == PRESENTATION_ERROR_LOST)

{

// Our presentation manager has been lost. Return 'false' to the caller to indicate that

// the presentation manager should be recreated.

return false;

}

else

{

FAIL_FAST_IF_FAILED(hrPresent);

}

}

}

return true;

}
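The wait loop above relies on a fixed layout of the event array: the lost event first, the statistics event second, and one buffer available event per buffer after that. The following hypothetical helper (ClassifyWait and WaitOutcome are illustrative names) isolates that index mapping so that it can be checked on its own. It takes the wait result already offset by WAIT_OBJECT_0 and treats anything outside the array, such as WAIT_FAILED, as an error.

```cpp
#include <cstddef>
#include <cstdint>

// Mirrors the wait-array layout used in StatisticsExample:
// index 0 = lost event, index 1 = statistics event, index 2 + i = available event of buffer i.
enum class WaitOutcome { Lost, Statistics, BufferAvailable, Error };

struct ClassifiedWait
{
    WaitOutcome outcome;
    std::size_t bufferIndex;  // only meaningful when outcome == BufferAvailable
};

// signaledIndex is the wait result minus WAIT_OBJECT_0. Values outside [0, eventCount),
// which is where non-object results such as WAIT_FAILED end up, are reported as errors.
inline ClassifiedWait ClassifyWait(std::uint32_t signaledIndex, std::size_t eventCount)
{
    if (signaledIndex >= eventCount)
    {
        return {WaitOutcome::Error, 0};
    }
    if (signaledIndex == 0)
    {
        return {WaitOutcome::Lost, 0};
    }
    if (signaledIndex == 1)
    {
        return {WaitOutcome::Statistics, 0};
    }
    return {WaitOutcome::BufferAvailable, static_cast<std::size_t>(signaledIndex - 2u)};
}
```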

void StatisticsExample_ProcessStatistics(

_In_ IPresentationManager* pPresentationManager)

{

// Dequeue a single present statistics item. This will return the item

// and pop it off the queue of statistics.

com_ptr_failfast<IPresentStatistics> presentStatisticsItem;

FAIL_FAST_IF_FAILED(pPresentationManager->GetNextPresentStatistics(&presentStatisticsItem));

// Read back the present ID this corresponds to.

UINT64 presentId = presentStatisticsItem->GetPresentId();

UNREFERENCED_PARAMETER(presentId);

// Switch on the type of statistic this item corresponds to.

switch (presentStatisticsItem->GetKind())

{

case PresentStatisticsKind_PresentStatus:

{

// Present status statistics describe whether a given present was queued for display,

// skipped due to some future present being a better candidate to display on a given

// frame, or canceled via the API.

auto presentStatusStatistics = presentStatisticsItem.query<IPresentStatusStatistics>();

// Read back the status

PresentStatus status = presentStatusStatistics->GetPresentStatus();

UNREFERENCED_PARAMETER(status);

// Possible values for status:

// PresentStatus_Queued

// PresentStatus_Skipped

// PresentStatus_Canceled

// Depending on the status returned, your application can adjust its workflow

// accordingly. For example, if your application sees that a large percentage of its

// presents are skipped, it is presenting more frames than the system can

// display. In such a case, your application might decide to lower the rate at which

// it presents frames.

}

break;

case PresentStatisticsKind_CompositionFrame:

{

// Composition frame statistics describe how a given present was used in a DWM frame.

// It includes information such as which monitors displayed the present, whether the

// present was composed or directly scanned out via an MPO plane, and rendering

// properties such as what transforms were applied to the rendering. Composition

// frame statistics are not issued for iflip presents - only for presents issued by the

// compositor. iflip presents have their own type of statistic (described next).

auto compositionFrameStatistics =

presentStatisticsItem.query<ICompositionFramePresentStatistics>();

// Statistics should reference the presentation surface that we tagged earlier.

NT_ASSERT(compositionFrameStatistics->GetContentTag() == myPresentedContentTag);

// The composition frame ID indicates the DWM frame ID that the present was used

// in.

CompositionFrameId frameId = compositionFrameStatistics->GetCompositionFrameId();

// Get the display instance array to indicate which displays showed the present. Each

// instance of the presentation surface will have an entry in this array. For example,

// if your application adds the same presentation surface to four different visuals in the

// visual tree, then each instance in the tree will have an entry in the display instance

// array. Similarly, if the presentation surface shows up on multiple monitors, then each

// monitor instance will be accounted for in the display instance array that is

// returned.

//

// Note that the pointer returned from GetDisplayInstanceArray is valid for the

// lifetime of the ICompositionFramePresentStatistics. Your application must not attempt

// to read this pointer after the ICompositionFramePresentStatistics has been released

// to a refcount of 0.

UINT displayInstanceArrayCount;

const CompositionFrameDisplayInstance* pDisplayInstances;

compositionFrameStatistics->GetDisplayInstanceArray(

&displayInstanceArrayCount,

&pDisplayInstances);

for (UINT i = 0; i < displayInstanceArrayCount; i++)

{

const auto& displayInstance = pDisplayInstances[i];

// The following are fields that are available in a display instance.

// The LUID, VidPnSource, and unique ID of the output and its owning

// adapter. The unique ID will be bumped when a LUID/VidPnSource is

// recycled. Applications should use the unique ID to determine when

// this happens so that they don't try and correlate stats from one

// monitor with another.

displayInstance.outputAdapterLUID;

displayInstance.outputVidPnSourceId;

displayInstance.outputUniqueId;

// The instanceKind field indicates how the present was used. It

// indicates that the present was composed (rendered to DWM's backbuffer),

// scanned out (via MPO/DFlip) or composed to an intermediate buffer by DWM

// for effects.

displayInstance.instanceKind;

// The finalTransform field indicates the transform at which the present was

// shown in world space. It will include all ancestor visual transforms and

// can be used to know how it was rendered in the global visual tree.

displayInstance.finalTransform;

// The requiredCrossAdapterCopy field indicates whether or not we needed to

// copy your application's buffer to a different adapter in order to display

// it. Applications should use this to determine whether or not they should

// reallocate their buffers onto a different adapter for better performance.

displayInstance.requiredCrossAdapterCopy;

// The colorSpace field indicates the colorSpace of the output that the

// present was rendered to.

displayInstance.colorSpace;

// For example, if your application sees that the finalTransform is scaling your

// content by 2x, you might elect to pre-render that scale into your presentation

// surface, and then add a 1/2 scale. The finalTransform should then be 1x, and

// some MPO hardware is more likely to place a presentation surface with a 1x scale

// in an MPO plane, since some hardware has a maximum scale factor it can apply in

// an MPO plane. Similarly, if your application's content is being scaled

// down on screen, you may wish to simply render its content at a

// smaller scale to conserve resources, and apply an enlargement transform.

}

// Additionally, we can use the CompositionFrameId reported by the statistic

// to query timing-related information about that specific frame via the new

// composition timing API, such as when that frame showed up on screen.

// Note this is achieved using a separate API from the composition swapchain API, but

// using the composition frame ID reported in the composition swapchain API to

// properly specify which frame your application wants timing information from.

COMPOSITION_FRAME_TARGET_STATS frameTargetStats;

COMPOSITION_TARGET_STATS targetStats[4];

frameTargetStats.targetCount = ARRAYSIZE(targetStats);

frameTargetStats.targetStats = targetStats;

// Specify the frameId that we got from stats in order to pass to the call

// below and retrieve timing information about that frame.

frameTargetStats.frameId = frameId;

FAIL_FAST_IF_FAILED(DCompositionGetTargetStatistics(1, &frameTargetStats));

// If the frameTargetStats comes back with a 0 frameId, it means the frame isn't

// part of statistics. This might mean that it has expired out of

// DCompositionGetTargetStatistics history, but that call keeps a history buffer

// of roughly 5 seconds worth of frames, so if your application

// is processing statistics from the presentation manager relatively regularly,

// it generally shouldn't need to worry about DCompositionGetTargetStatistics

// history expiring. The more likely scenario when this occurs is that it's too

// early, and that this frame isn't part of statistics YET. In that case, your application

// should defer processing for this frame, and try again later. For the purposes

// of sample brevity, we don't bother trying again here. A good method to use would

// be to add this present info to a list of presents that we haven't gotten target

// statistics for yet, and try again for all presents in that list any time we get

// a new PresentStatisticsKind_CompositionFrame for a future frame.

if (frameTargetStats.frameId == frameId)

{

// The targetCount will represent the count of outputs the given frame

// applied to.

frameTargetStats.targetCount;

// The targetTime corresponds to the wall clock QPC time DWM was

// targeting for the frame.

frameTargetStats.targetTime;

for (UINT i = 0; i < frameTargetStats.targetCount; i++)

{

const auto& targetStat = frameTargetStats.targetStats[i];

// The present time corresponds to the targeted present time of the composition

// frame.

targetStat.presentTime;

// The target ID corresponds to the LUID/VidPnSourceId/Unique ID for the given

// target.

targetStat.targetId;

// The completedStats convey information about the time a compositor frame was

// completed, which marks the time any of its associated composition swapchain API

// presents entered the displayed state. In particular, your application might wish

// to use the 'time' to know if a present showed at a time it expected.

targetStat.completedStats.presentCount;

targetStat.completedStats.refreshCount;

targetStat.completedStats.time;

// DCompositionGetTargetStatistics conveys various other timing statistics as well.

}

}

}

break;

case PresentStatisticsKind_IndependentFlipFrame:

{

// Independent flip frame statistics describe a present that was shown via

// independent flip.

auto independentFlipFrameStatistics =

presentStatisticsItem.query<IIndependentFlipFramePresentStatistics>();

// Statistics should reference the presentation surface that we tagged earlier.

NT_ASSERT(independentFlipFrameStatistics->GetContentTag() == myPresentedContentTag);

// The driver-approved present duration describes the custom present duration that was

// approved by the driver and applied during the present. This is how, for example, a media

// application can tell whether it got a 24 Hz mode for its content if it requested one.

independentFlipFrameStatistics->GetPresentDuration();

// The displayed time is the time the present was confirmed to have been shown

// on screen.

independentFlipFrameStatistics->GetDisplayedTime();

// The adapter LUID/VidpnSource ID describe the output on which the present took

// place. Unlike the composition statistic above, we don't report a unique ID here

// because a monitor recycle would kick the presentation out of iflip.

independentFlipFrameStatistics->GetOutputAdapterLUID();

independentFlipFrameStatistics->GetOutputVidPnSourceId();

}

break;

}

}
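The comments above suggest deferring composition frames whose target statistics aren't available yet, and retrying them whenever a new PresentStatisticsKind_CompositionFrame statistic arrives. A minimal sketch of that retry list follows. It is hypothetical: PendingFrameStats is an illustrative name, a 64-bit integer stands in for CompositionFrameId, and the tryQuery callback stands in for a call to DCompositionGetTargetStatistics that counts as successful once the returned frameId matches the requested one.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Keeps composition frame IDs whose target statistics could not be read yet.
class PendingFrameStats
{
public:
    // tryQuery returns true once statistics for the given frame ID could be read and processed.
    explicit PendingFrameStats(std::function<bool(std::uint64_t)> tryQuery)
        : m_tryQuery(std::move(tryQuery))
    {
    }

    // Called for each new composition frame statistic: adds the frame, then retries every
    // pending frame (oldest first) and keeps only those that still have no statistics.
    void OnCompositionFrame(std::uint64_t frameId)
    {
        m_pending.push_back(frameId);
        std::vector<std::uint64_t> stillPending;
        for (std::uint64_t id : m_pending)
        {
            if (!m_tryQuery(id))
            {
                stillPending.push_back(id);
            }
        }
        m_pending.swap(stillPending);
    }

    std::size_t PendingCount() const { return m_pending.size(); }

private:
    std::function<bool(std::uint64_t)> m_tryQuery;
    std::vector<std::uint64_t> m_pending;
};
```

A production version would also need to drop frames that have aged out of the roughly 5-second DCompositionGetTargetStatistics history, which this sketch does not do.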

Example 17 - Using an abstraction layer to present with either the new composition swapchain API or DXGI

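Given the higher system and driver requirements of the new composition swapchain API, an application might want to fall back to DXGI when the new API isn't supported. Fortunately, it's fairly easy to introduce an abstraction layer that performs presentation with whichever API is available. The following example shows how to do this.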
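The core of such an abstraction layer is choosing an implementation once, based on a capability check, and then using it only through the base-class interface. The following sketch shows that selection pattern with hypothetical stand-in classes (ModelProvider, ModelDXGIProvider, ModelCompositionSwapchainProvider, and MakeProvider) rather than the real providers. In practice, the isSupported flag would come from a capability check such as the TryCreatePresentationManager function in example 1.

```cpp
#include <memory>
#include <string>

// Hypothetical stand-in for the PresentationProvider base class.
class ModelProvider
{
public:
    // Virtual destructor so that providers can be destroyed through a base-class pointer.
    virtual ~ModelProvider() = default;
    virtual std::string Name() const = 0;
};

// Stand-in for the DXGI-based provider.
class ModelDXGIProvider : public ModelProvider
{
public:
    std::string Name() const override { return "DXGI"; }
};

// Stand-in for the composition swapchain API provider.
class ModelCompositionSwapchainProvider : public ModelProvider
{
public:
    std::string Name() const override { return "CompositionSwapchain"; }
};

// Prefer the composition swapchain API when the system reports support for it,
// otherwise fall back to DXGI.
inline std::unique_ptr<ModelProvider> MakeProvider(bool isSupported)
{
    if (isSupported)
    {
        return std::make_unique<ModelCompositionSwapchainProvider>();
    }
    return std::make_unique<ModelDXGIProvider>();
}
```

The rest of the application then calls only the base-class methods, so the choice of presentation API stays confined to this one function.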

C++ example

// A base class presentation provider. We'll provide implementations using both DXGI and the new

// composition swapchain API, which the example will use based on what's supported.

class PresentationProvider

{

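public:

// Virtual destructor so that derived providers can be destroyed through a base-class pointer.

virtual ~PresentationProvider() = default;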

virtual void GetBackBuffer(

_Out_ ID3D11Texture2D** ppBackBuffer) = 0;

virtual void Present(

_In_ SystemInterruptTime presentationTime) = 0;

virtual bool ReadStatistics(

_Out_ UINT* pPresentCount,

_Out_ UINT64* pSyncQPCTime) = 0;

virtual ID3D11Device* GetD3D11DeviceNoRef()

{

return m_d3dDevice.get();

}

protected:

com_ptr_failfast<ID3D11Device> m_d3dDevice;

};

// An implementation of PresentationProvider using a DXGI swapchain to provide presentation

// functionality.

class DXGIProvider :

public PresentationProvider

{

public:

DXGIProvider(

_In_ UINT width,

_In_ UINT height,

_In_ UINT bufferCount)

{

com_ptr_failfast<IDXGIAdapter> dxgiAdapter;

com_ptr_failfast<IDXGIFactory7> dxgiFactory;

com_ptr_failfast<IDXGISwapChain1> dxgiSwapchain;

com_ptr_failfast<ID3D11DeviceContext> d3dDeviceContext;

// Direct3D device creation flags.

UINT deviceCreationFlags =

D3D11_CREATE_DEVICE_BGRA_SUPPORT |

D3D11_CREATE_DEVICE_SINGLETHREADED;

// Create the Direct3D device.

FAIL_FAST_IF_FAILED(D3D11CreateDevice(

nullptr, // No adapter

D3D_DRIVER_TYPE_HARDWARE, // Hardware device

nullptr, // No module

deviceCreationFlags, // Device creation flags

nullptr, 0, // Highest available feature level

D3D11_SDK_VERSION, // API version

&m_d3dDevice, // Resulting interface pointer

nullptr, // Actual feature level

&d3dDeviceContext)); // Device context

// Make our way from the Direct3D device to the DXGI factory.

auto dxgiDevice = m_d3dDevice.query<IDXGIDevice>();

FAIL_FAST_IF_FAILED(dxgiDevice->GetParent(IID_PPV_ARGS(&dxgiAdapter)));

FAIL_FAST_IF_FAILED(dxgiAdapter->GetParent(IID_PPV_ARGS(&dxgiFactory)));

// Create a swapchain matching the desired parameters.

DXGI_SWAP_CHAIN_DESC1 desc = {};

desc.Width = width;

desc.Height = height;

desc.Format = DXGI_FORMAT_B8G8R8A8_UNORM;

desc.BufferUsage = DXGI_USAGE_RENDER_TARGET_OUTPUT;

desc.SampleDesc.Count = 1;

desc.SampleDesc.Quality = 0;

desc.BufferCount = bufferCount;

desc.Scaling = DXGI_SCALING_STRETCH;

desc.SwapEffect = DXGI_SWAP_EFFECT_FLIP_SEQUENTIAL;

desc.AlphaMode = DXGI_ALPHA_MODE_PREMULTIPLIED;

FAIL_FAST_IF_FAILED(dxgiFactory->CreateSwapChainForComposition(

m_d3dDevice.get(),

&desc,

nullptr,

&dxgiSwapchain));

// Store the swapchain.

dxgiSwapchain.query_to(&m_swapchain);

}

void GetBackBuffer(

_Out_ ID3D11Texture2D** ppBackBuffer) override

{

// Get the backbuffer directly from the swapchain.

FAIL_FAST_IF_FAILED(m_swapchain->GetBuffer(

m_swapchain->GetCurrentBackBufferIndex(),

IID_PPV_ARGS(ppBackBuffer)));

}

void Present(

_In_ SystemInterruptTime presentationTime) override

{

// Convert the passed presentation time to a present interval. The implementation is

// not provided here, as there's a great deal of complexity around computing this

// most accurately, but it essentially boils down to taking the time from now and

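// figuring out the number of vblanks that corresponds to that time duration. Note that

// IDXGISwapChain::Present only accepts sync intervals from 0 through 4, so longer

// durations can't be expressed this way.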

UINT vblankIntervals = ComputePresentIntervalFromTime(presentationTime);

// Issue a present to the swapchain. If we wanted to allow for a time to be specified,

// code here could convert the time to a present duration, which could be passed here.

FAIL_FAST_IF_FAILED(m_swapchain->Present(vblankIntervals, 0));

}

bool ReadStatistics(

_Out_ UINT* pPresentCount,

_Out_ UINT64* pSyncQPCTime) override

{

// Zero our output parameters initially.

*pPresentCount = 0;

*pSyncQPCTime = 0;

// Grab frame statistics from the swapchain.

DXGI_FRAME_STATISTICS frameStatistics;

FAIL_FAST_IF_FAILED(m_swapchain->GetFrameStatistics(&frameStatistics));

// If the statistics have changed since our last read, then return the new information

// to the caller.

bool hasNewStats = false;

if (frameStatistics.PresentCount > m_lastPresentCount)

{

m_lastPresentCount = frameStatistics.PresentCount;

hasNewStats = true;

*pPresentCount = frameStatistics.PresentCount;

*pSyncQPCTime = frameStatistics.SyncQPCTime.QuadPart;

}

return hasNewStats;

}

private:

com_ptr_failfast<IDXGISwapChain4> m_swapchain;

UINT m_lastPresentCount = 0;

};

// An implementation of PresentationProvider using the composition swapchain API to provide

// presentation functionality.

class PresentationAPIProvider :

public PresentationProvider

{

public:

PresentationAPIProvider(

_In_ UINT width,

_In_ UINT height,

_In_ UINT bufferCount)

{

// Create the presentation manager and presentation surface using the function defined in a

// previous example.

MakePresentationManagerAndPresentationSurface(

&m_d3dDevice,

&m_presentationManager,

&m_presentationSurface,

m_presentationSurfaceHandle);

// Register for present statistics.

FAIL_FAST_IF_FAILED(m_presentationManager->EnablePresentStatisticsKind(

PresentStatisticsKind_CompositionFrame,

true));

// Get the statistics event from the presentation manager.

FAIL_FAST_IF_FAILED(m_presentationManager->GetPresentStatisticsAvailableEvent(

&m_statisticsAvailableEvent));

// Create and register the specified number of presentation buffers.

for (UINT i = 0; i < bufferCount; i++)

{

com_ptr_failfast<ID3D11Texture2D> texture;

com_ptr_failfast<IPresentationBuffer> presentationBuffer;

AddNewPresentationBuffer(

m_d3dDevice.get(),

m_presentationManager.get(),

width,

height,

&texture,

&presentationBuffer);

// Add the new presentation buffer and texture to our array.

m_textures.push_back(texture);

m_presentationBuffers.push_back(presentationBuffer);

// Store the available event for the presentation buffer.

unique_event availableEvent;

FAIL_FAST_IF_FAILED(presentationBuffer->GetAvailableEvent(&availableEvent));

m_bufferAvailableEvents.emplace_back(std::move(availableEvent));

}

}

void GetBackBuffer(

_Out_ ID3D11Texture2D** ppBackBuffer) override

{

// Wait for an available backbuffer using our buffer available events.

DWORD waitIndex = WaitForMultipleObjects(

static_cast<UINT>(m_bufferAvailableEvents.size()),

reinterpret_cast<HANDLE*>(m_bufferAvailableEvents.data()),

FALSE,

INFINITE);

// Failfast if the wait hit an error.

FAIL_FAST_IF((waitIndex - WAIT_OBJECT_0) >= m_bufferAvailableEvents.size());

UINT bufferIndex = waitIndex - WAIT_OBJECT_0;

// Set the backbuffer to be the next presentation buffer.

FAIL_FAST_IF_FAILED(m_presentationSurface->SetBuffer(m_presentationBuffers[bufferIndex].get()));

// Return the backbuffer to the caller.

m_textures[bufferIndex].query_to(ppBackBuffer);

}

    void Present(
        _In_ SystemInterruptTime presentationTime) override
    {
        // Present at the targeted time.
        m_presentationManager->SetTargetTime(presentationTime);
        HRESULT hrPresent = m_presentationManager->Present();
        if (hrPresent == PRESENTATION_ERROR_LOST)
        {
            // Our presentation manager has been lost. See previous examples regarding how to handle this.
            return;
        }
        else
        {
            FAIL_FAST_IF_FAILED(hrPresent);
        }
    }

    bool ReadStatistics(
        _Out_ UINT* pPresentCount,
        _Out_ UINT64* pSyncQPCTime) override
    {
        // Zero our out parameters initially.
        *pPresentCount = 0;
        *pSyncQPCTime = 0;
        bool hasNewStats = false;

        // Peek at the statistics available event state to see if we've got new statistics.
        while (WaitForSingleObject(m_statisticsAvailableEvent.get(), 0) == WAIT_OBJECT_0)
        {
            // Pop a statistics item to process.
            com_ptr_failfast<IPresentStatistics> statisticsItem;
            FAIL_FAST_IF_FAILED(m_presentationManager->GetNextPresentStatistics(
                &statisticsItem));

            // If this is a composition frame stat, process it.
            if (statisticsItem->GetKind() == PresentStatisticsKind_CompositionFrame)
            {
                // We've got new stats to report.
                hasNewStats = true;

                // Convert to composition frame statistic item.
                auto frameStatisticsItem = statisticsItem.query<ICompositionFramePresentStatistics>();

                // Query DirectComposition's target statistics API to determine the completed time.
                COMPOSITION_FRAME_TARGET_STATS frameTargetStats;
                COMPOSITION_TARGET_STATS targetStats[4];
                frameTargetStats.targetCount = ARRAYSIZE(targetStats);
                frameTargetStats.targetStats = targetStats;

                // Specify the frameId we got from stats in order to pass to the call
                // below and retrieve timing information about that frame.
                frameTargetStats.frameId = frameStatisticsItem->GetCompositionFrameId();
                FAIL_FAST_IF_FAILED(DCompositionGetTargetStatistics(1, &frameTargetStats));

                // Return the statistics information for the first target.
                *pPresentCount = static_cast<UINT>(frameStatisticsItem->GetPresentId());
                *pSyncQPCTime = frameTargetStats.targetStats[0].completedStats.time;

                // Note that there's a much richer variety of statistics information in the new
                // API that can be used to infer much more than is possible with DXGI frame
                // statistics.
            }
        }

        return hasNewStats;
    }

    static bool IsSupportedOnSystem()
    {
        // Direct3D device creation flags. The composition swapchain API requires that applications disable internal
        // driver threading optimizations, as these optimizations break synchronization of the API.
        // If this flag isn't present, then the API will fail the call to create the presentation factory.
        UINT deviceCreationFlags =
            D3D11_CREATE_DEVICE_BGRA_SUPPORT |
            D3D11_CREATE_DEVICE_SINGLETHREADED |
            D3D11_CREATE_DEVICE_PREVENT_INTERNAL_THREADING_OPTIMIZATIONS;

        // Create the Direct3D device.
        com_ptr_failfast<ID3D11Device> d3dDevice;
        com_ptr_failfast<ID3D11DeviceContext> d3dDeviceContext;
        FAIL_FAST_IF_FAILED(D3D11CreateDevice(
            nullptr,                   // No adapter
            D3D_DRIVER_TYPE_HARDWARE,  // Hardware device
            nullptr,                   // No module
            deviceCreationFlags,       // Device creation flags
            nullptr, 0,                // Highest available feature level
            D3D11_SDK_VERSION,         // API version
            &d3dDevice,                // Resulting interface pointer
            nullptr,                   // Actual feature level
            &d3dDeviceContext));       // Device context

        // Call the composition swapchain API export to create the presentation factory.
        com_ptr_failfast<IPresentationFactory> presentationFactory;
        FAIL_FAST_IF_FAILED(CreatePresentationFactory(
            d3dDevice.get(),
            IID_PPV_ARGS(&presentationFactory)));

        // Now determine whether the system is capable of supporting the composition swapchain API based
        // on the capability that's reported by the presentation factory.
        return presentationFactory->IsPresentationSupported();
    }

private:
    com_ptr_failfast<IPresentationManager> m_presentationManager;
    com_ptr_failfast<IPresentationSurface> m_presentationSurface;
    vector<com_ptr_failfast<ID3D11Texture2D>> m_textures;
    vector<com_ptr_failfast<IPresentationBuffer>> m_presentationBuffers;
    vector<unique_event> m_bufferAvailableEvents;
    unique_handle m_presentationSurfaceHandle;
    unique_event m_statisticsAvailableEvent;
};

void DXGIOrPresentationAPIExample()
{
    // Get the current system time. We'll base our 'PresentAt' time on this result.
    SystemInterruptTime currentTime;
    QueryInterruptTimePrecise(&currentTime.value);

    // Track a time we'll be presenting at below. Default to the current time, then increment by
    // 1/10th of a second every present.
    auto presentTime = currentTime;

    // Allocate a presentation provider using the composition swapchain API if it is supported;
    // otherwise fall back to DXGI.
    unique_ptr<PresentationProvider> presentationProvider;
    if (PresentationAPIProvider::IsSupportedOnSystem())
    {
        presentationProvider = std::make_unique<PresentationAPIProvider>(
            500,  // Buffer width
            500,  // Buffer height
            6);   // Number of buffers
    }
    else
    {
        // System doesn't support the composition swapchain API. Fall back to DXGI.
        presentationProvider = std::make_unique<DXGIProvider>(
            500,  // Buffer width
            500,  // Buffer height
            6);   // Number of buffers
    }

    // Present 50 times.
    constexpr UINT numPresents = 50;
    for (UINT i = 0; i < numPresents; i++)
    {
        // Advance our present time 1/10th of a second in the future
        // (interrupt time is measured in 100-nanosecond units).
        presentTime.value += 1'000'000;

        // Call the presentation provider to get a backbuffer to render to.
        com_ptr_failfast<ID3D11Texture2D> backBuffer;
        presentationProvider->GetBackBuffer(&backBuffer);

        // Render to the backbuffer.
        DrawColorToSurface(
            presentationProvider->GetD3D11DeviceNoRef(),
            backBuffer,
            1.0f,   // red
            0.0f,   // green
            0.0f);  // blue

        // Present the backbuffer.
        presentationProvider->Present(presentTime);

        // Process statistics. The sync time gets its own name so that it doesn't
        // shadow the SystemInterruptTime 'presentTime' declared above.
        UINT presentCount;
        UINT64 syncQPCTime;
        bool hasNewStats = presentationProvider->ReadStatistics(
            &presentCount,
            &syncQPCTime);
        if (hasNewStats)
        {
            // Process these statistics however your application wishes.
        }
    }
}
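The `ReadStatistics` method above drains every queued statistics item on each call and reports only the most recent composition-frame entry. The following portable sketch models just that draining logic, without any Windows dependency. It assumes a `std::deque` stands in for the presentation manager's statistics queue and its available event; `StatKind`, `StatItem`, `LatestStats`, and `DrainStatistics` are hypothetical names, not part of the composition swapchain API.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <optional>

// Hypothetical stand-in for the statistics kinds; only CompositionFrame is processed.
enum class StatKind { CompositionFrame, Other };

// Hypothetical stand-in for one queued statistics item.
struct StatItem
{
    StatKind kind;
    std::uint32_t presentId;
    std::uint64_t completedTime;
};

// The two values ReadStatistics reports back to its caller.
struct LatestStats
{
    std::uint32_t presentCount;
    std::uint64_t syncTime;
};

// Pops every pending item (ReadStatistics loops while the statistics-available
// event is signaled) and keeps only the newest composition-frame entry.
// Returns std::nullopt when no composition-frame item was pending.
std::optional<LatestStats> DrainStatistics(std::deque<StatItem>& pending)
{
    std::optional<LatestStats> latest;
    while (!pending.empty())
    {
        StatItem item = pending.front();
        pending.pop_front();
        if (item.kind == StatKind::CompositionFrame)
        {
            // Later items overwrite earlier ones, just as the out parameters do.
            latest = LatestStats{item.presentId, item.completedTime};
        }
    }
    // Postcondition: the queue has been fully drained.
    assert(pending.empty());
    return latest;
}
```

Draining the whole queue on every call keeps the statistics from piling up between frames, at the cost of discarding all but the newest composition-frame entry.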
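The present loop advances `presentTime.value` by `1'000'000` per frame. `QueryInterruptTimePrecise` reports interrupt time in 100-nanosecond units, so that step equals 0.1 seconds. The sketch below reproduces the arithmetic in portable C++ so the resulting target times can be checked; `kTicksPerSecond`, `MillisecondsToTicks`, and `BuildPresentSchedule` are hypothetical helper names used only for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Interrupt time is reported in 100-nanosecond units: 10,000,000 ticks per second.
constexpr std::uint64_t kTicksPerSecond = 10'000'000;

// Converts milliseconds to interrupt-time ticks (1 ms = 10,000 ticks).
constexpr std::uint64_t MillisecondsToTicks(std::uint64_t ms)
{
    return ms * (kTicksPerSecond / 1000);
}

// Returns the target time of each present when every frame is scheduled
// 'intervalTicks' after the previous one. The first target is one interval
// after 'startTicks', matching the loop in DXGIOrPresentationAPIExample.
std::vector<std::uint64_t> BuildPresentSchedule(
    std::uint64_t startTicks,
    std::uint64_t intervalTicks,
    unsigned presentCount)
{
    assert(intervalTicks > 0);
    std::vector<std::uint64_t> schedule;
    schedule.reserve(presentCount);
    std::uint64_t target = startTicks;
    for (unsigned i = 0; i < presentCount; ++i)
    {
        target += intervalTicks;
        schedule.push_back(target);
    }
    return schedule;
}
```

For example, `BuildPresentSchedule(start, MillisecondsToTicks(100), 50)` yields the same 50 target times the example passes to `Present`, the last one 5 seconds after `start`.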
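`DXGIOrPresentationAPIExample` picks its provider at run time: the composition swapchain path when `PresentationAPIProvider::IsSupportedOnSystem()` returns `true`, and DXGI otherwise. A minimal, platform-neutral sketch of that selection, assuming simplified stand-in classes. `Provider`, `CompositionProvider`, `DxgiProvider`, and `MakeProvider` are hypothetical and are not part of the API.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical stand-in for the PresentationProvider base class.
class Provider
{
public:
    virtual ~Provider() = default;
    virtual std::string Name() const = 0;
};

// Stand-in for PresentationAPIProvider (composition swapchain path).
class CompositionProvider final : public Provider
{
public:
    std::string Name() const override { return "composition"; }
};

// Stand-in for DXGIProvider (fallback path).
class DxgiProvider final : public Provider
{
public:
    std::string Name() const override { return "dxgi"; }
};

// Returns the composition swapchain provider when the capability probe
// succeeds and the DXGI provider otherwise. 'isSupported' plays the role of
// PresentationAPIProvider::IsSupportedOnSystem().
std::unique_ptr<Provider> MakeProvider(bool (*isSupported)())
{
    assert(isSupported != nullptr);
    if (isSupported())
    {
        return std::make_unique<CompositionProvider>();
    }
    return std::make_unique<DxgiProvider>();
}
```

Because the render loop only talks to the abstract base class, the rendering, presenting, and statistics code stays identical on both paths; only the construction site knows which backend is in use.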